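While pruning a PyTorch model with the NNI toolkit, I ran into the RuntimeError below. After tracking down the cause and fixing it, I am recording the solution here as a reference for anyone who hits the same error.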

RuntimeError: 0 INTERNAL ASSERT FAILED at /opt/conda/conda-bld/pytorch_1587428398394/work/torch/csrc/jit/ir/alias_analysis.cpp:318, please report a bug to PyTorch. We don't have an op for aten::to but it isn't a special case. Argument types: Tensor, None, int, Device, bool, bool, bool, int,

The environment and the code that triggers the error are as follows:

'''

python 3.7

pytorch 1.5.0

nni 2.3

'''

pruner = FPGMPruner(net_stu, config_list, dependency_aware=True, dummy_input=dummy_input)

The full traceback is:

Traceback (most recent call last):

File "/disheng/BMP-mo/train_pruner.py", line 332, in <module>

train(args)

File "/disheng/BMP-mo/train_pruner.py", line 139, in train

pruner = FPGMPruner(net_stu, config_list,dependency_aware=True, dummy_input=dummy_input)

File "/opt/conda/lib/python3.7/site-packages/nni/algorithms/compression/pytorch/pruning/one_shot_pruner.py", line 166, in __init__

dummy_input=dummy_input)

File "/opt/conda/lib/python3.7/site-packages/nni/algorithms/compression/pytorch/pruning/one_shot_pruner.py", line 40, in __init__

super().__init__(model, config_list, None, pruning_algorithm, dependency_aware, dummy_input, **algo_kwargs)

File "/opt/conda/lib/python3.7/site-packages/nni/algorithms/compression/pytorch/pruning/dependency_aware_pruner.py", line 45, in __init__

self.graph = TorchModuleGraph(model, dummy_input)

File "/opt/conda/lib/python3.7/site-packages/nni/common/graph_utils.py", line 248, in __init__

super().__init__(model, dummy_input, traced_model)

File "/opt/conda/lib/python3.7/site-packages/nni/common/graph_utils.py", line 66, in __init__

self._trace(model, dummy_input)

File "/opt/conda/lib/python3.7/site-packages/nni/common/graph_utils.py", line 74, in _trace

self.trace = torch.jit.trace(model, dummy_input)

File "/opt/conda/lib/python3.7/site-packages/torch/jit/__init__.py", line 875, in trace

check_tolerance, _force_outplace, _module_class)

File "/opt/conda/lib/python3.7/site-packages/torch/jit/__init__.py", line 1027, in trace_module

module._c._create_method_from_trace(method_name, func, example_inputs, var_lookup_fn, _force_outplace)

RuntimeError: 0 INTERNAL ASSERT FAILED at /opt/conda/conda-bld/pytorch_1587428398394/work/torch/csrc/jit/ir/alias_analysis.cpp:318, please report a bug to PyTorch. We don't have an op for aten::to but it isn't a special case. Argument types: Tensor, None, int, Device, bool, bool, bool, int,

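The traceback shows that the error is raised while FPGMPruner is being constructed: with dependency_aware=True it builds a TorchModuleGraph, which calls self.trace = torch.jit.trace(model, dummy_input). The cause is that net_stu is a DataParallel object, and this type cannot yet be passed to torch.jit.trace. This is an upstream PyTorch bug that has not been fixed in this version (1.5.0).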

Solution:

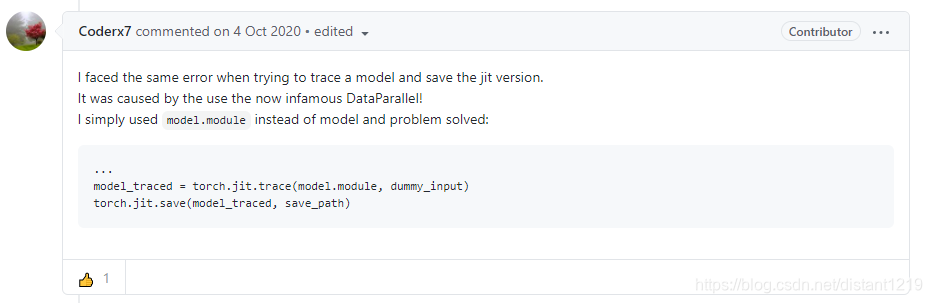

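In this case, pass net_stu.module instead of net_stu. In essence, this changes the type of the argument: the pruner receives the underlying network rather than the DataParallel wrapper around it.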

pruner = FPGMPruner(net_stu.module, config_list, dependency_aware=True, dummy_input=dummy_input)
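
If the same training script sometimes runs with DataParallel and sometimes without, the unwrapping can be put in a small helper so that the pruner always receives the plain network. The following is only a sketch under assumptions: `unwrap_parallel` is a hypothetical name, not part of NNI or PyTorch, and it recognizes the wrapper by class name so that the snippet runs without importing torch. In a real script, `isinstance(model, torch.nn.DataParallel)` is the more robust check.

```python
def unwrap_parallel(model):
    """Return the wrapped network if `model` is a (Distributed)DataParallel wrapper.

    Hypothetical helper for illustration; not an NNI or PyTorch API.
    """
    # Assumption: the wrapper is identified by class name only, to keep
    # this sketch free of a torch import.
    wrapper_names = {"DataParallel", "DistributedDataParallel"}
    # Both wrappers expose the original network as the `.module` attribute.
    while type(model).__name__ in wrapper_names and hasattr(model, "module"):
        model = model.module
    return model


# Usage in the training script (names taken from the post):
# pruner = FPGMPruner(unwrap_parallel(net_stu), config_list,
#                     dependency_aware=True, dummy_input=dummy_input)
```

A model that was never wrapped is returned unchanged, so the call is safe in both single-GPU and multi-GPU runs.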

Reference:

hasSpecialCase INTERNAL ASSERT FAILED: We don’t have an op for aten::to but it isn’t a special case.
