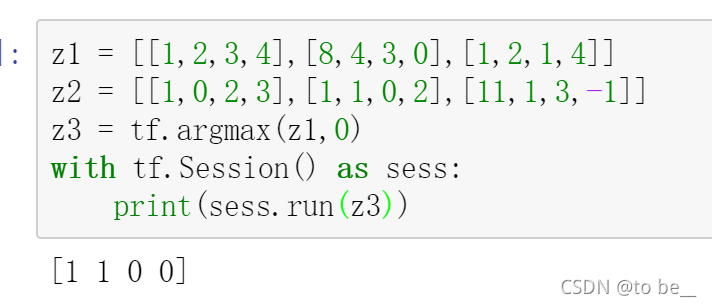

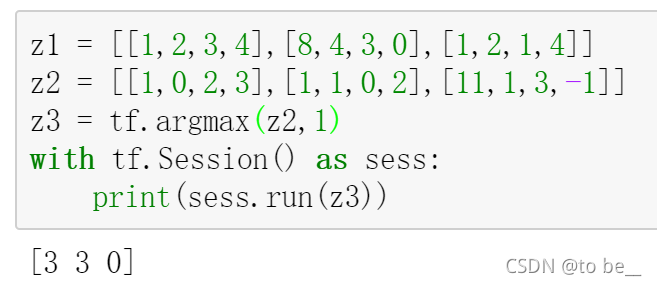

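1. tf.argmax(input, axis, name=None): returns the index of the largest value in input along the given axis.

With axis=0, the elements within each column are compared, and the result is the row index of each column's largest element (an index along the first dimension).

With axis=1, the elements within each row are compared, and the result is the column index of each row's largest element (an index along the second dimension).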
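The axis behaviour described here can be checked on a small matrix. The following is a minimal sketch, assuming TensorFlow 2.x with eager execution (the original post may have targeted TensorFlow 1.x, where a session would be needed to evaluate the tensors); the matrix `x` and the variable names are made up for illustration.

```python
import tensorflow as tf

# Illustrative 2x3 matrix (not taken from the original post).
x = tf.constant([[1, 5, 3],
                 [4, 2, 6]])

# axis=0: compare within each column, get the row index of the largest element.
col_argmax = tf.argmax(x, axis=0).numpy().tolist()

# axis=1: compare within each row, get the column index of the largest element.
row_argmax = tf.argmax(x, axis=1).numpy().tolist()

print(col_argmax)  # [1, 0, 1]
print(row_argmax)  # [1, 2]
```

The axis=0 result has one entry per column (three here), while the axis=1 result has one entry per row (two here).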

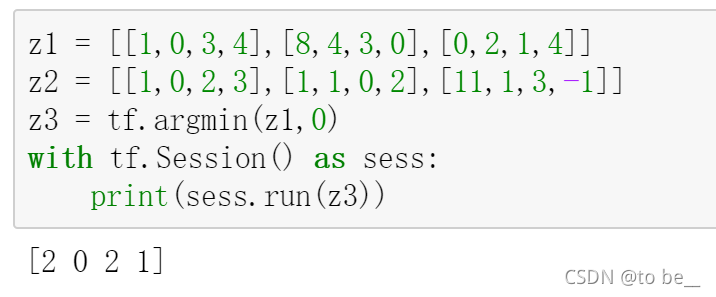

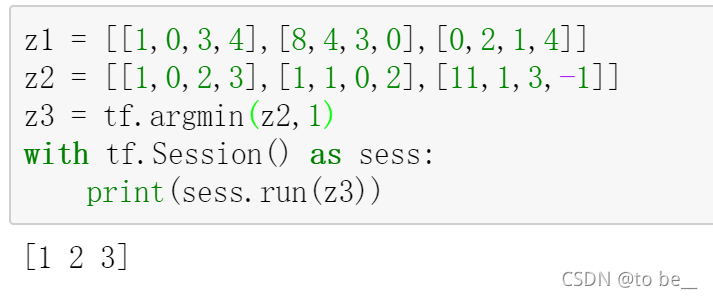

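2. tf.argmin(input, axis, name=None): returns the index of the smallest value in input along the given axis.

With axis=0, the elements within each column are compared, and the result is the row index of each column's smallest element (an index along the first dimension).

With axis=1, the elements within each row are compared, and the result is the column index of each row's smallest element (an index along the second dimension).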
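To make the meaning of axis concrete without depending on TensorFlow, the sketch below reimplements the 2-D case in plain Python. `argmin_2d` is a hypothetical helper written only for this illustration and is not part of TensorFlow; for the same input, tf.argmin(x, axis=0) and tf.argmin(x, axis=1) are expected to give the same indices.

```python
def argmin_2d(matrix, axis):
    """Hypothetical helper: mimics the axis semantics of tf.argmin on a 2-D list of lists."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    if axis == 0:
        # Compare down each column; report the row index of the smallest element.
        return [min(range(n_rows), key=lambda r: matrix[r][c]) for c in range(n_cols)]
    if axis == 1:
        # Compare across each row; report the column index of the smallest element.
        return [min(range(n_cols), key=lambda c: matrix[r][c]) for r in range(n_rows)]
    raise ValueError("axis must be 0 or 1 for a 2-D input")


# Illustrative 2x3 matrix (not taken from the original post).
x = [[1, 5, 3],
     [4, 2, 6]]

col_argmin = argmin_2d(x, axis=0)
row_argmin = argmin_2d(x, axis=1)

print(col_argmin)  # [0, 1, 0]
print(row_argmin)  # [0, 1]
```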

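This article explains how to use TensorFlow's tf.argmax and tf.argmin functions, including how the axis parameter selects the dimension along which the index of the maximum or minimum value is found.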
