Introduction: Pain Points of AI Meeting Minutes and the Huawei Cloud ModelArts Studio Solution

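In modern enterprise offices, writing up meeting minutes is a frequent and tedious task. Manual note-taking is slow, misses details, and produces inconsistent formats. AI speech-to-text tools, meanwhile, often lack semantic understanding, key-information extraction, and the ability to tell multiple speakers apart.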

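By combining Huawei Cloud Flexus and DeepSeek with the large-model capabilities of ModelArts Studio, Huawei Cloud offers enterprises a complete, automated AI meeting-minutes solution that provides: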

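✅ High-accuracy real-time speech transcription (including mixed Chinese-English speech)

✅ Speaker separation and role labeling (based on voiceprint recognition)

✅ Automatic extraction of key information (topics, conclusions, action items)

✅ Structured output (Markdown/Excel, or API integration with OA systems)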
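The speaker-labeling and structured-output items above can be illustrated with a small Python sketch. It assumes the ASR service returns timestamped text segments and that a separate voiceprint (diarization) step returns timestamped speaker turns; the data classes, the `SPK_n` labels, and the role mapping are hypothetical and are not Huawei Cloud API types. Each ASR segment is assigned the speaker whose turn overlaps it the longest, a common way to merge transcription output with diarization output.

```python
from dataclasses import dataclass


@dataclass
class AsrSegment:
    start: float  # seconds from meeting start
    end: float
    text: str


@dataclass
class SpeakerTurn:
    start: float
    end: float
    speaker: str  # hypothetical diarization label, e.g. "SPK_1"


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(segments, turns, unknown="UNKNOWN"):
    """Label each ASR segment with the speaker turn it overlaps most."""
    labeled = []
    for seg in segments:
        best, best_len = unknown, 0.0
        for turn in turns:
            ov = overlap(seg.start, seg.end, turn.start, turn.end)
            if ov > best_len:
                best, best_len = turn.speaker, ov
        labeled.append((best, seg))
    return labeled


def to_markdown(labeled, roles=None) -> str:
    """Render the speaker-attributed transcript as a Markdown list."""
    roles = roles or {}
    lines = ["## Transcript", ""]
    for speaker, seg in labeled:
        name = roles.get(speaker, speaker)  # map diarization label to a role name
        lines.append(f"- [{seg.start:.1f}s] **{name}**: {seg.text}")
    return "\n".join(lines)


segments = [
    AsrSegment(0.0, 4.0, "Let's review the Q3 budget."),
    AsrSegment(4.5, 9.0, "Marketing is 10% over plan."),
]
turns = [SpeakerTurn(0.0, 4.2, "SPK_1"), SpeakerTurn(4.2, 9.5, "SPK_2")]
labeled = assign_speakers(segments, turns)
print(to_markdown(labeled, roles={"SPK_1": "Chair", "SPK_2": "Finance"}))
```

Segments that overlap no speaker turn are labeled `UNKNOWN` rather than guessed, so gaps in diarization stay visible in the minutes.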

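This article walks through large-model training and inference deployment on Huawei Cloud ModelArts Studio, together with code for the meeting-minutes scenario, to show how to quickly build an enterprise-grade AI meeting assistant.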

1. Solution Architecture: Flexus + ModelArts Studio + DeepSeek

1.1 Technology Stack

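| Module | Implementation | Role |
|---|---|---|
| Audio capture | Huawei Cloud Real-Time Communication (RTC) | Multi-device meeting recording, with voiceprint-based speaker separation |
| Speech transcription | Huawei Cloud Speech Recognition (ASR) + DeepSeek | High-accuracy transcription, optimized for industry terminology |
| NLP processing | Huawei Cloud ModelArts Studio (LLM fine-tuning) | Summary generation, action-item extraction, sentiment analysis |
| Business integration | API Gateway + WeCom/DingTalk webhook | Automatically pushes minutes to the OA system |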
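The NLP-processing and business-integration rows of the table can be sketched in Python. This assumes the DeepSeek model deployed on ModelArts Studio is reachable through an OpenAI-compatible chat-completions endpoint (check your deployment's API documentation); `MODEL_NAME`, the prompt, and the JSON schema for topics, conclusions, and action items are illustrative assumptions. The push step uses the WeCom group-robot markdown message shape. The sketch only builds request and message bodies, so it needs no credentials or network access; sending them (for example with `requests.post(url, json=payload)`) is left to the caller.

```python
import json

MODEL_NAME = "deepseek-v3"  # placeholder: use the name shown for your MaaS deployment

EXTRACTION_PROMPT = (
    "You are a meeting assistant. From the transcript below, return ONLY a JSON object "
    'with keys "topics" (list of strings), "conclusions" (list of strings) and '
    '"action_items" (list of objects with "owner", "task", "due").\n\nTranscript:\n'
)


def build_chat_request(transcript: str) -> dict:
    """Body for an OpenAI-style /chat/completions call (assumed endpoint format)."""
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": EXTRACTION_PROMPT + transcript}],
        "temperature": 0.2,  # low temperature for more consistent extraction
    }


def parse_minutes(reply: str) -> dict:
    """Pull the outermost JSON object out of the reply, ignoring surrounding text."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end == -1:
        raise ValueError("no JSON object in model reply")
    data = json.loads(reply[start:end + 1])
    for key in ("topics", "conclusions", "action_items"):
        data.setdefault(key, [])  # tolerate missing sections
    return data


def render_markdown(minutes: dict, title: str) -> str:
    """Turn the extracted fields into Markdown minutes with an action-item checklist."""
    lines = [f"# {title}", "", "## Topics"]
    lines += [f"- {t}" for t in minutes["topics"]]
    lines += ["", "## Conclusions"]
    lines += [f"- {c}" for c in minutes["conclusions"]]
    lines += ["", "## Action items"]
    for item in minutes["action_items"]:
        owner = item.get("owner", "?")
        lines.append(f"- [ ] {owner}: {item.get('task', '')} (due {item.get('due', 'n/a')})")
    return "\n".join(lines)


def build_wecom_payload(markdown: str) -> dict:
    """WeCom group-robot markdown message, POSTed as JSON to the robot's webhook URL."""
    return {"msgtype": "markdown", "markdown": {"content": markdown}}


reply = (
    'Here is the result:\n{"topics": ["Q3 budget"], '
    '"conclusions": ["Marketing spend capped"], '
    '"action_items": [{"owner": "Finance", "task": "Revise forecast", "due": "Friday"}]}'
)
minutes = parse_minutes(reply)
payload = build_wecom_payload(render_markdown(minutes, "Weekly sync"))
print(json.dumps(payload, ensure_ascii=False, indent=2))
```

Asking the model for JSON and rendering Markdown in code, rather than asking for Markdown directly, keeps the extracted action items machine-readable for the OA integration.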

1.2 Core Advantages

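Flexus elastic compute: scales GPU resources dynamically to handle many concurrent meetings

DeepSeek model optimization: fine-tuned for vertical domains such as finance and law to improve recognition of specialized terminology

End-to-end low-code: ModelArts Studio offers visual, UI-driven training, so no deep algorithms background is required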

2. Preparation: Activating Huawei Cloud ModelArts Studio

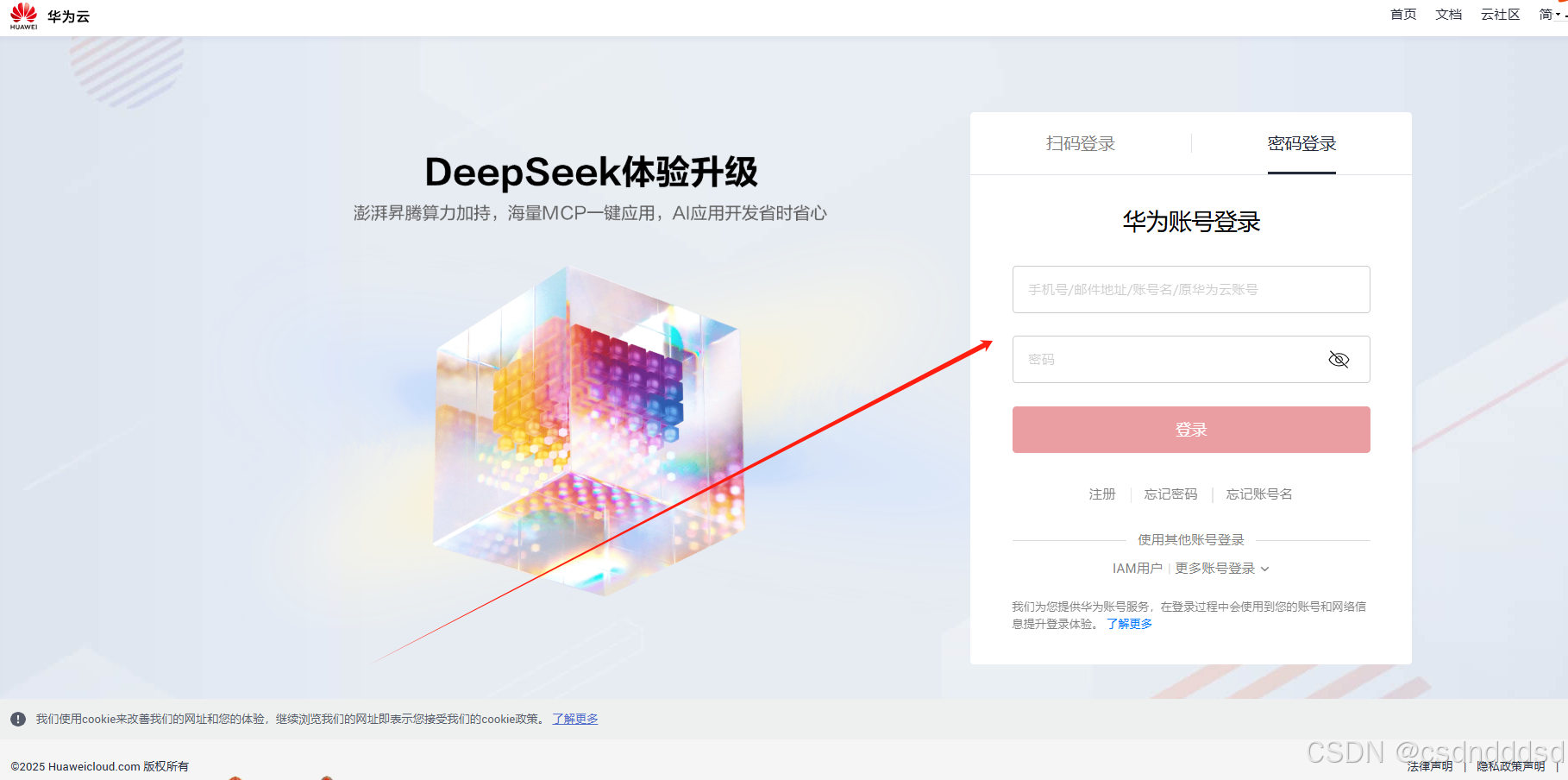

2.1 First, register a Huawei Cloud account

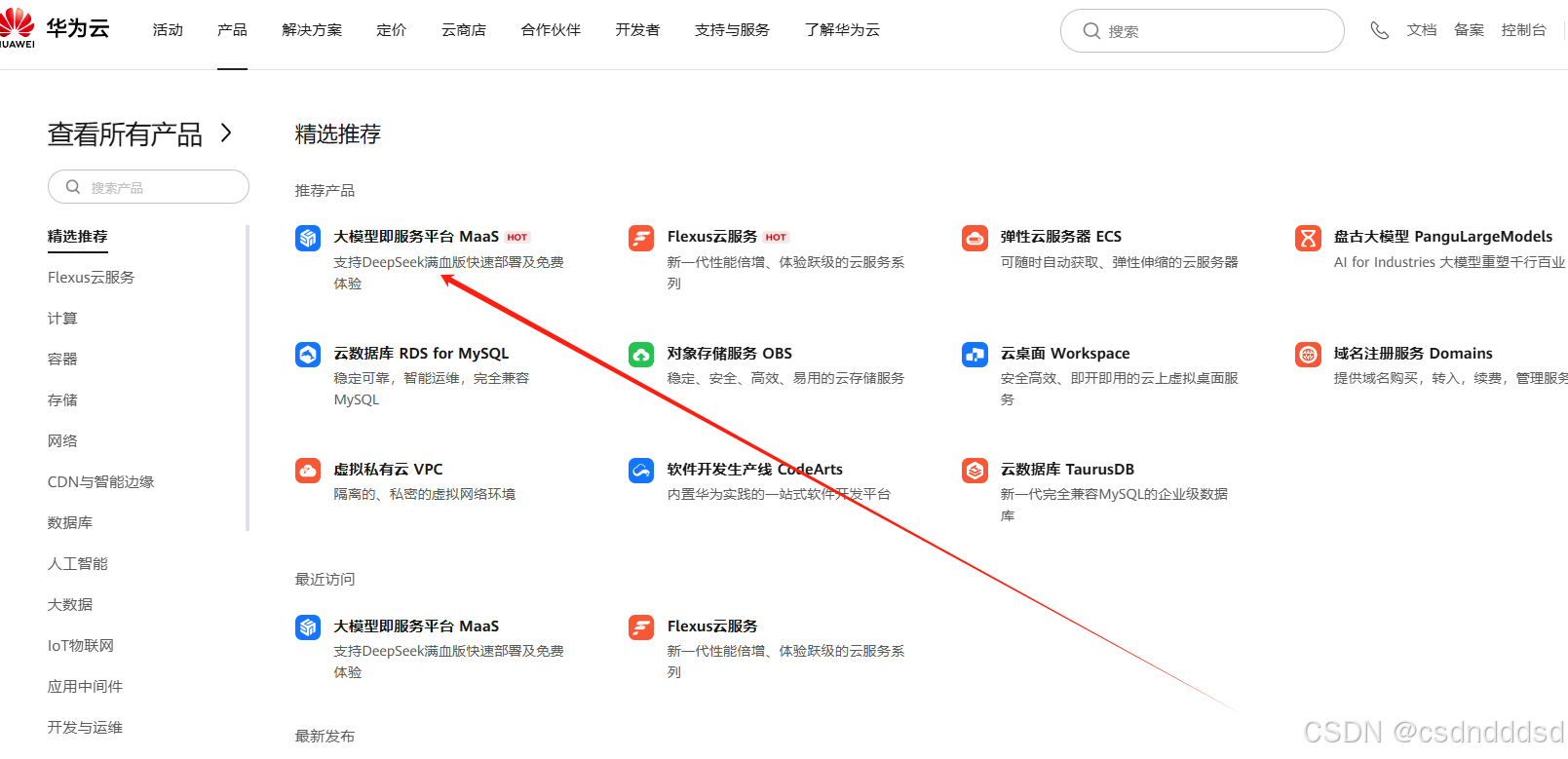

2.2 Under Products, find the Model-as-a-Service Platform (MaaS) menu entry and click it
