1. 基础:生日悖论分析。如果一个房间有 23 人或以上,那么至少有两 个人的生日相同的概率大于 50%。编写程序,输出在不同随机样本数 量下,23 个人中至少两个人生日相同的概率。

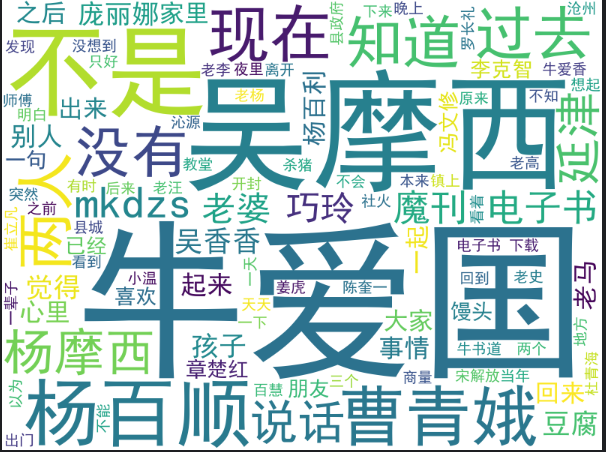

2. 进阶:统计《一句顶一万句》文本中前 10 高频词,生成词云。

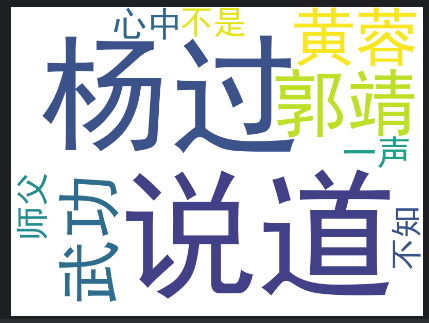

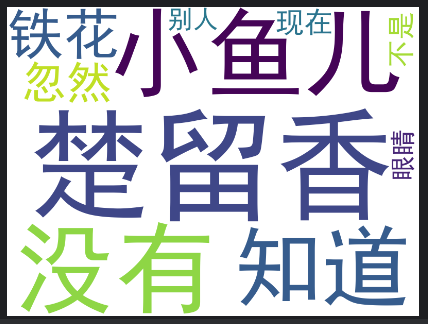

3. 拓展:金庸、古龙等武侠小说写作风格分析。输出不少于 3 个金庸(古 龙)作品的最常用 10 个词语,找到其中的相关性,总结其风格。

代码:

import random

from typing import List

def birthday_paradox(num_people: int = 23, simulations: int = 10000) -> float:

"""

计算至少两人生日相同的概率

:param num_people: 房间人数(默认23)

:param simulations: 模拟次数(默认10000次)

:return: 概率值

"""

if num_people < 2 or simulations < 1:

raise ValueError("人数需≥2,模拟次数需≥1")

matches = 0

for _ in range(simulations):

birthdays = [random.randint(1, 365) for _ in range(num_people)]

if len(birthdays) != len(set(birthdays)):

matches += 1

return matches / simulations

# 测试不同样本量下的概率

sample_sizes = [1000, 5000, 10000]

for size in sample_sizes:

prob = birthday_paradox(23, size)

print(f"模拟{size}次,概率:{prob:.2%}")

import jieba

from collections import Counter

from wordcloud import WordCloud

import matplotlib.pyplot as plt

import os

def generate_wordcloud_and_statistics(text_path, stopwords_path, output_path="wordcloud.png"):

# 检查文件是否存在

if not os.path.exists(text_path):

print(f"文件不存在:{text_path}")

return

if not os.path.exists(stopwords_path):

print(f"文件不存在:{stopwords_path}")

return

# 读取文本

with open(text_path, 'r', encoding='utf-8') as file:

text = file.read()

# 读取停用词

with open(stopwords_path, 'r', encoding='utf-8') as file:

stopwords = set(file.read().split())

# 分词并过滤停用词

words = jieba.lcut(text)

filtered_words = [word for word in words if word not in stopwords and len(word) > 1]

# 统计词频

word_counts = Counter(filtered_words)

top_10_words = word_counts.most_common(10)

print("前10高频词:")

for word, count in top_10_words:

print(f"{word}: {count}")

# 生成词云

wc = WordCloud(

font_path="simhei.ttf", # 确保使用中文字体

background_color="white",

width=800,

height=600,

max_words=100

).generate(" ".join(filtered_words))

# 显示词云

plt.figure(figsize=(10, 8))

plt.imshow(wc, interpolation='bilinear')

plt.axis('off')

plt.title('《一句顶一万句》高频词词云')

plt.show()

# 保存词云

wc.to_file(output_path)

print(f"词云已保存至:{output_path}")

if __name__ == "__main__":

# 获取当前脚本所在目录

current_dir = os.path.dirname(os.path.abspath(__file__))

text_path = os.path.join(current_dir, "一句顶一万句.txt")

stopwords_path = os.path.join(current_dir, "stopwords.txt")

output_path = os.path.join(current_dir, "wordcloud.png")

generate_wordcloud_and_statistics(text_path, stopwords_path, output_path)

import jieba

from collections import Counter

import os

from wordcloud import WordCloud

import matplotlib.pyplot as plt

def analyze_author_style(books, stopwords_path):

"""

分析作者作品的写作风格

:param books: 书籍文件路径列表

:param stopwords_path: 停用词文件路径

:return: 前10高频词列表

"""

all_words = []

stopwords = set()

# 读取停用词

try:

with open(stopwords_path, 'r', encoding='utf-8') as file:

stopwords = set(file.read().split())

except Exception as e:

print(f"读取停用词文件时出错:{e}")

return []

# 处理每本书

for book in books:

try:

with open(book, 'r', encoding='utf-8') as f:

text = f.read()

words = jieba.lcut(text)

filtered_words = [word for word in words if word not in stopwords and len(word) > 1]

all_words.extend(filtered_words)

except Exception as e:

print(f"处理文件 {book} 时出错:{e}")

continue

# 统计词频

if not all_words:

return []

word_counts = Counter(all_words)

top_10_words = word_counts.most_common(10)

return top_10_words

def summarize_style(top_words, author):

"""

根据高频词总结作者风格

:param top_words: 高频词列表

:param author: 作者名

"""

print(f"\n{author}作品前10高频词:")

for word, count in top_words:

print(f"{word}: {count}")

# 根据高频词总结风格

print(f"\n{author}作品风格总结:")

if author == "金庸":

print("金庸的作品中常见武林门派、武功秘籍、江湖恩怨等元素,注重武学体系的构建和侠义精神的宣扬。")

print("语言华丽流畅,情节宏大多彩,人物形象丰满立体,常以宏大的叙事展现江湖恩怨和家国情怀。")

elif author == "古龙":

print("古龙作品以情节曲折、对白机智、人物形象鲜明著称,语言风格独特,富有诗意和哲理。")

print("侧重个体感受与心理描写,叙事节奏明快,常通过简洁而富有个性的对话来推动情节发展。")

# 添加词云生成

generate_wordcloud(top_words, author)

def generate_wordcloud(top_words, author):

"""

根据高频词生成词云

:param top_words: 高频词列表

:param author: 作者名

"""

# 提取词和频次

words = [word for word, count in top_words]

counts = [count for word, count in top_words]

# 创建一个字典来存储词频信息

word_freq = {word: count for word, count in zip(words, counts)}

# 生成词云

wc = WordCloud(

font_path="simhei.ttf", # 确保使用中文字体

background_color="white",

width=800,

height=600,

max_words=100

).generate_from_frequencies(word_freq)

# 显示词云

plt.figure(figsize=(10, 8))

plt.imshow(wc, interpolation='bilinear')

plt.axis('off')

plt.title(f'{author}作品词云')

plt.show()

# 保存词云

current_dir = os.path.dirname(os.path.abspath(__file__))

output_path = os.path.join(current_dir, f"{author}_wordcloud.png")

wc.to_file(output_path)

print(f"{author}词云已保存至:{output_path}")

def main():

# 获取当前脚本所在目录

current_dir = os.path.dirname(os.path.abspath(__file__))

# 停用词文件路径

stopwords_path = os.path.join(current_dir, "stopwords.txt")

# 金庸作品文件路径

jin_yong_books = [

os.path.join(current_dir, "天龙八部.txt"),

os.path.join(current_dir, "射雕英雄传.txt"),

os.path.join(current_dir, "神雕侠侣.txt")

]

# 古龙作品文件路径

gu_long_books = [

os.path.join(current_dir, "楚留香传奇.txt"),

os.path.join(current_dir, "绝代双骄.txt"),

os.path.join(current_dir, "陆小凤传奇.txt")

]

# 分析金庸作品

jin_yong_top_words = analyze_author_style(jin_yong_books, stopwords_path)

if jin_yong_top_words:

summarize_style(jin_yong_top_words, "金庸")

# 分析古龙作品

gu_long_top_words = analyze_author_style(gu_long_books, stopwords_path)

if gu_long_top_words:

summarize_style(gu_long_top_words, "古龙")

if __name__ == "__main__":

import os

main()

运行结果:

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?