Introduction

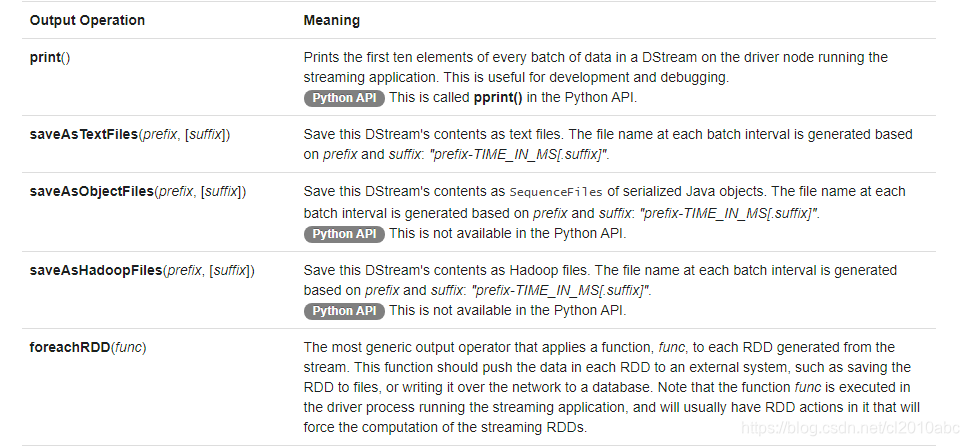

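Like Spark SQL, Spark Streaming can write a data stream to external systems such as file systems or databases. Its output operations are print(), saveAsTextFiles(), saveAsObjectFiles(), saveAsHadoopFiles() and foreachRDD().

foreachRDD(func) applies func to every RDD generated by the stream, so the output logic is entirely user-defined. This flexibility makes it the most widely used output operation in practice. Note that func itself runs on the driver, while actions called on the RDD inside it (such as foreach) run on the executors.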

Example

This example reads data from a socket port and writes it to a MySQL database.

Each line that Spark Streaming reads has the format:

name,age

For example: zhangsan,30
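The map function in the example below assumes every line matches this format. A line such as `zhangsan 30` or `zhangsan,abc` makes the array access or `Integer.parseInt` throw, which fails the task. Here is a minimal sketch of a more defensive parser, assuming one record per line and that malformed lines should simply be skipped. `PersonLineParser` and `ParsedPerson` are hypothetical names, not part of the original code.

```java
import java.util.Optional;

// Hypothetical helper: parses one "name,age" line.
// Returns Optional.empty() for malformed input instead of throwing.
final class PersonLineParser {

    // Simple immutable holder for a parsed record (illustrative only)
    static final class ParsedPerson {
        final String name;
        final int age;

        ParsedPerson(String name, int age) {
            this.name = name;
            this.age = age;
        }
    }

    static Optional<ParsedPerson> parse(String line) {
        if (line == null) {
            return Optional.empty();
        }
        // limit -1 keeps trailing empty fields, so "zhangsan,30," yields 3 parts and is rejected
        String[] parts = line.split(",", -1);
        if (parts.length != 2) {
            return Optional.empty();
        }
        String name = parts[0].trim();
        if (name.isEmpty()) {
            return Optional.empty();
        }
        try {
            int age = Integer.parseInt(parts[1].trim());
            return Optional.of(new ParsedPerson(name, age));
        } catch (NumberFormatException e) {
            return Optional.empty(); // age is not an integer
        }
    }
}
```

In the streaming job, `parse` could be called inside a `flatMap` that emits zero or one record per line instead of a `map`, so bad input is dropped rather than failing the batch.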

1. Create the table

Create the person table in the test database.

```sql
CREATE TABLE `person` (
  `name` varchar(255) DEFAULT NULL,
  `age` int(11) DEFAULT NULL
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
```

2. Write the code

JDBCUtils.java

```java
import java.sql.*;

public class JDBCUtils {

    private static final String connectionURL = "jdbc:mysql://192.168.61.137:3306/test?useUnicode=true&characterEncoding=UTF8&useSSL=false";
    private static final String username = "root";
    private static final String password = "123456@Abc";

    // Open a connection to the database (returns null if it fails)
    public static Connection getConnection() {
        try {
            // Register the MySQL driver (Connector/J 5.1.x class name)
            Class.forName("com.mysql.jdbc.Driver");
            return DriverManager.getConnection(connectionURL, username, password);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

    // Close the database resources; any argument may be null
    public static void close(ResultSet rs, Statement stmt, Connection con) throws SQLException {
        if (rs != null)
            rs.close();
        if (stmt != null)
            stmt.close();
        if (con != null)
            con.close();
    }
}
```
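One weakness of `close()` above: if `rs.close()` throws, the statement and connection are never closed. The following is a hedged sketch of a variant, `ResourceCloser.closeAll` (a hypothetical name), that attempts every close, skips nulls, and rethrows the first failure with later failures attached as suppressed exceptions. It relies only on `ResultSet`, `Statement` and `Connection` implementing `AutoCloseable`, which holds since Java 7.

```java
// Hypothetical alternative to JDBCUtils.close(): tries to close every
// resource even if an earlier close() throws.
final class ResourceCloser {

    // Closes resources in the order given (pass rs, stmt, con to mirror
    // JDBCUtils.close). Null entries are skipped. After all closes have been
    // attempted, the first exception is rethrown with later ones as suppressed.
    static void closeAll(AutoCloseable... resources) throws Exception {
        Exception first = null;
        for (AutoCloseable r : resources) {
            if (r == null) {
                continue;
            }
            try {
                r.close();
            } catch (Exception e) {
                if (first == null) {
                    first = e;
                } else {
                    first.addSuppressed(e);
                }
            }
        }
        if (first != null) {
            throw first;
        }
    }
}
```

Calling `ResourceCloser.closeAll(rs, stmt, con)` keeps the same order as `JDBCUtils.close`. Where the resources are created in a single method, Java's try-with-resources statement gives the same guarantee without a helper.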

Person.java

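```java
import java.io.Serializable;

// Spark may need to serialize records (e.g. when caching or shuffling),
// so Person implements Serializable
public class Person implements Serializable {

    private String name;
    private Integer age;

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public Integer getAge() {
        return age;
    }

    public void setAge(Integer age) {
        this.age = age;
    }
}
```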

StreamingOutput.java

```java
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.function.Function;
import org.apache.spark.api.java.function.VoidFunction;
import org.apache.spark.streaming.Durations;
import org.apache.spark.streaming.api.java.JavaDStream;
import org.apache.spark.streaming.api.java.JavaReceiverInputDStream;
import org.apache.spark.streaming.api.java.JavaStreamingContext;

import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

public class StreamingOutput {

    public static void main(String[] args) throws InterruptedException {
        SparkConf conf = new SparkConf().setMaster("local[*]").setAppName("streamingtomysql");
        // Process the stream in 5-second batches
        JavaStreamingContext jssc = new JavaStreamingContext(conf, Durations.seconds(5));

        // Read text lines from the socket served by netcat
        JavaReceiverInputDStream<String> lines = jssc.socketTextStream("192.168.61.137", 9999);

        // Parse each "name,age" line into a Person
        JavaDStream<Person> result = lines.map(new Function<String, Person>() {
            @Override
            public Person call(String v1) throws Exception {
                String[] strs = v1.split(",");   // fields are comma-separated
                Person person = new Person();
                person.setName(strs[0].trim());
                person.setAge(Integer.parseInt(strs[1].trim()));
                return person;
            }
        });

        // Write every RDD of the stream to MySQL
        result.foreachRDD(new VoidFunction<JavaRDD<Person>>() {
            @Override
            public void call(JavaRDD<Person> personJavaRDD) throws Exception {
                // foreach runs on the executors, once per record
                personJavaRDD.foreach(new VoidFunction<Person>() {
                    @Override
                    public void call(Person person) throws Exception {
                        Connection connection = JDBCUtils.getConnection();
                        PreparedStatement ps = null;
                        String sql = "INSERT INTO person (name, age) VALUES (?, ?)";
                        try {
                            ps = connection.prepareStatement(sql);
                            ps.setString(1, person.getName());
                            ps.setInt(2, person.getAge());
                            ps.executeUpdate();
                        } catch (SQLException e) {
                            e.printStackTrace();
                        } finally {
                            // Release the statement and connection; otherwise each record leaks a connection
                            JDBCUtils.close(null, ps, connection);
                        }
                    }
                });
            }
        });

        jssc.start();              // start receiving and processing data
        jssc.awaitTermination();   // block until the job is stopped
    }
}
```
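The foreach version opens a new MySQL connection for every record, which is expensive. The Spark Streaming programming guide recommends calling `foreachPartition` inside `foreachRDD` so that one connection serves a whole partition. The sketch below assumes that pattern and shows only the JDBC part: a hypothetical `PersonBatchWriter.writeAll` that reuses one `PreparedStatement` and sends rows with `addBatch`/`executeBatch`. The Spark wiring is left as a comment because it needs the Spark dependency; the batch size of 500 there is an arbitrary example value.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.Iterator;
import java.util.function.Function;
import java.util.function.ToIntFunction;

// Hypothetical helper (not part of the original example): writes one partition
// of records to the person table over a single connection using JDBC batches.
final class PersonBatchWriter {

    static final String SQL = "INSERT INTO person (name, age) VALUES (?, ?)";

    // Adds every row from the iterator to a batch, sends the batch each time
    // batchSize rows have accumulated, then sends any remainder.
    // Returns the number of rows written.
    static <T> int writeAll(Connection conn, Iterator<T> rows,
                            Function<? super T, String> nameOf,
                            ToIntFunction<? super T> ageOf,
                            int batchSize) throws SQLException {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        int count = 0;
        // One PreparedStatement for the whole partition, closed automatically
        try (PreparedStatement ps = conn.prepareStatement(SQL)) {
            while (rows.hasNext()) {
                T row = rows.next();
                ps.setString(1, nameOf.apply(row));
                ps.setInt(2, ageOf.applyAsInt(row));
                ps.addBatch();
                count++;
                if (count % batchSize == 0) {
                    ps.executeBatch(); // flush a full batch
                }
            }
            if (count % batchSize != 0) {
                ps.executeBatch(); // flush the remaining rows
            }
        }
        return count;
    }

    // Possible wiring in StreamingOutput (Java 8 lambdas; needs the Spark
    // dependency, so it is shown as a comment only):
    //
    // result.foreachRDD(rdd -> rdd.foreachPartition(it -> {
    //     try (Connection conn = JDBCUtils.getConnection()) {
    //         PersonBatchWriter.writeAll(conn, it, Person::getName, Person::getAge, 500);
    //     }
    // }));
}
```

With MySQL Connector/J, batched inserts are typically still sent as individual statements unless the connection URL sets `rewriteBatchedStatements=true`; this is worth verifying against the driver version in use.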

Maven dependencies

```xml
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming_2.11</artifactId>
    <version>2.3.0</version>
</dependency>

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.38</version>
</dependency>
```

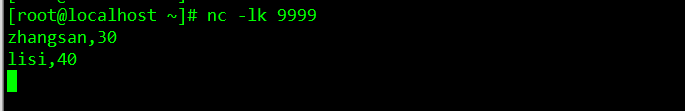

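3. Run and test

Start a netcat listener on port 9999 of 192.168.61.137 (for example with `nc -lk 9999`), run the program, and type lines in the name,age format into netcat.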

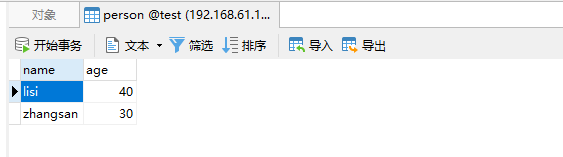

Finally, check the result in the person table in MySQL (for example with `SELECT * FROM person;`).
