Background:

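1. Every audio and video frame carries its own pts and dts. The pts is the presentation (playback) timestamp and the dts is the decoding timestamp. Without B-frames, pts and dts are the same. All timestamps discussed below refer to pts.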

2. There are three ways to synchronize audio and video: sync audio to video, sync video to audio, or sync both to the system clock.
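The following minimal C++ sketch makes the pts/dts distinction concrete. It uses a hypothetical `Frame` struct, which is not a NuPlayer type, to model a small group of pictures stored in decode (dts) order. Sorting the frames by pts recovers the display order. The two orders differ only because of the B-frames.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical frame record: picture type plus decode/presentation timestamps (microseconds).
struct Frame {
    char type;      // 'I', 'P' or 'B'
    int64_t dtsUs;  // decoding timestamp: the order the decoder consumes frames
    int64_t ptsUs;  // presentation timestamp: the order frames are shown
};

// Returns the picture types in presentation order, i.e. sorted by pts.
std::string displayOrder(std::vector<Frame> frames) {
    std::sort(frames.begin(), frames.end(),
              [](const Frame &a, const Frame &b) { return a.ptsUs < b.ptsUs; });
    std::string order;
    for (const Frame &f : frames) order += f.type;
    return order;
}

// True if every frame has pts == dts, as is typical for streams without B-frames.
bool ptsEqualsDts(const std::vector<Frame> &frames) {
    return std::all_of(frames.begin(), frames.end(),
                       [](const Frame &f) { return f.ptsUs == f.dtsUs; });
}
```

Consider a decode order of I, P, B, B where the P-frame's pts falls after both B-frames. For that input, `displayOrder` returns `"IBBP"`.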

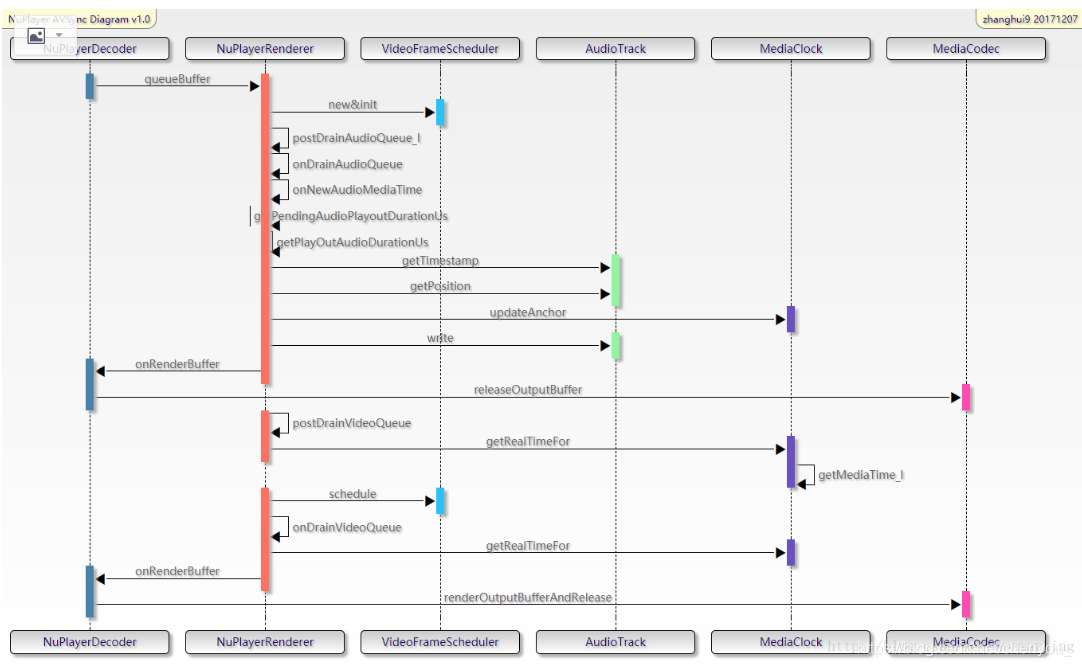

A/V sync flow in NuPlayer:

Call sequence:

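NuPlayer::Decoder::handleAnOutputBuffer // called every time the decoder produces new output data

NuPlayer::Renderer::queueBuffer() // posts kWhatQueueBuffer

NuPlayer::Renderer::onQueueBuffer // handles kWhatQueueBuffer and pushes the incoming decoded buffer onto the audio or video list

dropBufferIfStale // if the buffer is stale (already handled, e.g. left over from before a flush), drop it directly

postDrainAudioQueue_l // posts kWhatDrainAudioQueue

NuPlayer::Renderer::onMessageReceived // handles kWhatDrainAudioQueue

onDrainAudioQueue // loops until the list is consumed, writing the data into the AudioSink and obtaining mediaTimeUs

postDrainAudioQueue_l // called again if the list has not been fully consumed

postDrainVideoQueue // posts kWhatDrainVideoQueue by adding a timer on mMediaClock keyed to the frame's media time

onDrainVideoQueue // handles kWhatDrainVideoQueue and performs the timestamp alignment explained below; getMediaTime_l computes nowMediaUs and getRealTimeFor computes realTimeUs

syncQueuesDone_l // continues draining any data that has not been played yet

// Next, the renderer takes the first buffer of the audio list and the first buffer of the video list as the next data to play, then compares their timestamps. If the audio timestamp is more than 0.1 s earlier than the video timestamp, the renderer drops some audio frames. Otherwise it calls syncQueuesDone_l to drain the lists.

onRenderBuffer(msg); // runs when the decoder receives the reply the renderer sent via entry->mNotifyConsumed->post() and isStaleReply(msg) is false

renderOutputBufferAndRelease // renders, then releases the buffer and posts kWhatReleaseOutputBuffer

onReleaseOutputBuffer // handles kWhatReleaseOutputBuffer and decides between hardware and software rendering

renderOutputBuffer // posts the kWhatOutputBufferDrained message stored in mOutputBufferDrained

ACodec::BaseState::onOutputBufferDrained // handles the kWhatOutputBufferDrained message

ACodec::BufferInfo *ACodec::dequeueBufferFromNativeWindow()

releaseOutputBuffer // releases the buffer directly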
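The 0.1 s rule for the heads of the audio and video lists can be sketched as follows. This is a simplified illustration that reduces each list to its pts values in microseconds. `dropLeadingAudio` and `kMaxAudioLeadUs` are hypothetical names. The sketch drops several buffers in one call only for brevity; this makes no claim about how the renderer's own loop is structured.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

// Maximum amount by which the first audio buffer may precede the first video buffer.
constexpr int64_t kMaxAudioLeadUs = 100000;  // 0.1 s

// Drops audio buffers from the front while the first audio pts is more than
// 0.1 s earlier than the first video pts. Returns how many were dropped.
size_t dropLeadingAudio(std::deque<int64_t> &audioPtsUs,
                        const std::deque<int64_t> &videoPtsUs) {
    size_t dropped = 0;
    if (videoPtsUs.empty()) return dropped;  // nothing to compare against yet
    const int64_t firstVideoUs = videoPtsUs.front();
    while (!audioPtsUs.empty() && firstVideoUs - audioPtsUs.front() > kMaxAudioLeadUs) {
        audioPtsUs.pop_front();  // audio starts too early: discard this buffer
        ++dropped;
    }
    return dropped;
}
```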

Now let's go through it step by step:

1. First, a rough look at where audio data comes from and how its timing is controlled

Whenever audio data has been decoded, handleAnOutputBuffer is called.

NuPlayer::Decoder::handleAnOutputBuffer calls mRenderer->queueBuffer

NuPlayer::Renderer::queueBuffer posts kWhatQueueBuffer

NuPlayer::Renderer::onMessageReceived receives kWhatQueueBuffer

NuPlayer::Renderer::onQueueBuffer handles kWhatQueueBuffer

mAudioQueue.push_back(entry);

NuPlayer::Renderer::postDrainAudioQueue_l(int64_t delayUs) posts kWhatDrainAudioQueue

NuPlayer::Renderer::onDrainAudioQueue() receives kWhatDrainAudioQueue. This function calls mAudioSink->write(entry->mBuffer->data() + entry->mOffset, copy, false /* blocking */);, which copies the entry's data into mAudioSink.

NuPlayer::Renderer::drainAudioQueueUntilLastEOS() posts the audio data out and erases the first entry of mAudioQueue.
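Because `mAudioSink->write(...)` is non-blocking, the sink may accept only part of a buffer. This is why each entry tracks an `mOffset`. The following minimal sketch shows how a queue drain advances that offset and erases only fully written entries. It assumes a hypothetical `FakeSink` that accepts a fixed number of bytes, which is not the real `AudioSink` interface. `Entry` and `drainAudioQueue` are illustrative names as well.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical stand-in for a queued audio buffer.
struct Entry {
    std::vector<uint8_t> data;
    size_t offset = 0;  // bytes of this entry already written to the sink
};

// Hypothetical sink that accepts at most `freeBytes`, like a non-blocking write.
struct FakeSink {
    size_t freeBytes;
    std::vector<uint8_t> written;
    size_t write(const uint8_t *src, size_t size) {
        size_t n = std::min(size, freeBytes);
        written.insert(written.end(), src, src + n);
        freeBytes -= n;
        return n;  // may be less than `size`
    }
};

// Copies queued data into the sink until the queue is empty or the sink is full.
// Fully written entries are erased from the front; a partially written entry
// keeps its advanced offset for the next drain.
void drainAudioQueue(std::deque<Entry> &queue, FakeSink &sink) {
    while (!queue.empty()) {
        Entry &entry = queue.front();
        size_t copy = entry.data.size() - entry.offset;
        size_t written = sink.write(entry.data.data() + entry.offset, copy);
        entry.offset += written;
        if (entry.offset < entry.data.size()) {
            break;  // sink is full; try again on a later drain
        }
        queue.pop_front();
    }
}
```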

2. Now the key part: video rendering

Source: frameworks/av/media/libmediaplayerservice/nuplayer/NuPlayerRenderer.cpp

Overview: decoded video data has to be displayed. NuPlayerRenderer receives the decoded buffer in onQueueBuffer, pushes it onto the video queue, and then calls postDrainVideoQueue to handle playback.

Let's start with postDrainVideoQueue.

2.1 postDrainVideoQueue

```cpp
// Called without mLock acquired.
void NuPlayer::Renderer::postDrainVideoQueue() {
    if (mDrainVideoQueuePending  // a drain message is already pending
            || getSyncQueues()
            || (mPaused && mVideoSampleReceived)) {
        return;
    }

    if (mVideoQueue.empty()) {
        return;
    }

    QueueEntry &entry = *mVideoQueue.begin();  // take the first frame from mVideoQueue

    sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, this);
    msg->setInt32("drainGeneration", getDrainGeneration(false /* audio */));
    // fetch mVideoDrainGeneration: it is bumped on flush, so a drain message
    // posted before the flush is recognized as stale and ignored

    if (entry.mBuffer == NULL) {
        // EOS doesn't carry a timestamp.
        msg->post();
        mDrainVideoQueuePending = true;
        return;
    }

    int64_t nowUs = ALooper::GetNowUs();  // nowUs: current system time
    if (mFlags & FLAG_REAL_TIME) {
        // FLAG_REAL_TIME path: normally not taken, omitted here
        return;
    }

    int64_t mediaTimeUs;  // mediaTimeUs = pts
    CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));
    {
        Mutex::Autolock autoLock(mLock);
        if (mNeedVideoClearAnchor && !mHasAudio) {  // no audio stream, so nothing to sync against
            mNeedVideoClearAnchor = false;
            clearAnchorTime();
        }
        if (mAnchorTimeMediaUs < 0) {  // media time of the first played frame not recorded yet
            // record the anchor time
            mMediaClock->updateAnchor(mediaTimeUs, nowUs, mediaTimeUs);
            mAnchorTimeMediaUs = mediaTimeUs;
        }
    }
    mNextVideoTimeMediaUs = mediaTimeUs + 100000;  // estimated time of the next frame (pts + 100 ms)
    if (!mHasAudio) {
        // no audio: update MaxTimeMedia directly with mNextVideoTimeMediaUs
        mMediaClock->updateMaxTimeMedia(mNextVideoTimeMediaUs);
    }

    if (!mVideoSampleReceived || mediaTimeUs < mAudioFirstAnchorTimeMediaUs) {
        // no video sample received yet, or mediaTimeUs is earlier than the
        // first audio frame's time: post the message immediately
        msg->post();  // posts kWhatDrainVideoQueue
    } else {
        // 2x vsync duration
        int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

        // If delayUs is larger than two vsync durations, delay the message until
        // two vsync durations before the display time; otherwise continue immediately.
        mMediaClock->addTimer(msg, mediaTimeUs, -twoVsyncsUs);
    }

    mDrainVideoQueuePending = true;
}
```

The main job of postDrainVideoQueue is to update the anchor time and control when the kWhatDrainVideoQueue message is sent. When NuPlayer::Renderer::onMessageReceived receives kWhatDrainVideoQueue, it calls onDrainVideoQueue() and then postDrainVideoQueue().
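The scheduling rule at the end of postDrainVideoQueue can be written as plain arithmetic. The renderer implements it through `mMediaClock->addTimer(msg, mediaTimeUs, -twoVsyncsUs)`, which works from media time. The hypothetical helper below instead assumes the frame's target system time `realTimeUs` is already known and only computes the equivalent delay. It converts the VSYNC period from ns to µs in the same way as the renderer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper: how long to wait before posting the drain message so it
// fires two VSYNC periods before the frame's display time (0 = post now).
int64_t drainPostDelayUs(int64_t realTimeUs, int64_t nowUs, int64_t vsyncPeriodNs) {
    const int64_t twoVsyncsUs = 2 * (vsyncPeriodNs / 1000);  // ns -> us, then x2
    return std::max<int64_t>(0, realTimeUs - twoVsyncsUs - nowUs);
}
```

At 60 Hz the VSYNC period is about 16,666,667 ns, so `twoVsyncsUs` is 33,332 µs. A frame due 100 ms from now is therefore posted after 66,668 µs. A frame due in 20 ms is posted at once.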

onDrainVideoQueue implements the core of A/V sync. It works as follows:

```cpp
void NuPlayer::Renderer::onDrainVideoQueue() {
    QueueEntry *entry = &*mVideoQueue.begin();

    int64_t nowUs = ALooper::GetNowUs();  // nowUs: current system time
    int64_t realTimeUs;                   // system time at which this frame should be shown
    int64_t mediaTimeUs = -1;             // pts of this frame
    if (mFlags & FLAG_REAL_TIME) {
        CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));
        // with FLAG_REAL_TIME, realTimeUs is simply the pts
    } else {
        CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));
        // mediaTimeUs is the pts
        realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);
        // compute realTimeUs from the pts, the current system time and the anchor time
    }

    realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;
    // adjust realTimeUs again according to vsync

    bool tooLate = false;
    if (!mPaused) {  // currently playing
        setVideoLateByUs(nowUs - realTimeUs);  // how late this frame is
        tooLate = (mVideoLateByUs > 40000);    // frames more than 40 ms late are dropped
        if (tooLate) {
            ALOGV("video late by %lld us (%.2f secs)",
                 (long long)mVideoLateByUs, mVideoLateByUs / 1E6);
        } else {
            int64_t mediaUs = 0;
            mMediaClock->getMediaTime(realTimeUs, &mediaUs);
            // mediaUs = anchor media time + (realTimeUs - anchor real time) * playback rate
            ALOGV("rendering video at media time %.2f secs",
                    (mFlags & FLAG_REAL_TIME ? realTimeUs :
                    mediaUs) / 1E6);

            if (!(mFlags & FLAG_REAL_TIME)
                    && mLastAudioMediaTimeUs != -1
                    && mediaTimeUs > mLastAudioMediaTimeUs) {
                // If audio ends before video, video continues to drive media clock.
                // Also smooth out videos >= 10fps.
                mMediaClock->updateMaxTimeMedia(mediaTimeUs + 100000);
            }
        }
    } else {  // currently paused
        setVideoLateByUs(0);
        if (!mVideoSampleReceived && !mHasAudio) {
            // This will ensure that the first frame after a flush won't be used as anchor
            // when renderer is in paused state, because resume can happen any time after seek.
            clearAnchorTime();
        }
    }

    // Always render the first video frame while keeping stats on A/V sync.
    if (!mVideoSampleReceived) {
        realTimeUs = nowUs;
        tooLate = false;
    }

    entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);
    entry->mNotifyConsumed->setInt32("render", !tooLate);
    entry->mNotifyConsumed->post();
    mVideoQueue.erase(mVideoQueue.begin());
    entry = NULL;

    mVideoSampleReceived = true;

    if (!mPaused) {
        if (!mVideoRenderingStarted) {
            mVideoRenderingStarted = true;
            notifyVideoRenderingStart();
        }
        Mutex::Autolock autoLock(mLock);
        notifyIfMediaRenderingStarted_l();
    }
}
```
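The render/drop decision in onDrainVideoQueue comes down to a few lines. The sketch below is a hypothetical condensation: `RenderDecision` and `decideVideoFrame` are not AOSP names, and `firstFrameSinceFlush` stands in for `!mVideoSampleReceived`. It covers the 40 ms lateness threshold, the paused case, and the rule that the first video frame is always rendered at `nowUs`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical summary of what onDrainVideoQueue reports back for a frame.
struct RenderDecision {
    int64_t renderAtUs;  // display timestamp (the real code hands it over in ns)
    bool render;         // false means the frame is dropped
};

constexpr int64_t kTooLateThresholdUs = 40000;  // 40 ms

RenderDecision decideVideoFrame(int64_t realTimeUs, int64_t nowUs,
                                bool paused, bool firstFrameSinceFlush) {
    bool tooLate = false;
    if (!paused) {
        const int64_t lateByUs = nowUs - realTimeUs;  // positive: behind schedule
        tooLate = lateByUs > kTooLateThresholdUs;
    }
    // The first video frame is always rendered, immediately.
    if (firstFrameSinceFlush) {
        return {nowUs, true};
    }
    return {realTimeUs, !tooLate};
}
```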

Now let's look at exactly how realTimeUs is computed:

```cpp
// Input: pts and current system time. Output: realTimeUs.
int64_t NuPlayer::Renderer::getRealTimeUs(int64_t mediaTimeUs, int64_t nowUs) {
    int64_t realUs;
    if (mMediaClock->getRealTimeFor(mediaTimeUs, &realUs) != OK) {
        return nowUs;
    }
    return realUs;
}

status_t MediaClock::getRealTimeFor(
        int64_t targetMediaUs, int64_t *outRealUs) const {
    Mutex::Autolock autoLock(mLock);
    int64_t nowUs = ALooper::GetNowUs();  // re-read the current system time for better accuracy
    int64_t nowMediaUs;
    status_t status =
            getMediaTime_l(nowUs, &nowMediaUs, true /* allowPastMaxTime */);
    // computes nowMediaUs:
    // nowMediaUs = mAnchorTimeMediaUs + (realUs - mAnchorTimeRealUs) * (double)mPlaybackRate;
    if (status != OK) {
        return status;
    }
    *outRealUs = (targetMediaUs - nowMediaUs) / (double)mPlaybackRate + nowUs;
    // targetMediaUs - nowMediaUs: difference between this frame's pts and the current media time
    // outRealUs: the system time at which this frame should be displayed
    return OK;
}

status_t MediaClock::getMediaTime_l(
        int64_t realUs, int64_t *outMediaUs, bool allowPastMaxTime) const {
    if (mAnchorTimeRealUs == -1) {
        return NO_INIT;
    }

    int64_t mediaUs = mAnchorTimeMediaUs
            + (realUs - mAnchorTimeRealUs) * (double)mPlaybackRate;
    // mAnchorTimeMediaUs: media time recorded when the first frame started playing
    // mAnchorTimeRealUs: system time recorded at that same moment
    // (realUs - mAnchorTimeRealUs): system time elapsed since playback started
    // mediaUs: the current media time
    if (mediaUs > mMaxTimeMediaUs && !allowPastMaxTime) {
        mediaUs = mMaxTimeMediaUs;
    }
    if (mediaUs < mStartingTimeMediaUs) {
        mediaUs = mStartingTimeMediaUs;
    }
    if (mediaUs < 0) {
        mediaUs = 0;
    }
    *outMediaUs = mediaUs;
    return OK;
}
```
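The anchor arithmetic in getMediaTime_l and getRealTimeFor can be exercised outside Android with a small class. `SimpleMediaClock` is a hypothetical sketch, not `android::MediaClock`. It keeps the same formulas and clamps, but returns `bool` instead of `status_t`. It also takes `nowUs` as a parameter rather than reading `ALooper::GetNowUs()`, so it can be tested deterministically.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical re-implementation of the anchor formulas shown above.
class SimpleMediaClock {
public:
    void updateAnchor(int64_t anchorMediaUs, int64_t anchorRealUs) {
        mAnchorTimeMediaUs = anchorMediaUs;
        mAnchorTimeRealUs = anchorRealUs;
    }
    void setPlaybackRate(double rate) { assert(rate > 0); mPlaybackRate = rate; }
    void setMaxTimeMedia(int64_t us) { mMaxTimeMediaUs = us; }

    // mediaUs = anchorMedia + (realUs - anchorReal) * rate, then clamped.
    bool getMediaTime(int64_t realUs, int64_t *outMediaUs, bool allowPastMaxTime) const {
        if (mAnchorTimeRealUs == -1) return false;  // no anchor yet (NO_INIT)
        int64_t mediaUs = static_cast<int64_t>(
                mAnchorTimeMediaUs + (realUs - mAnchorTimeRealUs) * mPlaybackRate);
        if (mediaUs > mMaxTimeMediaUs && !allowPastMaxTime) mediaUs = mMaxTimeMediaUs;
        mediaUs = std::max(mediaUs, mStartingTimeMediaUs);
        mediaUs = std::max<int64_t>(mediaUs, 0);
        *outMediaUs = mediaUs;
        return true;
    }

    // outRealUs = (targetMediaUs - nowMediaUs) / rate + nowUs
    bool getRealTimeFor(int64_t targetMediaUs, int64_t nowUs, int64_t *outRealUs) const {
        int64_t nowMediaUs;
        if (!getMediaTime(nowUs, &nowMediaUs, true /* allowPastMaxTime */)) return false;
        *outRealUs = static_cast<int64_t>((targetMediaUs - nowMediaUs) / mPlaybackRate + nowUs);
        return true;
    }

private:
    int64_t mAnchorTimeMediaUs = -1;
    int64_t mAnchorTimeRealUs = -1;
    int64_t mStartingTimeMediaUs = -1;
    int64_t mMaxTimeMediaUs = INT64_MAX;
    double mPlaybackRate = 1.0;
};
```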

In summary, realTimeUs is computed as follows (for playback rate 1.0):

realTimeUs = PTS - nowMediaUs + nowUs

= PTS - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

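mAnchorTimeMediaUs is the anchor media timestamp. Think of it as the first media timestamp recorded when playback started.

mAnchorTimeRealUs is the anchor's real (system) timestamp.

nowUs - mAnchorTimeRealUs is how much system time has passed since playback started.

Adding mAnchorTimeMediaUs gives "the media timestamp that corresponds to the current system time".

Subtracting that from PTS gives "how long until this frame should be played".

Finally, adding the current system time nowUs gives the system time at which this frame should be displayed.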

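Next, let's look at what realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000; does. VideoFrameScheduler::schedule() takes the render time in nanoseconds, smooths the frame cadence against the display's VSYNC, and aligns the result to the center between two VSYNC edges: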

```cpp
nsecs_t VideoFrameScheduler::schedule(nsecs_t renderTime) {
    nsecs_t origRenderTime = renderTime;

    nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);
    if (now >= mVsyncRefreshAt) {
        updateVsync();
    }

    // without VSYNC info, there is nothing to do
    if (mVsyncPeriod == 0) {
        ALOGV("no vsync: render=%lld", (long long)renderTime);
        return renderTime;
    }

    // ensure vsync time is well before (corrected) render time
    if (mVsyncTime > renderTime - 4 * mVsyncPeriod) {
        mVsyncTime -=
            ((mVsyncTime - renderTime) / mVsyncPeriod + 5) * mVsyncPeriod;
    }

    // Video presentation takes place at the VSYNC _after_ renderTime. Adjust renderTime
    // so this effectively becomes a rounding operation (to the _closest_ VSYNC.)
    renderTime -= mVsyncPeriod / 2;

    const nsecs_t videoPeriod = mPll.addSample(origRenderTime);
    if (videoPeriod > 0) {
        // Smooth out rendering
        size_t N = 12;
        nsecs_t fiveSixthDev =
            abs(((videoPeriod * 5 + mVsyncPeriod) % (mVsyncPeriod * 6)) - mVsyncPeriod)
                / (mVsyncPeriod / 100);
        // use 20 samples if we are doing 5:6 ratio +- 1% (e.g. playing 50Hz on 60Hz)
        if (fiveSixthDev < 12) {  /* 12% / 6 = 2% */
            N = 20;
        }

        nsecs_t offset = 0;
        nsecs_t edgeRemainder = 0;
        for (size_t i = 1; i <= N; i++) {
            offset +=
                (renderTime + mTimeCorrection + videoPeriod * i - mVsyncTime) % mVsyncPeriod;
            edgeRemainder += (videoPeriod * i) % mVsyncPeriod;
        }
        mTimeCorrection += mVsyncPeriod / 2 - offset / (nsecs_t)N;
        renderTime += mTimeCorrection;
        nsecs_t correctionLimit = mVsyncPeriod * 3 / 5;
        edgeRemainder = abs(edgeRemainder / (nsecs_t)N - mVsyncPeriod / 2);
        if (edgeRemainder <= mVsyncPeriod / 3) {
            correctionLimit /= 2;
        }

        // estimate how many VSYNCs a frame will spend on the display
        nsecs_t nextVsyncTime =
            renderTime + mVsyncPeriod - ((renderTime - mVsyncTime) % mVsyncPeriod);
        if (mLastVsyncTime >= 0) {
            size_t minVsyncsPerFrame = videoPeriod / mVsyncPeriod;
            size_t vsyncsForLastFrame = divRound(nextVsyncTime - mLastVsyncTime, mVsyncPeriod);
            bool vsyncsPerFrameAreNearlyConstant =
                periodicError(videoPeriod, mVsyncPeriod) / (mVsyncPeriod / 20) == 0;

            if (mTimeCorrection > correctionLimit &&
                    (vsyncsPerFrameAreNearlyConstant || vsyncsForLastFrame > minVsyncsPerFrame)) {
                // remove a VSYNC
                mTimeCorrection -= mVsyncPeriod / 2;
                renderTime -= mVsyncPeriod / 2;
                nextVsyncTime -= mVsyncPeriod;
                if (vsyncsForLastFrame > 0)
                    --vsyncsForLastFrame;
            } else if (mTimeCorrection < -correctionLimit &&
                    (vsyncsPerFrameAreNearlyConstant || vsyncsForLastFrame == minVsyncsPerFrame)) {
                // add a VSYNC
                mTimeCorrection += mVsyncPeriod / 2;
                renderTime += mVsyncPeriod / 2;
                nextVsyncTime += mVsyncPeriod;
                if (vsyncsForLastFrame < ULONG_MAX)
                    ++vsyncsForLastFrame;
            }
            ATRACE_INT("FRAME_VSYNCS", vsyncsForLastFrame);
        }
        mLastVsyncTime = nextVsyncTime;
    }

    // align rendertime to the center between VSYNC edges
    renderTime -= (renderTime - mVsyncTime) % mVsyncPeriod;
    renderTime += mVsyncPeriod / 2;
    ALOGV("adjusting render: %lld => %lld", (long long)origRenderTime, (long long)renderTime);
    ATRACE_INT("FRAME_FLIP_IN(ms)", (renderTime - now) / 1000000);
    return renderTime;
}
```
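Most of schedule() is PLL-based smoothing, but its final rounding step is easier to understand in isolation. The simplified sketch below uses a hypothetical `snapToVsyncCenter` and deliberately omits the PLL, `mTimeCorrection`, and the VSYNC add/remove logic. It keeps only the "ensure vsync time is well before render time" adjustment and the alignment to the center between VSYNC edges. Times are in nanoseconds, as in the original.

```cpp
#include <cassert>
#include <cstdint>

// Simplified rounding step: moves renderTime to the middle of the VSYNC interval
// that ends at the VSYNC closest to the original renderTime.
int64_t snapToVsyncCenter(int64_t renderTime, int64_t vsyncTime, int64_t vsyncPeriod) {
    if (vsyncPeriod <= 0) return renderTime;  // no VSYNC info: nothing to do
    // Keep the reference VSYNC well before renderTime so the modulo below is non-negative.
    if (vsyncTime > renderTime - 4 * vsyncPeriod) {
        vsyncTime -= ((vsyncTime - renderTime) / vsyncPeriod + 5) * vsyncPeriod;
    }
    renderTime -= vsyncPeriod / 2;                         // presentation happens at the next VSYNC
    renderTime -= (renderTime - vsyncTime) % vsyncPeriod;  // back to the preceding VSYNC edge
    renderTime += vsyncPeriod / 2;                         // center between VSYNC edges
    return renderTime;
}
```

Take a 16 ms VSYNC period with an edge at 0. Any render time in [40 ms, 56 ms) then maps to 40 ms, the midpoint just before the 48 ms VSYNC at which the frame is shown.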

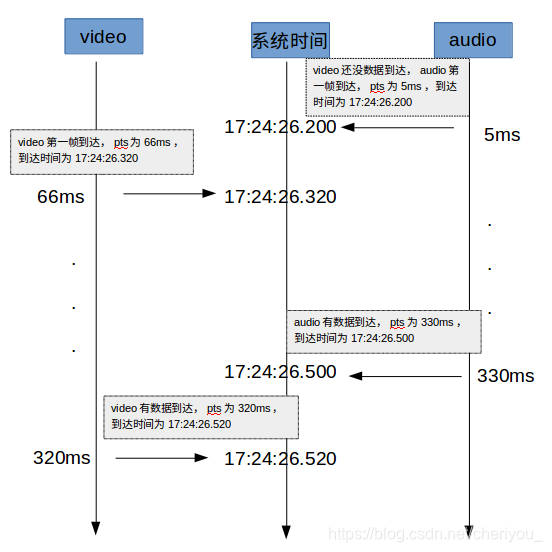

3. To make this easier to follow, here is the whole process in graphical form

As shown in the figure above:

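- step1: the first audio frame arrives with pts 5 ms at 17:24:26.200. mAnchorTimeMediaUs is set to 5 ms and mAnchorTimeRealUs to 17:24:26.200.

- step2: when the first video frame arrives, nowUs = 17:24:26.320 and mediaTimeUs = 66ms.

realTimeUs = mediaTimeUs - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

= 66ms - (5ms + (17:24:26.320 - 17:24:26.200)) + 17:24:26.320

= 66ms - 125ms + 17:24:26.320

= 17:24:26.261

delaytime = nowUs - realTimeUs = 17:24:26.320 - 17:24:26.261 = 59ms

delaytime > 40ms, so this frame is dropped. In the onDrainVideoQueue code above, the very first video frame after start or flush is always rendered because mVideoSampleReceived is still false. The example ignores that special case to illustrate the threshold.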

- step3: more audio data arrives. Nothing special happens here, so this step is skipped.

- step4: more video data arrives. nowUs = 17:24:26.520, mediaTimeUs = 320ms.

realTimeUs = mediaTimeUs - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

= 320ms - (5ms + (17:24:26.520 - 17:24:26.200)) + 17:24:26.520

= 17:24:26.515

delaytime = nowUs - realTimeUs = 17:24:26.520 - 17:24:26.515 = 5ms

delaytime < 40ms, so this frame is rendered.
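A few lines of C++ can check the arithmetic of the two video steps. This sketch uses only the summary formula, with playback rate 1.0 and the VSYNC adjustment ignored. It measures times in microseconds after 17:24:26.000. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// realTimeUs = PTS - (anchorMedia + (now - anchorReal)) + now, at playback rate 1.0.
int64_t realTimeUsFor(int64_t ptsUs, int64_t nowUs,
                      int64_t anchorMediaUs, int64_t anchorRealUs) {
    const int64_t nowMediaUs = anchorMediaUs + (nowUs - anchorRealUs);
    return ptsUs - nowMediaUs + nowUs;
}

// Same 40 ms threshold as onDrainVideoQueue.
bool isTooLate(int64_t realTimeUs, int64_t nowUs) {
    return nowUs - realTimeUs > 40000;
}

// Anchor from step 1: media 5 ms at real time .200 s.
// Step 2: pts 66 ms at .320 s  -> realTime .261 s, 59 ms late -> dropped.
// Step 4: pts 320 ms at .520 s -> realTime .515 s,  5 ms late -> rendered.
```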

That's the whole A/V sync process. Hope it made sense!

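This article explains the principles of audio/video synchronization. It covers the presentation and decoding timestamps of frames, the sync flow and algorithm, and the three strategies: sync audio to video, sync video to audio, and sync both to the system clock. It then details NuPlayer's sync mechanism, including timestamp alignment, anchor-time calculation, and frame render control.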

