K8s Cluster Setup Guide

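This document explains how to build a small Kubernetes (k8s) cluster with two master nodes and one worker node. The two masters provide control-plane redundancy. keepalived holds a virtual IP (VIP) that floats between them and moves automatically when the active master fails. Behind the VIP, HAProxy load-balances API server traffic, giving the cluster a single, stable entry point. With only two masters, the stacked etcd cluster has two members and needs both of them for quorum, so losing either master still stops etcd. Tolerating the loss of one control-plane node requires at least three.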

Cluster plan

| Hostname (CentOS 7.9) | IP address | Gateway | DNS | CPU | Memory | Disk |
|---|---|---|---|---|---|---|
| master1 | 10.10.16.91 | 10.10.19.254 | 192.168.200.254 | 4 cores | 8 GB | 400 GB |
| master2 | 10.10.16.92 | 10.10.19.254 | 192.168.200.254 | 4 cores | 8 GB | 400 GB |
| node1 | 10.10.16.93 | 10.10.19.254 | 192.168.200.254 | 4 cores | 8 GB | 400 GB |

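VIP: 10.10.16.94 (note: the keepalived, kubeadm and kubeadm join examples below were recorded with the VIP 10.10.19.141; whichever address you use, it must be identical in all of those places)

Load balancer (HAProxy) endpoint: 10.10.16.94:8443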

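Components:

Container runtime: containerd, the low-level software that runs the containers

Network plugin: flannel, provides network connectivity between Pods on different nodes

keepalived: manages the VIP and moves it to the other master on failure, avoiding a single point of failure

HAProxy: load balancer that distributes API server traffic across the masters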

Setup steps

*****Preliminary steps*****

Firewall, swap, SELinux and related settings (on every node)

Edit the hosts file

vim /etc/hosts

# Add hostname resolution for all cluster nodes

10.10.16.91 master1

10.10.16.92 master2

10.10.16.93 node1
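The same entries must be added on every node. A small idempotent helper avoids duplicate lines when the step is re-run. This is a minimal sketch; `add_host_entry` is a hypothetical helper, not part of any tool, and the hosts file path is a parameter so it can be tried on a scratch copy first:

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname already appears on a non-comment line, so re-runs add no duplicates.
# Usage: add_host_entry IP NAME [HOSTS_FILE]   (HOSTS_FILE defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  # awk exits 0 if NAME is found as a hostname field (fields 2..NF), 1 otherwise
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

For example: `for e in "10.10.16.91 master1" "10.10.16.92 master2" "10.10.16.93 node1"; do add_host_entry $e; done` (the unquoted `$e` deliberately splits into IP and name).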

Stop CentOS's default firewalld firewall

# Stop the firewall and keep it from starting at boot

systemctl stop firewalld && systemctl disable firewalld

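Disable the swap partition

Kubernetes nodes must respond quickly to container scheduling, resource allocation and network traffic. If memory is swapped out to disk:

- container processes suffer heavy latency from constant disk I/O (for example, Pod response times grow);

- overall node load rises, which can leave processes hung or make the node unavailable.

In addition, the kubelet refuses to start by default while swap is enabled (its failSwapOn setting defaults to true).

swapoff -a # disable swap immediately (until reboot)

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab # comment out the swap entry so swap stays off after reboot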
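To confirm that swap is really off, `free -m` should report 0 on the Swap line, and /etc/fstab should no longer contain an active swap entry. The following is a minimal check of the fstab part. The function name is hypothetical, and it assumes the usual fstab layout where field 3 is the filesystem type:

```shell
# Hypothetical check: succeed (exit 0) only if the fstab file has no active
# swap entry left, i.e. every line whose 3rd field (filesystem type) is
# "swap" has been commented out. Usage: fstab_swap_disabled [FSTAB]
fstab_swap_disabled() {
  local fstab="${1:-/etc/fstab}"
  [ -r "$fstab" ] || return 2
  # awk exits 0 when it finds an uncommented swap line; "!" inverts that
  ! awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"
}
```

For example: `fstab_swap_disabled && echo "no active swap entry in /etc/fstab"`.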

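Disable SELinux. SELinux is a security-enhancement policy that blocks some high-risk operations, such as containers accessing the host filesystem (which Pod networking needs), so this deployment turns it off.

setenforce 0 # switch to permissive mode immediately (lasts until reboot)

# Disable permanently (takes effect after the next reboot)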

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/s

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

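Enable IPv4 forwarding so that packets can be routed to and from Pods across nodes

echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf && sysctl -p

yum install -y ipvsadm # ipvsadm: userspace tool for IPVS, the load-balancing module in the Linux kernel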
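Installing ipvsadm does not load the IPVS kernel modules. kube-proxy's ipvs mode (configured later in kubeadm-init.yaml) relies on those modules, so loading them explicitly at every boot is a common precaution. This sketch assumes CentOS 7's 3.10 kernel, where IPv4 connection tracking is the separate `nf_conntrack_ipv4` module (kernels 4.19 and newer use `nf_conntrack` instead). The helper name and the file name ipvs.conf are our own choices:

```shell
# Hypothetical helper: write a modules-load.d file listing the IPVS modules
# so systemd-modules-load loads them at boot. Target dir is a parameter.
# Assumption: 3.10 kernel (CentOS 7); on >= 4.19 replace nf_conntrack_ipv4
# with nf_conntrack.
write_ipvs_modules_conf() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir"
  cat > "$dir/ipvs.conf" <<'EOF'
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack_ipv4
EOF
}
```

To load them right away as well: `write_ipvs_modules_conf && while read -r m; do modprobe "$m"; done < /etc/modules-load.d/ipvs.conf`, then check with `lsmod | grep ip_vs`.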

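Load the bridge netfilter module (the Linux bridge does virtual layer-2 forwarding; br_netfilter makes bridged traffic pass through iptables, which kube-proxy and flannel rely on)

# Install bridge-utils (provides the brctl tool)

yum install -y epel-release && yum install -y bridge-utils

modprobe br_netfilter # load the kernel module now

echo 'br_netfilter' >> /etc/modules-load.d/bridge.conf # load it automatically at boot

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >> /etc/sysctl.conf

sysctl -p # reload /etc/sysctl.conf (the net.bridge.* keys only exist once br_netfilter is loaded)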
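Appending to /etc/sysctl.conf with echo adds duplicate lines every time the step is re-run. An alternative sketch keeps the three Kubernetes parameters in a dedicated drop-in file. The path /etc/sysctl.d/k8s.conf and the helper name are assumptions. Files under /etc/sysctl.d are applied by `sysctl --system`; `sysctl -p` with no argument only reads /etc/sysctl.conf.

```shell
# Hypothetical helper: (re)write a sysctl drop-in with the kernel parameters
# used above. Overwriting with ">" keeps the file free of duplicates.
write_k8s_sysctl_conf() {
  local file="${1:-/etc/sysctl.d/k8s.conf}"
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}
```

Typical use: `modprobe br_netfilter && write_k8s_sysctl_conf && sysctl --system`.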

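Update package sources and configure time synchronization

Cluster nodes need synchronized clocks. The timestamps that record when a Pod or other component ran into problems, and the periods of scheduled tasks, are only meaningful if every node agrees on the time.

yum install -y yum-utils # yum helper tools (provides yum-config-manager)

yum install -y epel-release # install the EPEL repository

yum install ntpdate -y # install the time synchronization tool

ntpdate cn.pool.ntp.org # synchronize with a public NTP server once

crontab -e

# In the editor, add the line below (runs at minute 0 of every hour). Note that "* */1 * * *" would run every minute, not hourly.

0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

service crond restart # restart crond (not strictly required: crond picks up crontab changes on its own)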
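`crontab -e` is interactive. On several nodes, the hourly job can be installed non-interactively instead. In this sketch, the hypothetical `with_hourly_ntpdate` filter reads an existing crontab on stdin, drops any previous ntpdate line, and appends the hourly entry:

```shell
# Hypothetical filter: copy the crontab read on stdin, minus any old ntpdate
# line, then append a job that syncs the clock at minute 0 of every hour.
with_hourly_ntpdate() {
  grep -v 'ntpdate' || true   # grep exits 1 when it outputs nothing; not an error here
  echo '0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org'
}
```

Install it with `crontab -l 2>/dev/null | with_hourly_ntpdate | crontab -` and check with `crontab -l`.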

Switch to the Aliyun mirrors (the default CentOS 7 repositories, including EPEL, are no longer updated)

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

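sed -i '/aliyuncs/d' /etc/yum.repos.d/*.repo # drop the Aliyun internal-network mirror entries, which are only reachable from inside Alibaba Cloud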

yum clean all && yum makecache fast

*****Multi-master VIP and load balancing*****(on the master nodes)

Deploy keepalived

yum install -y keepalived # install

vim /etc/keepalived/keepalived.conf # edit the configuration file

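! Configuration File for keepalived
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id master_keepalived    # router name identifying this node
   vrrp_skip_check_adv_addr
   #vrrp_strict                   # keep commented out: if the VIP comes up but cannot be pinged, vrrp_strict is the usual cause
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER                  # on the backup machine (master2) change to BACKUP
    interface ens192              # NIC carrying the node IP; check the name with "ip addr"
    virtual_router_id 182         # must be identical on both masters
    priority 30                   # the node with the highest priority holds the VIP; give master2 a lower value
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 20240325        # shared VRRP password, must match on both masters
    }
    virtual_ipaddress {
        10.10.19.141              # the virtual IP (VIP)
    }
}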

# ------------------------ save and quit (:wq) -----------------

# Start keepalived and enable it at boot

systemctl enable keepalived.service && systemctl start keepalived.service
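master2 uses the same keepalived.conf, except for the two lines the comments call out: `state BACKUP` and a lower priority. The following sketch derives it from master1's file with sed. The helper name and the default priority 20 are assumptions; any value below master1's 30 works. The interface name still has to be checked on master2.

```shell
# Hypothetical helper: copy a MASTER keepalived.conf to DST with
# "state MASTER" -> "state BACKUP" and the priority number replaced,
# leaving indentation and trailing comments untouched (GNU sed syntax).
make_backup_keepalived_conf() {
  local src="$1" dst="$2" prio="${3:-20}"
  sed -e 's/^\([[:space:]]*state[[:space:]]\+\)MASTER/\1BACKUP/' \
      -e "s/^\([[:space:]]*priority[[:space:]]\+\)[0-9]\+/\1${prio}/" \
      "$src" > "$dst"
}
```

For example, on master2: `scp master1:/etc/keepalived/keepalived.conf /tmp/ka-master1.conf && make_backup_keepalived_conf /tmp/ka-master1.conf /etc/keepalived/keepalived.conf`. Then start keepalived as above, and `ip addr show` on master1 should list the VIP.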

Deploy HAProxy (only the master nodes need the load balancer)

yum install haproxy -y

vim /etc/haproxy/haproxy.cfg

global
    log /dev/log local1 warning
    chroot /var/lib/haproxy
    user haproxy
    group haproxy
    daemon
    nbproc 1

defaults
    log global
    timeout connect 5s
    timeout client 10m
    timeout server 10m

listen kube-master
    bind :8443                    # clients reach the API servers through port 8443
    mode tcp
    option tcplog
    option dontlognull
    option dontlog-normal
    balance roundrobin
    # one line per master; "check" enables health checks, without it inter/fall/rise have no effect
    server master1 10.10.16.91:6443 check inter 2s fall 3 rise 5
    server master2 10.10.16.92:6443 check inter 2s fall 3 rise 5

# --------------------- save and quit (:wq) -----------------

# Start haproxy and enable it at boot

systemctl start haproxy.service && systemctl enable haproxy.service
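The `server` lines must list exactly the control-plane nodes in the plan, each with `check`, so that HAProxy stops routing to an API server that is down. The following hypothetical helper prints those lines from name=IP pairs. It assumes the API servers listen on the default port 6443:

```shell
# Hypothetical helper: print one haproxy "server" line per name=ip argument,
# with the same health-check timing as the config above.
haproxy_server_lines() {
  local pair
  for pair in "$@"; do
    # ${pair%%=*} is the part before "=", ${pair#*=} the part after it
    printf '    server %s %s:6443 check inter 2s fall 3 rise 5\n' "${pair%%=*}" "${pair#*=}"
  done
}
```

For example, `haproxy_server_lines master1=10.10.16.91 master2=10.10.16.92`. After editing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the configuration before the service is restarted.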

*****Deploy containerd and Kubernetes on all machines*****

# If yum install cannot find kubelet-1.26.3, configure the Aliyun Kubernetes repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Install the specific Kubernetes version

yum install -y kubelet-1.26.3 kubeadm-1.26.3 kubectl-1.26.3

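# Start kubelet. Whether it starts at this point depends on the kubelet package: it needs a container runtime (containerd, installed below), and until kubeadm init/join writes its configuration it keeps restarting, which is expected

# Fix 1: point kubelet at containerd in its extra-arguments file. On RPM-based systems the kubeadm drop-in for kubelet reads /etc/sysconfig/kubelet (/etc/default/kubelet is the Debian/Ubuntu location). --container-runtime=remote is deprecated and was removed in 1.27, so drop it on newer versions

cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

EOF

systemctl enable kubelet && systemctl start kubelet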

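# Add the Docker CE repository (Aliyun mirror), which provides the containerd.io package (skip this if containerd.io can already be installed directly)

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo # yum-config-manager comes from yum-utils

# Install containerd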

yum install -y containerd.io

systemctl start containerd && systemctl enable containerd

# Point crictl at containerd's CRI endpoint (the first command uses > so an existing file is overwritten rather than appended to)

echo 'runtime-endpoint: unix:///run/containerd/containerd.sock' > /etc/crictl.yaml

echo 'image-endpoint: unix:///run/containerd/containerd.sock' >> /etc/crictl.yaml

echo 'timeout: 10' >> /etc/crictl.yaml

echo 'debug: false' >> /etc/crictl.yaml

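Install the CNI plugins manually

Installing containerd from the yum repository also installs runc, but not the CNI plugins, so they have to be installed by hand.

CNI (Container Network Interface) is the standard that defines how a container runtime (such as Docker or containerd) interacts with network plugins. flannel's CNI plugin delegates to the bridge and host-local plugins from this package.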

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

mkdir -p /opt/cni/bin

tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

# Verify the installation

[root@master1 ~]# /opt/cni/bin/host-local

CNI host-local plugin v1.3.0

CNI protocol versions supported: 0.1.0, 0.2.0, 0.3.0, 0.3.1, 0.4.0, 1.0.0

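# Check the cgroup version: tmpfs means cgroup v1 (the CentOS 7 default), cgroup2fs would mean cgroup v2

[root@master1 ~]# stat -fc %T /sys/fs/cgroup/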

tmpfs
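The filesystem type printed by the stat command shows whether the node runs cgroup v1 or v2. The following small mapping sketch uses a hypothetical function name:

```shell
# Hypothetical helper: map the filesystem type of /sys/fs/cgroup
# (output of "stat -fc %T /sys/fs/cgroup/") to the cgroup version.
cgroup_version() {
  case "$1" in
    cgroup2fs) echo v2 ;;
    tmpfs)     echo v1 ;;
    *)         echo unknown ;;
  esac
}
```

For example, `cgroup_version "$(stat -fc %T /sys/fs/cgroup/)"` prints `v1` on the CentOS 7 node shown above.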

Configure the cgroup driver for containerd

# Generate containerd's default configuration

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

# Use the systemd cgroup driver so containerd matches the kubelet's cgroupDriver: systemd

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# Replace the default pause (sandbox) image with the Aliyun mirror; the pattern starts at "sandbox_image", so the line's indentation is kept

sed -i 's#sandbox_image = .*#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"#' /etc/containerd/config.toml

systemctl restart containerd
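A sed expression that matches nothing fails silently. Before moving on, it is worth confirming that both edits actually landed in config.toml. The following sketch uses a hypothetical helper to check the file:

```shell
# Hypothetical check: succeed only if config.toml enables the systemd cgroup
# driver and uses the Aliyun pause:3.9 image as the sandbox image.
containerd_config_ok() {
  local cfg="${1:-/etc/containerd/config.toml}"
  grep -Eq '^[[:space:]]*SystemdCgroup = true' "$cfg" &&
    grep -Eq '^[[:space:]]*sandbox_image = "registry\.aliyuncs\.com/google_containers/pause:3\.9"' "$cfg"
}
```

For example: `containerd_config_ok && echo "containerd config OK"`. After the restart, `containerd config dump` shows the configuration containerd actually loaded.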

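# Alternative repository: the Tsinghua mirror (only needed if the Aliyun repository above is unreachable; it overwrites kubernetes.repo, and gpgcheck=0 turns off package signature checks)

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo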

[kubernetes]

name=kubernetes

baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

EOF

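*****Cluster initialization on the primary master*****(run on master1 only)

Install the network plugin (flannel here). flannel then configures Pod networking automatically on every master and node that joins. Run this only after the kubeadm init step below has succeeded and kubectl is configured, because kubectl needs a running API server:

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Edit the cluster initialization configuration file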

kubeadm config print init-defaults > kubeadm-init.yaml

vim kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.10.16.91   # master1's own IP (the VIP goes in controlPlaneEndpoint)
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock   # containerd socket
  imagePullPolicy: IfNotPresent
  name: master1                   # node (host) name
  taints: null                    # null/unset: kubeadm applies the default control-plane taint; [] means no taint
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.10.19.141:8443   # VIP plus the HAProxy port
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.26.3         # Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16        # flannel's default network; must match flannel's config or flannel will not start
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd             # kubelet cgroup driver set to systemd (matches containerd)
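The podSubnet comment says the value must equal flannel's network. The following sketch compares it with the `"Network"` value in net-conf.json inside kube-flannel.yml. It assumes the manifest has first been downloaded locally (for example with wget from the URL used above) and keeps its usual `"Network": "<CIDR>"` line. The helper name is hypothetical:

```shell
# Hypothetical check: succeed only if kubeadm's podSubnet equals the
# "Network" CIDR in flannel's net-conf.json (both read from local files).
pod_cidrs_match() {
  local kubeadm_cfg="$1" flannel_yml="$2" pod_subnet flannel_net
  # kubeadm line looks like "  podSubnet: 10.244.0.0/16   # comment"
  pod_subnet=$(awk '$1 == "podSubnet:" { print $2 }' "$kubeadm_cfg")
  # flannel line looks like '      "Network": "10.244.0.0/16",'
  flannel_net=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$flannel_yml" | head -n 1)
  [ -n "$pod_subnet" ] && [ "$pod_subnet" = "$flannel_net" ]
}
```

For example: `wget -O kube-flannel.yml https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml && pod_cidrs_match kubeadm-init.yaml kube-flannel.yml && echo "CIDRs match"`.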

Pre-initialization check and image pull

# List the images and versions the configuration file will use

[root@master1 ~]# kubeadm config images list --config kubeadm-init.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.3

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.3

registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.3

registry.aliyuncs.com/google_containers/kube-proxy:v1.26.3

registry.aliyuncs.com/google_containers/pause:3.9

registry.aliyuncs.com/google_containers/etcd:3.5.6-0

registry.aliyuncs.com/google_containers/coredns:v1.9.3

# Pull the images; watch the network, as the pull may fail without unrestricted internet access

[root@master1 ~]# kubeadm config images pull --config kubeadm-init.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.26.3

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.9.3

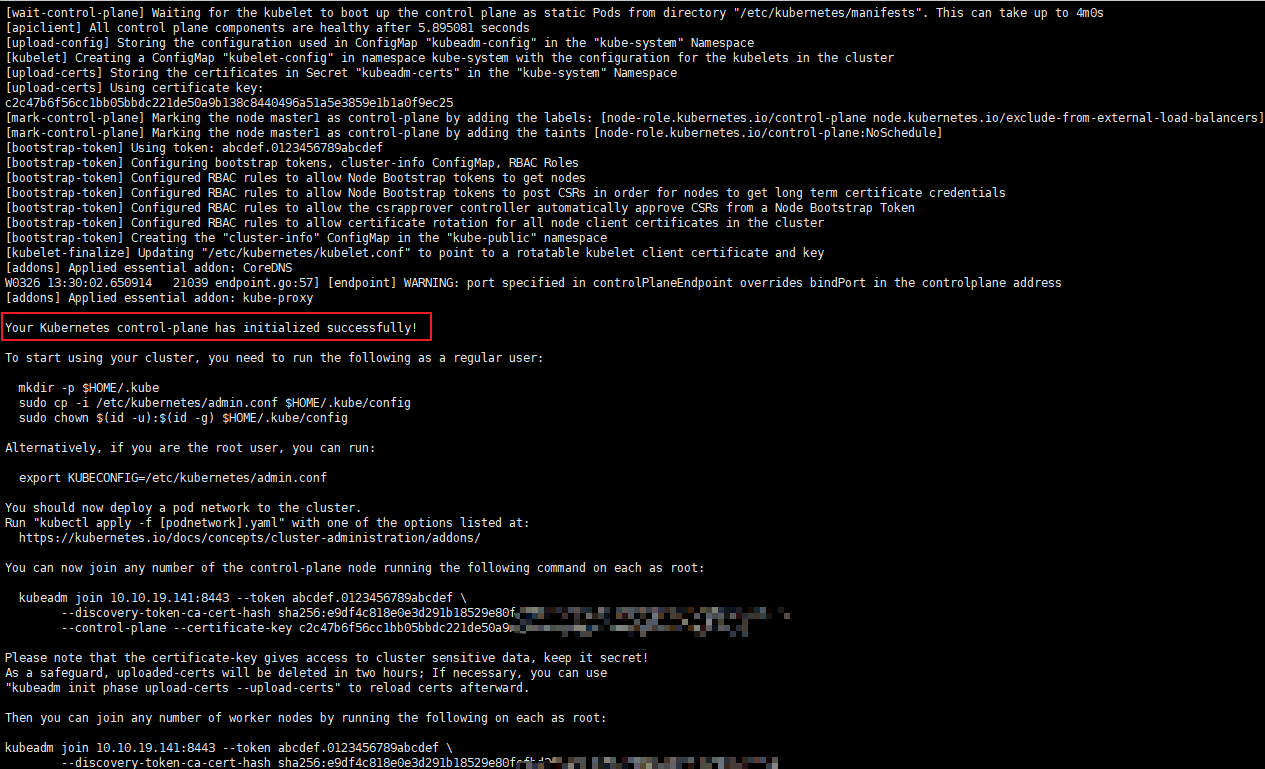

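Initialize the cluster

kubeadm init --config kubeadm-init.yaml --upload-certs

# Configure kubectl access for the current user

export KUBECONFIG=/etc/kubernetes/admin.conf

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# If no error is reported, initialization succeeded

# If an error occurs, first reset the node to its initial state; otherwise the ports and files already in use will block the next initialization attempt

systemctl stop kubelet

kubeadm reset -f # force-reset this node (use with care: back up any data in the cluster first)

rm -rf /var/lib/kubelet/* # remove leftover kubelet data

# kubeadm reset does not clean CNI configuration, iptables/IPVS rules or kubeconfig files; remove them too if needed (e.g. rm -rf /etc/cni/net.d $HOME/.kube/config; ipvsadm --clear)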

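Generate the join command (on master1)

# --certificate-key needs a value: the key printed by kubeadm init --upload-certs, or a new one from kubeadm init phase upload-certs --upload-certs (see below); replace <certificate-key> with it

kubeadm token create --print-join-command --certificate-key <certificate-key> --v=5

# Example control-plane join command as run on master2:

[root@master2 ~]# kubeadm join 10.10.19.141:8443 --token t45zd2.f9njrzf3dn9w0j9y --discovery-token-ca-cert-hash sha256:e9df4c818e0e3d291b18529e80fcfbd3fb2651879c0985db2e97a235b5c04f64 --control-plane --certificate-key <certificate-key> --v=5 --cri-socket unix:///run/containerd/containerd.sock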

*****节点加入集群*****

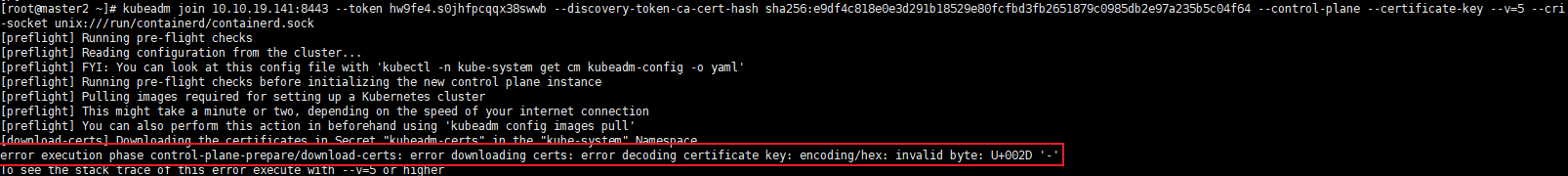

master2加入集群

# 后面内容需要加入,指定容器运行时

# --cri-socket unix:///run/containerd/containerd.sock

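The join may fail with an error at this step.

Solution: on master1, re-upload the control-plane certificates to obtain a new certificate key, then append --certificate-key <key> to the end of the join command above. The key is not a cluster ID or UUID. It is the 64-character hex string printed by the command below, used to decrypt the certificates stored in the kubeadm-certs Secret, and that Secret is deleted after two hours.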

[root@master1 ~]# kubeadm init phase upload-certs --upload-certs --config kubeadm-init.yaml

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

b80e18d761b1614f8ee53b1d1018aa09bd54efb37efb7391013b76ef3e4f04cb
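The key can be captured straight from the upload-certs output and passed to `kubeadm token create --print-join-command --certificate-key`, which prints a complete control-plane join command. This sketch assumes the key is printed on its own line, as in the output above. `extract_cert_key` is a hypothetical helper:

```shell
# Hypothetical filter: read the output of
#   kubeadm init phase upload-certs --upload-certs ...
# on stdin and print the certificate key, assumed to be the last line that
# consists of exactly 64 lowercase hex characters.
extract_cert_key() {
  grep -Eo '^[0-9a-f]{64}$' | tail -n 1
}
```

For example, on master1:
`KEY=$(kubeadm init phase upload-certs --upload-certs --config kubeadm-init.yaml | extract_cert_key)`
`kubeadm token create --print-join-command --certificate-key "$KEY"`
The printed command is then run on master2 with `--cri-socket unix:///run/containerd/containerd.sock` appended.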

Join the worker node (node1) to the cluster

[root@node1 ~]# kubeadm join 10.10.19.141:8443 --token t45zd2.f9njrzf3dn9w0j9y --discovery-token-ca-cert-hash sha256:e9df4c818e0e3d291b18529e80fcfbd3fb2651879c0985db2e97a235b5c04f64 --v=5 --cri-socket unix:///run/containerd/containerd.sock

# Unlike the master2 join command, do not add --control-plane or --certificate-key

*****Check cluster status*****

Check that the flannel network plugin is running

(base) [root@master1 shell]# kubectl get pods -n kube-flannel -o wide

NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
kube-flannel-ds-5qrcq   1/1     Running   0          16h   10.10.16.91   master1   <none>           <none>
kube-flannel-ds-5zpv9   1/1     Running   0          16h   10.10.16.93   node1     <none>           <none>
kube-flannel-ds-xrwqr   1/1     Running   0          16h   10.10.16.92   master2   <none>           <none>

The cluster is up and running:

(base) [root@master1 shell]# kubectl get nodes

NAME      STATUS   ROLES           AGE   VERSION
master1   Ready    control-plane   16h   v1.26.3
master2   Ready    control-plane   16h   v1.26.3
node1     Ready    <none>          16h   v1.26.3
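This check can also be scripted, for example while waiting for a newly joined node. The following sketch reads `kubectl get nodes --no-headers` output and succeeds only when every node is Ready. A cordoned node shows `Ready,SchedulingDisabled` and is counted as not ready here. The helper name is hypothetical:

```shell
# Hypothetical check: read "kubectl get nodes --no-headers" output on stdin;
# succeed only if there is at least one node and column 2 (STATUS) of every
# non-empty line is exactly "Ready".
all_nodes_ready() {
  awk 'NF { total++; if ($2 == "Ready") ready++ }
       END { exit !(total > 0 && ready == total) }'
}
```

For example: `kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`.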
