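After setting up a Python environment in an LXD or incus container on Debian/Ubuntu, a PyTorch install that had previously used the GPU inside the container suddenly could no longer use the graphics card. I could not tell whether the server or the container configuration was at fault. Even the most basic check of whether the GPU is usable produces the error in the title: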

```python
import torch

print(torch.cuda.is_available())

# Emits the following warning (and prints False):
# UserWarning: CUDA initialization: CUDA unknown error - this may be due to an
# incorrectly set up environment, e.g. changing env variable CUDA_VISIBLE_DEVICES
# after program start. Setting the available devices to be zero. (Triggered
# internally at /pytorch/c10/cuda/CUDAFunctions.cpp:109.)
#   return torch._C._cuda_getDeviceCount() > 0
```
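Because `torch.cuda.is_available()` only returns `False` and reports the cause as a warning, a script can easily miss why the GPU disappeared. The sketch below captures that warning so it can be detected explicitly. It uses only Python's standard `warnings` module. The helper names `call_and_capture_warnings` and `looks_like_cuda_unknown_error` are hypothetical. Matching on the substring "CUDA unknown error" is an assumption based on the message shown above.

```python
import warnings
from typing import Any, Callable, List, Tuple


def call_and_capture_warnings(fn: Callable[[], Any]) -> Tuple[Any, List[str]]:
    """Call fn() and return (its result, the messages of all warnings it emitted)."""
    with warnings.catch_warnings(record=True) as caught:
        # Record every warning, including ones that would normally be suppressed
        # after their first occurrence.
        warnings.simplefilter("always")
        result = fn()
    return result, [str(w.message) for w in caught]


def looks_like_cuda_unknown_error(messages: List[str]) -> bool:
    """Hypothetical check: True if any captured message mentions 'CUDA unknown error'."""
    return any("CUDA unknown error" in m for m in messages)
```

Usage would be something like `ok, msgs = call_and_capture_warnings(torch.cuda.is_available)`, followed by `looks_like_cuda_unknown_error(msgs)` when `ok` is `False`. The `torch` import is left out of the block so it runs without PyTorch installed.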

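`nvidia-smi`, however, still ran normally. I searched around online and tried the usual suggestions, including reinstalling the driver, but none of them helped. In an issue on the official PyTorch repository (https://github.com/pytorch/pytorch/issues/49081), though, I found an analysis that turned out to be workable: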

> I've recently ran into this error when migrating my gpu containers from nvidia docker to podman. The root cause for me was that /dev/nvidia-uvm, /dev/nvidia-uvm-tools files were missing that CUDA apparently needs to work. Check that you have them on both host and container/VM if you use containers. On the host, running sudo nvidia-modprobe -c 0 -u should fix it.

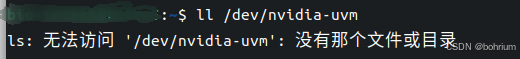

只要是基于容器使用显卡资源,都要留意一下服务/dev下有没有nvidia-uvm和nvidia-uvm-tools,而我出问题的容器所在的服务器,是缺少这些文件的:
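The check described above can be scripted. The following is a minimal sketch that lists which of the two device nodes named in the quoted issue are absent. The helper name `missing_uvm_nodes` and its `dev_dir` parameter are hypothetical; the parameter only exists so the function can be pointed at a directory other than `/dev`. As the issue suggests, it is worth running on both the host and inside the container.

```python
import os
from typing import List

# The two device nodes the quoted PyTorch issue says CUDA needs.
UVM_NODES = ("nvidia-uvm", "nvidia-uvm-tools")


def missing_uvm_nodes(dev_dir: str = "/dev") -> List[str]:
    """Return the names from UVM_NODES that do not exist under dev_dir."""
    return [
        name for name in UVM_NODES
        if not os.path.exists(os.path.join(dev_dir, name))
    ]
```

An empty list means both nodes are present. On the server described here, the call would have returned both names.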

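So, following the advice above, run this command in a terminal on the host:

```shell
sudo nvidia-modprobe -c 0 -u
```

Then run `ll` on the files mentioned above; they now show up in their expected location under `/dev`. After that, restarting the container resolves the problem.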
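To confirm the fix from a script rather than by eye, one option is to check that the nodes exist as character devices. In `ll` output, character devices show a leading `c` in the mode column. This is a minimal sketch using the standard `os` and `stat` modules. The helper names `is_char_device` and `uvm_nodes_ready` are hypothetical. It assumes that, after restarting the container, the nodes are expected inside the container under the same `/dev` paths as on the host.

```python
import os
import stat


def is_char_device(path: str) -> bool:
    """True if path exists, is accessible, and is a character device node."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        # Missing or inaccessible paths count as "not ready".
        return False
    return stat.S_ISCHR(mode)


def uvm_nodes_ready(dev_dir: str = "/dev") -> bool:
    """Hypothetical check: both nvidia-uvm nodes exist under dev_dir as character devices."""
    return all(
        is_char_device(os.path.join(dev_dir, name))
        for name in ("nvidia-uvm", "nvidia-uvm-tools")
    )
```

A regular file with the right name would not pass this check, which is the point: the nodes must be real device files created by the driver tooling.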
