Notes on three papers I read on sequential recommendation and click-through rate (CTR) prediction:

- Long and Short-Term Recommendations with Recurrent Neural Networks

- Deep Interest Evolution Network for Click-Through Rate Prediction

- Adaptive User Modeling with Long and Short-Term Preferences for Personalized Recommendation

Benefits of using a user's sequence information for recommendation:

- Using sequence information helps compensate for the scarcity of recorded interactions between users and items.

- The sequence of a user's actions holds a lot of information: it can reveal the evolution of the user's taste, and it can help identify which items have become irrelevant to the user's current interests or which items belong to a vanishing interest.

Long and Short-Term Recommendations with Recurrent Neural Networks (UMAP 2017)

code: https://github.com/rdevooght/sequence-based-recommendations

data: http://iridia.ulb.ac.be/~rdevooght/rnn_cf_data.zip

Motivation:

- Characterize the full short-term/long-term profile of many collaborative filtering methods, and show how recurrent neural networks can be steered towards better short-term or long-term predictions.

- Show that RNNs are suited not only to session-based collaborative filtering but also to collaborative filtering on dense datasets, where they outperform traditional item recommendation algorithms.

Contributions of the paper:

• We introduce a practical visualization of the short-term/long-term profile of any recommender system and use it to compare several algorithms.

• We show how to modify the RNN to find a good trade-off between long and short-term predictions.

• We explore the relationship between short-term predictions and diversity.

How the paper defines long-term and short-term predictions:

Long-term predictions aim to identify which items the user will consume eventually, without regard for when exactly they will be consumed.

Short-term predictions should accurately predict the immediate behavior of the user: what they will consume soon and, in the extreme case, what they will consume next.

Method:

The input is the one-hot encoding of the current item.

The final output is a fully connected layer with one neuron for each item in the catalog.

The k items whose neurons are activated the most are used as the k recommendations.
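The pipeline above (one-hot input, recurrent state, one output neuron per item, top-k selection) can be sketched in a few lines. This is only an illustration under assumptions: a plain tanh recurrent cell stands in for the gated cells an actual implementation would use, the weights are random rather than trained, and the names `rnn_step`, `recommend`, and `random_matrix` are made up for this sketch.

```python
import math
import random


def one_hot(item, n_items):
    """One-hot encoding of an item index."""
    vec = [0.0] * n_items
    vec[item] = 1.0
    return vec


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def rnn_step(x, h, w_in, w_rec):
    """Plain recurrent step (assumption): h' = tanh(W_in x + W_rec h)."""
    pre = [a + b for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
    return [math.tanh(p) for p in pre]


def recommend(sequence, k, w_in, w_rec, w_out):
    """Feed the item sequence to the RNN and return the k highest-scoring items."""
    n_items = len(w_out)
    h = [0.0] * len(w_rec)
    for item in sequence:
        h = rnn_step(one_hot(item, n_items), h, w_in, w_rec)
    scores = matvec(w_out, h)  # fully connected output, one neuron per item
    ranked = sorted(range(n_items), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def random_matrix(rows, cols, rng):
    """Untrained random weights, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_items, hidden = 6, 4
w_in = random_matrix(hidden, n_items, rng)
w_rec = random_matrix(hidden, hidden, rng)
w_out = random_matrix(n_items, hidden, rng)
top3 = recommend([2, 5, 1], 3, w_in, w_rec, w_out)
```

With trained weights, `top3` would be the three recommendations for a user whose history is items 2, 5, 1.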

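Objective functions:

Categorical cross-entropy (CCE): $\mathrm{CCE}(o, i) = -\log(\mathrm{softmax}(o)_i)$, where $o$ is the output vector and $i$ is the correct item.

Hinge: based on the independent comparison of the output of each item against a fixed threshold.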
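As a small worked example of the cross-entropy objective, the loss is the negative log of the softmax probability assigned to the correct item. The function names below are hypothetical, and the max-subtraction is just the usual trick for numerical stability.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of output activations."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cce(scores, target):
    """Categorical cross-entropy: -log(softmax(scores)[target])."""
    return -math.log(softmax(scores)[target])


uniform_loss = cce([0.0, 0.0], 0)          # two equally likely items
confident_loss = cce([5.0, 0.0, 0.0], 0)   # correct item has the largest score
```

The loss shrinks as the output neuron of the correct item grows relative to the others, which is what pushes that item towards the top of the ranking.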

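Training procedure:

A training instance is produced by randomly cutting the sequence of a user's interactions into two parts; the first part is fed to the RNN, and the first item of the second part is used as the correct item in the computation of the objective function. (This differs from the way the paper describes splitting the data into training, validation, and test sets. What puzzles me: if training works this way, why split the dataset by user instead of cutting each user's sequence directly? I will read the code later to settle this.)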
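The random cut described above could look like the following. It is a sketch, not the authors' code: `make_training_instance` is a hypothetical name, and the cut position is assumed to be uniform over all positions that leave both parts non-empty.

```python
import random


def make_training_instance(sequence, rng):
    """Cut a user's sequence at a random point.

    Returns (prefix, target): the prefix is fed to the RNN and the target
    is the first item of the second part.
    """
    if len(sequence) < 2:
        raise ValueError("need at least two interactions to cut a sequence")
    cut = rng.randint(1, len(sequence) - 1)  # randint is inclusive on both ends
    return sequence[:cut], sequence[cut]


rng = random.Random(42)
history = ["m1", "m2", "m3", "m4", "m5"]
prefix, target = make_training_instance(history, rng)
```

Calling the function repeatedly on the same user yields different prefix/target pairs, so one sequence can supply many training instances.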

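Datasets:

MovieLens and Netflix: a sequence is built for each user from the rating timestamps, and the task is to predict which movies the user will rate next given the movies already rated.

RSC15 is a session-based dataset: each sequence is one session, sequences are usually short, and the median length is 3.

How the datasets are split:

Split by user.

N users are chosen at random and their interaction sequences form the test set, another N users are chosen for the validation set, and the remaining users form the training set.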
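A user-level split of this kind can be sketched as follows. The function name `split_by_user`, the fixed seed, and the toy data are assumptions for illustration; the point is that a user's whole sequence goes to exactly one of the three sets.

```python
import random


def split_by_user(user_sequences, n_holdout, seed=0):
    """Put N random users in the test set, N others in validation, the rest in training."""
    users = sorted(user_sequences)
    if 2 * n_holdout >= len(users):
        raise ValueError("not enough users left for the training set")
    random.Random(seed).shuffle(users)
    test_users = users[:n_holdout]
    val_users = users[n_holdout:2 * n_holdout]
    train_users = users[2 * n_holdout:]

    def pick(ids):
        return {u: user_sequences[u] for u in ids}

    return pick(train_users), pick(val_users), pick(test_users)


data = {f"u{i}": [i, i + 1, i + 2] for i in range(10)}
train_set, val_set, test_set = split_by_user(data, n_holdout=2)
```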

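Results:

Evaluation metrics:

The sps metric appears to be defined by the authors themselves; it shows that the proposed method is better suited to short-term recommendation.

The experiments show that a higher sps tends to go with a higher item coverage, which is why the authors go on to explore how the method's recommendations relate to recommendation diversity.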
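To make the two metrics concrete, here is a sketch based on my reading of them, which these notes do not spell out and which should be checked against the paper: sps is assumed to be 1 when the user's actual next item appears in the top-k recommendations and 0 otherwise, and item coverage is assumed to be the number of distinct items that were recommended correctly across all users. The function names and toy data are hypothetical.

```python
def sps(recommendations, next_item):
    """Short-term prediction success (assumed definition): is the next item in the top-k?"""
    return 1.0 if next_item in recommendations else 0.0


def item_coverage(all_recommendations, all_future_items):
    """Item coverage (assumed definition): distinct items that were correctly recommended."""
    correct = set()
    for recs, future in zip(all_recommendations, all_future_items):
        correct.update(set(recs) & set(future))
    return len(correct)


# One row per test user: top-3 recommendations and the items actually consumed afterwards.
recs = [[1, 2, 3], [4, 5, 6], [1, 7, 8]]
futures = [[3, 9], [10, 11], [1, 8, 12]]

mean_sps = sum(sps(r, f[0]) for r, f in zip(recs, futures)) / len(recs)
coverage = item_coverage(recs, futures)
```

Under these assumed definitions, a method that always recommends the same popular items can score well on accuracy while covering few distinct items, which is one way to see why the two numbers are reported together.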

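The figure in the paper shows how each method performs on short-term versus long-term prediction.

Because the RNN is better suited to short-term recommendation, the authors use three techniques to balance it against long-term recommendation:

Dropout / Shuffle Sequences / Multiple Targets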

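Summary:

The RNN performs very well on short-term recommendation and also recommends a more diverse set of items. Introducing noise helps long-term recommendation. The method also applies to sparse data. In this paper, the authors get their best results with the cross-entropy objective.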

Deep Interest Evolution Network for Click-Through Rate Prediction (AAAI 2019)

code: https://github.com/mouna99/dien

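Click-through rate (CTR): the probability that a user clicks on an item.

Motivation:

Existing work on CTR prediction usually takes the user's behaviors directly as the interest representation and does not model the latent interest behind those discrete behaviors. Few works consider how a user's interest changes, even though capturing dynamic interest matters for the interest representation. The paper therefore proposes DIEN:

- An interest extractor layer captures temporal latent interests from the user's historical behavior sequence.

- An interest evolving layer captures how the user's interest evolves relative to the target item.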

Contributions:

• We focus on the interest evolving phenomenon in e-commerce systems, and propose a new network structure to model the interest evolving process. The model for interest evolution leads to a more expressive interest representation and more precise CTR prediction.

• Different from taking behaviors as interests directly, we specially design an interest extractor layer. Addressing the problem that the hidden state of a GRU is less targeted for interest representation, we propose an auxiliary loss. The auxiliary loss uses consecutive behavior to supervise the learning of the hidden state at each step, which makes the hidden state expressive enough to represent latent interest.

• We design a novel interest evolving layer, where a GRU with attentional update gate (AUGRU) strengthens the effect of interests relevant to the target item and overcomes the inference from interest drifting.

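Method:

Bottom layer: all features are represented as one-hot vectors.

Embedding layer: converts the one-hot vectors into low-dimensional dense vectors.

Interest Extractor Layer: a GRU models the dependencies between behaviors; its input is the representation of each step in the sequence. To make the GRU hidden states capture the user's interest better, the authors add an auxiliary loss. The next behavior in the user's sequence serves as the positive example, a sampled item (drawn at random from the items other than the one the user clicked at that step) serves as the negative example, and together they supervise the current hidden state. (The hidden state of a plain GRU only captures dependencies between behaviors and does not represent interest well. In addition, the negative log-likelihood objective commonly used for CTR prediction only supervises the final interest prediction, so the intermediate hidden states receive no effective supervision.)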

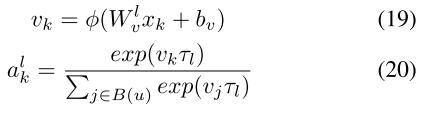
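The auxiliary supervision can be sketched for a single behavior sequence as below. This is an illustration under assumptions, not the released implementation: the score between a hidden state and an embedding is taken to be the sigmoid of their inner product, the loss is averaged over the steps of one sequence, and `auxiliary_loss` and the toy vectors are made up for the example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def auxiliary_loss(hidden_states, pos_embeddings, neg_embeddings):
    """Hidden state at step t is supervised by the behavior at step t+1.

    pos_embeddings[t + 1] is the embedding of the item actually clicked next;
    neg_embeddings[t + 1] is the embedding of a sampled item that was not clicked.
    """
    steps = len(hidden_states) - 1
    if steps < 1:
        raise ValueError("need at least two steps")
    loss = 0.0
    for t in range(steps):
        pos = sigmoid(dot(hidden_states[t], pos_embeddings[t + 1]))
        neg = sigmoid(dot(hidden_states[t], neg_embeddings[t + 1]))
        loss -= math.log(pos) + math.log(1.0 - neg)
    return loss / steps


h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
clicked = [[1.0, 0.0], [2.0, 0.0], [0.0, 2.0]]
sampled = [[0.0, 1.0], [-2.0, 0.0], [0.0, -2.0]]

aligned_loss = auxiliary_loss(h, clicked, sampled)
swapped_loss = auxiliary_loss(h, sampled, clicked)
```

The loss is small when each hidden state points towards the next clicked item and away from the sampled negative, and large when the roles are swapped.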

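Interest Evolving Layer: combines the sequence-learning ability of a GRU with attention to capture the relevance to the target ad.

Here $h_t$ is the hidden state from the interest extractor layer and $e_a$ is the embedding of the target ad.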
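A sketch of how the attention scores over the extracted interests might be computed. It assumes a bilinear score $h_t W e_a$ normalized with a softmax over the time steps, which is how I read the paper; the matrix `w`, the function name, and the toy values are hypothetical.

```python
import math


def attention_scores(hidden_states, w, target_emb):
    """Softmax over time of h_t W e_a; w has shape (hidden_dim, emb_dim)."""
    projected = [sum(wij * ej for wij, ej in zip(row, target_emb)) for row in w]  # W e_a
    logits = [sum(hi * pi for hi, pi in zip(h, projected)) for h in hidden_states]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


hidden_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w = [[1.0, 0.0], [0.0, 1.0]]
target_emb = [2.0, 0.0]
scores = attention_scores(hidden_states, w, target_emb)
```

In this toy case the first interest state is the one most aligned with the target ad, so it receives the largest score.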

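AIGRU:

$i'_t$ is the input to the GRU in this layer. In AIGRU, the scale of less related interests can be reduced by the attention score.

AGRU (attention-based GRU):

First used in question answering, AGRU uses the attention score to change the internal structure of the GRU. Here the authors replace the update gate in the hidden-state formula with the attention score.

AUGRU:

AGRU brings in the attention score as a scalar, which ignores the fact that different dimensions differ in importance. The authors therefore propose the attentional update gate.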
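The difference between the three variants can be shown on a single update step. This sketch takes the candidate state and the ordinary update gate as given instead of computing them from GRU weights, and the function names are made up; the update rules follow my reading of the description above.

```python
def aigru_input(h, a):
    """AIGRU: scale the GRU input by the attention score."""
    return [a * x for x in h]


def agru_update(h_prev, h_cand, a):
    """AGRU: the scalar attention score replaces the update gate."""
    return [(1.0 - a) * p + a * c for p, c in zip(h_prev, h_cand)]


def augru_update(h_prev, h_cand, update_gate, a):
    """AUGRU: the attention score rescales the update gate, one value per dimension."""
    scaled = [a * u for u in update_gate]
    return [(1.0 - u) * p + u * c for u, p, c in zip(scaled, h_prev, h_cand)]


h_prev = [0.2, -0.4]
h_cand = [1.0, 1.0]
update_gate = [0.5, 1.0]

unchanged = augru_update(h_prev, h_cand, update_gate, a=0.0)
partial = augru_update(h_prev, h_cand, update_gate, a=0.5)
replaced = agru_update(h_prev, h_cand, a=1.0)
```

With an attention score of 0 the hidden state is left untouched, so an interest unrelated to the target item does not disturb the evolving state, while the per-dimension gate in AUGRU still lets each dimension move by a different amount.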

Loss functions:

Auxiliary loss:

Overall loss:
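The formulas for these two headings did not survive in these notes. As I recall them from the DIEN paper (worth checking against the original), with $h_t^i$ the hidden state of user $i$ at step $t$, $e_b^i[t+1]$ the embedding of the next clicked item, $\hat{e}_b^i[t+1]$ the embedding of the sampled negative, $\sigma(\cdot,\cdot)$ the sigmoid of the inner product, $p(x)$ the predicted click probability, and $\alpha$ a hyperparameter that balances the two terms:

```latex
L_{aux} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{t}
  \Big[ \log \sigma\big(h_t^i,\, e_b^i[t+1]\big)
      + \log\big(1 - \sigma\big(h_t^i,\, \hat{e}_b^i[t+1]\big)\big) \Big]

L_{target} = -\frac{1}{N} \sum_{(x, y) \in \mathcal{D}}
  \Big[ y \log p(x) + (1 - y) \log\big(1 - p(x)\big) \Big]

L = L_{target} + \alpha \, L_{aux}
```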

Datasets:

On the public dataset, the goal is to use the first T-1 behaviors to predict whether the user will write the T-th review.

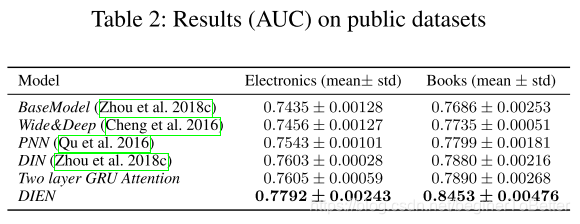

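Experiments:

Summary:

The interest extractor layer, with its hidden states supervised by the auxiliary loss, learns the user's interest better.

The interest evolving layer uses a GRU with an attentional update gate to model how the user's interest evolves relative to the target item. This avoids the effect of interest drift while still capturing the user's interest, which improves CTR prediction.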

Adaptive User Modeling with Long and Short-Term Preferences for Personalized Recommendation

code: https://github.com/zepingyu0512/sli_rec

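Motivation: earlier work uses RNNs to model the user's short-term preference and overlooks how the combination of short-term and long-term preferences affects the user. In this paper the authors use an attention-based framework to combine long-term and short-term preferences dynamically for recommendation. For the short-term preference they also modify the traditional RNN in two ways: (1) a time-based controller and (2) a content-based controller.

Method:

Short-term preference modeling:

The original LSTM:

Adding a time gate:

Content-based controller:

Long-term preference modeling:

Adaptive fusion of long-term and short-term preferences: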
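The fusion formula itself is not reproduced in these notes, so the following is only a generic sketch of adaptive fusion and not the paper's exact formulation. It assumes a sigmoid gate computed from the concatenated long-term and short-term vectors; `fuse`, `gate_weights`, and `gate_bias` are hypothetical names.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def fuse(long_pref, short_pref, gate_weights, gate_bias):
    """Blend long-term and short-term preference vectors with a learned gate (assumed form)."""
    features = long_pref + short_pref  # list concatenation
    alpha = sigmoid(sum(w * f for w, f in zip(gate_weights, features)) + gate_bias)
    fused = [alpha * l + (1.0 - alpha) * s for l, s in zip(long_pref, short_pref)]
    return fused, alpha


long_pref = [1.0, 0.0]
short_pref = [0.0, 1.0]
fused, alpha = fuse(long_pref, short_pref, gate_weights=[0.0, 0.0, 0.0, 0.0], gate_bias=0.0)
```

Because the gate depends on the inputs, the balance between long-term and short-term preference can change from one prediction to the next, which is the sense in which the fusion is adaptive.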

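Loss function:

Datasets:

Goal: given the user's previous T behaviors, predict behavior T+1.

Experiments:

Summary:

The user's short-term and long-term preferences are combined dynamically.

Time-based and content-based controllers modify the LSTM so that it fits user modeling in this setting better.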

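This post covers three papers on sequential recommendation and CTR prediction: long-term and short-term recommendation with RNNs, capturing the evolution of user interest with the DIEN model, and a personalized recommendation method that combines long-term and short-term preferences.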
