Skills covered: regular expressions, requests, tqdm, BeautifulSoup4, PyQuery, celery, redis

1. requests fetches the HTML pages

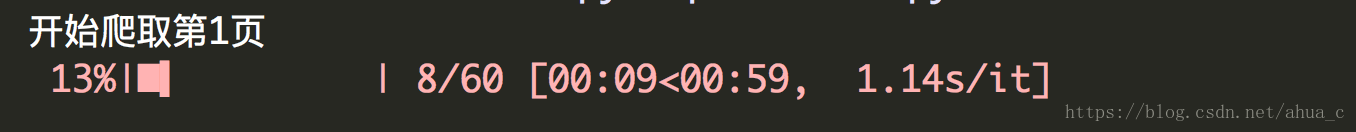

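2. tqdm shows a progress bar while the program runs

3. beautifulsoup4 and PyQuery parse the HTML pages and extract the useful information

4. celery sends the emails asynchronously

5. redis stores the tasks that celery needs to execute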

The code for fetching and parsing the HTML is as follows:

    # -*- coding: utf-8 -*-
    import re
    import time
    from urllib.parse import urlencode

    import requests
    from tqdm import tqdm
    from bs4 import BeautifulSoup
    from pyquery import PyQuery

    from celery_tasks.email import tasks as email


    def get_main_html(city, word, page):
        '''Fetch the HTML source of a search results page.'''
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
            'Host': 'sou.zhaopin.com',
            'Referer': 'https://www.zhaopin.com/',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            'Accept-Encoding': 'gzip, deflate, br',
            'Accept-Language': 'zh-CN,zh;q=0.9'
        }
        data = {
            'jl': city,     # city to search in
            'kw': word,     # search keyword
            'isadv': 0,     # whether to open the advanced search options
            'isfilter': 1,  # whether to filter the results
            'p': page       # page number
        }
        url = 'https://sou.zhaopin.com/jobs/searchresult.ashx?' + urlencode(data)
        response = requests.get(url, headers=headers)
        return response.text


    def get_detail_url(html):
        '''Extract the detail page URLs from the search results HTML.'''
        soup = BeautifulSoup(html, 'lxml')
        a_list = soup.find_all(attrs={'style': 'font-weight: bold'})
        detail_url_list = []
        # Collect the href attribute of each detail link
        for a in a_list:
            detail_url_list.append(a.attrs['href'])
        return detail_url_list


    def get_detail_html(url):
        '''Fetch the HTML source of a detail page.'''
        headers = {
            'Host': 'jobs.zhaopin.com',
            'Upgrade-Insecure-Requests': '1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.9'
        }
        response = requests.get(url, headers=headers)
        return response.text


    def handle_detail_html(html, url):
        '''Parse the detail page HTML and extract the useful information.'''
        employment_info = {}
        soup = BeautifulSoup(html, 'lxml')
        try:
            # Job title
            title_content = soup.find(attrs={'class': 'inner-left'}).find('h1').string
            main_html = soup.find(attrs={'class': 'tab-inner-cont'})
            digest_html = soup.find(attrs={'class': 'terminal-ul'})
            doc_digest = PyQuery(str(digest_html))
            doc_content = PyQuery(str(main_html))
            digest_content = doc_digest.text()
            # Main content, with runs of "=" removed
            main_content = re.sub(r'=+', '', doc_content.text())
            # Put the extracted information into the dict
            employment_info['title'] = title_content
            employment_info['digest'] = digest_content
            employment_info['main'] = main_content
            employment_info['url'] = url
        except Exception:
            # The page did not have the expected layout; return an empty dict
            pass
        return employment_info


    def send_email(employment_info):
        '''Hand the job to celery, which sends it to your own mailbox.'''
        time.sleep(2)
        email.task_send_email.delay(employment_info)


    def save_url(url):
        '''Record url in the already_url file; return True if it was already there.'''
        with open('already_url', 'a+') as f:
            f.seek(0)
            if url in f.read().split('|'):
                return True
            f.write(url)
            f.write('|')
        return False


    def spider(city, word, page):
        '''Main control function.'''
        html = get_main_html(city, word, page)
        urlList = get_detail_url(html)
        for url in tqdm(urlList):
            # Skip URLs that have already been crawled
            if save_url(url):
                continue
            html = get_detail_html(url)
            employment_info = handle_detail_html(html, url)
            # Only send an email when the page could be parsed
            if employment_info:
                send_email(employment_info)
        print('Finished crawling page %s' % (page + 1))


    if __name__ == '__main__':
        while True:
            for i in range(3):
                print('Start crawling page %s' % (i + 1))
                spider('上海', 'python', i)
                time.sleep(1)
            # Check again in an hour
            time.sleep(3600)
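To see what `get_main_html` actually requests, the query string can be built on its own. This is a minimal sketch using only the standard library. The helper name `build_search_url` is hypothetical and not part of the original script, and the endpoint is simply the one used above, which the site may no longer serve.

```python
from urllib.parse import urlencode

BASE_URL = 'https://sou.zhaopin.com/jobs/searchresult.ashx?'


def build_search_url(city, word, page):
    """Hypothetical helper: build the search URL the same way get_main_html does."""
    params = {
        'jl': city,     # city to search in
        'kw': word,     # search keyword
        'isadv': 0,     # advanced search options off
        'isfilter': 1,  # filter the results
        'p': page,      # page number
    }
    # urlencode percent-encodes non-ASCII values as UTF-8
    return BASE_URL + urlencode(params)


if __name__ == '__main__':
    print(build_search_url('上海', 'python', 1))
```

Printing the URL before sending any request makes it easy to paste it into a browser and check that the parameters still mean what the script assumes.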

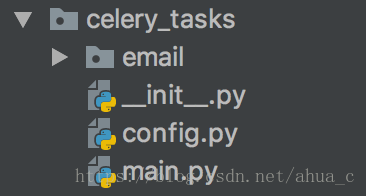

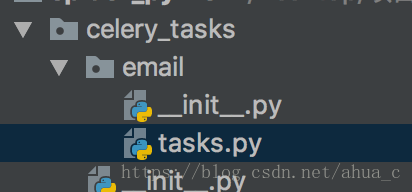
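The `save_url` function in the script re-reads the whole `already_url` file for every URL. One possible alternative, sketched here under assumptions of my own, loads the file once into a set and appends new entries one per line. The class name `SeenUrls` and the one-URL-per-line format are hypothetical and differ from the `|`-separated file the script writes.

```python
import os
import tempfile


class SeenUrls:
    """Hypothetical helper: remember crawled URLs in a text file, one per line."""

    def __init__(self, path):
        self.path = path
        self.urls = set()
        # Load URLs recorded by earlier runs, if the file exists
        if os.path.exists(path):
            with open(path, encoding='utf-8') as f:
                self.urls = {line.strip() for line in f if line.strip()}

    def add(self, url):
        """Return True if url was already recorded; otherwise record it and return False."""
        if url in self.urls:
            return True
        self.urls.add(url)
        with open(self.path, 'a', encoding='utf-8') as f:
            f.write(url + '\n')
        return False


if __name__ == '__main__':
    # Use a temporary directory so the demo does not touch a real already_url file
    path = os.path.join(tempfile.mkdtemp(), 'already_url')
    seen = SeenUrls(path)
    print(seen.add('https://jobs.zhaopin.com/a.htm'))  # first time
    print(seen.add('https://jobs.zhaopin.com/a.htm'))  # second time
```

The return value follows the same convention as `save_url` (True means "already crawled"), so the `if save_url(url): continue` check in `spider` could call `seen.add(url)` instead.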

Configure celery as follows:

config.py configures where the asynchronous tasks are stored

    result_backend = 'redis://172.16.14.128:6379/2'
    broker_url = 'redis://172.16.14.128:6379/1'
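The two settings point at the same redis server and differ only in the database number at the end of the URL: database 1 holds the queued tasks (broker) and database 2 holds the results. A small standard-library sketch makes the parts explicit; `describe_redis_url` is a hypothetical helper for illustration, not something celery provides.

```python
from urllib.parse import urlparse


def describe_redis_url(url):
    """Hypothetical helper: split a redis:// URL into host, port and database number."""
    parts = urlparse(url)
    return {
        'host': parts.hostname,
        'port': parts.port,
        # The path is "/<db>"; treat a missing path as database 0
        'db': int(parts.path.lstrip('/') or 0),
    }


if __name__ == '__main__':
    print(describe_redis_url('redis://172.16.14.128:6379/1'))  # broker
    print(describe_redis_url('redis://172.16.14.128:6379/2'))  # result backend
```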

main is the startup file

    from celery import Celery

    # Create the celery application
    app = Celery('爬虫')
    # Load the celery configuration
    app.config_from_object('celery_tasks.config')
    # Automatically register the celery tasks
    app.autodiscover_tasks(['celery_tasks.email'])

tasks under email

    from celery_tasks.main import app
    import smtplib
    import email.mime.multipart
    import email.mime.text


    @app.task(name='task_send_email')
    def task_send_email(employment_info):
        msg = email.mime.multipart.MIMEMultipart()
        msgFrom = '****@163.com'    # 163 mailbox with SMTP enabled
        msgTo = '****@qq.com'       # mailbox that receives the email
        smtpSever = 'smtp.163.com'  # SMTP server address of 163 mail
        smtpPort = 25               # open port
        sqm = '****'                # 163 authorization code, not the account password

        msg['from'] = msgFrom
        msg['to'] = msgTo
        msg['subject'] = 'Job opening - %s' % employment_info.get('title', None)
        content = '''
        Job opening - %s:
        Summary: %s
        Description: %s
        URL: %s
        ''' % (employment_info.get('title', None),
               employment_info.get('digest', None),
               employment_info.get('main', None),
               employment_info.get('url', None))
        txt = email.mime.text.MIMEText(content)
        msg.attach(txt)

        # smtplib: connect (connect to the mail server), login (authenticate),
        # sendmail (send the email)
        smtp = smtplib.SMTP()
        smtp.connect(smtpSever, smtpPort)
        smtp.login(msgFrom, sqm)
        smtp.sendmail(msgFrom, msgTo, str(msg))
        smtp.quit()
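Before wiring the task to a real SMTP account, it can help to build the message on its own and inspect it. The sketch below uses only the standard library and sends nothing. `build_message` is a hypothetical helper, the English subject and body wording are stand-ins, and the sample data and addresses are made up.

```python
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(employment_info, sender, recipient):
    """Hypothetical helper: build the notification email without sending it."""
    msg = MIMEMultipart()
    msg['from'] = sender
    msg['to'] = recipient
    msg['subject'] = 'Job opening - %s' % employment_info.get('title')
    body = 'Summary: %s\nDescription: %s\nURL: %s\n' % (
        employment_info.get('digest'),
        employment_info.get('main'),
        employment_info.get('url'),
    )
    msg.attach(MIMEText(body))
    return msg


if __name__ == '__main__':
    sample = {  # made-up sample data, not a real posting
        'title': 'Python developer',
        'digest': 'Shanghai, full time',
        'main': 'Build and maintain crawlers.',
        'url': 'https://jobs.zhaopin.com/example.htm',
    }
    msg = build_message(sample, 'sender@example.com', 'receiver@example.com')
    print(msg.as_string())
```

Keeping message construction separate from the SMTP calls means the formatting can be checked without a network connection or an authorization code.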

Guide to configuring SMTP for a 163 mailbox: https://jingyan.baidu.com/article/7f41ecec3e8d35593d095c93.html

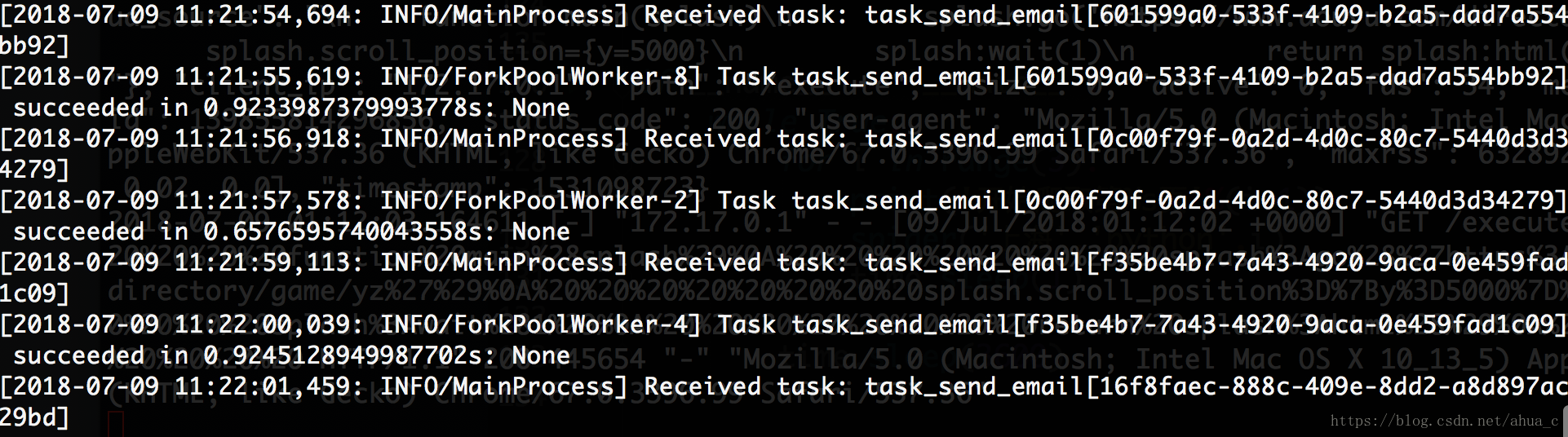

Once everything above is configured, run the following command from the directory that contains celery_tasks:

celery -A celery_tasks.main worker -l info

A successful start looks like this:

    (py3_spider) ahuadeMBP:spider_pyfile ahua$ celery -A celery_tasks.main worker -l info
    -------------- celery@ahuadeMBP v4.2.0 (windowlicker)
    ---- **** -----
    --- * *** * -- Darwin-17.6.0-x86_64-i386-64bit 2018-07-09 11:20:44
    -- * - **** ---
    - ** ---------- [config]
    - ** ---------- .> app: 爬虫:0x10619dd68
    - ** ---------- .> transport: redis://172.16.14.128:6379/1
    - ** ---------- .> results: redis://172.16.14.128:6379/2
    - *** --- * --- .> concurrency: 8 (prefork)
    -- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)
    --- ***** -----
    -------------- [queues]
    .> celery exchange=celery(direct) key=celery

    [tasks]
    . task_send_email

    [2018-07-09 11:20:44,969: INFO/MainProcess] Connected to redis://172.16.14.128:6379/1
    [2018-07-09 11:20:44,977: INFO/MainProcess] mingle: searching for neighbors
    [2018-07-09 11:20:45,995: INFO/MainProcess] mingle: all alone
    [2018-07-09 11:20:46,016: INFO/MainProcess] celery@ahuadeMBP ready.

Finally, start the script as follows:

celery looks like this:

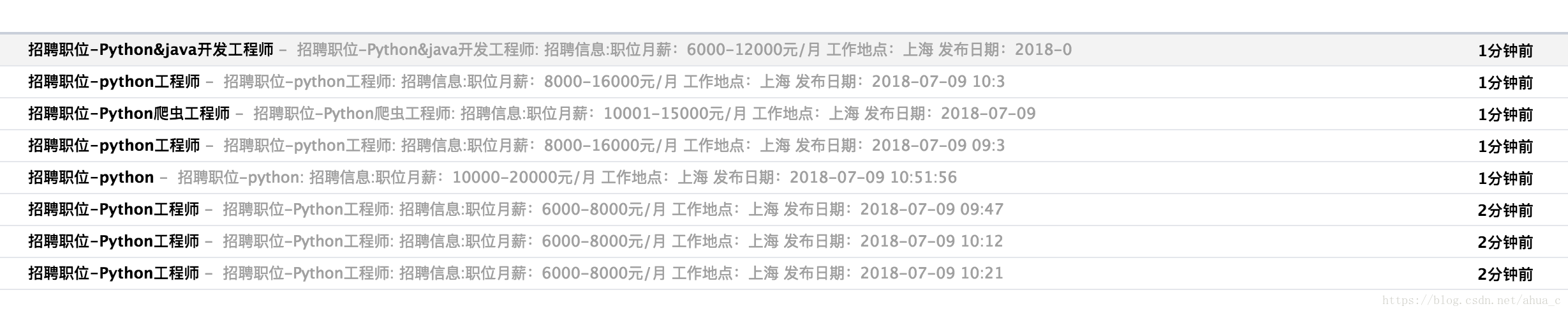

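The resulting email looks like this:

This article describes how to monitor job postings on Zhaopin (智联招聘) in real time with Python: requests fetches the pages, tqdm shows a progress bar, beautifulsoup4 and PyQuery parse the HTML, celery sends the emails asynchronously, and redis stores the tasks. The setup includes the SMTP settings for a 163 mailbox, the command for starting the celery worker, and its startup output.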
