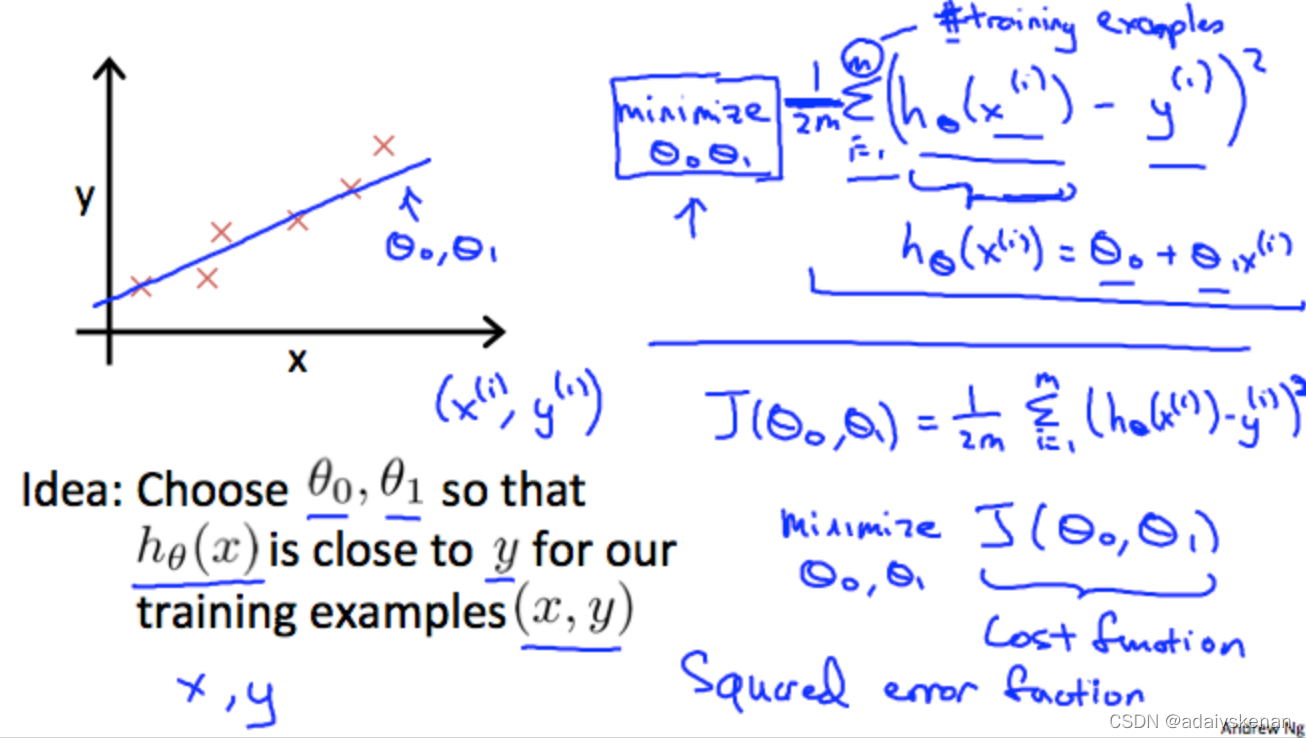

We can measure the accuracy of our hypothesis function by using a cost function. This takes an average difference (actually a fancier version of an average) of all the results of the hypothesis with inputs from x’s and the actual output y’s.

J(θ0,θ1)=12m∑i=1m(y^i−yi)2=12m∑i=1m(hθ(xi)−yi)2J(\theta_0, \theta_1)= \dfrac {1}{2m} \displaystyle \sum _{i=1}^m \left ( \hat{y}_{i}- y_{i} \right)^2 = \dfrac {1}{2m} \displaystyle \sum _{i=1}^m \left (h_\theta (x_{i}) - y_{i} \right)^2J(θ0,θ1)=2m1i=1∑m(y^i−yi)2=2m1i=1∑m(hθ(xi)−yi)2

To break it apart, it is 12xˉ\frac{1}{2} \bar{x}21xˉ where $ \bar{x}$ is the mean of the squares of hθ(xi)−yih_\theta (x_{i}) - y_{i}hθ(xi)−yi , or the difference between the predicted value and the actual value.

This function is otherwise called the “Squared error function”-平方误差函数, or “Mean squared error”-均方误差. The mean is halved (12)\left(\frac{1}{2}\right)(21) as a convenience for the computation of the gradient descent, as the derivative term of the square function will cancel out the 12\frac{1}{2}21 term. The following image summarizes what the cost function does:

本文介绍了如何通过平方误差函数(均方误差)衡量假设函数准确性,重点讲解了J(θ0,θ1)的计算公式,并阐述了它在预测值与实际值差异中的作用。此外,我们讨论了平方误差函数在梯度下降算法中的便利性,以及其在机器学习中的广泛应用。

本文介绍了如何通过平方误差函数(均方误差)衡量假设函数准确性,重点讲解了J(θ0,θ1)的计算公式,并阐述了它在预测值与实际值差异中的作用。此外,我们讨论了平方误差函数在梯度下降算法中的便利性,以及其在机器学习中的广泛应用。

1159

1159

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?