Machine environment

Ubuntu 22.04.4 LTS

IP 10.0.0.203/24

Hadoop 3.3.6

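The five steps of Hadoop configuration

(1) Create a hadoop user

(2) Install Java

(3) Set up SSH login

(4) Standalone installation and configuration

(5) Pseudo-distributed installation and configuration

Installing standalone Hadoop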

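1. Create a hadoop user (officially recommended, mandatory in production), or set environment variables so that Hadoop can be started as root (suitable only for single-machine test or learning environments)

Option 1: create a hadoop user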

adduser hadoop

passwd hadoop # set a password for the new user

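Option 2: run as root

Configure passwordless SSH login to the local machine for the root user

# Generate an SSH key if one does not exist yet

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

# Append the public key to the local authorized_keys

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# Test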

ssh localhost
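Step 1 mentions setting environment variables so that Hadoop can be started as root, but does not list them. The following is a minimal sketch, assuming every HDFS and YARN daemon should run as root on this single test machine. The Hadoop 3.x start scripts refuse to launch a daemon as root unless the matching `*_USER` variable is defined. Where to put these lines is an assumption: the end of etc/hadoop/hadoop-env.sh is one common place, and exporting them in the shell before running the start scripts should work as well.

```shell
# Assumption: all daemons run as root (test or learning machine only).
# Each variable names the user allowed to start the corresponding daemon.
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

With Option 1 (a dedicated hadoop user), these variables are not needed, because the daemons are not started as root.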

2. Install OpenJDK

Upload the .deb files to the /var/cache/apt/archives directory on the server.

Run sudo apt-get install openjdk-8-jdk to install it.

Binary directory: /usr/lib/jvm/java-8-openjdk-amd64/bin
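Before pointing Hadoop at the JDK, it can help to confirm that the directory above really contains one. A small sketch, assuming the install path shown in this post; `check_jdk` is a hypothetical helper name, not part of any package.

```shell
# check_jdk DIR: succeed if DIR looks like a JDK home,
# i.e. it contains executable bin/java and bin/javac.
check_jdk() {
  [ -x "$1/bin/java" ] && [ -x "$1/bin/javac" ]
}

# Path taken from this post; adjust it if your JDK lives elsewhere.
if check_jdk /usr/lib/jvm/java-8-openjdk-amd64; then
  echo "JDK found"
else
  echo "JDK not found at the expected path"
fi
```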

3. Download and install the Hadoop package

Hadoop download location

root@ubt-2204# tar xf hadoop-3.3.6.tar.gz -C /usr/local/

root@ubt-2204:/usr/local# cd /usr/local/

root@ubt-2204:/usr/local# chown -R hadoop:hadoop hadoop-3.3.6
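The rest of this post calls every Hadoop command by its full path. As an optional convenience, the install directory can be put on PATH. This is a sketch under the assumption that the archive was unpacked to /usr/local/hadoop-3.3.6 as above; `HADOOP_HOME` is the conventional variable name, and adding the lines to ~/.bashrc to make them permanent is a suggestion, not something the original setup did.

```shell
# Install directory used in this post.
export HADOOP_HOME=/usr/local/hadoop-3.3.6

# bin/ holds hadoop and hdfs; sbin/ holds the start and stop scripts.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```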

4. Edit hadoop-env.sh to point to the JDK installed on the system

- Open the configuration file:

root@ubt-2204:/usr/local/hadoop-3.3.6# vim /usr/local/hadoop-3.3.6/etc/hadoop/hadoop-env.sh

- Set the JAVA_HOME environment variable:

grep -En '^export' /usr/local/hadoop-3.3.6/etc/hadoop/hadoop-env.sh

55:export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

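- Apply the configuration to the current shell (the Hadoop scripts also read hadoop-env.sh on their own at startup)

source /usr/local/hadoop-3.3.6/etc/hadoop/hadoop-env.sh

- Verify the installation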

/usr/local/hadoop-3.3.6/bin/hadoop version

-------------------------------------------------------

root@ubt-2204:/usr/local/hadoop-3.3.6/bin# /usr/local/hadoop-3.3.6/bin/hadoop version

Hadoop 3.3.6

Source code repository https://github.com/apache/hadoop.git -r 1be78238728da9266a4f88195058f08fd012bf9c

Compiled by ubuntu on 2023-06-18T08:22Z

Compiled on platform linux-x86_64

Compiled with protoc 3.7.1

From source with checksum 5652179ad55f76cb287d9c633bb53bbd

This command was run using /usr/local/hadoop-3.3.6/share/hadoop/common/hadoop-common-3.3.6.jar
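The JAVA_HOME edit in step 4 can also be done without opening an editor. The function below is a sketch rather than an official procedure: `set_java_home` is a hypothetical helper, and it assumes GNU sed (for `-i`) and a JDK path without `|` or `&` characters.

```shell
# set_java_home FILE DIR: make FILE contain exactly one active line
# "export JAVA_HOME=DIR". An existing active line is rewritten in place;
# if there is none (e.g. it is commented out), a new line is appended.
set_java_home() {
  env_file=$1
  java_home=$2
  if grep -Eq '^export JAVA_HOME=' "$env_file"; then
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Example call with the paths used in this post:
# set_java_home /usr/local/hadoop-3.3.6/etc/hadoop/hadoop-env.sh /usr/lib/jvm/java-8-openjdk-amd64
```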

5. Test that Hadoop was installed correctly

cd /usr/local/hadoop-3.3.6

root@ubt-2204:/usr/local/hadoop-3.3.6# mkdir ./input

root@ubt-2204:/usr/local/hadoop-3.3.6# cp ./etc/hadoop/*.xml ./input

root@ubt-2204:/usr/local/hadoop-3.3.6# ./bin/hadoop jar /usr/local/hadoop-3.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.6.jar grep ./input/ ./output/ 'dfs[a-z.]+'

- The job produces the following output:

root@ubt-2204:/usr/local/hadoop-3.3.6# ll output/

total 20

drwxr-xr-x 2 root root 4096 Aug 4 14:09 ./

drwxr-xr-x 12 hadoop hadoop 4096 Aug 4 14:09 ../

-rw-r--r-- 1 root root 8 Aug 4 14:09 ._SUCCESS.crc

-rw-r--r-- 1 root root 12 Aug 4 14:09 .part-r-00000.crc

-rw-r--r-- 1 root root 0 Aug 4 14:09 _SUCCESS

-rw-r--r-- 1 root root 11 Aug 4 14:09 part-r-00000

root@ubt-2204:/usr/local/hadoop-3.3.6# cat ./output/*

1 dfsadmin
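The example job counts every match of the regular expression in the input files and writes one "count, match" line per distinct string, highest count first. To see what result to expect, a rough local equivalent can be built from standard tools. This is only an illustration of what the job computes, not how MapReduce runs it, and `local_grep_count` is a hypothetical name.

```shell
# local_grep_count DIR REGEX: count each distinct match of REGEX in
# DIR/*.xml and print "count<TAB>match", highest count first.
local_grep_count() {
  grep -ohE "$2" "$1"/*.xml \
    | sort \
    | uniq -c \
    | sort -rn \
    | awk '{ print $1 "\t" $2 }'
}

# Example call from the Hadoop directory used in this post:
# local_grep_count ./input 'dfs[a-z.]+'
```

Note that Hadoop refuses to write into an output directory that already exists, so ./output has to be removed before the example job is run a second time.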

Hadoop pseudo-distributed installation

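| Configuration file | Purpose |
|---|---|
| core-site.xml | Core configuration file; mainly defines the file access scheme, hdfs:// |
| hadoop-env.sh | Mainly sets the Java path |
| hdfs-site.xml | HDFS-related settings |
| mapred-site.xml | MapReduce-related settings |
| workers (named slaves in Hadoop 2.x) | Lists the worker nodes, i.e. which machines run DataNode and NodeManager |
| yarn-site.xml | Configures ResourceManager resource scheduling |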

1. For pseudo-distributed mode, the core-site.xml and hdfs-site.xml files need to be modified

- Edit core-site.xml

root@ubt-2204:/usr/local/hadoop-3.3.6# vim /usr/local/hadoop-3.3.6/etc/hadoop/core-site.xml

<configuration>

<!-- Address of the NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<!-- Directory where Hadoop stores the files it generates at run time -->

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

</property>

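<!-- Maximum interval between two checkpoints, in seconds (fs.checkpoint.period is the deprecated name of dfs.namenode.checkpoint.period) -->

<property>

<name>fs.checkpoint.period</name>

<value>3600</value>

</property>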

</configuration>

- Edit hdfs-site.xml

root@ubt-2204:/usr/local/hadoop-3.3.6/etc/hadoop# vim /usr/local/hadoop-3.3.6/etc/hadoop/hdfs-site.xml

<configuration>

<!-- Number of replicas HDFS keeps for each block -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- Storage location of the NameNode metadata -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

</property>

<!-- Storage location of the DataNode data -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

</property>

</configuration>
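After editing the two files, it is worth reading the values back before going further. When Hadoop is runnable, `./bin/hdfs getconf -confKey fs.defaultFS` gives the value Hadoop itself resolves. As a rough fallback that needs no JVM, the sketch below pulls a value straight from a site file. It assumes each `<name>` and `<value>` element sits on its own line, as in the snippets above, and `get_prop` is a hypothetical helper, not a Hadoop tool.

```shell
# get_prop FILE KEY: print the <value> that follows <name>KEY</name> in FILE.
# Prints nothing if the key is not present.
get_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character <value> and the 8-character </value>.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Example calls with the paths used in this post:
# get_prop /usr/local/hadoop-3.3.6/etc/hadoop/core-site.xml fs.defaultFS
# get_prop /usr/local/hadoop-3.3.6/etc/hadoop/hdfs-site.xml dfs.replication
```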

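- Format the NameNode (hdfs namenode -format is the current form; hadoop namenode -format still works but prints a deprecation warning)

root@ubt-2204:/usr/local/hadoop-3.3.6# ./bin/hdfs namenode -format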

......

2025-08-04 14:30:45,170 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2025-08-04 14:30:45,197 INFO namenode.FSNamesystem: Stopping services started for active state

2025-08-04 14:30:45,198 INFO namenode.FSNamesystem: Stopping services started for standby state

2025-08-04 14:30:45,204 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2025-08-04 14:30:45,205 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at ubt-2204/10.0.0.203

************************************************************/

Output like the above means the NameNode was formatted correctly.
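Formatting is a one-time step. Formatting again gives the NameNode a new cluster ID, and a DataNode that still holds data from the old one will typically fail to start. A small guard can avoid doing it by accident. The sketch assumes the name directory configured in hdfs-site.xml above and relies on a formatted NameNode directory containing current/VERSION; `is_formatted` is a hypothetical helper name.

```shell
# Name directory from dfs.namenode.name.dir in this post.
NAME_DIR=/usr/local/hadoop/tmp/dfs/name

# is_formatted DIR: succeed if DIR already holds NameNode metadata.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

if is_formatted "$NAME_DIR"; then
  echo "NameNode already formatted; skip the format step"
else
  echo "NameNode not formatted yet; run: ./bin/hdfs namenode -format"
fi
```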

2. Start Hadoop

root@ubt-2204:/usr/local/hadoop-3.3.6# ./sbin/start-all.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [ubt-2204]

Starting resourcemanager

resourcemanager is running as process 2073. Stop it first and ensure /tmp/hadoop-root-resourcemanager.pid file is empty before retry.

Starting nodemanagers

# Run jps; if it lists the following Java processes, the startup succeeded

root@ubt-2204:/usr/local/hadoop-3.3.6# jps

3553 Jps

3427 NodeManager

2888 DataNode

2073 ResourceManager

3114 SecondaryNameNode

2746 NameNode

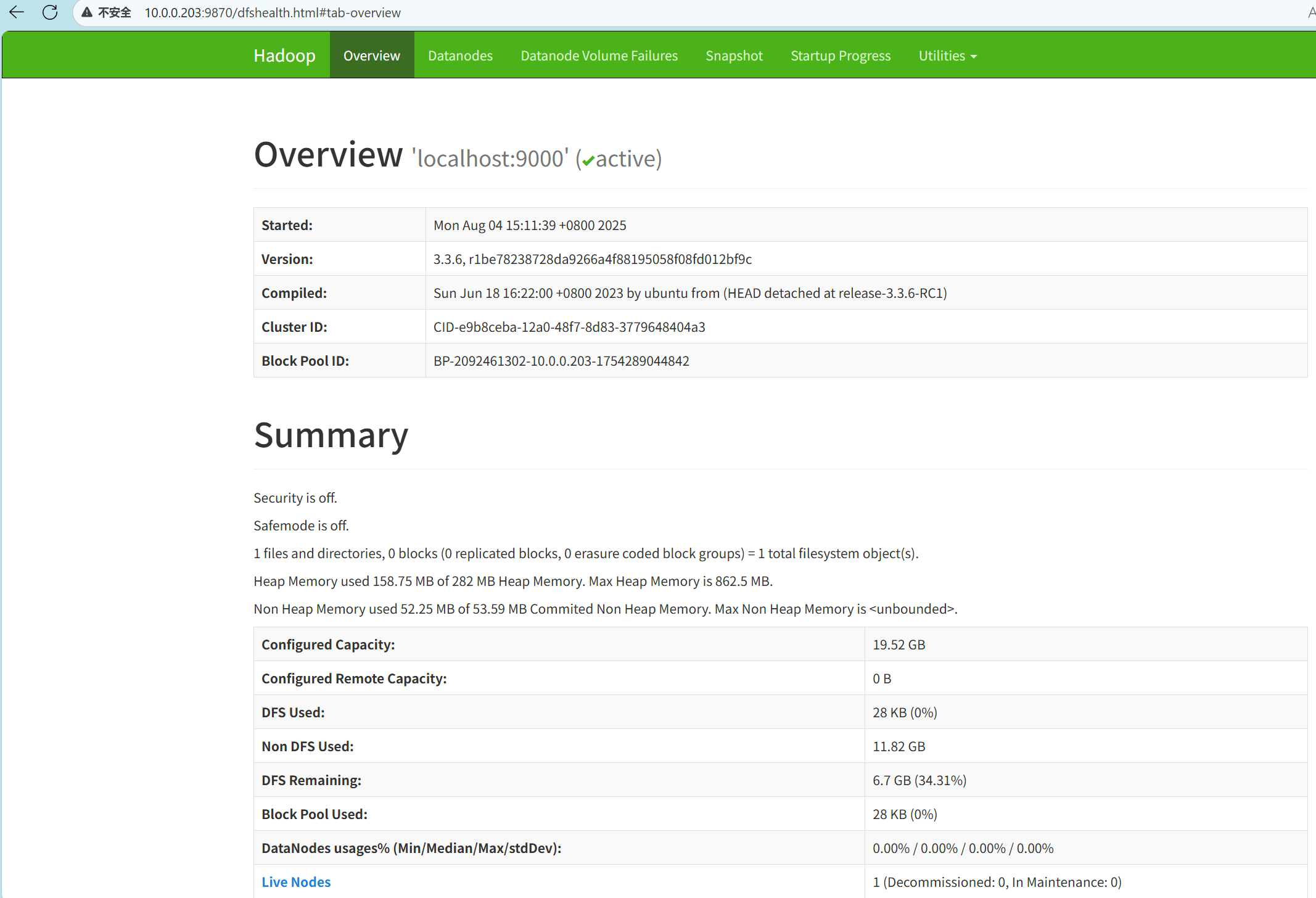
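Reading the jps list by eye works for one machine, but the check is easy to script. The sketch below reports which of the five daemons from the listing above are absent; `missing_daemons` is a hypothetical helper that reads jps output on standard input.

```shell
# missing_daemons: read `jps` output on stdin and print the name of every
# expected daemon that is not listed. Prints nothing when all are running.
missing_daemons() {
  jps_output=$(cat)
  for name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare against the whole second column, so that
    # "SecondaryNameNode" is not mistaken for "NameNode".
    if ! printf '%s\n' "$jps_output" \
        | awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }'; then
      echo "$name"
    fi
  done
}

# Example call:
# jps | missing_daemons
```

If a daemon is reported missing, its log file under the logs/ directory of the Hadoop installation is usually the first place to look.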

3. Test access to the web UI

http://10.0.0.203:9870/
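Port 9870 is the default NameNode web UI port in Hadoop 3.x. The same check can be made from a terminal, which is handy on a server without a browser. This is a sketch that assumes curl is installed; `check_ui` is a hypothetical helper, and the second URL assumes the ResourceManager web UI is on its default port, 8088.

```shell
# check_ui URL: succeed if URL answers with a successful HTTP status
# within 5 seconds; the response body is discarded.
check_ui() {
  curl -fsS -o /dev/null --max-time 5 "$1"
}

# Example calls with the address used in this post:
# check_ui http://10.0.0.203:9870/ && echo "NameNode UI reachable"
# check_ui http://10.0.0.203:8088/ && echo "ResourceManager UI reachable"
```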

