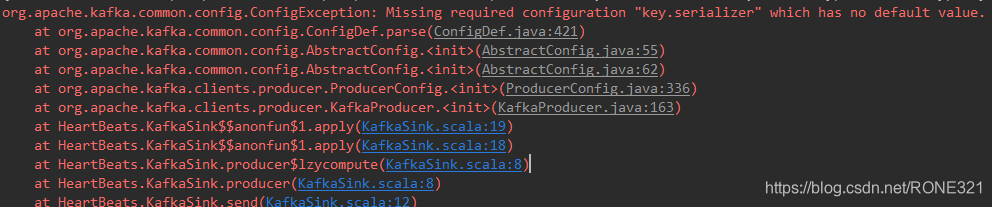

Writing to Kafka from Spark Streaming fails with the error: Missing required configuration "key.serializer" which has no default value.

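This happens because key.serializer was not specified when setting up kafkaParams. The Kafka producer has no default for this setting, so it must be supplied explicitly.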
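To make the cause concrete, here is a minimal sketch of the kind of check that fails. It uses plain Scala maps rather than the Kafka client itself, and `requiredProducerKeys` and `missingProducerKeys` are hypothetical helper names introduced only for illustration, not part of any Kafka API. It assumes the two serializer settings are the ones of interest, since those are the ones this error concerns.

```scala
object ProducerParamsCheck {
  // Producer settings that have no default value and must be supplied by the caller.
  val requiredProducerKeys: Seq[String] = Seq("key.serializer", "value.serializer")

  // Returns the required keys that are absent from the given parameter map.
  def missingProducerKeys(params: Map[String, Object]): Seq[String] =
    requiredProducerKeys.filterNot(params.contains)

  def main(args: Array[String]): Unit = {
    // A consumer-only configuration: deserializers are set, serializers are not.
    val consumerOnly = Map[String, Object](
      "bootstrap.servers" -> "cdh01:9092,cdh02:9092,cdh03:9092",
      "key.deserializer" -> "org.apache.kafka.common.serialization.StringDeserializer",
      "value.deserializer" -> "org.apache.kafka.common.serialization.StringDeserializer"
    )
    // Report each missing key in the same wording as the Kafka error.
    missingProducerKeys(consumerOnly).foreach { key =>
      println(s"""Missing required configuration "$key" which has no default value.""")
    }
  }
}
```

A parameter map written only for consuming will typically look like `consumerOnly` here, which is why the error appears as soon as the same map is reused for producing.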

Setting kafkaParams as follows fixes it:

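    import org.apache.kafka.common.serialization.StringDeserializer

    /**
     * Kafka parameters for both reading (deserializers) and writing (serializers).
     * groupId is assumed to be defined elsewhere.
     */
    val kafkaParams = Map[String, Object](
      // bootstrap.servers is a comma-separated list of host:port pairs
      "bootstrap.servers" -> "cdh01:9092,cdh02:9092,cdh03:9092",
      // used when consuming from Kafka
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      // used when producing to Kafka; these two have no default value
      "key.serializer" -> "org.apache.kafka.common.serialization.StringSerializer",
      "value.serializer" -> "org.apache.kafka.common.serialization.StringSerializer",
      "group.id" -> groupId,
      "auto.offset.reset" -> "latest",
      "enable.auto.commit" -> (false: java.lang.Boolean)
    )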

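The map above contains both the deserializers and the serializers, so the same parameters cover reading from Kafka and writing to Kafka.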

Problem solved.
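For context, one common way such parameters end up being used on the write side is sketched below. This is an illustration under stated assumptions, not necessarily how the original job was written: `KafkaSink`, `writeToKafka`, `stream`, `topic` and `producerParams` are hypothetical names, the stream is assumed to carry `String` values, and the Spark Streaming and Kafka client libraries are assumed to be on the classpath.

```scala
import java.util.{HashMap => JHashMap}

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}
import org.apache.spark.streaming.dstream.DStream

object KafkaSink {
  // Writes every record of the stream to `topic`.
  // `producerParams` must contain bootstrap.servers, key.serializer and value.serializer.
  def writeToKafka(stream: DStream[String],
                   topic: String,
                   producerParams: Map[String, Object]): Unit = {
    stream.foreachRDD { rdd =>
      rdd.foreachPartition { records =>
        // Copy the Scala map into a java.util.Map, which the KafkaProducer constructor accepts.
        val config = new JHashMap[String, Object]()
        producerParams.foreach { case (k, v) => config.put(k, v) }

        // The producer is created on the executor, once per partition.
        val producer = new KafkaProducer[String, String](config)
        try {
          records.foreach { value =>
            producer.send(new ProducerRecord[String, String](topic, value))
          }
        } finally {
          producer.close() // waits for pending sends to complete before closing
        }
      }
    }
  }
}
```

Creating a producer for every partition of every batch keeps the sketch short but is costly; a real job would more likely reuse a producer per executor. If the combined map with consumer settings such as group.id is passed in, the producer should still start, though it may log warnings about settings it does not recognize.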

Spark Streaming to Kafka: configuration explained

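This article explains the cause of the "Missing required configuration" error encountered when writing to Kafka from Spark Streaming, and its fix: configure kafkaParams correctly, in particular key.serializer and value.serializer, so that data is delivered successfully.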
