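The speech dictation (speech-to-text) and speech synthesis (text-to-speech) in this article are built on the SDK provided by iFlytek (讯飞).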

Following the official documentation, download the SDK, create your own application, and obtain its appid.

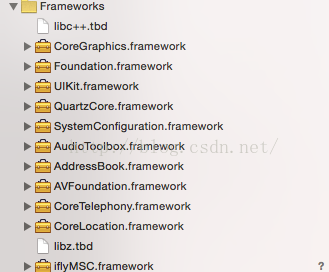

Add the SDK to your project, then add the libraries it requires.

Build the project. If the build fails, go to Targets → Build Settings → Build Options and set Enable Bitcode to No. Once the build succeeds, the SDK is integrated.

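At the app's entry point, configure the user information:

```objc
#import <iflyMSC/IFlyMSC.h>

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions {
    // Replace 000000 with the appid of the application you registered
    NSString *initString = [[NSString alloc] initWithFormat:@"appid=%@", @"000000"];
    [IFlySpeechUtility createUtility:initString];
    return YES;
}
```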

I. Speech synthesis: reading text aloud, i.e. converting a text message into speech output.

1. Import the relevant headers:

```objc
#import <iflyMSC/IFlySpeechSynthesizerDelegate.h>
#import <iflyMSC/IFlySpeechSynthesizer.h>
#import <iflyMSC/IFlySpeechConstant.h>
```

2. Have the controller adopt the protocol and implement its callback methods:

```objc
@interface ViewController () <IFlySpeechSynthesizerDelegate>
@property (nonatomic, strong) IFlySpeechSynthesizer *synthesizer;
@property (nonatomic, strong) UITextView *textView;
@property (nonatomic, strong) UIButton *startBtn;
@property (nonatomic, strong) UIButton *cancelBtn;
@end
```

3. Create the speech synthesizer object:

```objc
self.synthesizer = [IFlySpeechSynthesizer sharedInstance];
self.synthesizer.delegate = self;
```

You can also customize the object's key parameters; any parameter you leave unset keeps its default value:

```objc
// Speaking speed
[self.synthesizer setParameter:@"50" forKey:[IFlySpeechConstant SPEED]];
// Volume
[self.synthesizer setParameter:@"50" forKey:[IFlySpeechConstant VOLUME]];
// Voice (speaker) name
[self.synthesizer setParameter:@"VIXR" forKey:[IFlySpeechConstant VOICE_NAME]];
// Audio sample rate in Hz
[self.synthesizer setParameter:@"8000" forKey:[IFlySpeechConstant SAMPLE_RATE]];
// File name for saving the synthesized audio
[self.synthesizer setParameter:@"temp.pcm" forKey:[IFlySpeechConstant TTS_AUDIO_PATH]];
[self.synthesizer setParameter:@"custom" forKey:[IFlySpeechConstant PARAMS]];
```
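`setParameter:forKey:` takes its values as strings. As far as I know, iFlytek documents SPEED and VOLUME as ranging from 0 to 100 (default 50); treat that range as an assumption and check the documentation for your SDK version. The sketch below is a hypothetical helper, `IFlyPercentParam`, not part of the SDK, that clamps an integer into that range and converts it to the string form:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper, not part of the iFlytek SDK.
// Clamps a percentage to the assumed 0...100 range and returns it as the
// decimal string that setParameter:forKey: expects.
static NSString *IFlyPercentParam(NSInteger value) {
    NSInteger clamped = MAX((NSInteger)0, MIN((NSInteger)100, value));
    return [NSString stringWithFormat:@"%ld", (long)clamped];
}
```

Usage: `[self.synthesizer setParameter:IFlyPercentParam(70) forKey:[IFlySpeechConstant SPEED]];`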

4. The method that starts synthesis:

```objc
- (void)startBtnCliked {
    // Read the contents of the text view aloud
    [self.synthesizer startSpeaking:self.textView.text];
}
```
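`startSpeaking:` is called with whatever is in the text view, including an empty string. A small guard avoids starting a synthesis session with nothing to read. `HasSpeakableText` below is a hypothetical helper, not part of the SDK:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns YES only if the text contains something
// other than whitespace and newlines. A nil string yields NO.
static BOOL HasSpeakableText(NSString *text) {
    NSString *trimmed = [text stringByTrimmingCharactersInSet:
                         [NSCharacterSet whitespaceAndNewlineCharacterSet]];
    return trimmed.length > 0;
}
```

In the button handler this becomes `if (HasSpeakableText(self.textView.text)) { [self.synthesizer startSpeaking:self.textView.text]; }`.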

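5. Implement the protocol method:

```objc
- (void)onCompleted:(IFlySpeechError *)error {
    if (error.errorCode != 0) {
        NSLog(@"Synthesis error: %d %@", error.errorCode, error.errorDesc);
        return;
    }
    NSLog(@"Finished speaking");
}
```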

That is all it takes to implement basic speech synthesis.

II. Speech recognition: converting speech into a text message.

The SDK offers two approaches: recognition with a built-in UI (a small microphone panel) and recognition without a UI.

Let's start with the UI version, which is also fairly simple.

1. Import the headers:

```objc
#import "iflyMSC/IFlyMSC.h"
#import "ISRDataHelper.h"
```

2. Adopt the protocol:

```objc
@interface ViewController () <IFlyRecognizerViewDelegate> {
    IFlyRecognizerView *_iflyRecognizerView;
}
@property (nonatomic, strong) UITextView *textView;
@property (nonatomic, strong) UIButton *startBtn;
@property (nonatomic, strong) UIButton *cancelBtn;
@end
```

3. Create the object:

```objc
_iflyRecognizerView = [[IFlyRecognizerView alloc] initWithCenter:self.view.center];
_iflyRecognizerView.delegate = self;
// "iat" selects the dictation (speech-to-text) domain
[_iflyRecognizerView setParameter:@"iat" forKey:[IFlySpeechConstant IFLY_DOMAIN]];
// File name for saving the recorded audio (no trailing space)
[_iflyRecognizerView setParameter:@"asrview.pcm" forKey:[IFlySpeechConstant ASR_AUDIO_PATH]];
```

4. Start speech recognition:

```objc
- (void)startBtnCliked {
    [_textView resignFirstResponder];
    // Start the recognition service
    [_iflyRecognizerView start];
}
```

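5. Implement the delegate callbacks to handle the recognition results:

```objc
/*
 Delegate callback that returns recognition results.
 @param resultArray  the recognition results
 @param isLast       whether this is the last batch of results
 */
- (void)onResult:(NSArray *)resultArray isLast:(BOOL)isLast {
    NSMutableString *resultString = [[NSMutableString alloc] init];
    // firstObject returns nil instead of crashing on an empty array
    NSDictionary *dic = resultArray.firstObject;
    // The JSON result string is carried in the dictionary's keys
    for (NSString *key in dic) {
        [resultString appendFormat:@"%@", key];
    }
    NSString *resultFromJson = [ISRDataHelper stringFromJson:resultString];
    if (resultFromJson.length > 0) {
        _textView.text = [NSString stringWithFormat:@"%@%@", _textView.text, resultFromJson];
    }
}
```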
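The callback receives an array whose first element is a dictionary; the loop above concatenates its keys into the JSON result string, which `ISRDataHelper` then turns into plain text. Below is a Foundation-only sketch of those two steps combined. `TextFromIFlyResults` is a hypothetical name, and the code assumes the default JSON format in which each entry of `ws` holds candidate words in `cw` and each candidate's text in `w` (the same keys the manual parser later in this article reads). Like that parser, it takes the first candidate of every word:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper combining the key-joining loop and ISRDataHelper.
// Returns @"" when the payload is missing or not in the expected format.
static NSString *TextFromIFlyResults(NSArray *results) {
    NSDictionary *first = results.firstObject;
    if (![first isKindOfClass:[NSDictionary class]]) {
        return @"";
    }
    // The JSON result string is carried in the dictionary's key(s).
    NSMutableString *json = [NSMutableString string];
    for (NSString *key in first) {
        [json appendString:key];
    }
    NSData *data = [json dataUsingEncoding:NSUTF8StringEncoding];
    id parsed = [NSJSONSerialization JSONObjectWithData:data options:0 error:NULL];
    if (![parsed isKindOfClass:[NSDictionary class]]) {
        return @"";
    }
    NSDictionary *root = parsed;
    NSArray *words = root[@"ws"];
    if (![words isKindOfClass:[NSArray class]]) {
        return @"";
    }
    NSMutableString *text = [NSMutableString string];
    for (NSDictionary *word in words) {
        if (![word isKindOfClass:[NSDictionary class]]) {
            continue;
        }
        NSArray *candidates = word[@"cw"];
        if (![candidates isKindOfClass:[NSArray class]]) {
            continue;
        }
        // Take the first candidate for each word.
        NSDictionary *candidate = candidates.firstObject;
        NSString *w = [candidate isKindOfClass:[NSDictionary class]] ? candidate[@"w"] : nil;
        if ([w isKindOfClass:[NSString class]]) {
            [text appendString:w];
        }
    }
    return text;
}
```

Returning an empty string rather than nil lets the caller append the result unconditionally.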

```objc
/*
 Delegate callback for recognition session errors.
 @param error  the error code
 */
- (void)onError:(IFlySpeechError *)error {
    NSLog(@"%s", __func__);
    // errorCode 0 means the session ended normally
    if (error.errorCode != 0) {
        NSString *text = [NSString stringWithFormat:@"Error occurred: %d %@", error.errorCode, error.errorDesc];
        NSLog(@"%@", text);
        [_iflyRecognizerView cancel];
    }
    self.startBtn.enabled = YES;
}
```

Next comes speech recognition without a UI.

1. Import the headers as before:

```objc
#import <iflyMSC/IFlySpeechRecognizerDelegate.h>
#import <iflyMSC/IFlySpeechRecognizer.h>
#import <iflyMSC/IFlySpeechError.h>
#import "ISRDataHelper.h"
```

2. Create the object and adopt the protocol:

```objc
@interface ViewController () <IFlySpeechRecognizerDelegate>
@property (nonatomic, strong) IFlySpeechRecognizer *iFlySpeechRecognizer;
@property (nonatomic, strong) UITextView *textView;
@property (nonatomic, strong) UIButton *startBtn;
@property (nonatomic, strong) UIButton *cancelBtn;
@end
```

Then create the recognizer, for example in `viewDidLoad`:

```objc
self.iFlySpeechRecognizer = [IFlySpeechRecognizer sharedInstance];
self.iFlySpeechRecognizer.delegate = self;
```

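3. Button actions, the entry points of the feature:

```objc
- (void)startBtnCliked {
    // startListening returns NO if the session could not be started
    BOOL ret = [self.iFlySpeechRecognizer startListening];
    if (ret) {
        NSLog(@"Started listening");
    } else {
        NSLog(@"Failed to start");
    }
}

- (void)cancelBtnCliked {
    // Cancel the current recognition session
    [self.iFlySpeechRecognizer cancel];
}
```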

4. Implement the delegate callbacks:

```objc
- (void)onError:(IFlySpeechError *)error {
    NSLog(@"%d %@", error.errorCode, error.errorDesc);
}

- (void)onResults:(NSArray *)results isLast:(BOOL)isLast {
    NSMutableString *resultString = [[NSMutableString alloc] init];
    // firstObject returns nil instead of crashing on an empty array
    NSDictionary *dic = results.firstObject;
    // The JSON result string is carried in the dictionary's keys
    for (NSString *key in dic) {
        [resultString appendFormat:@"%@", key];
    }
    NSString *resultFromJson = [ISRDataHelper stringFromJson:resultString];
    if (resultFromJson.length > 0) {
        _textView.text = [NSString stringWithFormat:@"%@%@", _textView.text, resultFromJson];
    }
}
```
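`onResults:isLast:` can fire several times for one utterance, and `isLast` is YES only on the final call. If you prefer to show the sentence once it is complete instead of appending each piece as it arrives, you can buffer the pieces. `DictationAccumulator` below is a hypothetical helper class, not from the SDK or the demo:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper class: collects the partial texts delivered by
// successive onResults:isLast: callbacks and returns the full text
// once isLast is YES.
@interface DictationAccumulator : NSObject
// Appends one partial result. Returns the complete text when isLast is
// YES (and resets the buffer), otherwise returns nil.
- (NSString *)appendPartial:(NSString *)partial isLast:(BOOL)isLast;
@end

@implementation DictationAccumulator {
    NSMutableString *_buffer;
}

- (instancetype)init {
    if ((self = [super init])) {
        _buffer = [NSMutableString string];
    }
    return self;
}

- (NSString *)appendPartial:(NSString *)partial isLast:(BOOL)isLast {
    if (partial.length > 0) {
        [_buffer appendString:partial];
    }
    if (!isLast) {
        return nil;
    }
    NSString *full = [_buffer copy];
    [_buffer setString:@""];
    return full;
}
@end
```

In the delegate you would pass it the string returned by `[ISRDataHelper stringFromJson:resultString]` and assign the return value to `_textView.text` only when it is non-nil.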

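`ISRDataHelper` is a helper class from the iFlytek demo that extracts the text from the recognition result. Without it, you can parse the default JSON string yourself like this: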

```objc
- (void)onResults:(NSArray *)results isLast:(BOOL)isLast {
    // Join the dictionary keys into the JSON result string
    NSMutableString *result = [[NSMutableString alloc] init];
    NSDictionary *dic = results.firstObject;
    for (NSString *key in dic) {
        [result appendFormat:@"%@", key];
    }
    NSLog(@"Dictation result: %@", result);

    //--------- Parse the iFlytek recognition JSON ---------//
    NSError *error = nil;
    NSData *data = [result dataUsingEncoding:NSUTF8StringEncoding];
    NSDictionary *dic_result = [NSJSONSerialization JSONObjectWithData:data options:NSJSONReadingMutableLeaves error:&error];
    if (![dic_result isKindOfClass:[NSDictionary class]]) {
        NSLog(@"JSON parsing failed: %@", error);
        return;
    }
    NSArray *array_ws = [dic_result objectForKey:@"ws"];
    NSMutableString *str = [[NSMutableString alloc] init];
    // Walk through every word in the result and take its first candidate ("cw")
    for (NSDictionary *word in array_ws) {
        NSArray *temp = [word objectForKey:@"cw"];
        NSDictionary *dic_cw = temp.firstObject;
        NSString *w = [dic_cw objectForKey:@"w"];
        if (w) {
            [str appendString:w];
        }
        NSLog(@"Recognized word: %@", w);
    }
    NSLog(@"Final recognition result: %@", str);

    // Skip a result that consists only of a punctuation mark; append anything else
    NSSet *punctuation = [NSSet setWithObjects:@"。", @"？", @"！", @"?", @"!", nil];
    if ([punctuation containsObject:str]) {
        NSLog(@"Punctuation-only result: %@", str);
    } else {
        self.textView.text = [self.textView.text stringByAppendingString:str];
    }
}
```
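The check above only drops a result that consists entirely of one punctuation mark; it does not strip punctuation from the end of a longer sentence. If that is what you want, a sketch like the following works. `StripTrailingPunctuation` is a hypothetical helper, and the set of marks it removes (full-width 。？！， and ASCII .?!,) is an assumption you can adjust:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: removes any run of trailing sentence punctuation
// (full-width and ASCII) from a dictation result.
static NSString *StripTrailingPunctuation(NSString *text) {
    NSCharacterSet *marks =
        [NSCharacterSet characterSetWithCharactersInString:@"。？！，.?!,"];
    NSUInteger end = text.length;
    // Walk backwards while the last character is one of the marks.
    while (end > 0 && [marks characterIsMember:[text characterAtIndex:end - 1]]) {
        end--;
    }
    return [text substringToIndex:end];
}
```

Because it trims only the end of the string, punctuation in the middle of a sentence is kept.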

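So far I have only put together the code for these two features; I will look into the SDK's other features later as the need arises.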
