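I tried out Higress. The walkthrough breaks down into the following parts:

- Create the k8s "virtual machine" (the cluster)

- You can use kind. I originally built my own local VMs, but gave up because of network and resource problems and switched to kind.

- Create the sample app

- Create the ingress, expose the ports, and open the console with hgctl

**Everything here assumes you can install Docker or already have it. I'm not covering that, because this post is long enough already.**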

Part 1:

Create the virtual machine

Install kubectl and kind (copied straight from the official docs; I didn't run into any problems)

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.18.0/kind-linux-amd64

chmod +x ./kubectl ./kind

sudo mv ./kubectl ./kind /usr/local/bin

Start kind

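Note that ports 80 and 443 are exposed here. If you are working inside a VM and have already sorted out networking between the VM and the host (see the CSDN blog post "ssh访问vmware虚拟机(打通本地主机与虚拟机的网络)", on reaching a VMware VM over ssh), you can later access these ports directly from a browser.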

    [root@localhost higress]# cat cluster.conf
    # cluster.conf
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    # networking:
    #   WARNING: It is _strongly_ recommended that you keep this the default
    #   (127.0.0.1) for security reasons. However it is possible to change this.
    #   apiServerAddress: "0.0.0.0"
    #   By default the API server listens on a random open port.
    #   You may choose a specific port but probably don't need to in most cases.
    #   Using a random port makes it easier to spin up multiple clusters.
    #   apiServerPort: 6443
    nodes:
    - role: control-plane
      kubeadmConfigPatches:
      - |
        kind: InitConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-labels: "ingress-ready=true"
      extraPortMappings:
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
      - containerPort: 8080
        hostPort: 8080
        protocol: TCP
      # kind-config.yaml
      # kind: Cluster
      # apiVersion: kind.x-k8s.io/v1alpha4
      # nodes:
      # - role: control-plane
      #   extraPortMappings:
      - containerPort: 31309 # container port
        hostPort: 31309 # host port
        listenAddress: "0.0.0.0" # allow external access

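I added the last port (31309) after finishing the first draft of this post, because reaching the foo service later turned out to need a port mapping. It is best to reserve a port for app.py up front.

Run the following command, where kind-config.yaml is the config above. Remember port 31309: once the app has been created, you can change its port to this one.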

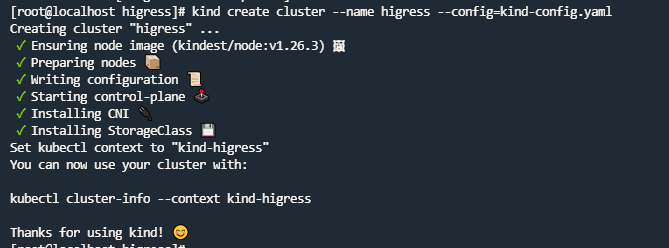

kind create cluster --name higress --config=kind-config.yaml

到这就是成功了,不过可能各种插件还有问题(比如kindnet网络插件)

处理问题

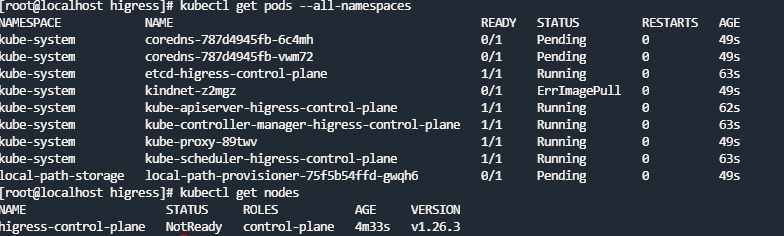

我这里安装各种东西都失败了,转头一看,节点还是notready,pod还有很多没有起来,主要是网络组件

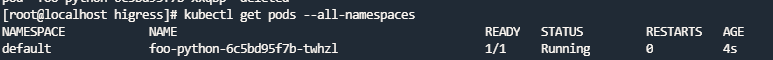

kubectl get pods --all-namespaces

kubectl get nodes

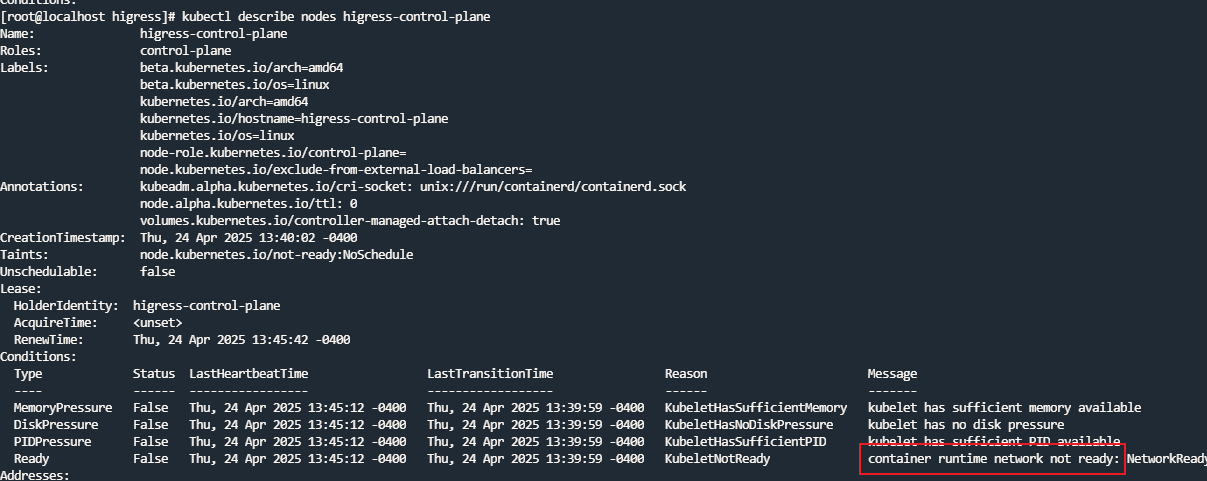

kubectl describe nodes higress-control-plane # higress-control-plane is the only node listed by get nodes

查看是网络组件没有弄好,上面的kindnet和coredns也都是异常状态,至于local-path-storage可能暂时不用考虑

这个kindnet我搞了一段时间没搞定,主要还是镜像的问题,很多次都拉不下kindnet镜像,毕竟高墙()

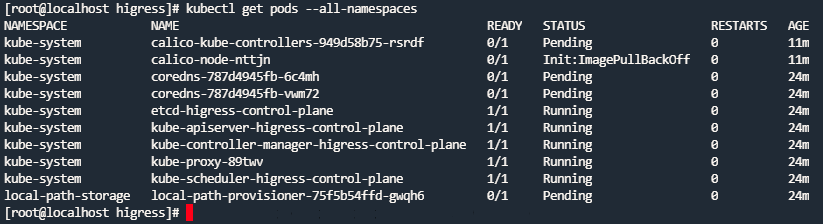

解决办法:我后面改成了calico,但是实际上还是自己找可用源手拉的镜像,拉下来之后打个tag,然后load docker-image之后就能在“k8s”中使用了

所以这段的流程基本就是查看pod状态是否running,如果不是running就去找相关的问题,一般都是镜像拉不下来,拉下来之后delete pod让pod重启一下,基本上只要网络组件好了,节点就ready了就能下一步了
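The "check which pods are not Running" step above is easy to script. Here is a minimal Python sketch, assuming the plain-text output of `kubectl get pods --all-namespaces` has already been captured into a string. The function name `find_unhealthy_pods` and the sample text are my own illustration, not part of kubectl.

```python
def find_unhealthy_pods(kubectl_output):
    """Return (namespace, name, status) for every pod that is not Running and fully ready."""
    unhealthy = []
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    for line in lines[1:]:  # the first non-empty line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue  # not a pod row
        namespace, name, ready, status = fields[:4]
        ready_now, _, ready_total = ready.partition("/")
        if status != "Running" or ready_now != ready_total:
            unhealthy.append((namespace, name, status))
    return unhealthy


# Sample rows taken from the output shown in this post.
SAMPLE = """
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-787d4945fb-6c4mh 0/1 Pending 0 49s
kube-system etcd-higress-control-plane 1/1 Running 0 63s
kube-system kindnet-z2mgz 0/1 ErrImagePull 0 49s
"""

for namespace, name, status in find_unhealthy_pods(SAMPLE):
    print(f"{namespace}/{name}: {status}")
```

Note that this simple rule also flags pods in the Completed state, which may be fine for one-off jobs; treat the result as a list of things to look at with kubectl describe, not as a verdict.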

Steps in detail:

    # kubectl describe nodes higress-control-plane
    ……
    Conditions:
      Type            Status  LastHeartbeatTime                LastTransitionTime               Reason                      Message
      ----            ------  -----------------                ------------------               ------                      -------
      MemoryPressure  False   Thu, 24 Apr 2025 13:45:12 -0400  Thu, 24 Apr 2025 13:39:59 -0400  KubeletHasSufficientMemory  kubelet has sufficient memory available
      DiskPressure    False   Thu, 24 Apr 2025 13:45:12 -0400  Thu, 24 Apr 2025 13:39:59 -0400  KubeletHasNoDiskPressure    kubelet has no disk pressure
      PIDPressure     False   Thu, 24 Apr 2025 13:45:12 -0400  Thu, 24 Apr 2025 13:39:59 -0400  KubeletHasSufficientPID     kubelet has sufficient PID available
      Ready           False   Thu, 24 Apr 2025 13:45:12 -0400  Thu, 24 Apr 2025 13:39:59 -0400  KubeletNotReady             container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized
    ……
    # kubectl get pods --all-namespaces
    NAMESPACE           NAME                                           READY  STATUS        RESTARTS  AGE
    kube-system         coredns-787d4945fb-6c4mh                       0/1    Pending       0         49s
    kube-system         coredns-787d4945fb-vwm72                       0/1    Pending       0         49s
    kube-system         etcd-higress-control-plane                     1/1    Running       0         63s
    kube-system         kindnet-z2mgz                                  0/1    ErrImagePull  0         49s
    kube-system         kube-apiserver-higress-control-plane           1/1    Running       0         62s
    kube-system         kube-controller-manager-higress-control-plane  1/1    Running       0         63s
    kube-system         kube-proxy-89twv                               1/1    Running       0         49s
    kube-system         kube-scheduler-higress-control-plane           1/1    Running       0         63s
    local-path-storage  local-path-provisioner-75f5b54ffd-gwqh6        0/1    Pending       0         49s
    # kubectl delete daemonset -n kube-system kindnet  # remove the kindnet network plugin

The original network plugin has now been removed, so the next step is to build a new network with Calico:

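Pull the images first. If you cannot find a usable image source, try searching on the container image search site (the one whose results page is titled "3.9-slim" 容器镜像搜索结果). If that site has gone away, you will have to find another way. As for how to use it: it is simple and the page includes a tutorial, so figure it out from there.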

    # docker pull xxxx  # pull the image
    # docker tag xxx:va yyy:vb  # tag it so the name matches what the yaml file references
    # docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kindnetd:v20230330-48f316cd kindest/kindnetd:v20230330-48f316cd  # example
    # # Load the images into the cluster; Calico needs these three images
    # kind load docker-image calico/cni:v3.25.0 --name higress
    Image: "calico/cni:v3.25.0" with ID "sha256:d70a5947d57e5ab3340d126a38e6ae51bd9e8e0b342daa2012e78d8868bed5b7" not yet present on node "higress-control-plane", loading...
    # kind load docker-image calico/node:v3.25.0 --name higress
    Image: "calico/node:v3.25.0" with ID "sha256:08616d26b8e74867402274687491e5978ba4a6ded94e9f5ecc3e364024e5683e" not yet present on node "higress-control-plane", loading...
    # kind load docker-image calico/kube-controllers:v3.25.0 --name higress
    Image: "calico/kube-controllers:v3.25.0" with ID "sha256:5e785d005ccc1ab22527a783835cf2741f6f5f385a8956144c661f8c23ae9d78" not yet present on node "higress-control-plane", loading...
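The pull, tag, load sequence is the same for every image, so it can be generated instead of typed. Below is a small Python sketch that only builds the three command lines for one image; the helper name `mirror_load_commands` is made up for illustration, and the example uses the kindnetd mirror name quoted in this post. It prints the commands rather than running them.

```python
import shlex


def mirror_load_commands(mirror_image, target_image, cluster):
    """Build the docker/kind argv lists for: pull from mirror, retag, load into kind."""
    return [
        ["docker", "pull", mirror_image],
        ["docker", "tag", mirror_image, target_image],
        ["kind", "load", "docker-image", target_image, "--name", cluster],
    ]


commands = mirror_load_commands(
    "registry.cn-hangzhou.aliyuncs.com/google_containers/kindnetd:v20230330-48f316cd",
    "kindest/kindnetd:v20230330-48f316cd",
    "higress",
)
for argv in commands:
    print(shlex.join(argv))  # copy-paste friendly shell line
```

If you would rather execute them directly, each list could be passed to `subprocess.run(argv, check=True)`, assuming docker and kind are on the PATH.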

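    # kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml  # install Calico

At this point the network is basically sorted out, but problems can still show up along the way. For example, the calico-node pod below did not come up properly.

Take a look with describe: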

    # kubectl describe calico-node-nttjn -n kube-system  # wrong usage: the resource type is missing
    error: the server doesn't have a resource type "calico-node-nttjn"
    [root@localhost higress]# kubectl describe pods calico-node-nttjn -n kube-system
    ……
    Events:
      Type     Reason     Age                  From               Message
      ----     ------     ----                 ----               -------
      Normal   Scheduled  13m                  default-scheduler  Successfully assigned kube-system/calico-node-nttjn to higress-control-plane
      Normal   Pulling    10m (x4 over 13m)    kubelet            Pulling image "docker.io/calico/cni:v3.26.1"
      Warning  Failed     10m (x4 over 12m)    kubelet            Failed to pull image "docker.io/calico/cni:v3.26.1": rpc error: code = Unknown desc = failed to pull and unpack image "docker.io/calico/cni:v3.26.1": failed to resolve reference "docker.io/calico/cni:v3.26.1": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed
      Warning  Failed     10m (x4 over 12m)    kubelet            Error: ErrImagePull
      Warning  Failed     9m56s (x6 over 12m)  kubelet            Error: ImagePullBackOff
      Normal   BackOff    3m (x32 over 12m)    kubelet            Back-off pulling image "docker.io/calico/cni:v3.26.1"
    ……

Here we can see that the image version being pulled is not the one we tagged earlier. Yes, I picked the manifest for the wrong version. Switching versions fixes it: the URL above uses xxx/v3.26.1/xxx, while the images we loaded are v3.25.0, so change the URL to match.
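This kind of version mismatch can be caught before applying the manifest. The following is a rough Python sketch, assuming the manifest text has been downloaded into a string and that you know which images were loaded with kind load. It uses a plain regular expression on `image:` lines rather than a YAML parser, and the names `missing_images`, `MANIFEST`, and `LOADED` are illustrative only; the sample manifest is a tiny made-up excerpt, not the real calico.yaml.

```python
import re

# Matches lines such as "image: docker.io/calico/cni:v3.26.1" or "- image: ...".
IMAGE_LINE = re.compile(r"^\s*(?:-\s*)?image:\s*(\S+)", re.MULTILINE)


def normalize(image):
    """Strip quotes and a leading docker.io/ so both spellings compare equal."""
    image = image.strip("\"'")
    prefix = "docker.io/"
    return image[len(prefix):] if image.startswith(prefix) else image


def missing_images(manifest_text, loaded_images):
    """Return the images the manifest references that were not loaded, sorted."""
    wanted = {normalize(match) for match in IMAGE_LINE.findall(manifest_text)}
    have = {normalize(image) for image in loaded_images}
    return sorted(wanted - have)


# Hypothetical excerpt for illustration.
MANIFEST = """
initContainers:
  - name: install-cni
    image: docker.io/calico/cni:v3.26.1
containers:
  - name: calico-node
    image: docker.io/calico/node:v3.26.1
"""
LOADED = {"calico/cni:v3.25.0", "calico/node:v3.25.0", "calico/kube-controllers:v3.25.0"}

print(missing_images(MANIFEST, LOADED))
```

An empty list means every referenced image is already in the cluster; anything else is an image the kubelet will try to pull over the network.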

    # kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml
    ……
    clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged
    clusterrole.rbac.authorization.k8s.io/calico-node configured
    clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged
    clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged
    daemonset.apps/calico-node configured
    deployment.apps/calico-kube-controllers configured
    # kubectl get pods --all-namespaces
    NAMESPACE           NAME                                           READY  STATUS             RESTARTS  AGE
    kube-system         calico-kube-controllers-57b57c56f-9g6vg        0/1    Pending            0         12s
    kube-system         calico-node-kp9b2                              1/1    Running            0         12s
    kube-system         coredns-787d4945fb-6c4mh                       0/1    Pending            0         28m
    kube-system         coredns-787d4945fb-vwm72                       0/1    Pending            0         28m
    kube-system         etcd-higress-control-plane                     1/1    Running            0         28m
    kube-system         kube-apiserver-higress-control-plane           1/1    Running            0         28m
    kube-system         kube-controller-manager-higress-control-plane  1/1    Running            0         28m
    kube-system         kube-proxy-89twv                               1/1    Running            0         28m
    kube-system         kube-scheduler-higress-control-plane           1/1    Running            0         28m
    local-path-storage  local-path-provisioner-75f5b54ffd-gwqh6        0/1    Pending            0         28m
    # kubectl get pods --all-namespaces
    NAMESPACE           NAME                                           READY  STATUS             RESTARTS  AGE
    kube-system         calico-kube-controllers-57b57c56f-9g6vg        1/1    Running            0         22s
    kube-system         calico-node-kp9b2                              1/1    Running            0         22s
    kube-system         coredns-787d4945fb-6c4mh                       1/1    Running            0         28m
    kube-system         coredns-787d4945fb-vwm72                       1/1    Running            0         28m
    kube-system         etcd-higress-control-plane                     1/1    Running            0         29m
    kube-system         kube-apiserver-higress-control-plane           1/1    Running            0         29m
    kube-system         kube-controller-manager-higress-control-plane  1/1    Running            0         29m
    kube-system         kube-proxy-89twv                               1/1    Running            0         28m
    kube-system         kube-scheduler-higress-control-plane           1/1    Running            0         29m
    local-path-storage  local-path-provisioner-75f5b54ffd-gwqh6        0/1    ContainerCreating  0         28m

That did it. Now let's check whether the node status is healthy as well.


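The node is Ready too, so we can move on to the next step.

Part 2:

Create the sample app. This step is simple. Just make sure the yaml matches the code; don't do what I did and define a probe in the yaml when the code does not provide the endpoint.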

Python code:

    from flask import Flask

    app = Flask(__name__)

    @app.route('/')
    def hello():
        return "Hello World!"

    @app.route('/healthz')  # health check endpoint
    def healthz():
        return "OK", 200

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=8080)  # important: must listen on all interfaces

Dockerfile:

    FROM python:3.9-slim
    WORKDIR /app
    COPY app.py ./
    RUN pip install flask
    EXPOSE 8080
    CMD ["python", "app.py"]

Build the image with docker build -t, then load it into the cluster with load docker-image as described above.

    # docker build -t mrblind/foo-python:v1 .  # build the image
    # kind load docker-image mrblind/foo-python:v1 --name higress  # load it into the cluster
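Before applying any manifest, it can help to confirm that the app really serves every path a probe points at, which is the mismatch I mentioned at the start of this part. Here is a quick Python sketch under simple assumptions: routes are declared with a literal `@app.route('...')` decorator, and you list the probe paths by hand. The function name `missing_probe_routes` and the `/readyz` path are hypothetical and only there to show a failing case.

```python
import re

# Finds the path in decorators like @app.route('/healthz') or @app.route("/").
ROUTE = re.compile(r"""@app\.route\(\s*['"]([^'"]+)['"]""")


def missing_probe_routes(app_source, probe_paths):
    """Return the probe paths that have no matching @app.route in the source."""
    routes = set(ROUTE.findall(app_source))
    return [path for path in probe_paths if path not in routes]


# The two routes from app.py in this post.
APP_SOURCE = """
@app.route('/')
def hello():
    return "Hello World!"

@app.route('/healthz')
def healthz():
    return "OK", 200
"""

print(missing_probe_routes(APP_SOURCE, ["/healthz", "/readyz"]))
```

Anything this prints is a probe that would keep failing once the pod starts. It does not understand blueprints or dynamically registered routes, so treat it as a sanity check only.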

Now take a look at the yaml file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: foo-python
    spec:
      replicas: 1
      # selector is required (newly added)
      selector:
        matchLabels:
          app: foo-python # must match template.labels
      template:
        metadata:
          # must include the labels defined in selector (newly added)
          labels:
            app: foo-python
        spec:
          containers:
          - name: python-app
            image: mrblind/foo-python:v1 # make sure the image already exists
            ports:
            - containerPort: 8080
            resources:
              requests:
                cpu: "1m" # on a VM, keep requests within what the system can handle, or you will be debugging it later
                memory: "16Mi"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: foo-python
    spec:
      selector:
        app: foo-python # must match the Deployment's labels
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080

Then just run kubectl apply -f foo-python.yaml and it should come up normally. Don't forget to load the image into the cluster first.

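Part 3:

The ordering here may be slightly off, since exposing the ports was already done in Part 1, but it is not a big deal and I don't want to restructure the post.

Install Higress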

helm repo add higress.io https://higress.io/helm-charts

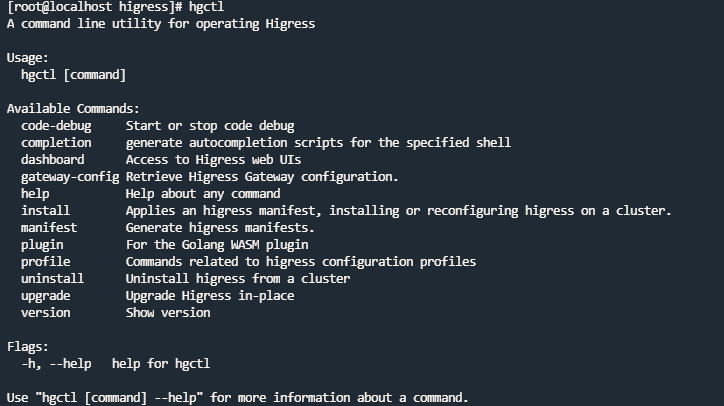

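helm install higress -n higress-system higress.io/higress --create-namespace --render-subchart-notes --set global.local=true --set global.o11y.enabled=false

Next, hgctl is needed to run this command:

hgctl dashboard console

At this point I found that the command did not exist.

According to the official page (hgctl tool usage guide, Higress official site), hgctl can be installed with the command below. On my VM, however, the download was painfully slow: a fairly small file took a long time.

curl -Ls https://raw.githubusercontent.com/alibaba/higress/main/tools/hack/get-hgctl.sh | bash

So I tweaked the script a little, downloaded the archive on my host machine, uploaded it to the VM, and made the script use that file first.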

    # curl -Ls https://raw.githubusercontent.com/alibaba/higress/main/tools/hack/get-hgctl.sh > get-hgctl.sh

    downloadFile() {
      hgctl_DIST="hgctl_${VERSION}_${OS}_${ARCH}.tar.gz"
      if [ "${OS}" == "windows" ]; then
        hgctl_DIST="hgctl_${VERSION}_${OS}_${ARCH}.zip"
      fi
      DOWNLOAD_URL="https://github.com/alibaba/higress/releases/download/$VERSION/$hgctl_DIST"
      hgctl_TMP_ROOT="$(mktemp -dt hgctl-installer-XXXXXX)"
      hgctl_TMP_FILE="$hgctl_TMP_ROOT/$hgctl_DIST"
      echo "Downloading $DOWNLOAD_URL" # line I added
      cp hgctl_v2.1.1_linux_amd64.tar.gz "$hgctl_TMP_FILE" # line I changed: use the uploaded file
      echo "Skipping the download" # line I added
      return 0 # line I added: everything below is now skipped
      if [ "${HAS_CURL}" == "true" ]; then
        curl -SsL "$DOWNLOAD_URL" -o "$hgctl_TMP_FILE"
      elif [ "${HAS_WGET}" == "true" ]; then
        wget -q -O "$hgctl_TMP_FILE" "$DOWNLOAD_URL"
      fi
    }
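The idea behind that edit is "use a local copy if one exists, otherwise download". Below is a small Python sketch of that decision, reusing the archive naming and release URL pattern visible in the script above. The function names and the `search_dir` parameter are my own, and the sketch only decides which source to use; it does not download or unpack anything.

```python
from pathlib import Path


def hgctl_archive_name(version, os_name, arch):
    """Mirror the script's naming: .zip on windows, .tar.gz everywhere else."""
    extension = "zip" if os_name == "windows" else "tar.gz"
    return f"hgctl_{version}_{os_name}_{arch}.{extension}"


def hgctl_download_url(version, os_name, arch):
    """Build the GitHub release URL the script would download from."""
    archive = hgctl_archive_name(version, os_name, arch)
    return f"https://github.com/alibaba/higress/releases/download/{version}/{archive}"


def pick_source(version, os_name, arch, search_dir="."):
    """Prefer an archive already present in search_dir; fall back to the URL."""
    local = Path(search_dir) / hgctl_archive_name(version, os_name, arch)
    if local.is_file():
        return ("local", str(local))
    return ("download", hgctl_download_url(version, os_name, arch))


print(pick_source("v2.1.1", "linux", "amd64"))
```

Compared with hard-coding the cp and an early return, a check like this keeps the normal download path working on machines where the file was not uploaded.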

Then run

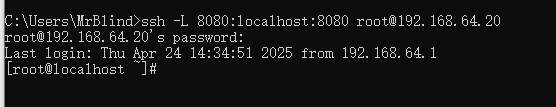

hgctl dashboard console

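Something like this shows up:

At this point I found that, even with the firewall turned off, my host machine could not reach this network. So I connected to the VM with ssh from cmd and then opened localhost:8080 directly in the browser. It looked like the screenshot below, which means it worked.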

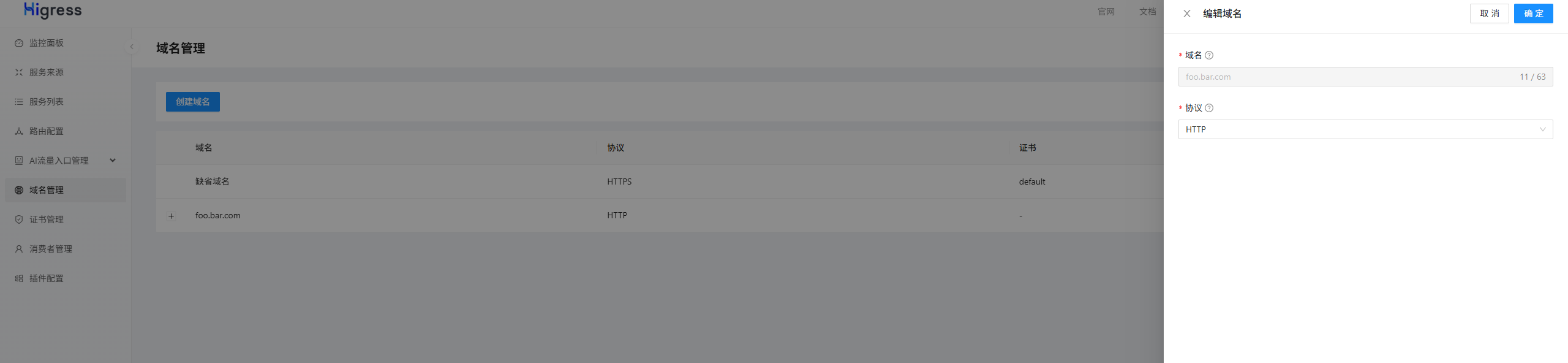

Blog update:

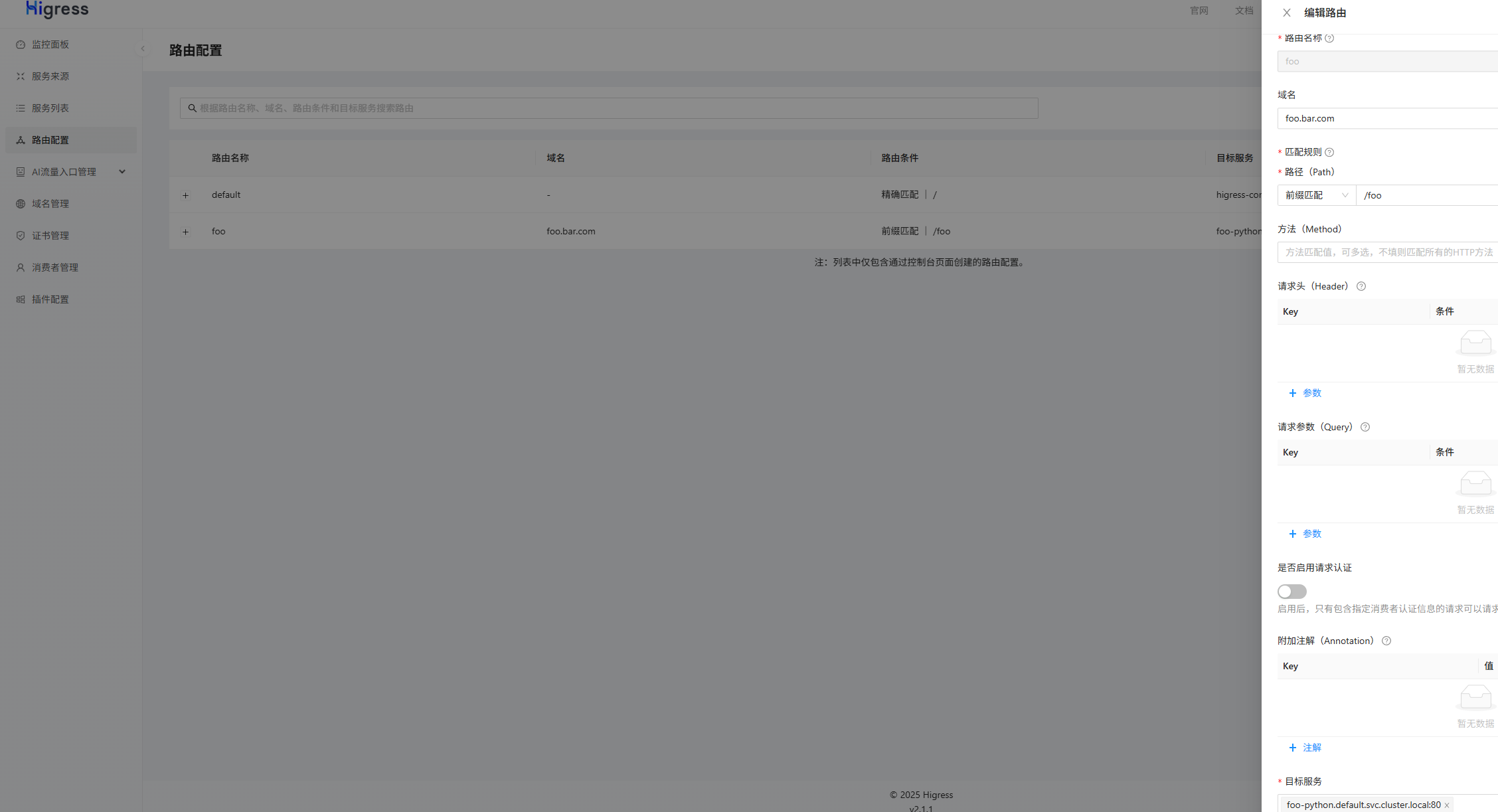

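After logging in, there is a domain management entry on the left side of the page. Open it, choose to create a domain, and configure it as shown:

Name: foo.bar.com

Protocol: HTTP

Then create a new route under route configuration, configured as shown: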

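Once that is done, pick a machine that can reach the kind (k8s) cluster and run the following command. The port is the one reserved above and configured in the foo-python service.

    # curl -H "Host: foo.bar.com" http://192.168.64.20:31309
    Hello World from Python in Kubernetes!

As long as you get the output you expect, you are done.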
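The same request can be made from Python, which is handy if you want to script the check. This is a minimal sketch using only the standard library; it builds a request to the node address with the `Host` header overridden, the way `curl -H` does. The address and port are the ones from this post, and the helper names `build_request` and `fetch` are illustrative. The code below only constructs the request and leaves the actual call to you.

```python
import urllib.request


def build_request(base_url, host):
    """Create a request to base_url that presents `host` in the Host header."""
    return urllib.request.Request(base_url, headers={"Host": host})


def fetch(request, timeout=5):
    """Send the request and return (status code, body text)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode()


request = build_request("http://192.168.64.20:31309", "foo.bar.com")
print(request.full_url, request.get_header("Host"))
```

Calling `fetch(request)` from a machine that can reach the cluster should return the same body curl prints; routing on the gateway side is decided by the Host header, not by the IP in the URL.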

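Addendum:

With a lower node version (1.26? or lower, i.e. the version I used above) the kindnet network does not work, at least that was the case the one time I installed it. The higher version (1.32.1) has a bug: loading an image fails with "ERROR: failed to detect containerd snapshotter". So on the old version I can't keep the network, and on the new version I can't keep the images (see "When upgrading to `kindest/node:v1.32.1` leads to `failed to detect containerd snapshotter` error", Issue #3871, kubernetes-sigs/kind on GitHub).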
