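Homework: on day 43 everyone trained a simple CNN on a dataset of their own choosing. Now try using what you have learned over the past few days to push the accuracy further.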

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt
import numpy as np
import os
import time
from torchvision import models

# Chinese font support for matplotlib (requires the SimHei font to be installed)
plt.rcParams["font.family"] = ["SimHei"]
plt.rcParams['axes.unicode_minus'] = False  # render minus signs correctly with this font

# Use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")
```

```python
# Channel attention: reweights each channel using globally pooled statistics
class ChannelAttention(nn.Module):
    def __init__(self, in_channels, ratio=16):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.max_pool = nn.AdaptiveMaxPool2d(1)
        # Shared two-layer MLP with a bottleneck of in_channels // ratio
        self.fc = nn.Sequential(
            nn.Linear(in_channels, in_channels // ratio, bias=False),
            nn.ReLU(),
            nn.Linear(in_channels // ratio, in_channels, bias=False)
        )
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        b, c, h, w = x.shape
        avg_out = self.fc(self.avg_pool(x).view(b, c))  # [B, C]
        max_out = self.fc(self.max_pool(x).view(b, c))  # [B, C]
        attention = self.sigmoid(avg_out + max_out).view(b, c, 1, 1)
        return x * attention  # broadcast over H and W
```
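To make the data flow of `ChannelAttention` concrete, here is a minimal pure-Python sketch (no PyTorch) for a single image without a batch dimension: each channel is squeezed to its mean and its max, both vectors go through the same two-layer MLP, the two results are summed and passed through a sigmoid, and every channel is multiplied by its weight. The function names and the weight matrices `w1`/`w2` are hypothetical stand-ins for the learned `nn.Linear` layers (stored as out x in, like `nn.Linear.weight`), and biases are omitted to mirror `bias=False`.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(w, v):
    # w: list of rows (out_dim x in_dim), v: vector of length in_dim
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def shared_mlp(v, w1, w2):
    # Linear -> ReLU -> Linear without biases (mirrors bias=False)
    hidden = [max(0.0, h) for h in matvec(w1, v)]
    return matvec(w2, hidden)


def channel_attention(x, w1, w2):
    """x: list of C channels, each an H x W list of lists (one image)."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(row) for row in ch) for ch in x]
    logits = [a + m for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    weights = [sigmoid(z) for z in logits]
    # Scale every value of channel c by weights[c]
    out = [[[v * wc for v in row] for row in ch] for ch, wc in zip(x, weights)]
    return out, weights


# Two channels of a 2x2 feature map, bottleneck of size 1
x = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
w1 = [[0.0, 0.0]]    # 1 x 2
w2 = [[0.0], [0.0]]  # 2 x 1
out, weights = channel_attention(x, w1, w2)
print(weights)  # all-zero MLP weights give sigmoid(0) = 0.5 for every channel
```

With all-zero weights every channel is simply halved; with learned weights, channels whose pooled statistics push the logit up keep more of their signal, which is the "which features matter" role of channel attention.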

```python
# Spatial attention module: reweights each spatial position
class SpatialAttention(nn.Module):
    def __init__(self, kernel_size=7):
        super().__init__()
        # 2 input channels: the channel-wise mean map and the channel-wise max map
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        avg_out = torch.mean(x, dim=1, keepdim=True)     # [B, 1, H, W]
        max_out, _ = torch.max(x, dim=1, keepdim=True)   # [B, 1, H, W]
        pool_out = torch.cat([avg_out, max_out], dim=1)  # [B, 2, H, W]
        attention = self.conv(pool_out)                  # [B, 1, H, W]
        return x * self.sigmoid(attention)


# CBAM module: channel attention followed by spatial attention
class CBAM(nn.Module):
    def __init__(self, in_channels, ratio=16, kernel_size=7):
        super().__init__()
        self.channel_attn = ChannelAttention(in_channels, ratio)
        self.spatial_attn = SpatialAttention(kernel_size)

    def forward(self, x):
        x = self.channel_attn(x)
        x = self.spatial_attn(x)
        return x
```
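The spatial half of CBAM can be sketched the same way. The pure-Python version below (no PyTorch; `spatial_attention` and the kernel values are hypothetical) computes the per-pixel mean and max over channels, runs a 2-to-1 cross-correlation (what `nn.Conv2d` computes) with zero padding `k // 2` so the height and width are preserved, applies a sigmoid, and rescales every channel by the same per-pixel weight.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def spatial_attention(x, kernel):
    """x: C x H x W nested lists (one image); kernel: 2 x k x k weights, k odd."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(kernel[0])
    pad = k // 2
    # Per-pixel statistics across channels
    avg_map = [[sum(x[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    max_map = [[max(x[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    maps = [avg_map, max_map]

    attn = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the map
                            s += kernel[m][di][dj] * maps[m][ii][jj]
            attn[i][j] = sigmoid(s)
    # The same spatial weight map is applied to every channel
    out = [[[x[ch][i][j] * attn[i][j] for j in range(w)] for i in range(h)] for ch in range(c)]
    return out, attn


x = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 3.0], [2.0, 1.0]]]
zero_kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]  # 2 x 3 x 3
out, attn = spatial_attention(x, zero_kernel)
print(attn)  # an all-zero kernel gives sigmoid(0) = 0.5 at every position
```

Because channel attention answers "which channels" and spatial attention answers "where", `CBAM.forward` simply chains the two.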

```python
# Custom ResNet18 with a CBAM module inserted after each residual stage
class ResNet18_CBAM(nn.Module):
    def __init__(self, num_classes=10, pretrained=True, cbam_ratio=16, cbam_kernel=7):
        super().__init__()
        # Load ImageNet-pretrained ResNet18 (torchvision >= 0.13 deprecates `pretrained`
        # in favor of weights=models.ResNet18_Weights.DEFAULT)
        self.backbone = models.resnet18(pretrained=pretrained)

        # Replace the stem for 32x32 inputs: 3x3 stride-1 conv instead of 7x7 stride-2.
        # Note: this new conv1 is randomly initialized, not pretrained.
        self.backbone.conv1 = nn.Conv2d(
            in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1, bias=False
        )
        self.backbone.maxpool = nn.Identity()  # remove the original max-pooling layer

        # One CBAM module after each group of residual blocks
        self.cbam_layer1 = CBAM(in_channels=64, ratio=cbam_ratio, kernel_size=cbam_kernel)
        self.cbam_layer2 = CBAM(in_channels=128, ratio=cbam_ratio, kernel_size=cbam_kernel)
        self.cbam_layer3 = CBAM(in_channels=256, ratio=cbam_ratio, kernel_size=cbam_kernel)
        self.cbam_layer4 = CBAM(in_channels=512, ratio=cbam_ratio, kernel_size=cbam_kernel)

        # Replace the classification head
        self.backbone.fc = nn.Linear(in_features=512, out_features=num_classes)

    def forward(self, x):
        x = self.backbone.conv1(x)
        x = self.backbone.bn1(x)
        x = self.backbone.relu(x)  # [B, 64, 32, 32]

        # Stage 1 residual blocks + CBAM
        x = self.backbone.layer1(x)  # [B, 64, 32, 32]
        x = self.cbam_layer1(x)

        # Stage 2 residual blocks + CBAM
        x = self.backbone.layer2(x)  # [B, 128, 16, 16]
        x = self.cbam_layer2(x)

        # Stage 3 residual blocks + CBAM
        x = self.backbone.layer3(x)  # [B, 256, 8, 8]
        x = self.cbam_layer3(x)

        # Stage 4 residual blocks + CBAM
        x = self.backbone.layer4(x)  # [B, 512, 4, 4]
        x = self.cbam_layer4(x)

        # Global average pooling + classification
        x = self.backbone.avgpool(x)  # [B, 512, 1, 1]
        x = torch.flatten(x, 1)       # [B, 512]
        x = self.backbone.fc(x)       # [B, num_classes]
        return x
```
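The shape comments in `forward` follow from the usual output-size formula for convolution and pooling, out = floor((in + 2*padding - kernel) / stride) + 1. The pure-Python sketch below (no PyTorch; `out_size` and `trace` are hypothetical helpers, and the layer list is written out by hand on the assumption that, as in torchvision's ResNet18, `layer2` to `layer4` each open with a stride-2 3x3 conv with padding 1) compares the original ImageNet stem with the modified stem on a 32x32 input.

```python
def out_size(size, kernel, stride, padding):
    # Output size of a conv or pooling layer (dilation 1, floor mode)
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, stem):
    """Return the spatial size after the stem and after each residual stage."""
    sizes = {"input": size}
    for name, (k, s, p) in stem:
        size = out_size(size, k, s, p)
        sizes[name] = size
    # layer1 keeps the resolution; layer2-4 each halve it with a stride-2 3x3 conv
    sizes["layer1"] = size
    for name in ("layer2", "layer3", "layer4"):
        size = out_size(size, 3, 2, 1)
        sizes[name] = size
    return sizes


original_stem = [("conv1", (7, 2, 3)), ("maxpool", (3, 2, 1))]  # (kernel, stride, padding)
modified_stem = [("conv1", (3, 1, 1))]  # maxpool replaced by nn.Identity

print(trace(32, original_stem))
print(trace(32, modified_stem))
```

With the original stem a 32x32 image is already 8x8 before `layer1` and collapses to 1x1 at `layer4`, leaving CBAM's spatial attention nothing to work with; the modified stem keeps 32, 16, 8 and 4 pixels per side, matching the comments in `forward`.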

```python
# ==================== Data loading (modified part) ====================
# Dataset paths (ImageFolder layout: one subfolder per class)
train_data_dir = 'archive/Train_Test_Valid/Train'
test_data_dir = 'archive/Train_Test_Valid/test'

# Data preprocessing: augmentation is applied to the training set only
train_transform = transforms.Compose([
    transforms.RandomResizedCrop(32),  # random crop with random scale/aspect ratio, resized to 32x32
    transforms.RandomHorizontalFlip(),
    transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1),
    transforms.RandomRotation(15),
    transforms.ToTensor(),
    # ImageNet statistics, matching the pretrained ResNet18 backbone
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

test_transform = transforms.Compose([
    transforms.Resize(32),
    transforms.CenterCrop(32),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

# Load the datasets
train_dataset = datasets.ImageFolder(root=train_data_dir, transform=train_transform)
test_dataset = datasets.ImageFolder(root=test_data_dir, transform=test_transform)

# Number of classes, taken from ImageFolder (it counts only subdirectories,
# whereas os.listdir would also count stray files such as .DS_Store)
num_classes = len(train_dataset.classes)
print(f"Detected {num_classes} classes")

# On Windows, num_workers > 0 requires the script's entry code to sit under
# `if __name__ == "__main__":`; otherwise set num_workers=0
train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True, num_workers=4)
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False, num_workers=4)

print(f"Training set size: {len(train_dataset)} images")
print(f"Test set size: {len(test_dataset)} images")
```
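`datasets.ImageFolder` treats each subdirectory of the root as one class, whereas `os.listdir` returns every entry, files included, so `len(os.listdir(train_data_dir))` overcounts as soon as the folder holds a stray file. The stdlib-only sketch below builds a hypothetical temporary folder (`cat/`, `dog/` and a `.DS_Store` file, all made up for illustration) and compares the two counts; in the real script, `len(train_dataset.classes)` gives the directory-based count directly.

```python
import os
import tempfile


def count_classes_listdir(root):
    # Counts every entry, including stray files such as .DS_Store or a README
    return len(os.listdir(root))


def count_classes_dirs(root):
    # Counts only subdirectories, which is how ImageFolder discovers classes
    with os.scandir(root) as entries:
        return sum(1 for entry in entries if entry.is_dir())


with tempfile.TemporaryDirectory() as root:
    # Hypothetical layout: two class folders plus one stray file
    for cls in ("cat", "dog"):
        os.makedirs(os.path.join(root, cls))
    with open(os.path.join(root, ".DS_Store"), "w") as f:
        f.write("")
    listdir_count = count_classes_listdir(root)
    dir_count = count_classes_dirs(root)

print(listdir_count, dir_count)
```

A class count that is one too high silently gives the classifier head an extra, never-used output, so the mismatch does not raise an error and is easy to miss.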

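```python
# ==================== Training functions ====================
def set_trainable_layers(model, trainable_parts):
    """Freeze all parameters, then unfreeze those whose name contains any string in trainable_parts."""
    print(f"\n---> Unfreezing and setting as trainable: {trainable_parts}")
    for name, param in model.named_parameters():
        param.requires_grad = False
        for part in trainable_parts:
            if part in name:
                param.requires_grad = True
                break
```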
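`set_trainable_layers` decides trainability purely by substring matching on parameter names. The pure-Python sketch below applies the same rule to a hand-written list of names; the list is illustrative (names in the style `model.named_parameters()` produces for `ResNet18_CBAM`, not a dump of the real model), and `trainable_names` is a hypothetical helper.

```python
def trainable_names(param_names, trainable_parts):
    # Same rule as set_trainable_layers: trainable if the name contains any part
    return [n for n in param_names if any(part in n for part in trainable_parts)]


# Illustrative names in the style of model.named_parameters() for ResNet18_CBAM
names = [
    "backbone.conv1.weight",
    "backbone.bn1.weight",
    "backbone.layer1.0.conv1.weight",
    "backbone.layer3.0.conv1.weight",
    "backbone.layer4.1.bn2.bias",
    "backbone.fc.weight",
    "cbam_layer1.channel_attn.fc.0.weight",
    "cbam_layer4.spatial_attn.conv.weight",
]

stage1 = trainable_names(names, ["cbam", "backbone.fc"])
stage2 = trainable_names(names, ["cbam", "backbone.fc", "backbone.layer3", "backbone.layer4"])
print(stage1)
print(stage2)
```

Two details fall out of this: the dotted prefix `"backbone.conv1"` matches only the stem conv and not the `conv1` inside each residual block, and with the stage-1 and stage-2 lists shown here the replaced, randomly initialized `backbone.conv1` matches nothing, so it stays frozen until everything is unfrozen.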

def train_staged_finetuning(model, criterion, train_loader, test_loader, device, epochs):

optimizer = None

all_iter_losses, iter_indices = [], []

train_acc_history, test_acc_history = [], []

train_loss_history, test_loss_history = [], []

for epoch in range(1, epochs + 1):

epoch_start_time = time.time()

# 动态调整学习率和冻结层

if epoch == 1:

print("\n" + "="*50 + "\n🚀 **阶段 1:训练注意力模块和分类头**\n" + "="*50)

set_trainable_layers(model, ["cbam", "backbone.fc"])

optimizer = optim.Adam(filter(lambda p: p.requires_grad, model.parameters()), lr=1e-3)

elif epoch == 6:

print("\n" + "="*50 + "\n✈️ **阶段 2:解冻高层卷积层 (layer3, layer4)**\n" + "="*50)

set_trainable_layers(model, ["cbam", "backbone.fc", "backbone.layer3", "backbone.layer4"])

optimizer = optim.Adam(filter(lambda p: p.requires_grad, model.parameters()), lr=1e-4)

elif epoch == 21:

print("\n" + "="*50 + "\n🛰️ **阶段 3:解冻所有层,进行全局微调**\n" + "="*50)

for param in model.parameters(): param.requires_grad = True

optimizer = optim.Adam(model.parameters(), lr=1e-5)

# 训练循环

model.train()

running_loss, correct, total = 0.0, 0, 0

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

iter_loss = loss.item()

all_iter_losses.append(iter_loss)

iter_indices.append((epoch - 1) * len(train_loader) + batch_idx + 1)

running_loss += iter_loss

_, predicted = output.max(1)

total += target.size(0)

correct += predicted.eq(target).sum().item()

if (batch_idx + 1) % 100 == 0:

print(f'Epoch: {epoch}/{epochs} | Batch: {batch_idx+1}/{len(train_loader)} '

f'| 单Batch损失: {iter_loss:.4f} | 累计平均损失: {running_loss/(batch_idx+1):.4f}')

epoch_train_loss = running_loss / len(train_loader)

epoch_train_acc = 100. * correct / total

train_loss_history.append(epoch_train_loss)

train_acc_history.append(epoch_train_acc)

# 测试循环

model.eval()

test_loss, correct_test, total_test = 0, 0, 0

with torch.no_grad():

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += criterion(output, target).item()

_, predicted = output.max(1)

total_test += target.size(0)

correct_test += predicted.eq(target).sum().item()

epoch_test_loss = test_loss / len(test_loader)

epoch_test_acc = 100. * correct_test / total_test

test_loss_history.append(epoch_test_loss)

test_acc_history.append(epoch_test_acc)

print(f'Epoch {epoch}/{epochs} 完成 | 耗时: {time.time() - epoch_start_time:.2f}s | 训练准确率: {epoch_train_acc:.2f}% | 测试准确率: {epoch_test_acc:.2f}%')

# 绘图函数

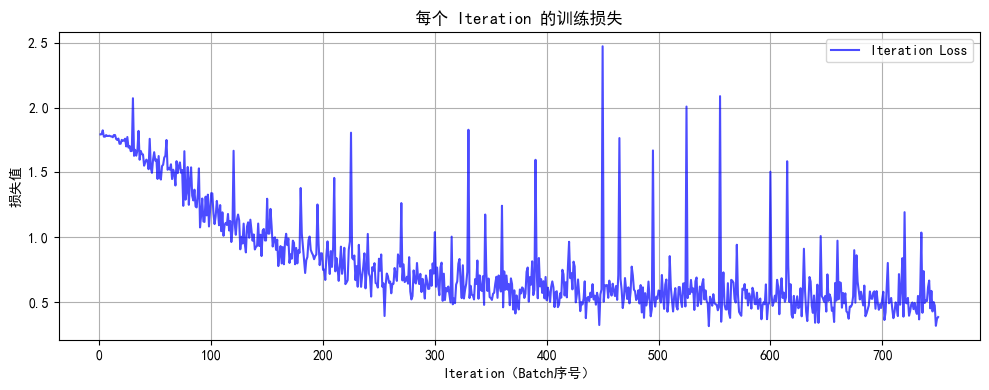

def plot_iter_losses(losses, indices):

plt.figure(figsize=(10, 4))

plt.plot(indices, losses, 'b-', alpha=0.7, label='Iteration Loss')

plt.xlabel('Iteration(Batch序号)')

plt.ylabel('损失值')

plt.title('每个 Iteration 的训练损失')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

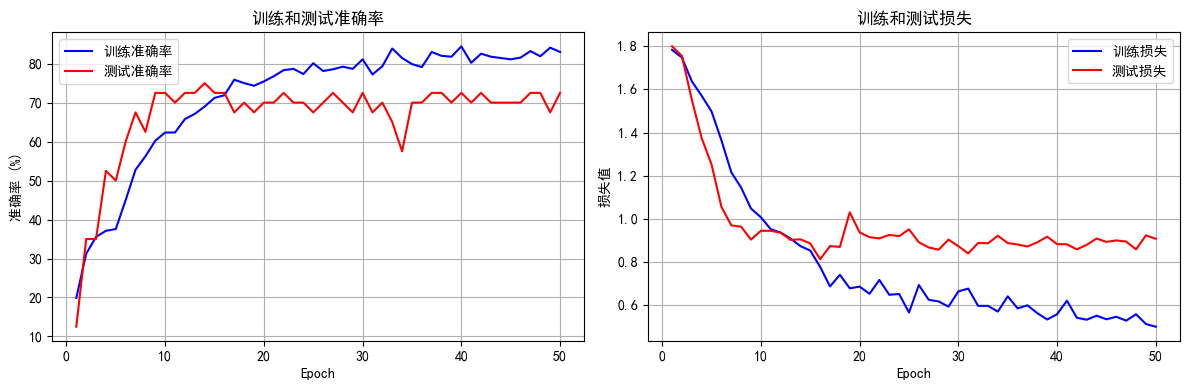

def plot_epoch_metrics(train_acc, test_acc, train_loss, test_loss):

epochs = range(1, len(train_acc) + 1)

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs, train_acc, 'b-', label='训练准确率')

plt.plot(epochs, test_acc, 'r-', label='测试准确率')

plt.xlabel('Epoch')

plt.ylabel('准确率 (%)')

plt.title('训练和测试准确率')

plt.legend(); plt.grid(True)

plt.subplot(1, 2, 2)

plt.plot(epochs, train_loss, 'b-', label='训练损失')

plt.plot(epochs, test_loss, 'r-', label='测试损失')

plt.xlabel('Epoch')

plt.ylabel('损失值')

plt.title('训练和测试损失')

plt.legend(); plt.grid(True)

plt.tight_layout()

plt.show()

print("\n训练完成! 开始绘制结果图表...")

plot_iter_losses(all_iter_losses, iter_indices)

plot_epoch_metrics(train_acc_history, test_acc_history, train_loss_history, test_loss_history)

return epoch_test_acc
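The staged schedule in `train_staged_finetuning` is driven entirely by the epoch counter: the optimizer is rebuilt at epochs 1, 6 and 21 and kept unchanged in between. The pure-Python sketch below restates that schedule as a lookup so the stage lengths can be checked without training; `stage_for_epoch` and `stage_lengths` are hypothetical helpers, not part of the script.

```python
def stage_for_epoch(epoch):
    """Return (stage, learning rate) in effect during a 1-based epoch."""
    if epoch < 6:
        return 1, 1e-3  # attention modules + classifier head
    if epoch < 21:
        return 2, 1e-4  # + layer3 and layer4
    return 3, 1e-5      # all layers


def stage_lengths(total_epochs):
    # Count how many epochs each stage receives
    counts = {}
    for epoch in range(1, total_epochs + 1):
        stage, _ = stage_for_epoch(epoch)
        counts[stage] = counts.get(stage, 0) + 1
    return counts


print(stage_lengths(50))
```

With `epochs = 50` this gives 5, 15 and 30 epochs for the three stages, so most of the budget goes to full fine-tuning at 1e-5; setting `epochs` to 20 or less would silently skip stage 3.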

```python
# ==================== Main program ====================
model = ResNet18_CBAM(num_classes=num_classes).to(device)
criterion = nn.CrossEntropyLoss()
epochs = 50

print("Starting training of the ResNet18+CBAM model with the staged fine-tuning strategy...")
final_accuracy = train_staged_finetuning(model, criterion, train_loader, test_loader, device, epochs)
print(f"Training finished! Final test accuracy (last epoch): {final_accuracy:.2f}%")

# Save the model weights
torch.save(model.state_dict(), 'resnet18_cbam_custom.pth')
print("Model saved to: resnet18_cbam_custom.pth")
```

@浙大疏锦行
