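1. Preface

In May 2025, Spring AI 1.0.0 was finally released as GA. It is not just another library: it is a strategic move that puts Java and Spring at the forefront of the AI revolution and brings a powerful, comprehensive AI engineering solution to the Java ecosystem. Many enterprise applications run critical business on Spring Boot, and Spring AI 1.0.0 gives their developers the ability to connect those applications seamlessly to cutting-edge AI models.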

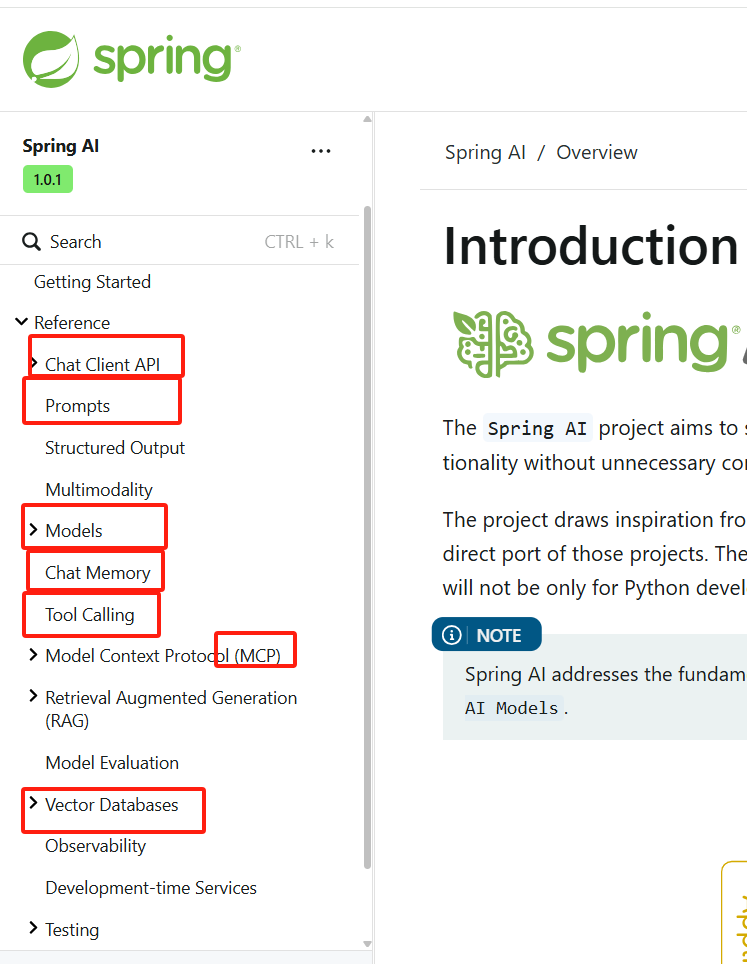

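The official documentation describes many of these capabilities, all aimed at simplifying the development of LLM-powered applications.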

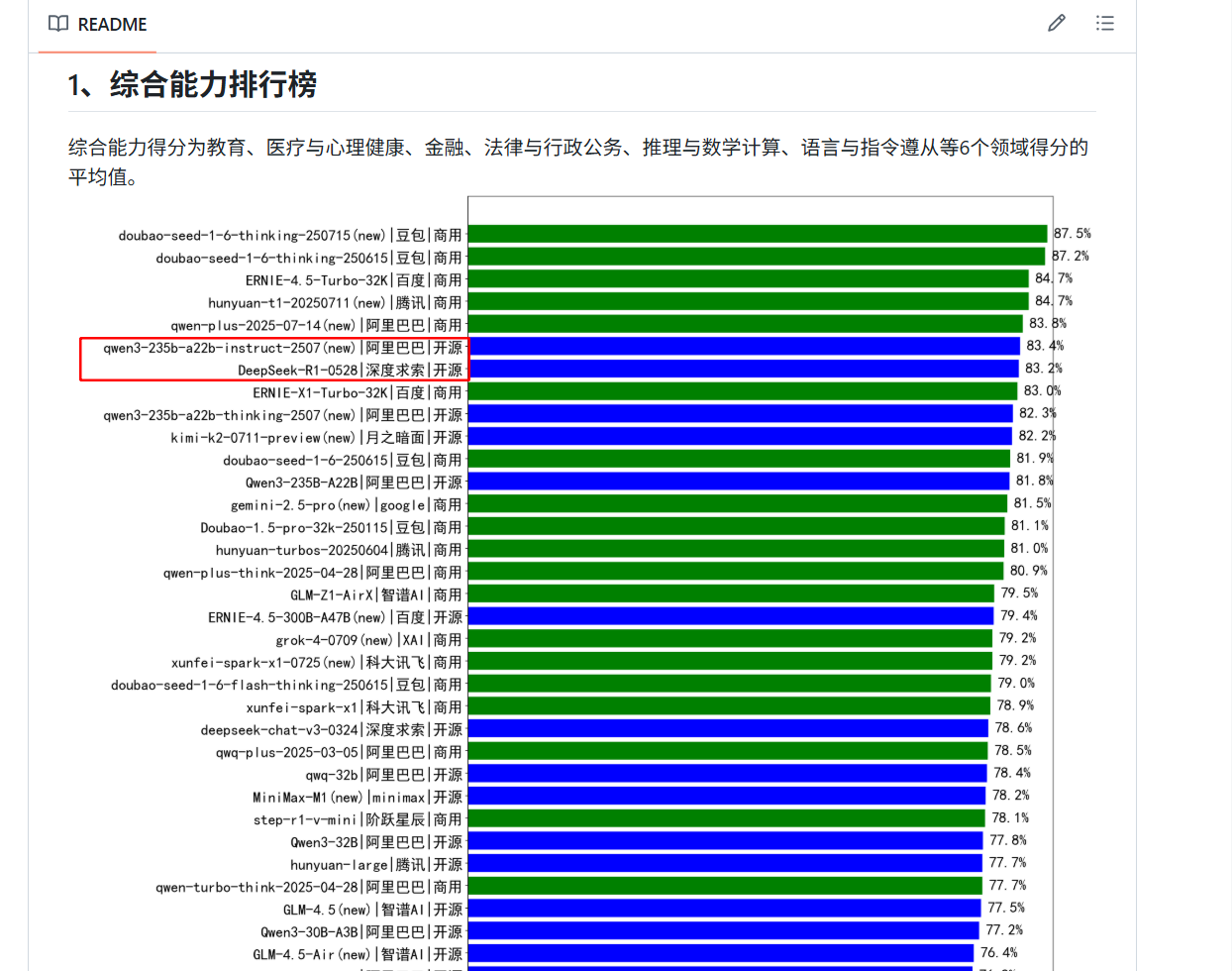

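In addition, when choosing an open-source model (such as DeepSeek-R1, 0528 release) or one of its distilled variants, you can refer to the open-source LLM leaderboards on GitHub.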

2. Runtime environment

Spring AI is built on Spring Boot 3.x and requires JDK 17 or later.

```xml
<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>3.4.5</version>
    <relativePath/> <!-- lookup parent from repository -->
</parent>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>1.0.0</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

3. Applying for an API key

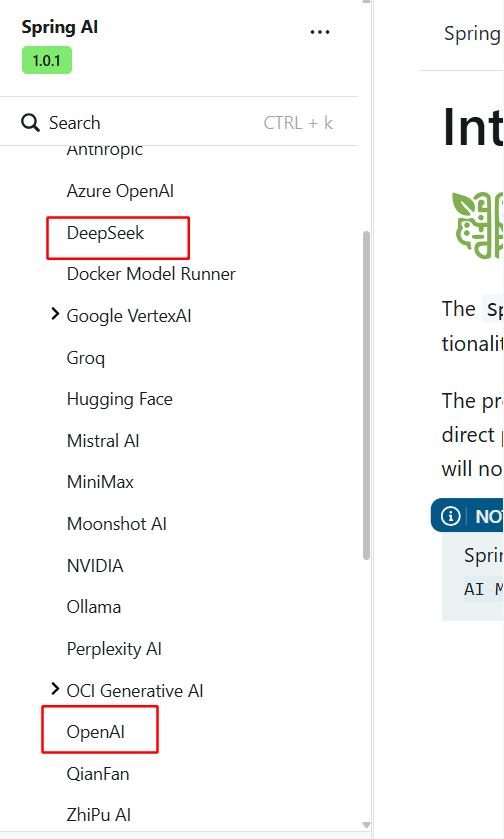

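Spring AI provides portable model abstractions for many AI providers, covering multimodal capabilities (image, speech and video recognition) as well as the most basic LLM text chat, for example Claude, OpenAI, DeepSeek and ZhiPu.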

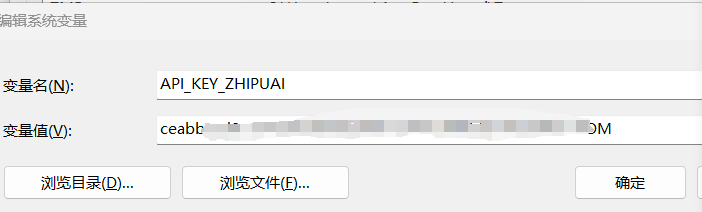

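This article uses ZhiPu AI's models for the demos; new users receive a free quota that is valid for a limited period.

You can also run models locally with Ollama: https://ollama.com/. With Ollama you no longer need to set up a runtime environment yourself (many models otherwise depend on a Python environment).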

4. Complete pom

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.4.5</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>spring-ai-demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>spring-ai-demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>17</java.version>
        <fastjson.version>2.0.53</fastjson.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- ZhiPu AI -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-zhipuai</artifactId>
        </dependency>
        <!-- DeepSeek -->
        <!--<dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-deepseek</artifactId>
        </dependency>-->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-redis</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>com.alibaba.fastjson2</groupId>
            <artifactId>fastjson2</artifactId>
            <version>${fastjson.version}</version>
        </dependency>
        <!-- MCP server -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-mcp-server</artifactId>
        </dependency>
        <!-- MCP client: supports both SSE and stdio -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-mcp-client-webflux</artifactId>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>1.0.0</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <!-- Spring Boot 3.x and Spring AI require Java 17+, matching java.version -->
                    <source>17</source>
                    <target>17</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

For Alibaba's models, use the `spring-ai-alibaba-starter-dashscope` dependency instead.

5. application.yml

```yaml
server:
  servlet:
    context-path: /ai
spring:
  application:
    name: spring-ai-demo
  ai:
    chat:
      client:
        # disable the default auto-configured ChatClient
        enabled: false
    zhipuai:
      # read from an environment variable
      api-key: ${API_KEY_ZHIPUAI}
      chat:
        options:
          model: glm-4-plus
          temperature: 0.7
  data:
    redis:
      host: localhost
      port: 6379
      password: 123123!
      lettuce:
        # pooling only takes effect when commons-pool2 is on the classpath
        pool:
          min-idle: 0
          max-idle: 8
          max-active: 8
          max-wait: -1ms
```

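6. Features and demos

6.1 The simplest chat

Configure the ChatClient:

```java
/**
 * Default client (declared in a @Configuration class)
 */
@Bean
public ChatClient zhiPuAiChatClient(ZhiPuAiChatModel chatModel) {
    return ChatClient.create(chatModel);
}
```

Define the endpoints: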

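```java
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.core.ParameterizedTypeReference;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

import java.util.List;

/**
 * The simplest chat
 *
 * @author stone
 * @date 2025/6/26 16:11
 */
@RestController
@RequestMapping("/case1")
@Slf4j
public class Case1Controller {

    @Resource
    @Qualifier("zhiPuAiChatClient")
    private ChatClient chatClient;

    /**
     * Return the whole result at once
     */
    @GetMapping("/chat")
    public String chat(@RequestParam("input") String input) {
        // input=Tell me a joke
        return this.chatClient.prompt()
                .user(input)
                .call()
                .content();
    }

    /**
     * Convert the answer into entities.
     * ActFilm is a DTO that is not shown here (e.g. an actor name plus a list of film titles).
     */
    @GetMapping("/entity")
    public List<ActFilm> entity(@RequestParam("input") String input) {
        // input=List 10 films each for Andy Lau and Liu Yifei
        return this.chatClient.prompt()
                .user(input)
                .call()
                .entity(new ParameterizedTypeReference<List<ActFilm>>() {
                });
    }

    /**
     * Streaming response
     */
    @GetMapping(value = "/flux", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> flux(@RequestParam("input") String input) {
        // input=Tell me a joke
        return this.chatClient.prompt()
                .user(input)
                .stream()
                .content();
    }

    /**
     * Dynamic input through a template parameter
     */
    @GetMapping(value = "/fluxDynamic", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> fluxDynamic(@RequestParam("input") String input, @RequestParam("name") String name) {
        // input is not used here: the user message comes from the template, with {name} filled in
        return this.chatClient.prompt()
                .user(promptUserSpec -> promptUserSpec
                        .text("Tell me how many people in China are named {name}")
                        .param("name", name)
                )
                .stream()
                .content();
    }
}
```

`.user`: the user prompt (user message).

`.call`: synchronous response; the whole result is returned at once.

`.stream`: streaming response, delivered as SSE (`text/event-stream`).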
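To make the difference between `.call()` and `.stream()` concrete, here is a plain-JDK sketch (no Spring involved) of what the HTTP client receives in each case. It assumes the model's answer arrives as a list of text chunks: the call-style method joins them into one string, while the stream-style method writes each chunk as a `text/event-stream` event (`data:` lines followed by a blank line), which is roughly what is sent for a `Flux<String>` served with `MediaType.TEXT_EVENT_STREAM_VALUE`. The class and method names are hypothetical.

```java
import java.util.List;

public class SseFramingSketch {

    // Synchronous style: the whole answer comes back in one piece, like .call().content()
    public static String callStyle(List<String> chunks) {
        return String.join("", chunks);
    }

    // Streaming style: every chunk becomes one SSE event; each line of the chunk
    // gets its own "data:" prefix and the event ends with an empty line
    public static String streamStyle(List<String> chunks) {
        StringBuilder out = new StringBuilder();
        for (String chunk : chunks) {
            for (String line : chunk.split("\n", -1)) {
                out.append("data:").append(line).append('\n');
            }
            out.append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        List<String> chunks = List.of("Why did ", "the chicken ", "cross the road?");
        System.out.println(callStyle(chunks));
        System.out.print(streamStyle(chunks));
    }
}
```

With streaming, the browser can render each event as soon as it arrives instead of waiting for the full answer.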

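6.2 Default system text

Predefine ChatClient beans with a system prompt:

```java
/**
 * Default system text with a placeholder parameter
 */
@Bean
public ChatClient paramTextChatClient(ZhiPuAiChatModel chatModel) {
    return ChatClient.builder(chatModel)
            .defaultSystem("You are an intelligent chatbot; answer questions from the perspective of a {role}")
            .build();
}

/**
 * Default system text
 */
@Bean
public ChatClient defaultTextChatClient(ZhiPuAiChatModel chatModel) {
    return ChatClient.builder(chatModel)
            .defaultSystem("You are an intelligent chatbot; answer questions from the perspective of a wicked witch")
            .build();
}
```

Define the endpoints: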

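```java
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

/**
 * Default system text
 *
 * @author stone
 * @date 2025/6/30 15:11
 */
@RestController
@RequestMapping("/case2")
@Slf4j
public class Case2Controller {

    @Resource
    @Qualifier("defaultTextChatClient")
    private ChatClient defaultTextChatClient;

    /**
     * Default system text
     */
    @GetMapping(value = "/flux", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> flux(@RequestParam("input") String input) {
        // input=Tell me a joke
        return this.defaultTextChatClient.prompt()
                .user(input)
                .stream()
                .content();
    }

    @Resource
    @Qualifier("paramTextChatClient")
    private ChatClient paramTextChatClient;

    /**
     * Dynamic system text: {role} is filled in per request
     */
    @GetMapping(value = "/chat", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chat(@RequestParam("input") String input, @RequestParam("role") String role) {
        // input=Tell the story of the Old Summer Palace (Yuanmingyuan) in under 500 words
        // role=math teacher / wicked witch
        return this.paramTextChatClient.prompt()
                .system(promptSystemSpec -> promptSystemSpec.param("role", role))
                .user(input)
                .stream()
                .content();
    }
}
```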
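The `{role}` and `{name}` placeholders are filled in by Spring AI's prompt template support from the values passed to `.param(...)`. The plain-JDK sketch below only illustrates the idea with a regular expression; it is a simplified stand-in, not Spring AI's renderer, and the class name is hypothetical. It throws when a placeholder has no value, so a forgotten parameter is caught early instead of reaching the model as literal `{role}`.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class PlaceholderSketch {

    // Matches {name}-style placeholders made of letters, digits or underscores
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    public static String render(String template, Map<String, ?> params) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder sb = new StringBuilder();
        while (m.find()) {
            String key = m.group(1);
            if (!params.containsKey(key)) {
                throw new IllegalArgumentException("No value for placeholder: " + key);
            }
            // quoteReplacement stops '$' and '\' in values from being read as group references
            m.appendReplacement(sb, Matcher.quoteReplacement(String.valueOf(params.get(key))));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    public static void main(String[] args) {
        String system = "You are an intelligent chatbot; answer from the perspective of a {role}";
        System.out.println(render(system, Map.of("role", "math teacher")));
    }
}
```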

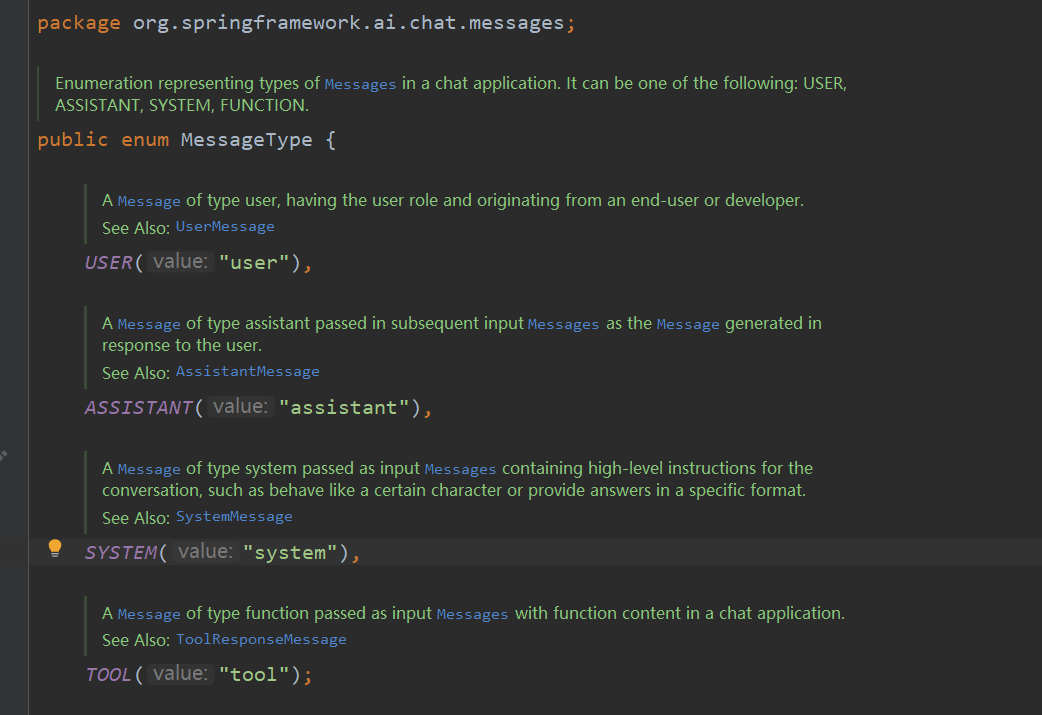

Message types

Each part of the prompt plays a distinct, well-defined role in the interaction.
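A prompt sent to a chat model is a list of messages, each tagged with a role. In the plain-JDK sketch below, the `Role` enum mirrors the four message types Spring AI's `MessageType` defines (USER, ASSISTANT, SYSTEM, TOOL), but the `Msg` record and `assemble` method are hypothetical. It shows the usual ordering: the system text first, then earlier turns, then the new user input.

```java
import java.util.ArrayList;
import java.util.List;

public class PromptRolesSketch {

    public enum Role { SYSTEM, USER, ASSISTANT, TOOL }

    public record Msg(Role role, String text) { }

    // System instructions first, then earlier turns (e.g. from chat memory), then the new question
    public static List<Msg> assemble(String systemText, List<Msg> history, String userInput) {
        List<Msg> prompt = new ArrayList<>();
        prompt.add(new Msg(Role.SYSTEM, systemText));
        prompt.addAll(history);
        prompt.add(new Msg(Role.USER, userInput));
        return prompt;
    }

    public static void main(String[] args) {
        List<Msg> history = List.of(
                new Msg(Role.USER, "My name is Stone"),
                new Msg(Role.ASSISTANT, "Nice to meet you, Stone!"));
        for (Msg m : assemble("Answer as a wicked witch", history, "What is my name?")) {
            System.out.println(m.role() + ": " + m.text());
        }
    }
}
```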

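6.3 Advisors

Advisors provide a powerful, flexible way to intercept and enrich AI interactions. When several advisors are configured, the changes made by one are passed on to the next.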

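```java
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.ChatClientMessageAggregator;
import org.springframework.ai.chat.client.ChatClientRequest;
import org.springframework.ai.chat.client.ChatClientResponse;
import org.springframework.ai.chat.client.advisor.SimpleLoggerAdvisor;
import org.springframework.ai.chat.client.advisor.api.CallAdvisorChain;
import org.springframework.ai.chat.client.advisor.api.StreamAdvisorChain;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

/**
 * @author stone
 * @date 2025/6/30 15:41
 */
@RestController
@RequestMapping("/case3")
@Slf4j
public class Case3Controller {

    @Resource
    @Qualifier("paramTextChatClient")
    private ChatClient chatClient;

    /**
     * The simplest advisor: logging
     * <p>
     * Requires logging.level.org.springframework.ai.chat.client.advisor=debug
     */
    @GetMapping(value = "/chat", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chat(@RequestParam("input") String input, @RequestParam("role") String role) {
        // input=Tell the story of the Old Summer Palace in under 500 words
        // role=wicked witch
        return this.chatClient.prompt()
                .system(promptSystemSpec -> promptSystemSpec.param("role", role))
                .advisors(new SimpleLoggerAdvisor())
                .user(input)
                .stream()
                .content();
    }

    /**
     * Customize what gets logged
     */
    @GetMapping(value = "/chat2", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chat2(@RequestParam("input") String input, @RequestParam("role") String role) {
        // input=Tell the story of the Old Summer Palace in under 500 words
        // role=wicked witch
        return this.chatClient.prompt()
                .system(promptSystemSpec -> promptSystemSpec.param("role", role))
                .advisors(SimpleLoggerAdvisor.builder()
                        .requestToString(req -> "Request: " + req.prompt().getUserMessage().getText())
                        .responseToString(resp -> "Response: " + resp.getResult().getOutput().getText())
                        .build())
                .user(input)
                .stream()
                .content();
    }

    /**
     * Customize the logging with a subclass
     */
    @GetMapping(value = "/chat3", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chat3(@RequestParam("input") String input, @RequestParam("role") String role) {
        // input=Tell the story of the Old Summer Palace in under 500 words
        // role=wicked witch
        return this.chatClient.prompt()
                .system(promptSystemSpec -> promptSystemSpec.param("role", role))
                .advisors(new SimpleLogAdvisor())
                .user(input)
                .stream()
                .content();
    }

    /**
     * Custom logging advisor (uses the outer class's Lombok logger)
     */
    public static class SimpleLogAdvisor extends SimpleLoggerAdvisor {

        @Override
        public ChatClientResponse adviseCall(ChatClientRequest chatClientRequest, CallAdvisorChain callAdvisorChain) {
            log.info("Custom log, request: {}", chatClientRequest.prompt().getUserMessage().getText());
            ChatClientResponse chatClientResponse = super.adviseCall(chatClientRequest, callAdvisorChain);
            log.info("Custom log, response: {}", chatClientResponse.chatResponse().getResult().getOutput().getText());
            return chatClientResponse;
        }

        @Override
        public Flux<ChatClientResponse> adviseStream(ChatClientRequest chatClientRequest, StreamAdvisorChain streamAdvisorChain) {
            log.info("Custom log, request: {}", chatClientRequest.prompt().getUserMessage().getText());
            Flux<ChatClientResponse> chatClientResponses = streamAdvisorChain.nextStream(chatClientRequest);
            // Aggregate the streamed chunks so the complete response is logged once at the end
            return new ChatClientMessageAggregator().aggregateChatClientResponse(chatClientResponses, this::logAggregatedResponse);
        }

        private void logAggregatedResponse(ChatClientResponse chatClientResponse) {
            log.info("Custom log, response: {}", chatClientResponse.chatResponse().getResult().getOutput().getText());
        }
    }
}
```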
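The advisor chain is essentially a chain of responsibility: each advisor may modify the request before handing it to the next one, and may post-process the response on the way back. The plain-JDK sketch below models that with strings instead of `ChatClientRequest`/`ChatClientResponse`; the `Advisor` and `Chain` types are hypothetical simplifications, not Spring AI's interfaces (in Spring AI the execution order is driven by each advisor's `getOrder()` value, lower first).

```java
import java.util.List;
import java.util.function.UnaryOperator;

public class AdvisorChainSketch {

    // One interception step: may change the request, call the rest of the chain, then change the response
    @FunctionalInterface
    public interface Advisor {
        String advise(String request, Chain chain);
    }

    public static final class Chain {
        private final List<Advisor> advisors;
        private final UnaryOperator<String> model;
        private final int index;

        Chain(List<Advisor> advisors, UnaryOperator<String> model, int index) {
            this.advisors = advisors;
            this.model = model;
            this.index = index;
        }

        // Hand the (possibly modified) request to the next advisor, or to the model at the end
        public String next(String request) {
            if (index < advisors.size()) {
                return advisors.get(index).advise(request, new Chain(advisors, model, index + 1));
            }
            return model.apply(request);
        }
    }

    public static String run(List<Advisor> advisors, UnaryOperator<String> model, String request) {
        return new Chain(advisors, model, 0).next(request);
    }

    public static void main(String[] args) {
        Advisor a = (req, chain) -> chain.next(req + "[A]") + "<A>";
        Advisor b = (req, chain) -> chain.next(req + "[B]") + "<B>";
        UnaryOperator<String> model = req -> "answer(" + req + ")";
        // prints answer(hi[A][B])<B><A>
        System.out.println(run(List.of(a, b), model, "hi"));
    }
}
```

The output shows both directions: requests pass through A then B, while responses come back through B then A.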

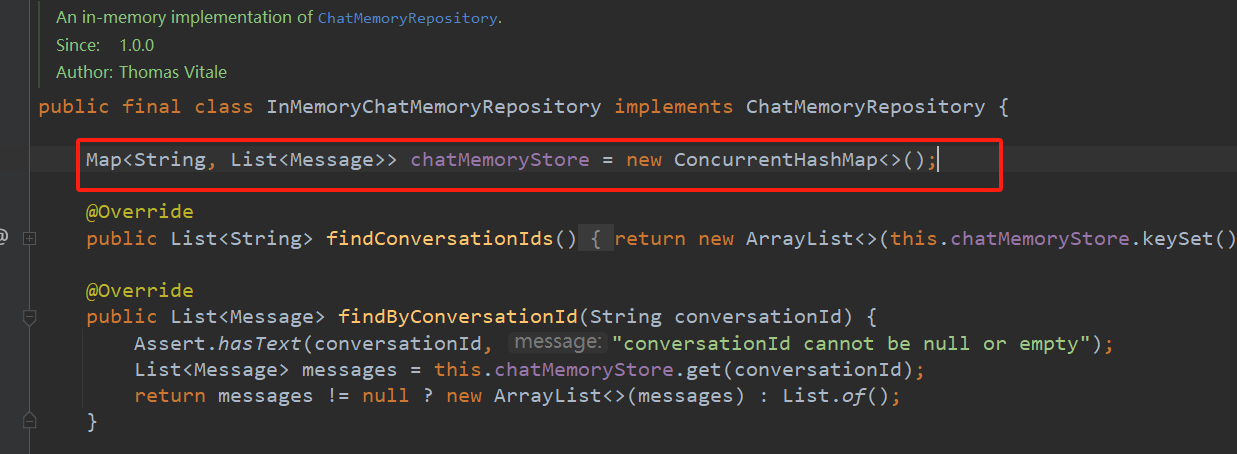

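6.4 Chat memory

Chat memory enables multi-turn conversations by persisting the exchanged messages and retrieving them dynamically for each new request.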
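Conceptually, a window-based chat memory is a per-conversation list that keeps only the most recent N messages; Spring AI's `MessageWindowChatMemory` (default 20 messages) follows this idea. The plain-JDK sketch below is a hypothetical simplification for illustration only: it stores plain strings, and unlike the real class it gives system messages no special treatment.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class WindowMemorySketch {

    private final int maxMessages;
    // One message window per conversation ID
    private final Map<String, Deque<String>> store = new ConcurrentHashMap<>();

    public WindowMemorySketch(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    public void add(String conversationId, String message) {
        Deque<String> window = store.computeIfAbsent(conversationId, id -> new ArrayDeque<>());
        synchronized (window) {
            window.addLast(message);
            // Evict the oldest messages once the window is full
            while (window.size() > maxMessages) {
                window.removeFirst();
            }
        }
    }

    public List<String> get(String conversationId) {
        Deque<String> window = store.get(conversationId);
        if (window == null) {
            return List.of();
        }
        synchronized (window) {
            return new ArrayList<>(window);
        }
    }

    public void clear(String conversationId) {
        store.remove(conversationId);
    }

    public static void main(String[] args) {
        WindowMemorySketch memory = new WindowMemorySketch(2);
        memory.add("user-1", "My name is Stone");
        memory.add("user-1", "Nice to meet you");
        memory.add("user-1", "What is my name?");
        System.out.println(memory.get("user-1"));
    }
}
```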

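```java
public Case4Controller(ZhiPuAiChatModel chatModel,
                       ChatMemory chatMemory) {
    this.chatClient = ChatClient
            .builder(chatModel)
            .defaultAdvisors(
                    PromptChatMemoryAdvisor.builder(chatMemory).build()
            )
            .build();
}

private ChatClient chatClient;
```

Backed by JVM memory: if no conversation ID is passed, every request shares the same default conversation; to keep users apart, pass a unique ID through the `ChatMemory.CONVERSATION_ID` advisor parameter.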

```java
/**
 * Chat memory, in-memory
 * <p>
 * MessageWindowChatMemory: keeps at most 20 messages by default
 * InMemoryChatMemoryRepository: backed by a map
 */
// @GetMapping(value = "/chat", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
@GetMapping(value = "/chat")
public Flux<String> chat(@RequestParam("input") String input) {
    // input=What is my name?
    return this.chatClient.prompt()
            .user(input)
            .stream()
            .content();
}

/**
 * Chat memory, separated per user
 */
@GetMapping(value = "/chat2")
public Flux<String> chat2(@RequestParam("input") String input,
                          @RequestParam("userId") String userId) {
    return this.chatClient.prompt()
            .user(input)
            .advisors(a -> a.param(ChatMemory.CONVERSATION_ID, userId))
            .stream()
            .content();
}
```

Redis-based multi-turn chat memory

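```java
import lombok.Data;

import java.io.Serializable;

/**
 * @author stone
 * @date 2025/7/14 10:20
 */
@Data
public class ChatBO implements Serializable {

    /**
     * Unique ID of the user's conversation
     */
    private String chatId;

    /**
     * Message type
     */
    private String type;

    /**
     * Message text
     */
    private String text;
}
```

```java
import com.alibaba.fastjson2.JSON;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.messages.AssistantMessage;
import org.springframework.ai.chat.messages.Message;
import org.springframework.ai.chat.messages.MessageType;
import org.springframework.ai.chat.messages.SystemMessage;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.data.redis.core.RedisTemplate;

import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.TimeUnit;

/**
 * @author stone
 * @date 2025/7/14 10:19
 */
@Slf4j
// Registered through the chatMemory @Bean method below; adding @Component too would create a second ChatMemory bean
public class ChatRedisMemory implements ChatMemory {

    private static final String KEY_PREFIX = "chat:history:";
    private static final String THINK_END = "</think>";

    private final RedisTemplate<String, Object> redisTemplate;

    public ChatRedisMemory(RedisTemplate<String, Object> redisTemplate) {
        this.redisTemplate = redisTemplate;
    }

    @Override
    public void add(String conversationId, List<Message> messages) {
        String key = KEY_PREFIX + conversationId;
        List<String> list = new ArrayList<>();
        for (Message msg : messages) {
            String raw = msg.getText() == null ? "" : msg.getText();
            // Drop a reasoning model's <think>...</think> block so only the final answer is stored
            int end = raw.lastIndexOf(THINK_END);
            String text = end >= 0 ? raw.substring(end + THINK_END.length()).trim() : raw;
            // Convert to a serializable object
            ChatBO bo = new ChatBO();
            bo.setChatId(conversationId);
            bo.setType(msg.getMessageType().getValue());
            bo.setText(text);
            list.add(JSON.toJSONString(bo));
        }
        if (list.isEmpty()) {
            return; // RPUSH needs at least one value
        }
        redisTemplate.opsForList().rightPushAll(key, list.toArray());
        redisTemplate.expire(key, 30, TimeUnit.MINUTES);
    }

    @Override
    public List<Message> get(String conversationId) {
        String key = KEY_PREFIX + conversationId;
        Long size = redisTemplate.opsForList().size(key);
        if (size == null || size == 0) {
            return Collections.emptyList();
        }
        List<Object> listTmp = redisTemplate.opsForList().range(key, 0, -1);
        List<Message> result = new ArrayList<>();
        for (Object obj : listTmp) {
            ChatBO chat = JSON.parseObject(obj.toString(), ChatBO.class);
            if (MessageType.USER.getValue().equals(chat.getType())) {
                result.add(new UserMessage(chat.getText()));
            } else if (MessageType.ASSISTANT.getValue().equals(chat.getType())) {
                result.add(new AssistantMessage(chat.getText()));
            } else if (MessageType.SYSTEM.getValue().equals(chat.getType())) {
                result.add(new SystemMessage(chat.getText()));
            }
        }
        return result;
    }

    @Override
    public void clear(String conversationId) {
        redisTemplate.delete(KEY_PREFIX + conversationId);
    }
}
```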
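The `</think>` handling in `add` exists because reasoning models such as DeepSeek-R1 may prefix their answer with a `<think>...</think>` block, which is not worth storing as history. Pulled out on its own, the logic can be checked in isolation; this plain-JDK helper is a hypothetical extraction (the class name is made up) that keeps only the text after the last `</think>` tag and tolerates `null`.

```java
public class ThinkTagSketch {

    private static final String END_TAG = "</think>";

    // Returns the final answer without a leading <think>...</think> reasoning block
    public static String stripThinking(String text) {
        if (text == null) {
            return "";
        }
        int end = text.lastIndexOf(END_TAG);
        return end >= 0 ? text.substring(end + END_TAG.length()).trim() : text;
    }

    public static void main(String[] args) {
        System.out.println(stripThinking("<think>The user wants a joke.</think>\nHere is one..."));
    }
}
```

Unlike `split("</think>")`, this does not fall back to the reasoning text when nothing follows the closing tag, because `split` drops the trailing empty string and leaves only the `<think>` part.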

```java
/**
 * Redis-backed chat memory
 */
@Bean
public ChatMemory chatMemory(RedisTemplate<String, Object> redisTemplate) {
    return new ChatRedisMemory(redisTemplate);
}
```

The controller constructor is unchanged; it now receives the Redis-backed `ChatMemory`:

```java
public Case4Controller(ZhiPuAiChatModel chatModel,
                       ChatMemory chatMemory) {
    this.chatClient = ChatClient
            .builder(chatModel)
            .defaultAdvisors(
                    PromptChatMemoryAdvisor.builder(chatMemory).build()
            )
            .build();
}
```

```java
/**
 * Chat memory, Redis-based
 */
@GetMapping(value = "/chat3")
public Flux<String> chat3(@RequestParam("input") String input,
                          @RequestParam("userId") String userId) {
    return this.chatClient.prompt()
            .user(input)
            .advisors(a -> a.param(ChatMemory.CONVERSATION_ID, userId))
            .stream()
            .content();
}
```

The next step is a multi-tier memory structure that imitates human memory: recent (clear), mid-term (fuzzy) and long-term (key points). This is where vector databases and RAG come in.

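6.5 Using @Tool

Declarative function calling: `@Tool` turns a method into a tool, and the model is told in advance which tools are available. When there are too many tools, they can be stored in a vector database.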

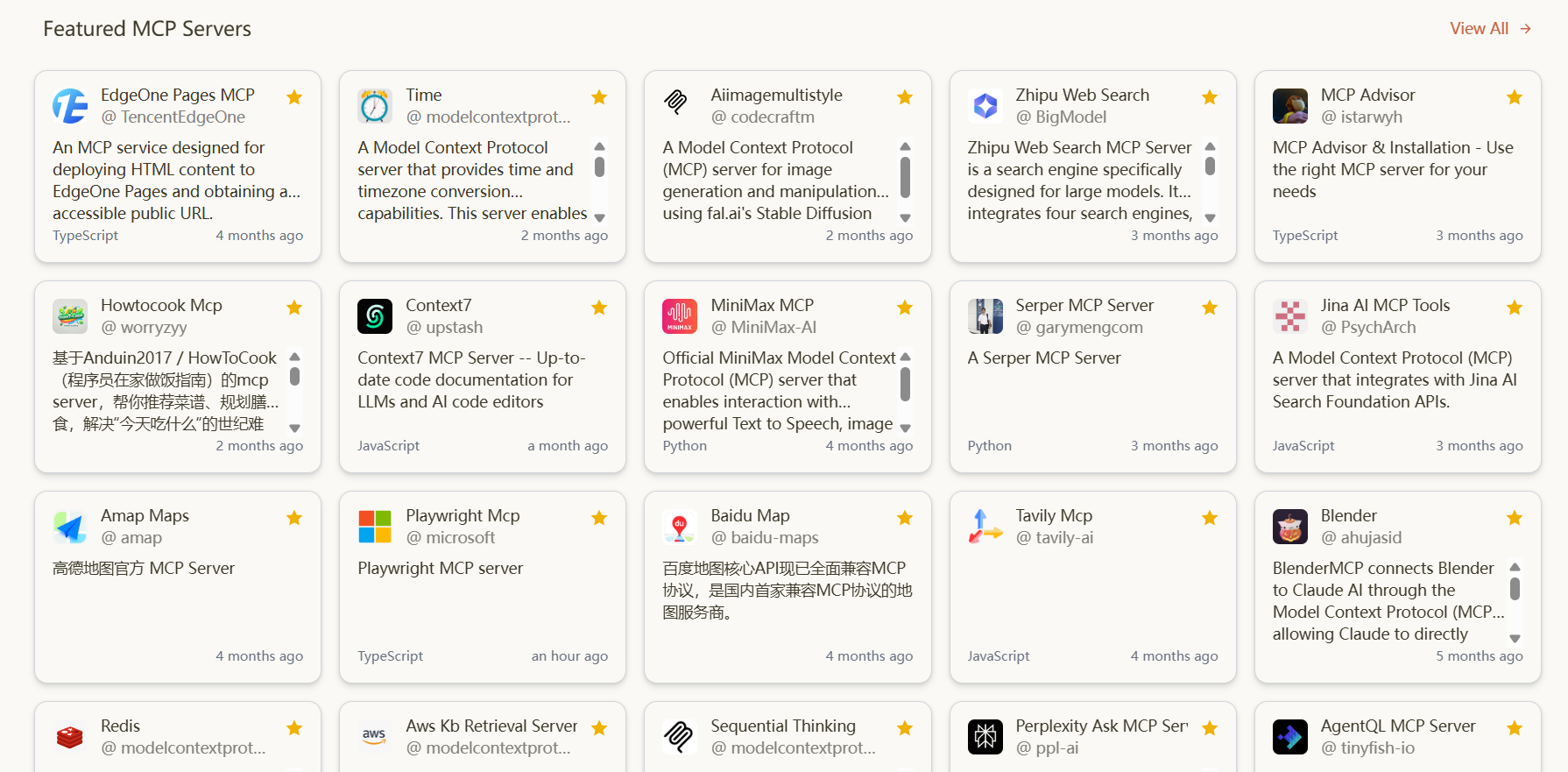

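For third-party tools such as Baidu Weather or AMap (Gaode) location services, it is impractical for every integrating system to write its own parsing. MCP (Model Context Protocol) therefore standardizes the format using JSON-RPC 2.0 (JSON messages).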
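For a feel of what travels over the wire, here is a plain-JDK sketch that builds an MCP `tools/call` request as a JSON-RPC 2.0 message (`jsonrpc`, `id`, `method`, and `params` carrying the tool `name` and its `arguments`). It hand-writes JSON to stay dependency-free and only supports integer arguments; a real client would use a JSON library, and the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

public class JsonRpcSketch {

    // Minimal JSON string escaping (quotes, backslashes and newlines only)
    public static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                default -> sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Builds {"jsonrpc":"2.0","id":..,"method":"tools/call","params":{"name":..,"arguments":{..}}}
    public static String toolsCall(int id, String toolName, Map<String, Integer> arguments) {
        String args = arguments.entrySet().stream()
                .map(e -> quote(e.getKey()) + ":" + e.getValue())
                .collect(Collectors.joining(",", "{", "}"));
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\""
                + ",\"params\":{\"name\":" + quote(toolName) + ",\"arguments\":" + args + "}}";
    }

    public static void main(String[] args) {
        Map<String, Integer> arguments = new LinkedHashMap<>();
        arguments.put("a", 1);
        arguments.put("b", 1);
        System.out.println(toolsCall(1, "add", arguments));
    }
}
```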

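```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.tool.annotation.Tool;
import org.springframework.ai.tool.annotation.ToolParam;
import org.springframework.stereotype.Component;

/**
 * Tools
 *
 * @author stone
 * @date 2025/7/4 10:42
 */
@Component
@Slf4j
public class OrderTools {

    /**
     * For example, unsubscribing or cancelling an order
     */
    @Tool(description = "Unsubscribe / cancel an order")
    public String cancelOrder(@ToolParam(description = "Order number") String orderNum,
                              @ToolParam(description = "User account") String userAccount) {
        log.info("Order number: {}, user account: {}", orderNum, userAccount);
        // Business logic goes here
        log.info("Updating the database...");
        return "Operation succeeded";
    }
}
```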

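```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.advisor.PromptChatMemoryAdvisor;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.zhipuai.ZhiPuAiChatModel;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * @author stone
 * @date 2025/7/4 10:40
 */
@RestController
@RequestMapping("/case5")
@Slf4j
public class Case5Controller {

    public Case5Controller(ZhiPuAiChatModel chatModel,
                           ChatMemory chatMemory,
                           OrderTools orderTools) {
        this.chatClient = ChatClient
                .builder(chatModel)
                .defaultAdvisors(
                        PromptChatMemoryAdvisor.builder(chatMemory).build()
                )
                .defaultTools(orderTools)
                .build();
    }

    private ChatClient chatClient;

    /**
     * Using tools
     */
    @GetMapping("/chat")
    public String chat(@RequestParam("input") String input) {
        // input=I want to unsubscribe
        // input=My account is 101 and the order number is XXX1111
        return this.chatClient.prompt()
                // tools can also be registered per request: .tools(orderTools)
                .user(input)
                .call()
                .content();
    }
}
```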
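What `@Tool` plus `defaultTools(...)` does can be pictured as: scan an object for annotated methods, advertise each method's name and description to the model, and when the model asks for a tool by name, invoke the matching method with the arguments it supplied. The plain-JDK sketch below imitates that with a made-up `@DemoTool` annotation and reflection. It is a hypothetical simplification (no JSON schema; arguments are passed positionally and must already have the right types), not how Spring AI is implemented internally.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

public class ToolRegistrySketch {

    // Hypothetical stand-in for Spring AI's @Tool annotation
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    public @interface DemoTool {
        String description();
    }

    private final Object target;
    private final Map<String, Method> tools = new TreeMap<>();

    // Collect every public method carrying @DemoTool, keyed by method name
    public ToolRegistrySketch(Object target) {
        this.target = target;
        for (Method m : target.getClass().getMethods()) {
            if (m.isAnnotationPresent(DemoTool.class)) {
                tools.put(m.getName(), m);
            }
        }
    }

    // What would be advertised to the model: tool name -> description
    public Map<String, String> describe() {
        Map<String, String> out = new TreeMap<>();
        tools.forEach((name, m) -> out.put(name, m.getAnnotation(DemoTool.class).description()));
        return out;
    }

    // Dispatch a tool call requested by the model
    public Object call(String name, Object... arguments) throws Exception {
        Method m = tools.get(name);
        if (m == null) {
            throw new IllegalArgumentException("Unknown tool: " + name);
        }
        return m.invoke(target, arguments);
    }

    public static class OrderToolsDemo {
        @DemoTool(description = "Unsubscribe / cancel an order")
        public String cancelOrder(String orderNum, String userAccount) {
            return "Cancelled " + orderNum + " for " + userAccount;
        }
    }

    public static void main(String[] args) throws Exception {
        ToolRegistrySketch registry = new ToolRegistrySketch(new OrderToolsDemo());
        System.out.println(registry.describe());
        System.out.println(registry.call("cancelOrder", "XXX1111", "101"));
    }
}
```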

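6.6 Calling an external MCP server

The MCP protocol defines two transports: a web service over HTTP (SSE, i.e. long-lived connections) and stdio, where the server is shipped as a package that runs as a local process. In short, MCP is a common application-layer protocol that lets models connect to, access and share external tools; put simply, it is tool sharing.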
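With the stdio transport, the client starts the server as a child process and the two exchange JSON-RPC messages over the process's stdin/stdout, one message per line (the MCP specification requires messages to be newline-delimited with no embedded newlines). The plain-JDK sketch below shows only that framing, using in-memory streams instead of a real process; the class and method names are hypothetical. It also shows why a stdio MCP server must not print banners or logs to stdout: any extra line would be read as a broken message.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.Writer;
import java.util.ArrayList;
import java.util.List;

public class StdioFramingSketch {

    // Write one JSON-RPC message per line; embedded newlines would break the framing
    public static void send(Writer out, String jsonMessage) throws IOException {
        if (jsonMessage.indexOf('\n') >= 0 || jsonMessage.indexOf('\r') >= 0) {
            throw new IllegalArgumentException("stdio messages must not contain newlines");
        }
        out.write(jsonMessage);
        out.write('\n');
        out.flush();
    }

    // Read messages back, one per non-blank line
    public static List<String> receiveAll(Reader in) throws IOException {
        List<String> messages = new ArrayList<>();
        BufferedReader reader = new BufferedReader(in);
        String line;
        while ((line = reader.readLine()) != null) {
            if (!line.isBlank()) {
                messages.add(line);
            }
        }
        return messages;
    }

    public static void main(String[] args) throws IOException {
        StringWriter pipe = new StringWriter(); // stands in for the child's stdin/stdout
        send(pipe, "{\"jsonrpc\":\"2.0\",\"id\":1,\"method\":\"tools/list\"}");
        send(pipe, "{\"jsonrpc\":\"2.0\",\"id\":2,\"method\":\"tools/call\",\"params\":{\"name\":\"add\",\"arguments\":{\"a\":1,\"b\":1}}}");
        System.out.println(receiveAll(new StringReader(pipe.toString())));
    }
}
```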

Providing an MCP package (stdio)

1. Add the dependency

```xml
<!-- MCP server -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-mcp-server</artifactId>
</dependency>
```

2. Define the tools

```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.tool.annotation.Tool;
import org.springframework.stereotype.Service;

/**
 * @author stone
 * @date 2025/7/14 13:55
 */
@Slf4j
@Service
public class MathService {

    @Tool(description = "Addition")
    public Integer add(Integer a, Integer b) {
        log.info("=============== add called: a={}, b={}", a, b);
        return a + b;
    }

    @Tool(description = "Multiplication")
    public Integer multiply(Integer a, Integer b) {
        log.info("=============== multiply called: a={}, b={}", a, b);
        return a * b;
    }
}
```

3. Declare the tool provider

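```java
/**
 * Register as MCP tools: lets the server expose tools that language models can call
 * <p>
 * ToolCallbackProvider + @Tool = MCP server tool definitions
 */
@Bean
public ToolCallbackProvider mathTools(MathService mathService) {
    return MethodToolCallbackProvider.builder()
            .toolObjects(mathService)
            .build();
}
```

4. Finally, run `package` to build the jar and provide it to other systems. Because the stdio transport carries the protocol messages over stdout, the server must not print anything else there; Spring AI's MCP server documentation uses settings such as `spring.ai.mcp.server.stdio=true`, `spring.main.banner-mode=off` and an empty `logging.pattern.console=` for this.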

Using the MCP package in a business system

1. Add the dependency

```xml
<!-- supports both SSE and stdio -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-mcp-client-webflux</artifactId>
</dependency>
```

2. Configuration file

```yaml
spring:
  ai:
    mcp:
      client:
        stdio:
          servers-configuration: classpath:/mcp-server-config.json
```

3. Format of mcp-server-config.json

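```json
{
  "mcpServers": {
    "amap": {
      "command": "java",
      "args": [
        "-jar",
        "amap-mcp-server.jar"
      ],
      "env": {
        "AMAP_API_KEY": "YOUR_AMAP_API_KEY"
      }
    }
  }
}
```

Here `amap` (the AMap/Gaode MCP server) is used as an example. `command` is the launch command (it depends on what the package needs; some require Python, for example), and joined with `args` it becomes `java -jar amap-mcp-server.jar`. At startup the client launches this process and fetches the list of tools the MCP package provides.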

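`env` configuration (enterprise permission control): the client supplies the API key issued by the MCP server provider, and the MCP server can validate that key internally to enforce access control.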
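To see how the `command`, `args` and `env` fields fit together, here is a plain-JDK sketch that turns one server entry into a `ProcessBuilder` without starting it. The `ServerEntry` record is a hypothetical stand-in for the parsed JSON, filled with the placeholder values from the config above. The `env` map is merged into the child process's environment, which is how the API key reaches a stdio MCP server.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class McpLaunchSketch {

    // Hypothetical in-memory form of one "mcpServers" entry
    public record ServerEntry(String command, List<String> args, Map<String, String> env) { }

    // command + args form the command line; env is added to the child's environment
    public static ProcessBuilder toProcessBuilder(ServerEntry entry) {
        List<String> commandLine = new ArrayList<>();
        commandLine.add(entry.command());
        commandLine.addAll(entry.args());
        ProcessBuilder pb = new ProcessBuilder(commandLine);
        pb.environment().putAll(entry.env());
        return pb;
    }

    public static void main(String[] args) {
        ServerEntry amap = new ServerEntry("java",
                List.of("-jar", "amap-mcp-server.jar"),
                Map.of("AMAP_API_KEY", "YOUR_AMAP_API_KEY"));
        ProcessBuilder pb = toProcessBuilder(amap);
        // Prints [java, -jar, amap-mcp-server.jar]; pb.start() would actually launch the server
        System.out.println(pb.command());
    }
}
```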

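Q: If there are different users, how do you switch the API key?

A: In that case stdio is not suitable; instead, call a remote MCP server web service over SSE.

4. Use the imported MCP tools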

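```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.tool.ToolCallbackProvider;
import org.springframework.ai.zhipuai.ZhiPuAiChatModel;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * @author stone
 * @date 2025/7/14 11:20
 */
@RestController
@RequestMapping("/case6")
@Slf4j
public class Case6Controller {

    public Case6Controller(ZhiPuAiChatModel chatModel,
                           ToolCallbackProvider toolCallbackProvider) {
        this.chatClient = ChatClient
                .builder(chatModel)
                .defaultToolCallbacks(toolCallbackProvider)
                .build();
    }

    private ChatClient chatClient;

    /**
     * Call the MCP tools
     */
    @GetMapping("/chat")
    public String chat(@RequestParam("input") String input) {
        // input=What is one plus one?
        return this.chatClient.prompt()
                .user(input)
                .call()
                .content();
    }
}
```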

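This works by setting `defaultToolCallbacks` on the ChatClient.

The stdio approach is currently the most common: the package is provided directly and each business system runs it on its own.

MCP marketplace: http://mcp.so/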

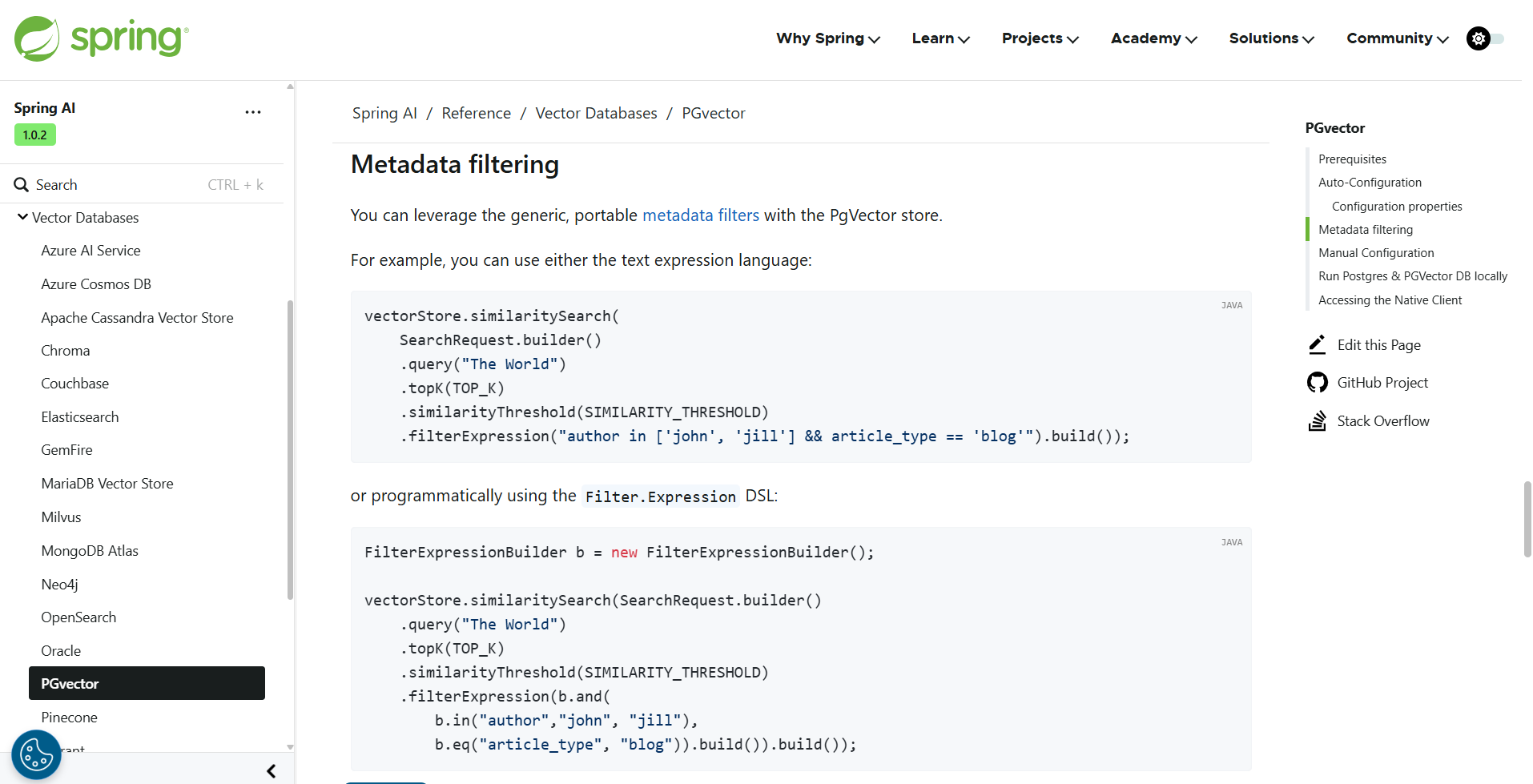

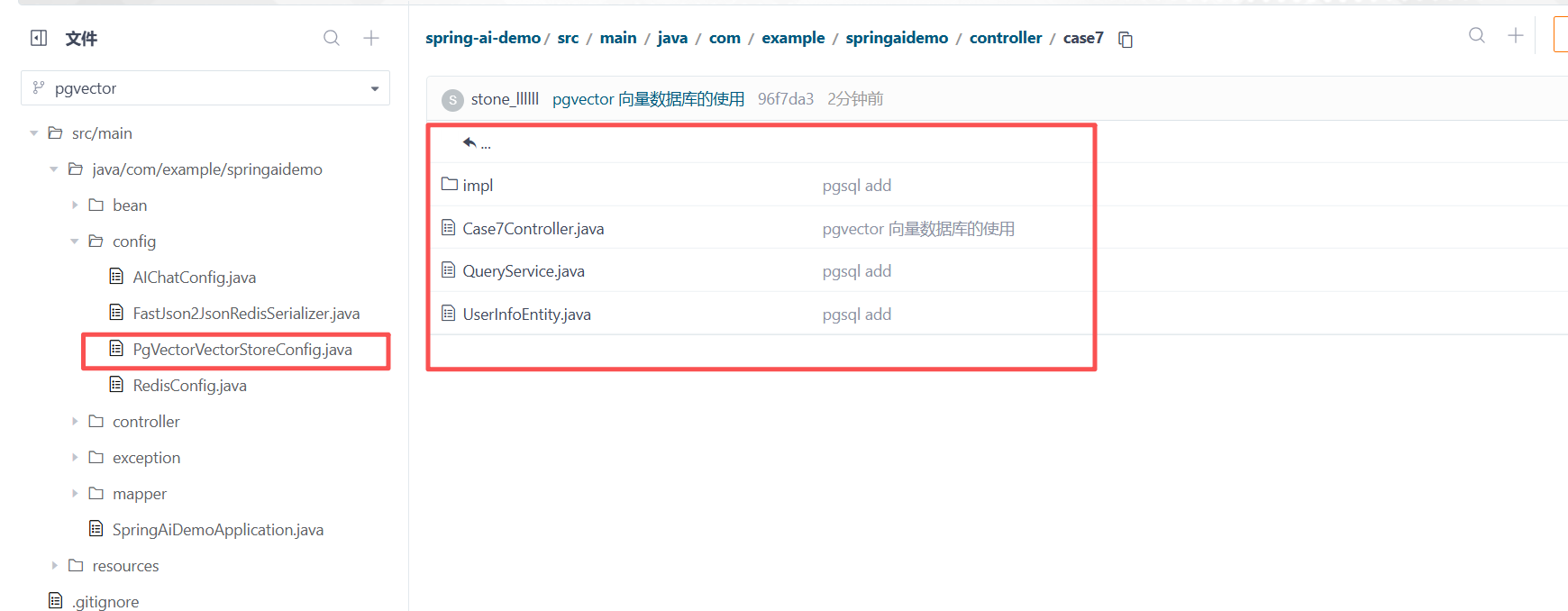

6.7 Vector databases and RAG (retrieval-augmented generation)

Install the pgvector extension for PostgreSQL: https://github.com/pgvector/pgvector
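The core of RAG is: embed the question, find the most similar stored chunks (pgvector does this in SQL with distance operators such as `<=>` for cosine distance), and paste them into the prompt as context. The plain-JDK sketch below shows that retrieve-and-augment step with tiny hand-written vectors standing in for real embeddings. The vectors, texts and class names are hypothetical; in Spring AI this work would normally be done by an `EmbeddingModel` together with a `VectorStore` such as the pgvector one.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

public class RagSketch {

    public record Doc(String text, double[] embedding) { }

    // Cosine similarity = dot(a, b) / (|a| * |b|); assumes non-zero vectors of equal length
    public static double cosine(double[] a, double[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return dot / (Math.sqrt(na) * Math.sqrt(nb));
    }

    // Retrieve the k documents most similar to the query vector
    public static List<Doc> topK(List<Doc> docs, double[] query, int k) {
        return docs.stream()
                .sorted(Comparator.comparingDouble((Doc d) -> cosine(d.embedding(), query)).reversed())
                .limit(k)
                .collect(Collectors.toList());
    }

    // Augment the user question with the retrieved context
    public static String augment(String question, List<Doc> context) {
        String ctx = context.stream().map(Doc::text).collect(Collectors.joining("\n"));
        return "Answer using only this context:\n" + ctx + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        // Toy documents with made-up 3-dimensional "embeddings"
        List<Doc> docs = List.of(
                new Doc("Orders are cancelled via the cancelOrder tool.", new double[]{0.9, 0.1, 0.0}),
                new Doc("Chat history expires from Redis after 30 minutes.", new double[]{0.1, 0.9, 0.1}),
                new Doc("pgvector adds vector types to PostgreSQL.", new double[]{0.0, 0.2, 0.9}));
        double[] question = {0.8, 0.2, 0.0}; // pretend embedding of the question below
        System.out.println(augment("How do I cancel an order?", topK(docs, question, 1)));
    }
}
```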

Spring AI official example ↓

The demo code is ready ↓
