Basic Machine Learning Problems

● Supervised Learning: You have labelled data for the computer to learn from

○ Regression

○ Classification

● Unsupervised Learning: You don’t have labelled data, but you want to find patterns in the data

○ Clustering / Dimensionality Reduction

Linear Regression

In the simplest case, we can assume that the relationship between the features and the target is linear:

y = a + bX

In the equation above, y is the target, X is the feature, a is the intercept, and b is the weight of the feature.

Using the ordinary least squares method, we can estimate a and b in the equation.

With more than one feature, the equation becomes:

y = b0 + b1x1 + b2x2 + … + bnxn

This is still a linear regression model, sometimes called multiple linear regression.

b0 is called the bias term (it plays the role of the intercept a), while b1 to bn are the weights of the features.
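For the single-feature case y = a + bX, the ordinary least squares estimates have a closed form: b is the covariance of X and y divided by the variance of X, and a follows from the two means. The sketch below illustrates this with the standard library only; the function name `fit_simple_ols` and the toy data are assumptions made for the example, not part of the original notes.

```python
def fit_simple_ols(xs, ys):
    """Estimate intercept a and weight b for y = a + b*x by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx            # weight of the feature
    a = mean_y - b * mean_x  # intercept
    return a, b


# Hypothetical toy data generated from y = 1 + 2x with no noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
a, b = fit_simple_ols(xs, ys)
print(f"intercept a = {a:.3f}, weight b = {b:.3f}")
```

With several features, the same idea is usually solved with a linear algebra routine rather than by hand.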

Classification

● In classification, we are interested in assigning each input sample to one of two (or more) pre-defined classes

● In other words, the target variable y is discrete

● Some common algorithms for classification:

○ Logistic regression

○ Support vector machines

○ Decision Trees

○ K-nearest-neighbour (kNN)

● Some regression tasks can be simplified to classification tasks, for example by predicting which range a value falls into instead of the exact value

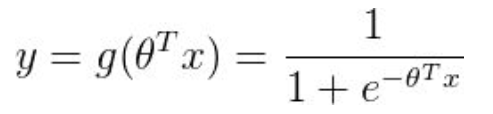

Logistic Regression

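To turn the output of linear regression into a probability, we can apply a transformation to it: the logistic function, also called the sigmoid function:

σ(z) = 1 / (1 + e^(−z))

Here z is the output of the linear model. The value of σ(z) tends to 1 if z tends to +∞, and tends to 0 if z tends to −∞.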
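The limiting behaviour described above can be checked numerically. The following is a minimal sketch of the sigmoid applied to a one-feature linear model; the helper name `predict_proba` and the parameters `a` and `b` are assumptions carried over from the linear regression notation for illustration.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function: maps any real z into the interval [0, 1]."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(x, a, b):
    """Probability of the positive class for one feature x, with z = a + b*x."""
    return sigmoid(a + b * x)


# The output approaches 0 for very negative z and 1 for very positive z.
for z in (-10.0, 0.0, 10.0):
    print(z, sigmoid(z))
```

A common convention, not stated in the notes, is to predict the positive class when the probability is at least 0.5.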

Decision Trees

● Decision trees are constructed by finding conditions to split the dataset into smaller subsets

● Decision trees can also be used to perform regression (thus the term CART: Classification And Regression Trees)

● Decision trees are usually vulnerable to overfitting (more on this later), thus we usually have to control the depth of a tree
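To make "finding conditions to split the dataset" concrete, here is a rough sketch that searches for the best threshold on a single numeric feature. It scores candidate splits with Gini impurity, which is one common criterion and an assumption here, since the notes do not name one. The function names and toy data are likewise hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the subset is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(xs, ys):
    """Find the threshold t on one feature that minimises the weighted Gini
    impurity of the subsets {x <= t} and {x > t}.
    Returns (threshold, impurity); threshold is None if no split helps."""
    n = len(xs)
    values = sorted(set(xs))
    best_t, best_score = None, gini(ys)  # baseline: do not split at all
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Hypothetical toy data: class "a" has small x, class "b" has large x.
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = ["a", "a", "a", "b", "b", "b"]
threshold, impurity = best_split(xs, ys)
print(threshold, impurity)
```

A full tree would apply this search recursively to each subset and stop at a maximum depth, which is the depth control mentioned above.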

Choosing ML Algorithms

Reference: Choosing ML Algorithms.

Model Complexity

● A complex model can capture complex relationships between X and y, but it is also more likely to pick up noise → overfitting

● A simple model is easy to interpret, but may not be able to capture the true relationship between X and y → underfitting

Splitting Your Dataset

● It is usually advised that we have three splits of the dataset:

- training set: for training your model(s)

- validation/development set: for tuning your model’s hyperparameters

- test/holdout set: for testing the performance of your model

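● For an imbalanced dataset, use stratified sampling so that each split keeps roughly the same class proportions as the full dataset

● K-fold cross-validation: split the data into K folds and let each fold serve once as the validation set, while the model is trained on the remaining folds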
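The splitting schemes above could be sketched as follows, using only the standard library. The function names, the 60/20/20 default proportions, and the fixed seed are assumptions chosen for illustration. This sketch does not stratify, and the K-fold helper does not shuffle, so shuffle the data first if its order is meaningful.

```python
import random


def train_val_test_split(samples, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle samples and cut them into (train, validation, test) lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) for each of k folds.
    Fold sizes differ by at most one; every index is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


data = list(range(10))
train, val, test = train_val_test_split(data)
print(len(train), len(val), len(test))
for train_idx, val_idx in k_fold_indices(len(data), k=5):
    print("validate on", val_idx)
```

The test set is held back until the end; only the training and validation parts (or the K folds) are used while tuning hyperparameters.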

Evaluation

● When evaluating the performance of a model, we need to have:

○ ground truths: the correct answer / the true labels of the inputs

○ metric: a measure of how good the predictions are compared to the ground truths

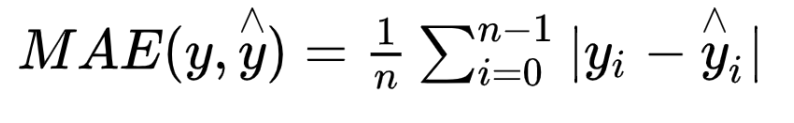

Metrics for Regression - MAE (Mean Absolute Error)

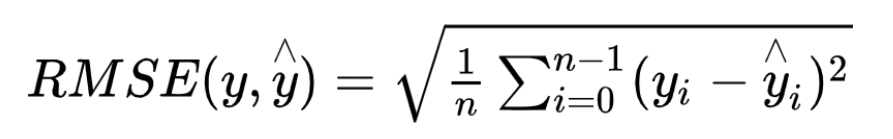

Metrics for Regression - RMSE (Root Mean Squared Error)
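For n predictions ŷ_i against ground truths y_i, MAE is the mean of |y_i − ŷ_i| and RMSE is the square root of the mean of (y_i − ŷ_i)². A minimal sketch of both follows; the function names and the sample values are assumptions made for this example.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """Mean of the absolute differences between truths and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """Square root of the mean squared difference; penalises large errors more."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Hypothetical values: the errors are 1, 0, -2 and 0.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 7.0]
mae = mean_absolute_error(y_true, y_pred)
rmse = root_mean_squared_error(y_true, y_pred)
print(f"MAE = {mae:.3f}, RMSE = {rmse:.3f}")
```

Because the errors are squared before averaging, RMSE is never smaller than MAE on the same predictions, and the gap grows when a few errors are much larger than the rest.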

Metrics for Classification

○ Accuracy

○ True/False positives/negatives

○ Precision and Recall

○ Area Under the ROC Curve
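The first three items can be computed directly from the counts of true/false positives/negatives in a binary task. The sketch below is one way to do that; the function names, the choice of `1` as the positive label, and the convention of returning 0.0 when a denominator is zero are all assumptions for illustration. Area under the ROC curve needs predicted scores rather than hard labels and is not covered here.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification task."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif t == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision and recall from the confusion counts.
    A metric with a zero denominator is reported as 0.0 (a convention)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # of predicted positives, how many are right
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # of actual positives, how many were found
    }


# Hypothetical labels: 3 true positives, 1 false positive, 3 true negatives, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

On an imbalanced dataset accuracy alone can look good while recall on the rare class is poor, which is why precision and recall are reported alongside it.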

Top 20 Python libraries for data science

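This article covers supervised learning (including linear regression and classification methods such as logistic regression, SVM, and decision trees), unsupervised learning (such as clustering and dimensionality reduction), and key algorithms such as kNN. It explains model selection, balancing model complexity, and dataset splitting techniques, and also covers common evaluation metrics and Python libraries.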
