一、求共同好友

1.1 需求及分析

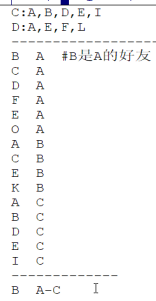

以下是qq的好友列表数据,冒号前是一个用户,冒号后是该用户的所有好友(数据中的好友关系是单向的)

A:B,C,D,F,E,O

B:A,C,E,K

C:A,B,D,E,I

D:A,E,F,L

E:B,C,D,M,L

F:A,B,C,D,E,O,M

G:A,C,D,E,F

H:A,C,D,E,O

I:A,O

J:B,O

K:A,C,D

L:D,E,F

M:E,F,G

O:A,H,I,J

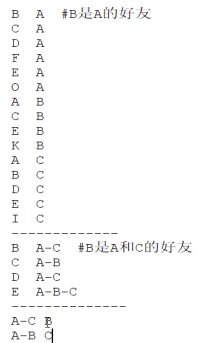

从下图中,反向推导容易理解

1.2 实现步骤

第一步:代码实现

Mapper类

public class Step1Mapper extends Mapper<LongWritable,Text,Text,Text> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//1:以冒号拆分行文本数据: 冒号左边就是V2

String[] split = value.toString().split(":");

String userStr = split[0];

//2:将冒号右边的字符串以逗号拆分,每个成员就是K2

String[] split1 = split[1].split(",");

for (String s : split1) {

//3:将K2和v2写入上下文中

context.write(new Text(s), new Text(userStr));

}

}

}

Reducer类:

public class Step1Reducer extends Reducer<Text,Text,Text,Text> {

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//1:遍历集合,并将每一个元素拼接,得到K3

StringBuffer buffer = new StringBuffer();

for (Text value : values) {

buffer.append(value.toString()).append("-");

}

//2:K2就是V3

//3:将K3和V3写入上下文中

context.write(new Text(buffer.toString()), key);

}

}

JobMain:

public class JobMain extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//1:获取Job对象

Job job = Job.getInstance(super.getConf(), "common_friends_step1_job");

//2:设置job任务

//第一步:设置输入类和输入路径

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\input\\common_friends_step1_input"));

//第二步:设置Mapper类和数据类型

job.setMapperClass(Step1Mapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

//第三,四,五,六

//第七步:设置Reducer类和数据类型

job.setReducerClass(Step1Reducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

//第八步:设置输出类和输出的路径

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\out\\common_friends_step1_out"));

//3:等待job任务结束

boolean bl = job.waitForCompletion(true);

return bl ? 0: 1;

}

public static void main(String[] args) throws Exception {

Configuration configuration = new Configuration();

//启动job任务

int run = ToolRunner.run(configuration, new JobMain(), args);

System.exit(run);

}

}

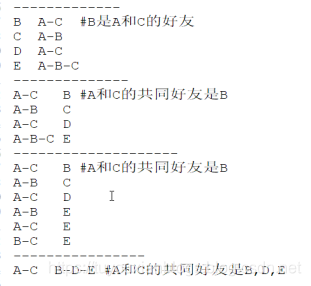

第二步:代码实现

Mapper类

public class Step2Mapper extends Mapper<LongWritable,Text,Text,Text> {

/*

K1 V1

0 A-F-C-J-E- B

----------------------------------

K2 V2

A-C B

A-E B

A-F B

C-E B

*/

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//1:拆分行文本数据,结果的第二部分可以得到V2

String[] split = value.toString().split("\t");

String friendStr =split[1];

//2:继续以'-'为分隔符拆分行文本数据第一部分,得到数组

String[] userArray = split[0].split("-");

//3:对数组做一个排序

Arrays.sort(userArray);

//4:对数组中的元素进行两两组合,得到K2

/*

A-E-C -----> A C E

A C E

A C E

*/

for (int i = 0; i <userArray.length -1 ; i++) {

for (int j = i+1; j < userArray.length ; j++) {

//5:将K2和V2写入上下文中

context.write(new Text(userArray[i] +"-"+userArray[j]), new Text(friendStr));

}

}

}

}

Reducer类:

public class Step2Reducer extends Reducer<Text,Text,Text,Text> {

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//1:原来的K2就是K3

//2:将集合进行遍历,将集合中的元素拼接,得到V3

StringBuffer buffer = new StringBuffer();

for (Text value : values) {

buffer.append(value.toString()).append("-");

}

//3:将K3和V3写入上下文中

context.write(key, new Text(buffer.toString()));

}

}

JobMain:

public class JobMain extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//1:获取Job对象

Job job = Job.getInstance(super.getConf(), "common_friends_step2_job");

//2:设置job任务

//第一步:设置输入类和输入路径

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\out\\common_friends_step1_out"));

//第二步:设置Mapper类和数据类型

job.setMapperClass(Step2Mapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

//第三,四,五,六

//第七步:设置Reducer类和数据类型

job.setReducerClass(Step2Reducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

//第八步:设置输出类和输出的路径

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\out\\common_friends_step2_out"));

//3:等待job任务结束

boolean bl = job.waitForCompletion(true);

return bl ? 0: 1;

}

public static void main(String[] args) throws Exception {

Configuration configuration = new Configuration();

//启动job任务

int run = ToolRunner.run(configuration, new JobMain(), args);

System.exit(run);

}

}

二、好友推荐系统

(参考链接:http://developer.51cto.com/art/201301/375661.htm)

-

据第一轮MapReduce的Map,第一轮MapReduce的Reduce 的输入是例如key =I,value={“H,I”、“C,I”、“G,I”} 。其实Reduce 的输入是所有与Key代表的结点相互关注的人。如果H、C、G是与I相互关注的好友,那么H、C、G就可能是二度好友的关系,如果他们之间不是相互关注的。,H与C是二度好友,G与C是二度好友,但G与H不是二度好友,因为他们是相互关注的。第一轮MapReduce的Reduce的处理就是把相互关注的好友对标记为一度好友(“deg1friend”)并输出,把有可能是二度好友的好友对标记为二度好友(“deg2friend”)并输出。

-

二轮MapReduce则需要根据第一轮MapReduce的输出,即每个好友对之间是否是一度好友(“deg1friend”),是否有可能是二度好友(“deg2friend”)的关系,确认他们之间是不是真正的二度好友关系。如果他们有deg1friend的标签,那么不可能是二度好友的关系;如果有deg2friend的标签、没有deg1friend的标签,那么他们就是二度好友的关系。另外,特别可以利用的是,某好友对deg2friend标签的个数就是他们成为二度好友的支持数,即他们之间可以通过多少个都相互关注的好友认识。

import java.io.IOException;

import java.util.Random;

import java.util.Vector;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class deg2friend {

public static class job1Mapper extends Mapper<Object, Text, Text, Text>{

private Text job1map_key = new Text();

private Text job1map_value = new Text();

public void map(Object key, Text value, Context context) throws IOException, InterruptedException {

String eachterm[] = value.toString().split(",");

if(eachterm[0].compareTo(eachterm[1])<0){

job1map_value.set(eachterm[0]+"\t"+eachterm[1]);

}

else if(eachterm[0].compareTo(eachterm[1])>0){

job1map_value.set(eachterm[1]+"\t"+eachterm[0]);

}

job1map_key.set(eachterm[0]);

context.write(job1map_key, job1map_value);

job1map_key.set(eachterm[1]);

context.write(job1map_key, job1map_value);

}

}

public static class job1Reducer extends Reducer<Text,Text,Text,Text> {

private Text job1reduce_key = new Text();

private Text job1reduce_value = new Text();

public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

String someperson = key.toString();

Vector<String> hisfriends = new Vector<String>();

for (Text val : values) {

String eachterm[] = val.toString().split("\t");

if(eachterm[0].equals(someperson)){

hisfriends.add(eachterm[1]);

job1reduce_value.set("deg1friend");

context.write(val, job1reduce_value);

}

else if(eachterm[1].equals(someperson)){

hisfriends.add(eachterm[0]);

job1reduce_value.set("deg1friend");

context.write(val, job1reduce_value);

}

}

for(int i = 0; i<hisfriends.size(); i++){

for(int j = 0; j<hisfriends.size(); j++){

if (hisfriends.elementAt(i).compareTo(hisfriends.elementAt(j))<0){

job1reduce_key.set(hisfriends.elementAt(i)+"\t"+hisfriends.elementAt(j));

job1reduce_value.set("deg2friend");

context.write(job1reduce_key, job1reduce_value);

}

// else if(hisfriends.elementAt(i).compareTo(hisfriends.elementAt(j))>0){

// job1reduce_key.set(hisfriends.elementAt(j)+"\t"+hisfriends.elementAt(i));

// }

}

}

}

}

public static class job2Mapper extends Mapper<Object, Text, Text, Text>{

private Text job2map_key = new Text();

private Text job2map_value = new Text();

public void map(Object key, Text value, Context context) throws IOException, InterruptedException {

String lineterms[] = value.toString().split("\t");

if(lineterms.length == 3){

job2map_key.set(lineterms[0]+"\t"+lineterms[1]);

job2map_value.set(lineterms[2]);

context.write(job2map_key,job2map_value);

}

}

}

public static class job2Reducer extends Reducer<Text,Text,Text,Text> {

private Text job2reducer_key = new Text();

private Text job2reducer_value = new Text();

public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

Vector<String> relationtags = new Vector<String>();

String deg2friendpair = key.toString();

for (Text val : values) {

relationtags.add(val.toString());

}

boolean isadeg1friendpair = false;

boolean isadeg2friendpair = false;

int surport = 0;

for(int i = 0; i<relationtags.size(); i++){

if(relationtags.elementAt(i).equals("deg1friend")){

isadeg1friendpair = true;

}else if(relationtags.elementAt(i).equals("deg2friend")){

isadeg2friendpair = true;

surport += 1;

}

}

if ((!isadeg1friendpair) && isadeg2friendpair){

job2reducer_key.set(String.valueOf(surport));

job2reducer_value.set(deg2friendpair);

context.write(job2reducer_key,job2reducer_value);

}

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: deg2friend <in> <out>");

System.exit(2);

}

Job job1 = new Job(conf, "deg2friend");

job1.setJarByClass(deg2friend.class);

job1.setMapperClass(job1Mapper.class);

job1.setReducerClass(job1Reducer.class);

job1.setOutputKeyClass(Text.class);

job1.setOutputValueClass(Text.class);

//定义一个临时目录,先将任务的输出结果写到临时目录中, 下一个排序任务以临时目录为输入目录。

FileInputFormat.addInputPath(job1, new Path(otherArgs[0]));

Path tempDir = new Path("deg2friend-temp-" + Integer.toString(new Random().nextInt(Integer.MAX_VALUE)));

FileOutputFormat.setOutputPath(job1, tempDir);

if(job1.waitForCompletion(true))

{

Job job2 = new Job(conf, "deg2friend");

job2.setJarByClass(deg2friend.class);

FileInputFormat.addInputPath(job2, tempDir);

job2.setMapperClass(job2Mapper.class);

job2.setReducerClass(job2Reducer.class);

FileOutputFormat.setOutputPath(job2, new Path(otherArgs[1]));

job2.setOutputKeyClass(Text.class);

job2.setOutputValueClass(Text.class);

FileSystem.get(conf).deleteOnExit(tempDir);

System.exit(job2.waitForCompletion(true) ? 0 : 1);

}

System.exit(job1.waitForCompletion(true) ? 0 : 1);

}

}

1971

1971

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?