1. IP mapping

Add the Maven dependency

<dependency>
    <groupId>org.lionsoul</groupId>
    <artifactId>ip2region</artifactId>
    <version>1.7.2</version>
</dependency>

import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SparkSession, functions}
import org.lionsoul.ip2region.{DbConfig, DbSearcher}

object ipTest {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("sss").setMaster("local[*]")
    val spark = SparkSession.builder().config(conf).getOrCreate()
    import spark.implicits._

    val frame: DataFrame = spark.read.csv("C:\\Users\\86186\\Desktop\\aa.csv").toDF("ip")

    // A UDF created with functions.udf is used through the DataFrame API (withColumn).
    // The typed form is used here; the udf(f, dataType) overload is deprecated in Spark 3.
    val provinceUdf = functions.udf((ip: String) => ipTest(ip))
    frame.withColumn("province", provinceUdf($"ip")).show()

    // A UDF registered with spark.udf.register is used in SQL
    spark.udf.register("ipTest", (ip: String) => ipTest(ip))
    frame.createOrReplaceTempView("temp")
    spark.sql(
      """
        |select
        |ip,
        |ipTest(ip) as province
        |from temp
        |""".stripMargin).show()

    spark.stop()
  }

  // Look up the location of an IP address and return its province
  def ipTest(ip: String): String = {
    val config = new DbConfig
    // Location of the IP database. On a cluster:
    // val dbfile = SparkFiles.get("ip2region.db")
    // Locally:
    val dbfile = "D:\\software\\idea\\WorkSpace\\SparkTestProject\\src\\main\\resources\\ip2region.db"
    // Note: a searcher is opened per call, which is simple but slow on large inputs
    val searcher = new DbSearcher(config, dbfile)
    try {
      // B-tree search
      val region: String = searcher.btreeSearch(ip).getRegion
      // Print the location (format: country|region|province|city|ISP)
      println(region)
      // The province is the third field
      region.split("\\|")(2)
    } finally {
      searcher.close()
    }
  }
}
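The field position used by split("\\|")(2) can be checked outside Spark with a quick shell sketch. The sample string below is made up for illustration and only follows the country|region|province|city|ISP layout described in the code comment; it is not real output from the database.

```shell
#!/bin/bash
# Made-up sample in the country|region|province|city|ISP layout (an assumption).
region='CN|0|Yunnan|Kunming|Mobile'

# cut numbers fields from 1, so field 3 corresponds to index 2 in split("\\|")(2).
province=$(printf '%s\n' "$region" | cut -d'|' -f3)
echo "$province"
```

If the third field is not the province for a given database version, only the index needs to change.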

2. Shell scripts

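2.1 Submitting a Spark job with a shell script (the class passed to --class must expose a main method; a Scala object that extends App inherits one, although the Spark documentation recommends defining main() explicitly)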

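#!/bin/bash

# MainClass and args are placeholders for the application's main class and its arguments.
# Each continued line must end with a backslash and be followed directly by the next option.
function spark_submit_jar() {
    $SPARK2/bin/spark-submit --class MainClass \
        --master yarn \
        --deploy-mode cluster \
        --driver-memory 1g \
        --driver-cores 2 \
        --num-executors 30 \
        --executor-memory 4G \
        --executor-cores 8 \
        jar/Artemis.jar args
}

spark_submit_jar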

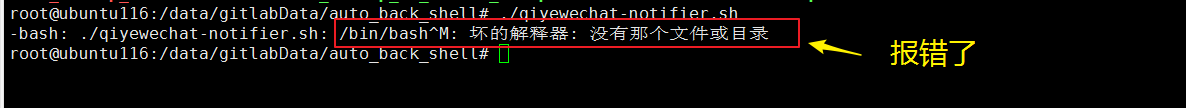

2.2 A shell script written in IDEA fails when uploaded to Linux and run there

Error message


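The cause is that Windows and Linux use different line endings.

Windows ends each line with \r\n (CRLF), while Linux uses \n (LF), so on Linux the leftover \r becomes part of every command, including the interpreter path on the #! line.

Fix: sed -i 's/\r$//' shellname.sh

This replaces the trailing \r on each line with an empty string.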
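The fix can be tried on a throwaway file before touching a real script. This is a minimal sketch, assuming GNU sed (the -i form shown does not work unchanged with BSD/macOS sed); the temporary file and its contents are illustrative only.

```shell
#!/bin/bash
# Build a tiny script with Windows (CRLF) line endings for demonstration.
tmp=$(mktemp)
printf '#!/bin/bash\r\necho hello\r\n' > "$tmp"

# Hold a literal carriage return so grep can look for lines ending in it.
cr=$(printf '\r')
before=$(grep -c "${cr}\$" "$tmp")

# Same substitution as in the text: delete a trailing \r on every line.
sed -i 's/\r$//' "$tmp"

after=$(grep -c "${cr}\$" "$tmp")
echo "CRLF lines before: $before, after: $after"
rm -f "$tmp"
```

Counting the affected lines before and after makes it easy to confirm that the file really had CRLF endings and that none remain.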

3. Hive managed (internal) tables and external tables

1. CREATE statement for an external table

create external table if not exists xiankan_completion_radio_null_si(
    id int,
    name string
)
partitioned by (time string)
row format delimited
fields terminated by '\t'
location 'hdfs:/path';

2. Converting between managed and external tables

-- external table to managed table
alter table selfTable set TBLPROPERTIES('EXTERNAL'='FALSE');

-- managed table to external table
alter table selfTable set TBLPROPERTIES('EXTERNAL'='TRUE');

3. Adding or dropping partitions

ALTER TABLE test_table ADD PARTITION (dt='20210510') location '/path/aa.txt' PARTITION (dt='20210509') location '/path/bb.txt';

ALTER TABLE test_table DROP PARTITION (dt='20210510');
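When many partitions have to be added, the statements can be generated rather than typed by hand. The sketch below only prints the DDL; the table name, base directory, date list, and the dt=<date> directory layout are all assumptions chosen for illustration.

```shell
#!/bin/bash
# Hypothetical inputs: table name, base directory, and the dates to add.
table='test_table'
base_dir='/path'
dates='20210509 20210510'

# Emit one ADD PARTITION statement per date; each LOCATION is a directory.
ddl=''
for dt in $dates; do
  ddl="${ddl}ALTER TABLE ${table} ADD IF NOT EXISTS PARTITION (dt='${dt}') LOCATION '${base_dir}/dt=${dt}';
"
done
printf '%s' "$ddl"
```

The printed statements can be redirected to a file and run with hive -f, which keeps the generation step separate from the step that actually changes the metastore.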

4. Using LZO compression in Hive

create table lzo_test(
    i int,
    s string
)
STORED AS INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat';

4. HDFS

Create nested directories (-p also creates missing parent directories)

hadoop fs -mkdir -p 'hdfs:/a/b/c/d'

Delete nested directories (-r removes the directory and everything under it)

hadoop fs -rm -r 'hdfs:/a/b/c/d'
