文本信息检索

实验要求

-

针对“语料库.txt”文件,实现基于布尔模型的检索系统和基于TF-IDF的检索系统。(每一行看做是一个文档)

-

基于布尔模型的检索系统

输入 以布尔表达式形式输入,一次检索单词数量不超过三个(AA and BB or CC ),返回所有的击中的结果(超过10项的只显示前10项)

-

基于TF-IDF的检索系统

输入不超过8个字的短语,系统首先自动进行分词,按照TF-IDF的值求和排序返回前10项结果。

实验过程

由于两次实验都需要用将 语料库 的每一行看作一个文本,故一开始便将语料库按行分类存储到 text 集合和 listLine 集合中:

//表示语料库中第i行文本下的分词结果,即:第i行文本中,string 词语在该行本文出现的次数

private Map<Integer, Map<String, Integer>> text = new HashMap<>();

//表示语料库第i行的文本内容

private List<String> listLine = new ArrayList<>();

该部分代码与实验三FMM和BMM读取部分代码类似,故省略。

基于布尔模型的检索系统

由于输入的为汉字和 and or not 代表逻辑关系的表达式,而Java支持脚本语言,故使用 JavaSE6 中自带了JavaScript语言的脚本引擎 ScriptEngine:

// 字符串转条件表达式

ScriptEngineManager manager = new ScriptEngineManager();

ScriptEngine engine = manager.getEngineByExtension("js");

// 输入input文本格式处理:如输入 ***中国 and 的 not 共产党***

String[] words = input.trim().split(" +");

String[] word3 = new String[3];

for (int i = 0; i < words.length; i+=2) {

//将输入的单词存入 word3 数组中

word3[i/2] = words[i];

//将输入的 words[i] 替换为 --> 判断word文本行里是否包含words[i]这一单词

words[i] = "word.containsKey(word3[" + i/2 + "])";

}

//将words数组用空格隔开

input = String.join(" ", words);

//将用户输入的逻辑 替换为 --> 计算机可以识别的逻辑符号

input = input.replaceAll(" and ", " && ");

input = input.replaceAll(" or ", " || ");

input = input.replaceAll(" not ", " && ! ");

//脚本语言的绑定

engine.put("word3", word3);

然后对每行文本进行执行,符合条件表达式即输出文本:

Boolean result = (Boolean) engine.eval(input);// 字符串转条件表达式

if (result) {

System.out.println(listLine.get(i));//符合就输出相应文本

num ++;

}

if (num == 10) break;//输出前十行

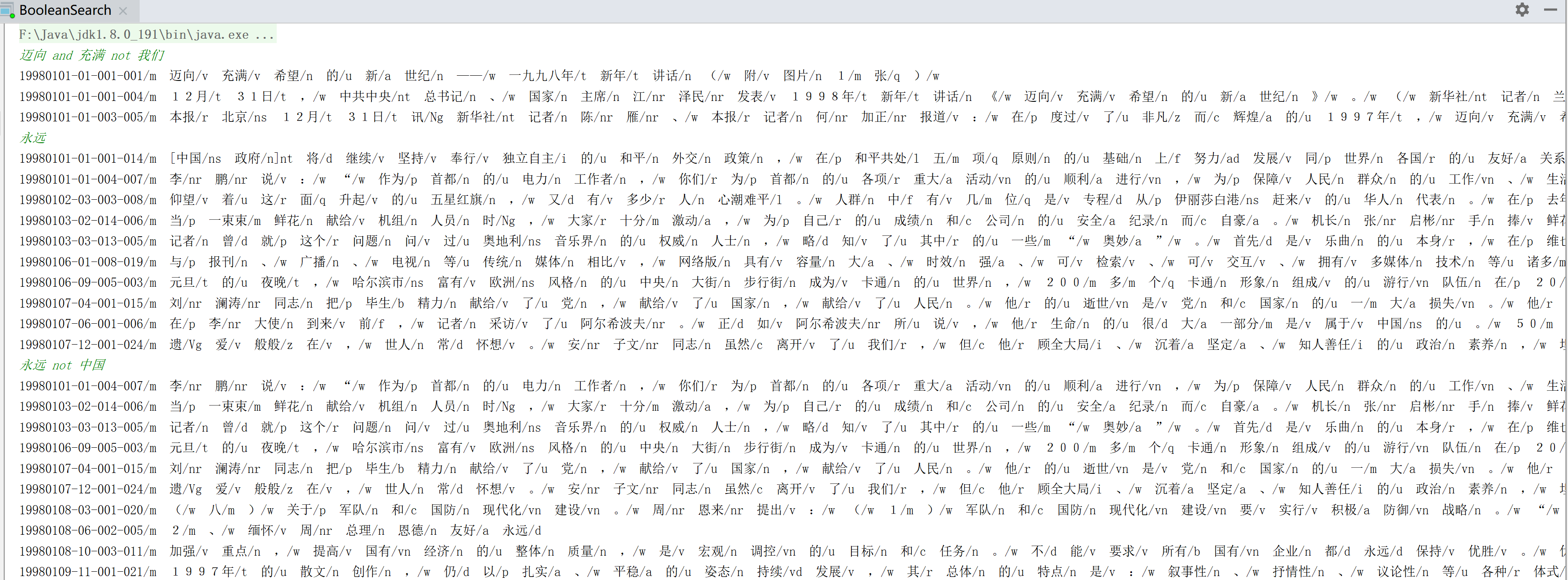

输出结果:

基于TF-IDF的检索系统

1、读取实验三对语料库的分词结果output.txt,利用BMM对输入的数据进行分词:

for (int j = line.length(); j >= 0 ; j--) {

for(int i=0; i<j; i++) {

String string = line.substring(i, j);

if (yuliaoku.containsKey(string) && !string.equals(" ")) {

inputWords.add(string);

j -= string.length()-1;

break;

} else if (i == j-1 && !string.equals(" ")) {

inputWords.add(string);

j -= string.length()-1;

}

}

}

2、获取TF值:

/*********获取TF值---第i个网页下,分词结果的第j个词语的TF值********/

Map<Integer, Map<Integer, Double>> wordTF = new HashMap<>();

for (int i = 0; i < listLine.size(); i++) {

//获取第i个网页下的分词结果

Map<String, Integer> map = text.get(i);

//存储分词结果的第j个词语的TF值

Map map1 = new HashMap();

for (int j = 0; j < inputWords.size(); j++) {

int num = map.containsKey(inputWords.get(j)) ? map.get(inputWords.get(j)) : 0;

double aaa = (double)num / map.size();

map1.put(j, aaa);

wordTF.put(i, map1);

}

}

3、获取IDF值 ------ 网页总数量 / 当前词语出现的网页数量,再取log:

double[] wordIDF = new double[inputWords.size()];

for (int i = 0; i < inputWords.size(); i++) {

int idf = 0;

for (int j = 0; j < listLine.size(); j++) {

if (listLine.get(j).contains(inputWords.get(i))) {

idf ++;

}

}

if (idf == 0) {

System.out.println( idf + "在所有网页中未出现,无法进行 网页数/词频 运算!");

System.exit(-1);

}

double aaa = listLine.size() / idf;

wordIDF[i] = Math.log(aaa);

}

4、对每个部分求和:

//得到每个部分的TF-IDF值

double result = 0.0;

for (int j = 0; j < inputWords.size(); j++) {

Double map = wordTF.get(i).get(j);

result += map * wordIDF[j];

}

5、排序输出:

List<Map.Entry<Integer, Double>> entryList = new ArrayList<>(tf_idf.entrySet());

Collections.sort(entryList, new Comparator<Map.Entry<Integer, Double>>() {

@Override

public int compare(Map.Entry<Integer, Double> o1, Map.Entry<Integer, Double> o2) {

return o2.getValue().compareTo(o1.getValue());

}

});

for (int i = 0; i < 10; i++) {

int a = entryList.get(i).getKey();

System.out.println("文本为:" + listLine.get(a));

System.out.println("TF-IDF值为:" + entryList.get(i).getValue());

System.out.println("---------------------------------------------------------------------------------------------------------");//分隔符

}

输出结果:

实验完整代码

package com.tuxiangchuli.java;

import javax.script.ScriptEngine;

import javax.script.ScriptEngineManager;

import javax.script.ScriptException;

import java.io.*;

import java.util.*;

public class BooleanSearch {

private Map<Integer, Map<String, Integer>> text = new HashMap<>();

//行数

private List<String> listLine = new ArrayList<>();

private Map<Integer, Integer> textNum = new HashMap<>();

private Map<String, Integer> yuliaoku = new HashMap<>();

private void readOutput() throws IOException {

String fileName = "D:\\output.txt";

//FileInputStream字节输入流;InputStreamReader将字节流转化为字符流;BufferedReader缓冲方式读取

BufferedReader br = new BufferedReader(new InputStreamReader(new FileInputStream(fileName), "UTF-8"));

String line;

while ((line = br.readLine()) != null) {

String[] input = line.trim().split(" +");

if (input.length == 2)

continue;

yuliaoku.put(input[0], Integer.valueOf(input[2]));

}

br.close();

}

private void readText() throws IOException {

/********读取语料库每一行,存到List中********/

String fileName = "D:\\yuliaoku.txt";

//FileInputStream字节输入流;InputStreamReader将字节流转化为字符流;BufferedReader缓冲方式读取

BufferedReader br = new BufferedReader(new InputStreamReader(new FileInputStream(fileName), "UTF-8"));

String line;

while ((line = br.readLine()) != null) {

listLine.add(line);

}

br.close();

for (int i = 0; i < listLine.size(); i++) {

Map<String, Integer> words = new HashMap<>();

String[] arrays = listLine.get(i).trim().split(" +");//每个文本分词并统计其词频

textNum.put(i, arrays.length);

for (String s : arrays) {

if (s.indexOf("19980") != -1)

continue;

String[] endString = s.split("/");

s = endString[0];

int count = words.containsKey(s) ? words.get(s) : 0;

words.put(s, count + 1);

}

text.put(i, words);

}

}

private void tf_IDFSearch(String line) throws IOException {

readOutput();

List<String> inputWords = new ArrayList<>();

//BMM分词

for (int j = line.length(); j >= 0 ; j--) {

for(int i=0; i<j; i++) {

String string = line.substring(i, j);

if (yuliaoku.containsKey(string) && !string.equals(" ")) {

inputWords.add(string);

j -= string.length()-1;

break;

} else if (i == j-1 && !string.equals(" ")) {

inputWords.add(string);

j -= string.length()-1;

}

}

}

/*********获取TF值---第i个网页,第j个词的TF值********/

Map<Integer, Map<Integer, Double>> wordTF = new HashMap<>();

for (int i = 0; i < listLine.size(); i++) {

Map<String, Integer> map = text.get(i);

Map map1 = new HashMap();

for (int j = 0; j < inputWords.size(); j++) {

int num = map.containsKey(inputWords.get(j)) ? map.get(inputWords.get(j)) : 0;

double aaa = (double)num / map.size();

map1.put(j, aaa);

wordTF.put(i, map1);

}

}

/**********获取IDF值**********/

System.out.println("------------------------------------------");

System.out.println("输入的分词:" + inputWords);

System.out.println("------------------------------------------");

double[] wordIDF = new double[inputWords.size()];

for (int i = 0; i < inputWords.size(); i++) {

int idf = 0;

for (int j = 0; j < listLine.size(); j++) {

if (listLine.get(j).contains(inputWords.get(i))) {

idf ++;

}

}

if (idf == 0) {

System.out.println( idf + "在所有网页中未出现,无法进行 网页数/词频 运算!");

System.exit(-1);

}

double aaa = listLine.size() / idf;

wordIDF[i] = Math.log(aaa);

}

/************第i个网页获得的TF-IDF计算如下:******/

Map<Integer, Double> tf_idf = new HashMap<>();

for (int i = 0; i < listLine.size(); i++) {

double result = 0.0;

for (int j = 0; j < inputWords.size(); j++) {

Double map = wordTF.get(i).get(j);

result += map * wordIDF[j];

}

tf_idf.put(i, result);

}

List<Map.Entry<Integer, Double>> entryList = new ArrayList<>(tf_idf.entrySet());

Collections.sort(entryList, new Comparator<Map.Entry<Integer, Double>>() {

@Override

public int compare(Map.Entry<Integer, Double> o1, Map.Entry<Integer, Double> o2) {

return o2.getValue().compareTo(o1.getValue());

}

});

for (int i = 0; i < 10; i++) {

int a = entryList.get(i).getKey();

System.out.println("文本为:" + listLine.get(a));

System.out.println("TF-IDF值为:" + entryList.get(i).getValue());

System.out.println("---------------------------------------------------------------------------------------------------------");

}

}

private void boolSearch(String input) throws ScriptException {

// 字符串转条件表达式

ScriptEngineManager manager = new ScriptEngineManager();

ScriptEngine engine = manager.getEngineByExtension("js");

String[] words = input.trim().split(" +");

String[] word3 = new String[3];

for (int i = 0; i < words.length; i+=2) {

word3[i/2] = words[i];

words[i] = "word.containsKey(word3[" + i/2 + "])";

}

input = String.join(" ", words);

input = input.replaceAll(" and ", " && ");

input = input.replaceAll(" or ", " || ");

input = input.replaceAll(" not ", " && ! ");

engine.put("word3", word3);

int num = 0;

for (int i=0; i<text.size(); i++) {

Map<String, Integer> word;

word = text.get(i);

engine.put("word", word);

Boolean result = (Boolean) engine.eval(input);// 字符串转条件表达式

if (result) {

//符合就输出相应文本

System.out.println(listLine.get(i));

num ++;

}

if (num == 10)

break;

}

}

public static void main(String[] args) throws IOException, ScriptException {

BooleanSearch booleanSearch = new BooleanSearch();

booleanSearch.readText();

Scanner scanner = new Scanner(System.in);

String input;

while (scanner.hasNextLine()) {

input = scanner.nextLine();

input.getBytes("UTF-8");

/*****布尔检索*******/

//booleanSearch.boolSearch(input);//需要用到撤销注释

/*****基于TF-IDF的检索系统*******/

booleanSearch.tf_IDFSearch(input);

}

}

}

该博客围绕文本信息检索展开实验,要求针对“语料库.txt”实现基于布尔模型和TF - IDF的检索系统。布尔模型按布尔表达式检索,TF - IDF系统对输入短语分词后按TF - IDF值排序输出。文中介绍了实验过程并给出输出结果和完整代码。

该博客围绕文本信息检索展开实验,要求针对“语料库.txt”实现基于布尔模型和TF - IDF的检索系统。布尔模型按布尔表达式检索,TF - IDF系统对输入短语分词后按TF - IDF值排序输出。文中介绍了实验过程并给出输出结果和完整代码。

399

399

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?