This semester I'm taking a new web programming course.

The first lab project is as follows:

Lab project 1

A news crawler and a website for querying the crawled results

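◦ Core requirements:

◦ 1. Pick 3 to 5 representative news sites (for example Sina News or NetEase News, or an authoritative site in a vertical domain, such as Xueqiu or East Money for finance, or Tencent Sports or Hupu for sports) and build a crawler for each. Analyze the news pages of each site, extract structured information (encoding, title, author, time, keywords, summary, content, source, and so on), and store it in a database.

◦ 2. Build a website that offers field-by-field full-text search over the crawled content and shows a time-based popularity ("heat") analysis of the searched keyword.

◦ Technical requirements:

◦ 1. The web crawler must be implemented in Node.js.

◦ 2. The back end of the query website must be implemented in Node.js and the front end in HTML+JS (avoid front-end and back-end frameworks where possible).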
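Requirement 2 asks for a time-based heat analysis of a searched keyword. One simple reading, assumed here, is: count how many crawled articles per publish date mention the keyword. A minimal sketch, assuming rows shaped like the records the crawlers below insert into the fetches table (publish_date as a YYYY-MM-DD string, plus title and content); the function name keywordHeat and the sample rows are made up for illustration:

```javascript
// Count, per publish date, how many rows mention `keyword` in the title or content.
// Returns [{ date, count }] sorted by date; YYYY-MM-DD strings sort chronologically.
function keywordHeat(rows, keyword) {
    const counts = new Map();
    for (const row of rows) {
        const text = (row.title || "") + " " + (row.content || "");
        if (!text.includes(keyword)) continue;
        counts.set(row.publish_date, (counts.get(row.publish_date) || 0) + 1);
    }
    return [...counts.entries()]
        .sort((a, b) => (a[0] < b[0] ? -1 : a[0] > b[0] ? 1 : 0))
        .map(([date, count]) => ({ date, count }));
}

// Usage with made-up rows (in practice these would come from a SELECT on fetches)
const rows = [
    { publish_date: "2021-04-02", title: "疫苗接种", content: "" },
    { publish_date: "2021-04-01", title: "经济", content: "疫苗 相关" },
    { publish_date: "2021-04-02", title: "体育", content: "疫苗" },
    { publish_date: "2021-04-03", title: "体育", content: "比赛" },
];
console.log(keywordHeat(rows, "疫苗"));
```

The same count could be done in MySQL with a LIKE filter and GROUP BY publish_date; doing it in JavaScript here just keeps the sketch independent of the database.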

I finished crawlers for three sites.

I haven't been working with this for long, so even while writing this post I still feel like a beginner.

Preparation

Install the request, cheerio, and iconv-lite packages (the code below also requires date-utils).

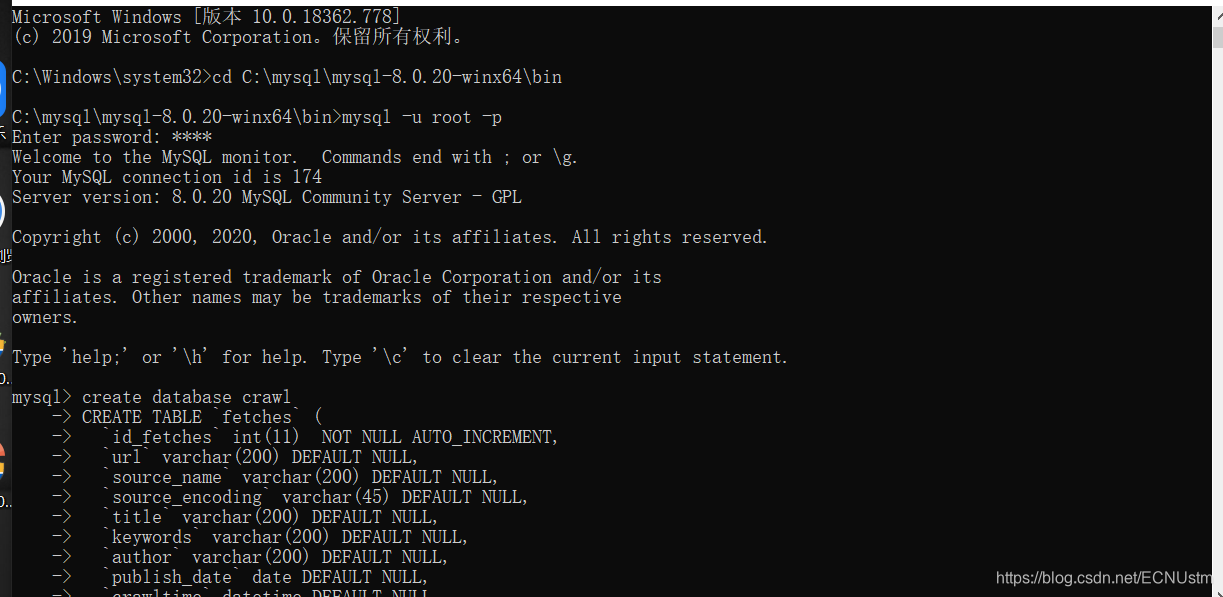

A MySQL database
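All three crawlers write to a fetches table through a local ./mysql.js module that the post does not show; judging from the calls, it exposes query(sql, params, callback). The China News and NetEase scripts list nine columns and nine ? placeholders by hand, and the Xueqiu script a different three, so the two lists can easily drift apart. A small helper (hypothetical, not part of the course code) can derive both from one object:

```javascript
// Build a parameterized INSERT from a plain object so the column list and the
// placeholder/parameter lists always line up. Column names come from the object's
// keys and are NOT escaped, so only pass objects with trusted keys.
function buildInsert(table, row) {
    const columns = Object.keys(row);
    const placeholders = columns.map(() => "?").join(",");
    return {
        sql: `INSERT INTO ${table}(${columns.join(",")}) VALUES(${placeholders})`,
        params: columns.map((c) => row[c]),
    };
}

const { sql, params } = buildInsert("fetches", {
    url: "http://www.example.com/a.html",
    title: "标题",
    content: "正文",
});
console.log(sql); // INSERT INTO fetches(url,title,content) VALUES(?,?,?)
console.log(params);
// With the course wrapper this would be passed on as:
//   mysql.query(sql, params, function (qerr) { if (qerr) console.log(qerr); });
```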

China News (中国新闻网)

```javascript
var fs = require('fs'); // only needed by the commented-out JSON export below
var myRequest = require('request');
var myCheerio = require('cheerio');
var myIconv = require('iconv-lite');
require('date-utils'); // adds Date.prototype.toFormat
var mysql = require('./mysql.js');

var source_name = "中国新闻网";
var domain = 'http://www.chinanews.com/';
var myEncoding = "utf-8";
var seedURL = 'http://www.chinanews.com/';

// Each *_format string is a cheerio expression that is eval'ed against the page's $
var seedURL_format = "$('a')";
var keywords_format = " $('meta[name=\"keywords\"]').eq(0).attr(\"content\")";
var title_format = "$('title').text()";
var date_format = "$('#pubtime_baidu').text()";
var author_format = "$('#editor_baidu').text()";
var content_format = "$('.left_zw').text()";
var desc_format = " $('meta[name=\"description\"]').eq(0).attr(\"content\")";
var source_format = "$('#source_baidu').text()";

// Article URLs look like /YYYY/MM-DD/NNNNNNN.shtml
var url_reg = /\/(\d{4})\/(\d{2})-(\d{2})\/(\d{7})\.shtml/;
// Dates such as 2021-04-05, 2021/4/5, 2021.4.5 or 2021年4月5日
var regExp = /((\d{4}|\d{2})(\-|\/|\.)\d{1,2}\3\d{1,2})|(\d{4}年\d{1,2}月\d{1,2}日)/;

// Send a browser-like User-Agent so the site is less likely to block the crawler
var headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.65 Safari/537.36'
};

// Fetch a URL asynchronously with the request module
function request(url, callback) {
    var options = {
        url: url,
        encoding: null, // return the raw Buffer so iconv-lite can decode it
        //proxy: 'http://x.x.x.x:8080',
        headers: headers,
        timeout: 10000 // 10 s
    };
    myRequest(options, callback);
}

seedget();

function seedget() {
    request(seedURL, function(err, res, body) { // read the seed page
        if (err) { console.log('Failed to fetch the seed page: ' + err); return; }
        var html = myIconv.decode(body, myEncoding); // decode with iconv-lite
        var $ = myCheerio.load(html, { decodeEntities: true }); // parse with cheerio

        var seedurl_news;
        try {
            seedurl_news = eval(seedURL_format);
        } catch (e) { console.log('Failed to locate the link list: ' + e); return; }

        seedurl_news.each(function(i, e) { // walk every <a> on the seed page
            var myURL = "";
            try {
                var href = $(e).attr("href");
                if (href == undefined) return;
                if (/^https?:\/\//i.test(href)) myURL = href;                         // absolute URL
                else if (href.startsWith('//')) myURL = 'http:' + href;              // protocol-relative
                else myURL = seedURL.substr(0, seedURL.lastIndexOf('/') + 1) + href; // relative path
            } catch (e) { console.log('Failed to read a news link: ' + e); }

            if (!url_reg.test(myURL)) return; // skip anything that is not an article URL

            var fetch_url_Sql = 'select url from fetches where url=?';
            mysql.query(fetch_url_Sql, [myURL], function(qerr, vals, fields) {
                if (qerr) { console.log(qerr); return; }
                if (vals.length > 0) console.log('URL duplicate!');
                else newsGet(myURL); // read the article page
            });
        });
    });
}

function newsGet(myURL) { // read one article page
    request(myURL, function(err, res, body) {
        if (err) { console.log('Failed to fetch ' + myURL + ': ' + err); return; }
        var html_news = myIconv.decode(body, myEncoding);
        var $ = myCheerio.load(html_news, { decodeEntities: true });
        console.log("Fetched and decoded: " + myURL);

        // Evaluate the *_format strings to build the record for the database
        var fetch = {};
        fetch.title = "";
        fetch.content = "";
        fetch.url = myURL;
        fetch.source_name = source_name;
        fetch.source_encoding = myEncoding;
        fetch.crawltime = new Date();
        fetch.publish_date = fetch.crawltime.toFormat("YYYY-MM-DD"); // fallback: crawl date

        // eval() returns undefined when a meta tag is missing, hence the || ""
        if (keywords_format == "") fetch.keywords = source_name; // no keywords: use the source name
        else fetch.keywords = eval(keywords_format) || "";
        if (title_format == "") fetch.title = "";
        else fetch.title = eval(title_format) || "";

        if (date_format != "") fetch.publish_date = eval(date_format) || fetch.publish_date;
        console.log('date: ' + fetch.publish_date);
        var m = regExp.exec(fetch.publish_date); // null when the text contains no date
        fetch.publish_date = m
            ? new Date(m[0].replace('年', '-').replace('月', '-').replace('日', '')).toFormat("YYYY-MM-DD")
            : fetch.crawltime.toFormat("YYYY-MM-DD");

        if (author_format == "") fetch.author = source_name;
        else fetch.author = eval(author_format) || "";
        if (content_format == "") fetch.content = "";
        else fetch.content = (eval(content_format) || "").replace("\r\n" + fetch.author, ""); // stripping the author is optional
        if (source_format == "") fetch.source = fetch.source_name;
        else fetch.source = (eval(source_format) || "").replace("\r\n", "");
        if (desc_format == "") fetch.desc = fetch.title;
        else fetch.desc = (eval(desc_format) || "").replace("\r\n", ""); // summary

        // Optional: also save each record as a JSON file
        // var filename = source_name + "_" + (new Date()).toFormat("YYYY-MM-DD") +
        //     "_" + myURL.substr(myURL.lastIndexOf('/') + 1) + ".json";
        // fs.writeFileSync(filename, JSON.stringify(fetch));

        var fetchAddSql = 'INSERT INTO fetches(url,source_name,source_encoding,title,' +
            'keywords,author,publish_date,crawltime,content) VALUES(?,?,?,?,?,?,?,?,?)';
        var fetchAddSql_Params = [fetch.url, fetch.source_name, fetch.source_encoding,
            fetch.title, fetch.keywords, fetch.author, fetch.publish_date,
            fetch.crawltime.toFormat("YYYY-MM-DD HH24:MI:SS"), fetch.content
        ];
        // url is UNIQUE in the fetches table, so the database rejects duplicate URLs
        mysql.query(fetchAddSql, fetchAddSql_Params, function(qerr, vals, fields) {
            if (qerr) console.log(qerr);
        });
    });
}
```

This is the sample code provided by the instructor.
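In the instructor's original version, regExp.exec(fetch.publish_date)[0] throws a TypeError when a page contains no recognizable date, because exec returns null. The same date extraction as a standalone function that returns null instead; normalizeDate is a hypothetical name, the regular expression is the one from the code, and treating two-digit years as 20xx is an assumption:

```javascript
// Same pattern as regExp in the crawler: 2021-04-05, 2021/4/5, 2021.4.5, 21-4-5 or 2021年4月5日
const DATE_RE = /((\d{4}|\d{2})(\-|\/|\.)\d{1,2}\3\d{1,2})|(\d{4}年\d{1,2}月\d{1,2}日)/;

// Extract the first date from free text and return it as YYYY-MM-DD, or null if there is none.
function normalizeDate(text) {
    const m = DATE_RE.exec(text || "");
    if (!m) return null;
    // Take the three numeric parts whatever the separator (-, /, . or 年/月/日)
    let [y, mo, d] = m[0].match(/\d+/g);
    if (y.length === 2) y = "20" + y; // assumption: two-digit years are 20xx
    const pad = (s) => s.padStart(2, "0");
    return `${y}-${pad(mo)}-${pad(d)}`;
}

console.log(normalizeDate("2021年4月5日 10:20 来源:中国新闻网"));
console.log(normalizeDate("发布时间 2021/04/05"));
console.log(normalizeDate("no date here"));
```

Building the string directly also sidesteps new Date("2021-4-5"): the ECMAScript spec leaves parsing of non-ISO date strings implementation-dependent.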

Since this is ready-made code, I'll only show the search results:

Xueqiu (雪球)

```javascript
var myRequest = require('request');
var myIconv = require('iconv-lite');
var myCheerio = require('cheerio');
var mysql = require('./mysql.js');

var source_name = "雪球";
var domain = 'https://xueqiu.com/';
var myEncoding = "utf-8";
var seedURL = 'https://xueqiu.com/';

// Browser-like User-Agent so the site is less likely to block the crawler
var headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.65 Safari/537.36'
};

function request(url, callback) {
    var options = {
        url: url,
        encoding: null, // raw Buffer for iconv-lite
        //proxy: 'http://x.x.x.x:8080',
        headers: headers,
        timeout: 10000
    };
    myRequest(options, callback);
}

seedget();

function seedget() {
    request(seedURL, function(err, res, body) {
        if (err) { console.log('Failed to fetch the seed page: ' + err); return; }
        var html = myIconv.decode(body, myEncoding);
        var $ = myCheerio.load(html, { decodeEntities: true });

        // On the Xueqiu home page the news links carry this class (no eval needed here)
        var seedurl_news = $(".AnonymousHome_a__placeholder_3RZ");

        seedurl_news.each(function(i, e) {
            var href = $(e).attr("href");
            if (!href) return;
            console.log(href);
            newsget('https://xueqiu.com' + href); // the hrefs are site-relative paths
        });
    });
}

function newsget(myURL) {
    request(myURL, function(err, res, body) {
        if (err) { console.log('Failed to fetch ' + myURL + ': ' + err); return; }
        var html_news = myIconv.decode(body, myEncoding);
        var $ = myCheerio.load(html_news, { decodeEntities: true });
        console.log("Fetched and decoded: " + myURL);

        var fetch = {};
        fetch.url = myURL;
        fetch.title = $("title").text();
        // Only the meta description is stored as the content
        fetch.content = $('meta[name="description"]').attr("content") || "";

        var fetchadd = 'INSERT INTO fetches(url,title,content) VALUES(?,?,?)';
        var fetchadd_params = [fetch.url, fetch.title, fetch.content];
        mysql.query(fetchadd, fetchadd_params, function(qerr, vals, fields) {
            if (qerr) console.log(qerr); // e.g. a duplicate url
        });
    });
}
```

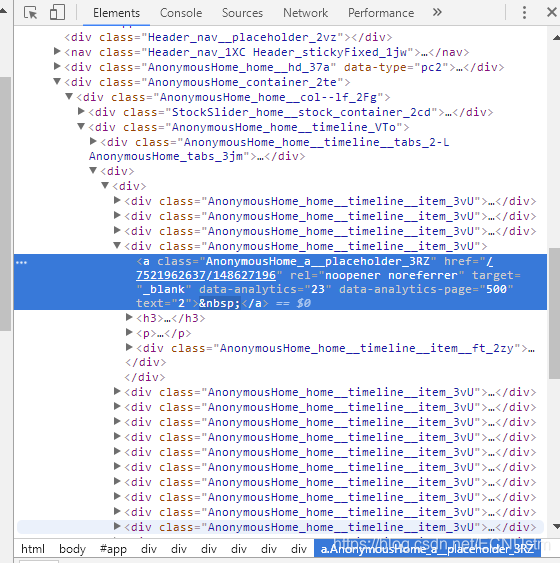

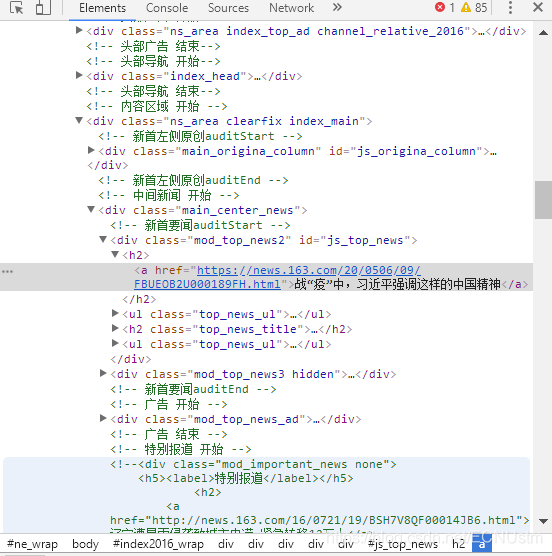

Inspecting the structure of the page source

The news links all sit in elements with the class AnonymousHome_a__placeholder_3RZ.

First we send a request to the home page, which is what the request package is for.

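Then we pick out the links, which may need some tidying up (for example, turning site-relative paths into full URLs). Next we send a request to each of those links and extract the title, author, and other fields.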
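The link tidying step can be written as a small pure function: resolve each href against the page URL with the WHATWG URL class built into Node.js, keep only the links that match the site's article pattern, and drop duplicates. Deduplicating in memory matters because the same article is often linked several times on one home page, and the crawlers' "select url from fetches" check is asynchronous, so two copies can both pass it before either is inserted. The name collectNewsUrls and the sample links are illustrative:

```javascript
// Resolve raw href values against the page they came from, keep only article URLs
// that match `urlReg`, and remove duplicates while preserving first-seen order.
function collectNewsUrls(hrefs, pageUrl, urlReg) {
    const seen = new Set();
    for (const href of hrefs) {
        if (!href) continue;
        let abs;
        try {
            abs = new URL(href, pageUrl); // handles absolute, protocol-relative and relative links
        } catch (e) {
            continue; // not a parseable URL
        }
        abs.hash = ""; // "page#comment" and "page" are the same article
        if (urlReg.test(abs.href)) seen.add(abs.href);
    }
    return [...seen];
}

// China News article pattern from the crawler above; the links are made-up examples.
const url_reg = /\/(\d{4})\/(\d{2})-(\d{2})\/(\d{7})\.shtml/;
const links = collectNewsUrls(
    ["/gn/2021/04-05/1234567.shtml",
     "//www.chinanews.com/gn/2021/04-05/1234567.shtml#comment",
     "javascript:void(0)", undefined, "https://www.chinanews.com/about.html"],
    "http://www.chinanews.com/",
    url_reg
);
console.log(links);
```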

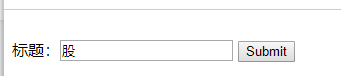

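Once the data has been crawled, we need a front end. Here I again used the instructor's code.

That more or less completes the Xueqiu crawler.

NetEase News (网易新闻)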
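The instructor's front end is not reproduced in the post. For the field-by-field search in requirement 2, the back end has to turn a chosen field and a keyword into SQL. A sketch of just that step, assuming the fetches columns the crawlers write; the helper name and the field whitelist are hypothetical. A column name cannot be bound as a ? parameter, so it is checked against a fixed list, and %, _ and backslash in the keyword are escaped so MySQL's LIKE matches them literally. Note this is a LIKE substring match, simpler than MySQL's FULLTEXT indexes:

```javascript
// Columns the search form may target (must exist in the fetches table)
const SEARCHABLE = ["title", "content", "keywords", "author"];

// Build a parameterized per-field search: WHERE <field> LIKE %keyword%.
// Unknown fields throw instead of being spliced into the SQL.
function buildSearchQuery(field, keyword) {
    if (!SEARCHABLE.includes(field)) throw new Error("unsupported field: " + field);
    // Escape LIKE wildcards and the escape character itself (MySQL's default escape is \)
    const escaped = String(keyword).replace(/[\\%_]/g, "\\$&");
    return {
        sql: `SELECT url, source_name, title, author, publish_date FROM fetches WHERE ${field} LIKE ? ORDER BY publish_date DESC`,
        params: ["%" + escaped + "%"],
    };
}

const q = buildSearchQuery("title", "100%_新闻");
console.log(q.sql);
console.log(q.params);
```

The resulting sql and params would then go to the same mysql.query(sql, params, callback) wrapper the crawlers use.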

```javascript
var fs = require('fs'); // only needed by the commented-out JSON export below
var myRequest = require('request');
var myCheerio = require('cheerio');
var myIconv = require('iconv-lite');
require('date-utils'); // adds Date.prototype.toFormat
var mysql = require('./mysql.js');

var source_name = "网易新闻";
var domain = 'https://news.163.com/';
var myEncoding = "GBK"; // NetEase pages are decoded as GBK
var seedURL = 'https://news.163.com/';

// cheerio expressions that are eval'ed against each page's $
var seedURL_format = "$('a')";
var keywords_format = " $('meta[name=\"keywords\"]').eq(0).attr(\"content\")";
var title_format = " $('meta[property=\"og:title\"]').eq(0).attr(\"content\")";
// Try .post_time_source first, then #ptime; the fallback must live inside the string
var date_format = "$('.post_time_source').text() || $('#ptime').text()";
var author_format = "$('meta[name=\"author\"]').eq(0).attr(\"content\")";
var content_format = "$('#endText').text()";
var desc_format = " $('meta[name=\"description\"]').eq(0).attr(\"content\")";
var source_format = "$('#ne_article_source').text()";

// Pattern of NetEase article URLs
var url_reg = /\/(\d{2})\/(\d{4})\/(\d{2})\/(\w{10,30})\.html/;
var regExp = /((\d{4}|\d{2})(\-|\/|\.)\d{1,2}\3\d{1,2})|(\d{4}年\d{1,2}月\d{1,2}日)|(\d{4}\-\d{2}\-\d{2})/;

// Browser-like User-Agent so the site is less likely to block the crawler
var headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.65 Safari/537.36'
};

// Fetch a URL asynchronously with the request module
function request(url, callback) {
    var options = {
        url: url,
        encoding: null, // raw Buffer, decoded as GBK below
        //proxy: 'http://x.x.x.x:8080',
        headers: headers,
        timeout: 10000
    };
    myRequest(options, callback);
}

seedget();

function seedget() {
    request(seedURL, function(err, res, body) { // read the seed page
        if (err) { console.log('Failed to fetch the seed page: ' + err); return; }
        var html = myIconv.decode(body, myEncoding);
        var $ = myCheerio.load(html, { decodeEntities: true });

        var seedurl_news;
        try {
            seedurl_news = eval(seedURL_format);
        } catch (e) { console.log('Failed to locate the link list: ' + e); return; }

        seedurl_news.each(function(i, e) { // walk every <a> on the seed page
            var myURL = "";
            try {
                var href = $(e).attr("href");
                if (href == undefined) return;
                // NetEase links are mostly https://, so accept both schemes
                if (/^https?:\/\//i.test(href)) myURL = href;
                else if (href.startsWith('//')) myURL = 'https:' + href;
                else myURL = seedURL.substr(0, seedURL.lastIndexOf('/') + 1) + href;
            } catch (e) { console.log('Failed to read a news link: ' + e); }

            if (!url_reg.test(myURL)) return; // skip anything that is not an article URL

            var fetch_url_Sql = 'select url from fetches where url=?';
            mysql.query(fetch_url_Sql, [myURL], function(qerr, vals, fields) {
                if (qerr) { console.log(qerr); return; }
                if (vals.length > 0) console.log('URL duplicate!');
                else newsGet(myURL); // only fetch URLs not yet in the database
            });
        });
    });
}

function newsGet(myURL) { // read one article page
    request(myURL, function(err, res, body) {
        if (err) { console.log('Failed to fetch ' + myURL + ': ' + err); return; }
        var html_news = myIconv.decode(body, myEncoding);
        var $ = myCheerio.load(html_news, { decodeEntities: true });
        console.log("Fetched and decoded: " + myURL);

        var fetch = {};
        fetch.title = "";
        fetch.content = "";
        fetch.url = myURL;
        fetch.source_name = source_name;
        fetch.source_encoding = myEncoding;
        fetch.crawltime = new Date();
        fetch.publish_date = fetch.crawltime.toFormat("YYYY-MM-DD"); // fallback: crawl date

        if (keywords_format == "") fetch.keywords = source_name; // no keywords: use the source name
        else fetch.keywords = eval(keywords_format) || "";
        if (title_format == "") fetch.title = "";
        else fetch.title = eval(title_format) || "";

        if (date_format != "") fetch.publish_date = eval(date_format) || fetch.publish_date;
        console.log('date: ' + fetch.publish_date);
        var m = regExp.exec(fetch.publish_date); // null when the text contains no date
        fetch.publish_date = m
            ? new Date(m[0].replace('年', '-').replace('月', '-').replace('日', '')).toFormat("YYYY-MM-DD")
            : fetch.crawltime.toFormat("YYYY-MM-DD");

        if (author_format == "") fetch.author = source_name;
        else fetch.author = eval(author_format) || "";
        if (content_format == "") fetch.content = "";
        else fetch.content = (eval(content_format) || "").replace("\r\n" + fetch.author, "");
        if (source_format == "") fetch.source = fetch.source_name;
        else fetch.source = (eval(source_format) || "").replace("\r\n", "");
        if (desc_format == "") fetch.desc = fetch.title;
        else fetch.desc = (eval(desc_format) || "").replace("\r\n", ""); // summary

        // Optional: also save each record as a JSON file
        // var filename = source_name + "_" + (new Date()).toFormat("YYYY-MM-DD") +
        //     "_" + myURL.substr(myURL.lastIndexOf('/') + 1) + ".json";
        // fs.writeFileSync(filename, JSON.stringify(fetch));

        var fetchAddSql = 'INSERT INTO fetches(url,source_name,source_encoding,title,' +
            'keywords,author,publish_date,crawltime,content) VALUES(?,?,?,?,?,?,?,?,?)';
        var fetchAddSql_Params = [fetch.url, fetch.source_name, fetch.source_encoding,
            fetch.title, fetch.keywords, fetch.author, fetch.publish_date,
            fetch.crawltime.toFormat("YYYY-MM-DD HH24:MI:SS"), fetch.content
        ];
        // url is UNIQUE in the fetches table, so the database rejects duplicate URLs
        mysql.query(fetchAddSql, fetchAddSql_Params, function(qerr, vals, fields) {
            if (qerr) console.log(qerr);
        });
    });
}
```

The process is similar to the one above, but a few problems came up.
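One problem in the NetEase script is easy to miss. The first version of date_format was written as "$('.post_time_source').text()"|"$(#ptime).text()". The | there is JavaScript's bitwise OR, not an alternative: both strings are converted to numbers (NaN) and the result is 0. Since 0 != "" is false, the date branch never runs and every article silently gets the crawl date (the second selector was also missing its quotes). The fix is to keep the fallback inside one expression string, joined with ||. A quick check of both points, using a hypothetical stand-in for cheerio's $:

```javascript
// What the first version evaluated to: `|` turns both strings into NaN, then into 0
const broken = "$('.post_time_source').text()" | "$(#ptime).text()";
console.log(broken);       // 0
console.log(broken != ""); // false, so `if (date_format != "")` never runs

// Stand-in for cheerio's $: .text() returns "" when a selector matches nothing,
// which || treats as missing. The page content is hypothetical.
const fakePage = { "#ptime": "2021-04-05 10:20:30" };
const $ = (selector) => ({ text: () => fakePage[selector] || "" });

// Intended behaviour: try the first selector, fall back to the second
const fixed_format = "$('.post_time_source').text() || $('#ptime').text()";
const fixedDate = eval(fixed_format);
console.log(fixedDate);    // 2021-04-05 10:20:30
```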

NetEase News pages are encoded in GBK, so myEncoding had to be changed from utf-8 to GBK.
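Hard-coding myEncoding = "GBK" works as long as every page on the site uses the same encoding. A more defensive option, sketched here as an assumption rather than what the course code does, is to read the charset the page declares, first from the HTTP Content-Type header and then from a meta tag near the top of the HTML, and pass that name to myIconv.decode. detectCharset is a hypothetical helper that uses only Node built-ins:

```javascript
// Guess a page's declared charset from the Content-Type header or the first bytes of the body.
// Returns a lowercase name such as "gbk" or "utf-8", or `fallback` when nothing is declared.
function detectCharset(contentType, body, fallback = "utf-8") {
    const fromHeader = /charset=["']?([\w-]+)/i.exec(contentType || "");
    if (fromHeader) return fromHeader[1].toLowerCase();
    // Charset declarations are ASCII, so reading the head of the Buffer as latin1 is safe
    const head = Buffer.isBuffer(body) ? body.toString("latin1", 0, 2048) : "";
    const fromMeta = /<meta[^>]+charset=["']?([\w-]+)/i.exec(head);
    return fromMeta ? fromMeta[1].toLowerCase() : fallback;
}

// Inside the request callback this would replace the fixed encoding:
//   var enc = detectCharset(res.headers['content-type'], body, myEncoding);
//   var html = myIconv.decode(body, enc);
console.log(detectCharset("text/html; charset=GBK", Buffer.from("")));
console.log(detectCharset(undefined, Buffer.from('<html><head><meta charset="gb2312">')));
console.log(detectCharset("text/html", Buffer.from("<html>")));
```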

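The links are also laid out the same way as on China News (plain a tags collected from the home page), so I reused similar code.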
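Since the China News and NetEase crawlers differ only in their settings (encoding, seed URL, article URL pattern, selectors), one option is to keep those settings in a table and let a single crawler look up the right entry for each URL. A minimal sketch of that lookup; the SITES structure, its domain field, and siteFor are hypothetical, while the other values are copied from the two scripts (with the dot before the file extension escaped):

```javascript
// Per-site settings taken from the two crawlers; a shared crawler would read these
// instead of keeping one copy of the code per site.
const SITES = [
    {
        source_name: "中国新闻网",
        domain: "chinanews.com",
        encoding: "utf-8",
        url_reg: /\/(\d{4})\/(\d{2})-(\d{2})\/(\d{7})\.shtml/,
        date_format: "$('#pubtime_baidu').text()",
    },
    {
        source_name: "网易新闻",
        domain: "163.com",
        encoding: "GBK",
        url_reg: /\/(\d{2})\/(\d{4})\/(\d{2})\/(\w{10,30})\.html/,
        date_format: "$('.post_time_source').text() || $('#ptime').text()",
    },
];

// Return the settings whose domain and article pattern both match `url`, or null.
function siteFor(url) {
    let host;
    try {
        host = new URL(url).hostname;
    } catch (e) {
        return null; // not a valid absolute URL
    }
    const site = SITES.find(
        (s) => (host === s.domain || host.endsWith("." + s.domain)) && s.url_reg.test(url)
    );
    return site || null;
}

console.log(siteFor("http://www.chinanews.com/gn/2021/04-05/1234567.shtml").source_name);
```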

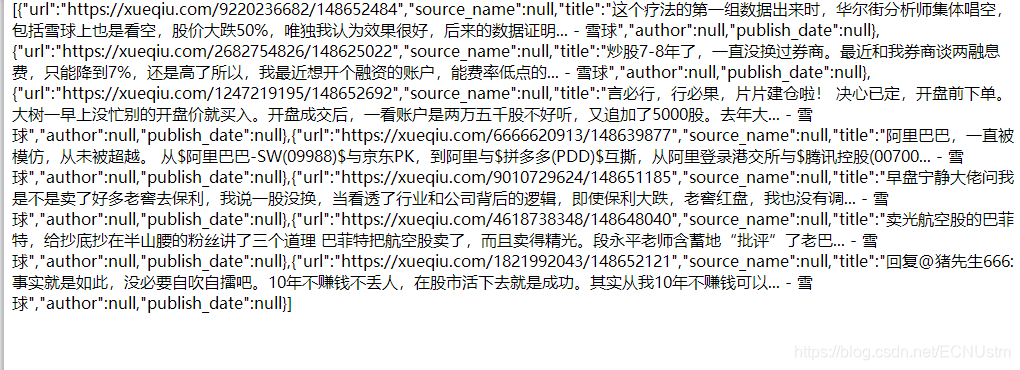

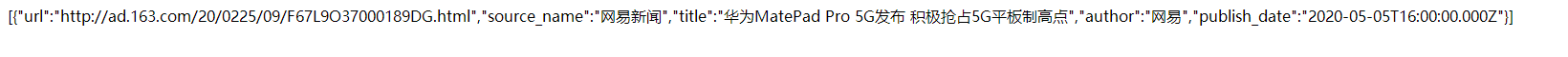

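The crawl and search results are as follows:

My understanding is still limited, and I haven't thought about or dug into the material deeply enough, so this is as far as I've gotten. Reading other people's blog posts made me realize I haven't taken web programming seriously enough so far. From now on I'll put more effort into this course and hope to reach a deeper understanding. Many crawl failures are still unresolved, but I have learned a little along the way.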
