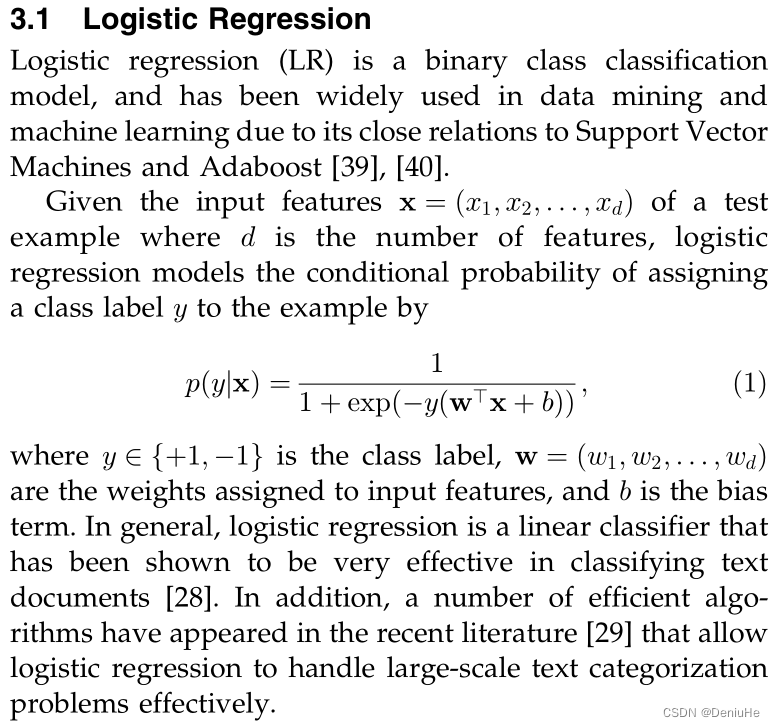

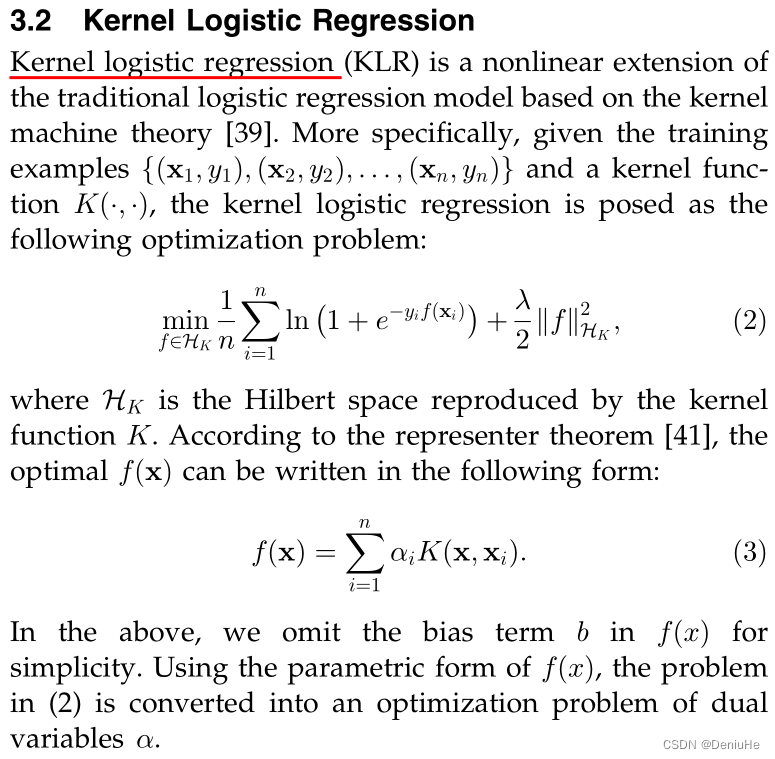

公式推导:

目标函数:

最小问题的目标函数:

求导数

第一部分导数

第二部分导数

第三部分导数

综合导数:

import numpy as np

class KernelLogisticRegression:

def __init__(self, kernel='rbf', gamma=0.1, C=100, tol=1e-6, max_iter=200):

self.kernel = kernel

self.gamma = gamma

self.C = C

self.tol = tol

self.max_iter = max_iter

def _rbf_kernel(self, X, Y):

dist = np.sum(X**2, axis=1).reshape(-1, 1) + np.sum(Y**2, axis=1) - 2 * np.dot(X, Y.T)

return np.exp(-self.gamma * dist)

def _sigmoid(self, x):

return 1 / (1 + np.exp(-x))

def _dual_algorithm(self, K, y):

alpha = np.zeros(K.shape[0])

for t in range(self.max_iter):

g = y - self._sigmoid(K.dot(alpha))

H = K * np.outer(self._sigmoid(K.dot(alpha)), 1 - self._sigmoid(K.dot(alpha)))

d = np.zeros(K.shape[0])

for i in range(K.shape[0]):

d[i] = H[i, i] + 1e-12

z = g / d

alpha_old = alpha

for i in range(K.shape[0]):

alpha[i] = max(0, min(alpha[i] + z[i], self.C))

z -= (alpha[i] - alpha_old[i]) * H[:, i]

if np.linalg.norm(alpha - alpha_old) < self.tol:

break

self.alpha = alpha

def fit(self, X, y):

if self.kernel == 'rbf':

K = self._rbf_kernel(X, X)

else:

raise ValueError('Unsupported kernel type')

self._dual_algorithm(K, y)

self.X_train = X

def predict(self, X):

if self.kernel == 'rbf':

K = self._rbf_kernel(X, self.X_train)

else:

raise ValueError('Unsupported kernel type')

y_pred = np.sign(K.dot(self.alpha))

return y_pred

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=0, n_classes=2, random_state=42)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = KernelLogisticRegression(kernel='rbf', gamma=0.1, C=1.0, tol=1e-4, max_iter=100)

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

from sklearn.metrics import accuracy_score

acc = accuracy_score(y_test, y_pred)

print('Accuracy:', acc)分类效果远不如逻辑回归和SVM,不知道是不是代码问题。

import numpy as np

from scipy.optimize import minimize

import matplotlib.pyplot as plt

from sklearn.metrics.pairwise import pairwise_kernels, pairwise_distances

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.preprocessing import StandardScaler

class KernelLogisticRegression:

def __init__(self, kernel='rbf', gamma=1000, C=100, tol=1e-5, max_iter=500):

self.kernel = kernel

self.gamma = gamma

self.C = C

self.tol = tol

self.max_iter = max_iter

self.alpha = None

self.X = None

self.y = None

self.n_samples = None

self.kernel_matrix = None

def _calculate_kernel_matrix(self, X1, X2):

if self.kernel == 'rbf':

K = np.exp(-self.gamma * ((X1[:, np.newaxis] - X2) ** 2).sum(axis=2))

# K = -pairwise_distances(X1, X2, metric='euclidean')

else:

raise ValueError('Invalid kernel type')

return K

def _sigmoid(self, x):

return 1/(1+np.exp(-x))

def _objective_function(self, alpha):

term1 = 0.5 * alpha.T @ self.kernel_matrix @ alpha

term2 = np.sum(self.y * np.log(self._sigmoid(self.kernel_matrix @ alpha)))

term3 = np.sum((1 - self.y) * np.log(1 - self._sigmoid(self.kernel_matrix @ alpha)))

return term1 - term2 - term3

def _gradient(self, alpha):

p1 = self._sigmoid(self.kernel_matrix @ alpha) - self.y

return self.kernel_matrix @ p1

def fit(self, X, y):

self.X = X

self.y = y

self.n_samples = X.shape[0]

self.kernel_matrix = self._calculate_kernel_matrix(X, X)

self.alpha = np.zeros(self.n_samples)

bounds = [(0, self.C)] * self.n_samples

options = {'maxiter': self.max_iter}

for i in range(self.max_iter):

alpha_prev = np.copy(self.alpha)

res = minimize(self._objective_function, self.alpha, method='L-BFGS-B', jac=self._gradient, bounds=bounds, options=options)

self.alpha = res.x

if np.linalg.norm(self.alpha - alpha_prev) < self.tol:

break

def predict(self, X):

K = self._calculate_kernel_matrix(X, self.X)

y_pred = self._sigmoid(K @ self.alpha)

y_pred[y_pred > 0.5] = 1

y_pred[y_pred <= 0.5] = 0

return y_pred.astype(int)

from sklearn.datasets import make_classification

from sklearn import datasets

from sklearn.model_selection import train_test_split

# X, y = make_classification(n_samples=1000, n_features=5, n_informative=5, n_redundant=0, n_classes=2, random_state=10)

# X, y = datasets.make_blobs(n_samples=1000, n_features=2, centers=2, cluster_std=2.0, random_state=44)

X, y = datasets.make_circles(n_samples=400, shuffle=True, noise=0.01, random_state=42)

plt.scatter(X[:,0], X[:,1],c=y)

plt.show()

scaler = StandardScaler()

X = scaler.fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

model = KernelLogisticRegression()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

from sklearn.metrics import accuracy_score

acc = accuracy_score(y_test, y_pred)

print('Accuracy:', acc)

LR_model = LogisticRegression()

LR_model.fit(X=X_train, y=y_train)

y_pred = LR_model.predict(X=X_test)

ACC = accuracy_score(y_test,y_pred)

print("ACC:::",ACC)

svm_model = SVC(C=100, kernel='rbf',gamma=0.1)

svm_model.fit(X=X_train, y=y_train)

y_pred = svm_model.predict(X=X_test)

ACC = accuracy_score(y_test,y_pred)

print("ACC:::",ACC)

文章实现了基于RBF核的逻辑回归模型,并与标准逻辑回归和SVM进行比较。在给定的数据集上,核逻辑回归的分类效果逊于逻辑回归和SVM。代码中包含了模型的训练、预测以及性能评估过程。

文章实现了基于RBF核的逻辑回归模型,并与标准逻辑回归和SVM进行比较。在给定的数据集上,核逻辑回归的分类效果逊于逻辑回归和SVM。代码中包含了模型的训练、预测以及性能评估过程。

1074

1074

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?