ex4

GitHub: https://github.com/DLW3D/coursera-machine-learning-ex

Exercise files download: https://s3.amazonaws.com/spark-public/ml/exercises/on-demand/machine-learning-ex4.zip

Neural Network Learning

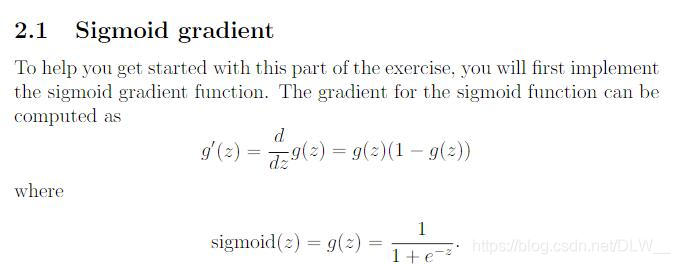

Sigmoid Gradient

sigmoidGradient.m

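```octave
function g = sigmoidGradient(z)
% SIGMOIDGRADIENT returns the gradient of the sigmoid function at z,
% g'(z) = sigmoid(z) .* (1 - sigmoid(z)), applied element-wise.
s = sigmoid(z);        % compute the sigmoid once and reuse it
g = s .* (1 - s);
end
```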
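A quick sanity check, written as a minimal sketch: compare the analytic sigmoid gradient with a central finite difference and confirm the known maximum g'(0) = 0.25. To keep the snippet self-contained it defines `sigmoid` and `sigmoidGradient` as anonymous functions (an assumption for this example) instead of calling the `sigmoid.m` / `sigmoidGradient.m` files from the exercise.

```octave
% Sigmoid and its analytic derivative g'(z) = g(z) .* (1 - g(z)),
% defined inline so the snippet runs without the exercise files.
sigmoid = @(z) 1 ./ (1 + exp(-z));
sigmoidGradient = @(z) sigmoid(z) .* (1 - sigmoid(z));

z = [-10 -1 0 1 10];
h = 1e-5;                                              % finite-difference step
numGrad = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h); % central difference
anaGrad = sigmoidGradient(z);                          % analytic gradient

disp(anaGrad);                          % largest value is at z = 0
maxErr = max(abs(numGrad - anaGrad));   % should be very small
fprintf('max |numeric - analytic| = %g\n', maxErr);
```

The gradient peaks at 0.25 for z = 0 and shrinks toward 0 for large |z|, which is one reason the weights are initialized with small values.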

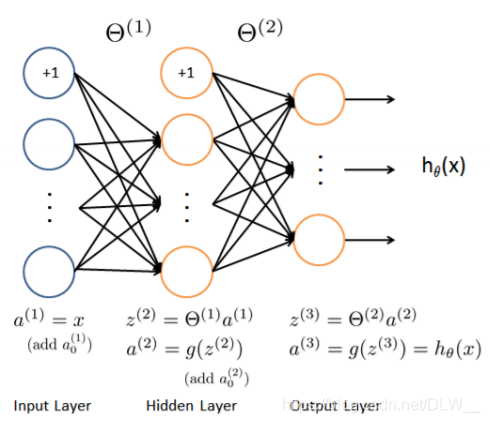

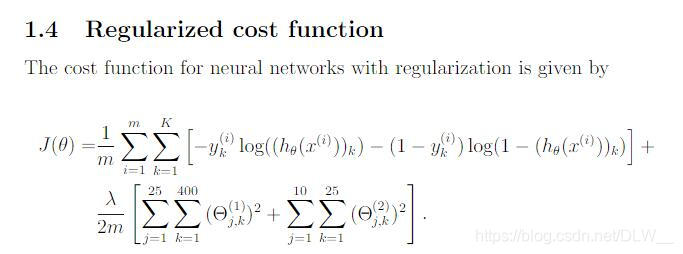

Feedforward

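In this exercise the feedforward pass computes the activations layer by layer (input, hidden, output); its main purpose here is to produce the output h_Θ(x) = a3 from which the regularized cost function J is evaluated.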

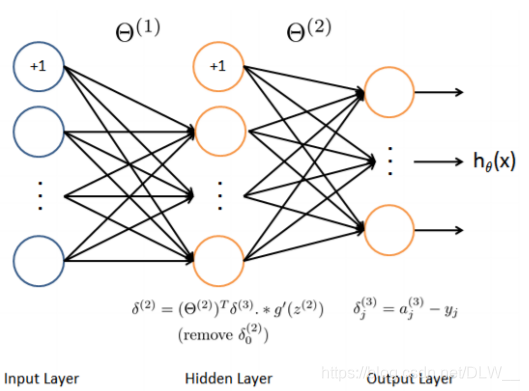
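For reference, the regularized cost that nnCostFunction.m evaluates can be written as follows, where K = 10 is the number of labels, y^(i) is the one-hot encoded label of example i, h_Θ(x^(i)) = a^(3) is the network output, and the bias column (k = 0) of each Θ is excluded from the regularization term:

```latex
J(\Theta) = \frac{1}{m}\sum_{i=1}^{m}\sum_{k=1}^{K}
  \Big[-y_k^{(i)}\log\big(h_\Theta(x^{(i)})_k\big)
       -\big(1-y_k^{(i)}\big)\log\big(1-h_\Theta(x^{(i)})_k\big)\Big]
+ \frac{\lambda}{2m}\Big[\sum_{j=1}^{25}\sum_{k=1}^{400}\big(\Theta^{(1)}_{j,k}\big)^2
  + \sum_{j=1}^{10}\sum_{k=1}^{25}\big(\Theta^{(2)}_{j,k}\big)^2\Big]
```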

Backpropagation

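Backpropagation first computes the error of the output layer, then propagates it backwards with the error-propagation equation to obtain the error of each hidden-layer unit, and finally uses these errors to compute the gradient of every parameter.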
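In the row-per-example matrix form used by nnCostFunction.m, those steps can be summarized as below. Here a^(1) = [1, X], a^(2) = [1, g(z^(2))], \bar{Θ}^(2) denotes Θ^(2) without its first (bias) column (`Theta2(:, 2:end)` in the code), \tilde{Θ}^(l) is Θ^(l) with its bias column set to zero, and ⊙ is element-wise multiplication; the dimensions assume 400 inputs, 25 hidden units and 10 labels.

```latex
\begin{aligned}
\delta^{(3)} &= a^{(3)} - Y && (m \times 10)\\
\delta^{(2)} &= \big(\delta^{(3)}\,\bar{\Theta}^{(2)}\big) \odot g'\big(z^{(2)}\big) && (m \times 25)\\
\frac{\partial J}{\partial \Theta^{(2)}} &= \frac{1}{m}\big(\delta^{(3)}\big)^{\top} a^{(2)} + \frac{\lambda}{m}\,\tilde{\Theta}^{(2)} && (10 \times 26)\\
\frac{\partial J}{\partial \Theta^{(1)}} &= \frac{1}{m}\big(\delta^{(2)}\big)^{\top} a^{(1)} + \frac{\lambda}{m}\,\tilde{\Theta}^{(1)} && (25 \times 401)
\end{aligned}
```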

nnCostFunction.m

```octave
function [J grad] = nnCostFunction(nn_params, ...
                                   input_layer_size, ...
                                   hidden_layer_size, ...
                                   num_labels, ...
                                   X, y, lambda)
%NNCOSTFUNCTION Implements the neural network cost function for a two layer
%neural network which performs classification
%   [J grad] = NNCOSTFUNCTION(nn_params, hidden_layer_size, num_labels, ...
%   X, y, lambda) computes the cost and gradient of the neural network. The
%   parameters for the neural network are "unrolled" into the vector
%   nn_params and need to be converted back into the weight matrices.
%
%   The returned parameter grad should be an "unrolled" vector of the
%   partial derivatives of the neural network.
%

% Reshape nn_params back into the parameters Theta1 and Theta2, the weight matrices
% for our 2 layer neural network
Theta1 = reshape(nn_params(1:hidden_layer_size * (input_layer_size + 1)), ...
                 hidden_layer_size, (input_layer_size + 1));

Theta2 = reshape(nn_params((1 + (hidden_layer_size * (input_layer_size + 1))):end), ...
                 num_labels, (hidden_layer_size + 1));

m = size(X, 1);

% Feedforward (dimensions assume 400 inputs, 25 hidden units, 10 labels)
a1 = [ones(m, 1), X];                % m x 401
z2 = a1 * Theta1';                   % m x 25
a2 = [ones(m, 1), sigmoid(z2)];      % m x 26
z3 = a2 * Theta2';                   % m x 10
a3 = sigmoid(z3);                    % m x 10

% One-hot encode the labels: y_(i, k) = 1 if y(i) == k
y_ = zeros(m, num_labels);           % m x 10
for i = 1:num_labels
    y_(:, i) = (y == i);
end

% Regularized cost (the bias columns are not regularized)
J = sum(sum(-y_ .* log(a3) - (1 - y_) .* log(1 - a3))) / m + ...
    lambda / (2 * m) * (sum(sum(Theta1(:, 2:end).^2)) + ...
                        sum(sum(Theta2(:, 2:end).^2)));

% Backpropagation
delta3 = a3 - y_;                                              % m x 10
delta2 = (delta3 * Theta2(:, 2:end)) .* sigmoidGradient(z2);   % m x 25

Theta2_grad = delta3' * a2 / m;      % 10 x 26
Theta1_grad = delta2' * a1 / m;      % 25 x 401

% Regularization: zero the bias columns so they are not penalized
Theta2(:, 1) = 0;
Theta1(:, 1) = 0;
Theta2_grad = Theta2_grad + lambda / m * Theta2;
Theta1_grad = Theta1_grad + lambda / m * Theta1;

% Unroll gradients
grad = [Theta1_grad(:) ; Theta2_grad(:)];

end
```
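The exercise package verifies backpropagation with gradient checking (checkNNGradients.m). The following is a self-contained sketch of the same idea, not the exercise's code: it rebuilds a tiny network with hypothetical sizes (`nIn`, `nHid`, `K`, `m`), deterministic sin/cos data instead of random data, and anonymous functions instead of the exercise's `.m` files, then compares the backpropagation gradient with a central-difference estimate of the cost's gradient.

```octave
% Tiny, hypothetical network sizes keep the numerical loop fast.
nIn = 3; nHid = 5; K = 3; m = 5; lambda = 1;
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Deterministic data and weights (no RNG seed needed).
X  = reshape(sin(1:m*nIn), m, nIn) / 10;
y  = 1 + mod((1:m)', K);                 % labels in 1..K
I  = eye(K);
Y  = I(y, :);                            % one-hot labels, m x K
T1 = reshape(sin(1:nHid*(nIn+1)), nHid, nIn + 1) / 10;
T2 = reshape(cos(1:K*(nHid+1)), K, nHid + 1) / 10;

% Regularized cost as a function of the unrolled parameter vector p.
n1 = nHid * (nIn + 1);
a1 = [ones(m, 1) X];
H  = @(A, B) sigmoid([ones(m, 1) sigmoid(a1 * A')] * B');
cost = @(A, B) sum(sum(-Y .* log(H(A, B)) - (1 - Y) .* log(1 - H(A, B)))) / m ...
       + lambda / (2 * m) * (sum(sum(A(:, 2:end).^2)) + sum(sum(B(:, 2:end).^2)));
costVec = @(p) cost(reshape(p(1:n1), nHid, nIn + 1), ...
                    reshape(p(n1+1:end), K, nHid + 1));

% Analytic gradient via backpropagation (same steps as nnCostFunction.m).
z2 = a1 * T1';
a2 = [ones(m, 1) sigmoid(z2)];
a3 = sigmoid(a2 * T2');
d3 = a3 - Y;
d2 = (d3 * T2(:, 2:end)) .* sigmoid(z2) .* (1 - sigmoid(z2));
G1 = d2' * a1 / m;
G2 = d3' * a2 / m;
G1(:, 2:end) = G1(:, 2:end) + lambda / m * T1(:, 2:end);
G2(:, 2:end) = G2(:, 2:end) + lambda / m * T2(:, 2:end);
grad = [G1(:); G2(:)];

% Numerical gradient by central differences, one parameter at a time.
p = [T1(:); T2(:)];
numgrad = zeros(size(p));
stepSize = 1e-4;
for k = 1:numel(p)
  step = zeros(size(p));
  step(k) = stepSize;
  numgrad(k) = (costVec(p + step) - costVec(p - step)) / (2 * stepSize);
end

relDiff = norm(numgrad - grad) / norm(numgrad + grad);
fprintf('Relative difference: %g\n', relDiff);
```

A very small relative difference indicates the backpropagation formulas agree with the cost function; the check is slow, so it is meant for debugging on small networks, not for use during training.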

Random Initialization of Weights

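The parameters of a neural network must be initialized randomly to break symmetry: if every weight started with the same value, all hidden units would compute the same activation and receive the same gradient, so they would stay identical throughout training.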
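The symmetry problem can be seen directly in a small sketch (hypothetical sizes and data, anonymous `sigmoid` instead of the exercise file): with every weight set to the same constant, one backpropagation pass gives every hidden unit exactly the same gradient row.

```octave
% Symmetry demo: every weight starts at the same constant.
sigmoid = @(z) 1 ./ (1 + exp(-z));
m = 4; nIn = 3; nHid = 4; K = 2;
X  = reshape(sin(1:m*nIn), m, nIn);      % arbitrary inputs
I  = eye(K);
Y  = I(1 + mod((1:m)', K), :);           % arbitrary one-hot labels
T1 = 0.1 * ones(nHid, nIn + 1);          % identical (non-random) init
T2 = 0.1 * ones(K, nHid + 1);

% One unregularized backpropagation pass, as in nnCostFunction.m
a1 = [ones(m, 1) X];
z2 = a1 * T1';
a2 = [ones(m, 1) sigmoid(z2)];
a3 = sigmoid(a2 * T2');
d3 = a3 - Y;
d2 = (d3 * T2(:, 2:end)) .* sigmoid(z2) .* (1 - sigmoid(z2));
G1 = d2' * a1 / m;

% Largest difference between any gradient row and the first one
rowSpread = max(max(abs(G1 - repmat(G1(1, :), nHid, 1))));
disp(rowSpread);
```

Since every row of the Theta1 gradient is the same, a gradient step keeps the rows of Theta1 equal, and the hidden units never learn different features.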

randInitializeWeights.m

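```octave
function W = randInitializeWeights(L_in, L_out)
% Returns an L_out x (1 + L_in) weight matrix (first column = bias weights)
% drawn uniformly from [-epsilon_init, epsilon_init).
epsilon_init = 0.12;
W = rand(L_out, 1 + L_in) * 2 * epsilon_init - epsilon_init;
end
```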
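The value 0.12 is consistent with the heuristic suggested in the exercise handout, epsilon_init = sqrt(6) / sqrt(L_in + L_out). The sketch below evaluates it for the input-to-hidden layer (L_in = 400, L_out = 25) and checks the shape and range of the resulting matrix.

```octave
% Heuristic: epsilon_init = sqrt(6) / sqrt(L_in + L_out)
L_in = 400; L_out = 25;
epsilon_init = sqrt(6) / sqrt(L_in + L_out);     % about 0.1188, close to 0.12

% Uniform samples in [-epsilon_init, epsilon_init), one extra column for bias
W = rand(L_out, 1 + L_in) * 2 * epsilon_init - epsilon_init;
fprintf('epsilon_init = %.4f, size(W) = %dx%d\n', ...
        epsilon_init, size(W, 1), size(W, 2));
```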

Prediction

predict.m

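```octave
function p = predict(Theta1, Theta2, X)
% Predicts the label (1..num_labels) of each row of X with a trained network.
m = size(X, 1);

h1 = sigmoid([ones(m, 1) X] * Theta1');     % hidden-layer activations
h2 = sigmoid([ones(m, 1) h1] * Theta2');    % output-layer activations

% The prediction is the index of the largest output unit in each row
[~, p] = max(h2, [], 2);
end
```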
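The second output of `max(h2, [], 2)` is the column index of the largest value in each row, which is the predicted label. A toy example with made-up outputs, plus the mapping from label 10 back to digit 0 used by this dataset:

```octave
% Toy output matrix h2: 2 examples (rows) x 3 classes (columns)
h2 = [0.10 0.70 0.20;
      0.90 0.05 0.05];
[~, p] = max(h2, [], 2);      % column index of the largest output per row
disp(p');

% In ex4data1.mat the digit 0 is stored as label 10; map labels back to digits
labels = [3; 10; 7];
digits = mod(labels, 10);
disp(digits');
```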

Training the Neural Network

```octave
% Load the training data into X and y
load('ex4data1.mat');

input_layer_size  = 400;  % 20x20 Input Images of Digits
hidden_layer_size = 25;   % 25 hidden units
num_labels = 10;          % 10 labels, from 1 to 10
                          % (note that we have mapped "0" to label 10)

fprintf('\nInitializing Neural Network Parameters ...\n')

initial_Theta1 = randInitializeWeights(input_layer_size, hidden_layer_size);
initial_Theta2 = randInitializeWeights(hidden_layer_size, num_labels);

% Unroll parameters
initial_nn_params = [initial_Theta1(:) ; initial_Theta2(:)];

fprintf('\nTraining Neural Network... \n')

options = optimset('MaxIter', 50);

% You should also try different values of lambda
lambda = 1;

% Create "short hand" for the cost function to be minimized
costFunction = @(p) nnCostFunction(p, ...
                                   input_layer_size, ...
                                   hidden_layer_size, ...
                                   num_labels, X, y, lambda);

% Now, costFunction is a function that takes in only one argument (the
% neural network parameters); fmincg is the optimizer shipped with the exercise
[nn_params, cost] = fmincg(costFunction, initial_nn_params, options);

% Obtain Theta1 and Theta2 back from nn_params
Theta1 = reshape(nn_params(1:hidden_layer_size * (input_layer_size + 1)), ...
                 hidden_layer_size, (input_layer_size + 1));

Theta2 = reshape(nn_params((1 + (hidden_layer_size * (input_layer_size + 1))):end), ...
                 num_labels, (hidden_layer_size + 1));
```
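Unrolling and reshaping must use the same column-major order and the same split point, otherwise the optimizer and the cost function would disagree about which entry is which weight. A minimal round-trip sketch with random placeholder matrices of the sizes used above:

```octave
input_layer_size = 400; hidden_layer_size = 25; num_labels = 10;
Theta1 = rand(hidden_layer_size, input_layer_size + 1);   % placeholder weights
Theta2 = rand(num_labels, hidden_layer_size + 1);

% Unroll column-major into one vector, as fmincg expects
nn_params = [Theta1(:); Theta2(:)];
fprintf('numel(nn_params) = %d\n', numel(nn_params));     % 25*401 + 10*26

% Reshape back with the same split point as nnCostFunction.m
n1 = hidden_layer_size * (input_layer_size + 1);
T1 = reshape(nn_params(1:n1), hidden_layer_size, input_layer_size + 1);
T2 = reshape(nn_params(n1 + 1:end), num_labels, hidden_layer_size + 1);
```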

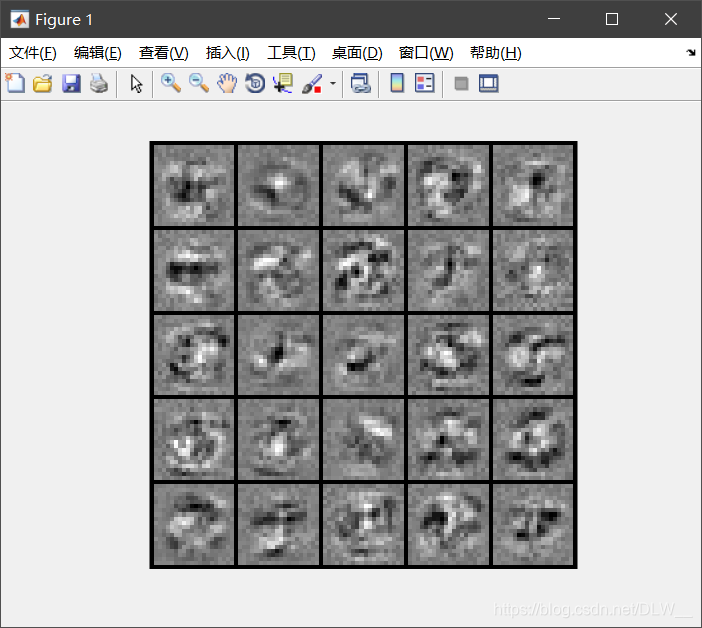

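```octave
fprintf('\nVisualizing Neural Network... \n')

% Show each hidden unit's input weights (bias column dropped) as an image
displayData(Theta1(:, 2:end));

pred = predict(Theta1, Theta2, X);

fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);
```

Output:

Training Set Accuracy: 95.260000

Because the weights are initialized randomly, the exact accuracy varies slightly from run to run.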

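This article walks through how a two-layer neural network learns: feedforward to compute the cost function, backpropagation to compute the gradients, random parameter initialization, prediction, and training. The code shows how to train the network from randomly initialized parameters and evaluate its accuracy on the training set.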
