01

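"""

1. Use the code below to download all the songs. Preferably name each file after the song's existing name.

2. Find a way to get the URLs of the chapter detail pages from https://www.17k.com/list/3015690.html

2.1 Split the work across processes, crawl each chapter detail page and save it locally, one HTML file per chapter.

2.2 html = response.text

2.3 If the response comes back garbled, set response.encoding = 'utf-8' / 'gbk' …

2.4 Extract the article text and save it locally.

"""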

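Code for downloading a song

import requests

path = 'https://webfs.yun.kugou.com/201908192103/34f30c50f3dddf902489d8329b5a8256/G072/M03/1B/04/iA0DAFc4Oq2ASH8UACk2YICuxZ0695.mp3'

# Request the file and take the raw bytes of the response body
response = requests.get(path)
mp3_ = response.content

# Write the bytes to a local file in binary mode
with open('joker.mp3', mode='wb') as f:
    f.write(mp3_)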

Getting the song URLs

import json

with open('C:/Users/admin/Desktop/top_500.txt', mode='r') as f:
    # The file holds JSON objects back to back on one line; split them on '}'
    res = f.readlines()[0].strip('\n').split('}')

for json_ in res[:-1]:
    # Put back the closing brace that split() removed
    _json = json_ + '}'
    _json = json.loads(_json)
    song_play_url = _json['song_play_url']
    if song_play_url is not None:
        print(song_play_url)
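Splitting on '}' only works while no object contains a nested object or a '}' inside a string. As a hedged alternative, the sketch below walks the line with `json.JSONDecoder.raw_decode`, which parses one value and reports where it ended. It assumes the file really is a run of JSON objects stored back to back, as the code above suggests; the helper name `parse_concatenated_json` and the sample data are made up for illustration.

```python
import json


def parse_concatenated_json(line):
    """Return the list of JSON values stored back to back in `line`."""
    decoder = json.JSONDecoder()
    objects = []
    pos = 0
    while pos < len(line):
        # raw_decode does not accept leading whitespace, so skip it by hand
        if line[pos].isspace():
            pos += 1
            continue
        # Parse one value starting at pos; the second result is the end index
        obj, pos = decoder.raw_decode(line, pos)
        objects.append(obj)
    return objects


# Hypothetical sample in the same shape as the records used above
sample = (
    '{"song_name": "a", "song_play_url": null}'
    '{"song_name": "b", "song_play_url": "http://example.com/b.mp3", "extra": {"k": 1}}'
)
songs = parse_concatenated_json(sample)
urls = [s["song_play_url"] for s in songs if s["song_play_url"] is not None]
```

The second sample record contains a nested object, which the '}' split would cut in half.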

import json
import multiprocessing  # process management package

import requests


def text(path):
    song_play_url_list = []
    song_name_list = []
    with open(path, mode='r') as f:
        res = f.readlines()[0].strip('\n').split('}')
    for json_ in res[:-1]:
        _json = json_ + '}'
        # json.loads decodes a JSON-encoded string into a Python object
        _json = json.loads(_json)
        song_play_url = _json['song_play_url']
        if song_play_url is not None:
            # Append the URL and the name together so the two lists stay aligned
            song_play_url_list.append(song_play_url)
            song_name_list.append(_json['song_name'])
    return song_play_url_list, song_name_list


def download(song_url, song_name):
    for path_, name in zip(song_url, song_name):
        # requests.get requests the given URL and returns the response
        response = requests.get(path_)
        mp3_ = response.content
        # Note the '/' after the folder name
        with open('E:/music/' + name + '.mp3', mode='wb') as f:
            f.write(mp3_)


# Single-process alternative: download(song_url, song_name)

if __name__ == "__main__":
    # Read the list inside the guard so child processes do not repeat it
    song_url, song_name = text('C:/Users/admin/Desktop/top_500.txt')
    x = len(song_url) // 2
    # Create the processes: each one downloads half of the list
    p1 = multiprocessing.Process(target=download, args=(song_url[0:x], song_name[0:x]))
    p2 = multiprocessing.Process(target=download, args=(song_url[x:], song_name[x:]))
    # Start the processes
    p1.start()
    p2.start()
    # Wait for both processes to finish
    p1.join()
    p2.join()
    print("Over")

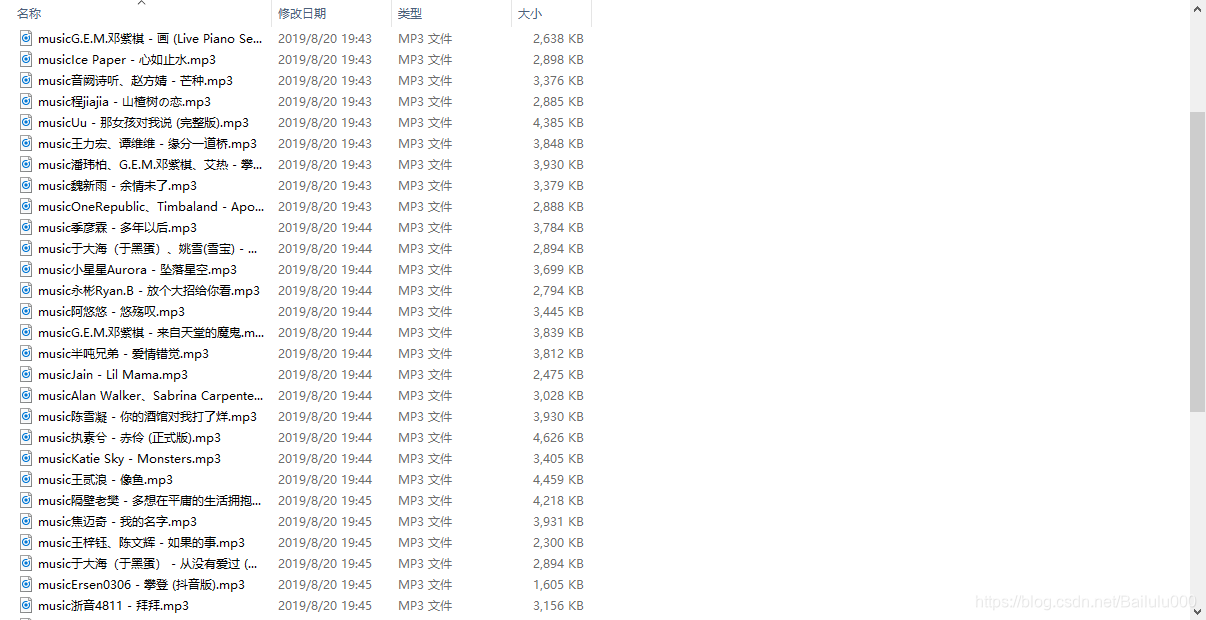

运行结果:
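The download code above builds each file name directly from song_name. Song titles can contain characters such as / or ?, which Windows rejects in file names, so open() may fail partway through a long run. A minimal sketch of one way to guard against that follows; `safe_filename` and `target_path` are hypothetical helpers, not part of the original homework.

```python
import os
import re

# Characters that Windows does not allow in file names
_INVALID = re.compile(r'[\\/:*?"<>|]')


def safe_filename(name):
    """Replace characters that are invalid in Windows file names with '_'."""
    return _INVALID.sub('_', name).strip()


def target_path(folder, song_name):
    """Build '<folder>/<cleaned name>.mp3' using the platform's separator."""
    return os.path.join(folder, safe_filename(song_name) + '.mp3')
```

Inside download, `open(target_path('E:/music', name), mode='wb')` would then replace the hand-built string.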

02

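- Find a way to get the URLs of the chapter detail pages from https://www.17k.com/list/3015690.html

- Split the work across processes, crawl each chapter detail page and save it locally, one HTML file per chapter.

- html = response.text

- If the response comes back garbled, set response.encoding = 'utf-8' / 'gbk' …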
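The last point can also be handled by decoding the raw bytes yourself instead of guessing the encoding by hand. The sketch below tries each candidate encoding in turn and returns the first result that decodes cleanly. The helper name `decode_html` and the candidate order are assumptions for illustration, not something the task prescribes.

```python
def decode_html(raw, encodings=("utf-8", "gbk")):
    """Decode bytes with the first encoding in `encodings` that fits."""
    for enc in encodings:
        try:
            return raw.decode(enc)
        except UnicodeDecodeError:
            # This encoding does not match the bytes; try the next one
            continue
    # Nothing matched: fall back to the first encoding and mark bad bytes
    return raw.decode(encodings[0], errors="replace")
```

With requests, this would be called as `html = decode_html(response.content)`, since response.content holds the undecoded bytes. UTF-8 goes first because it is strict: GBK bytes almost never pass as valid UTF-8, while the reverse can succeed and silently produce wrong characters.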

import multiprocessing
import re

import requests
from lxml import etree


def novel():
    # Request the chapter list page; a successful call returns <Response [200]>
    response = requests.get('https://www.17k.com/list/3015690.html')
    response.encoding = 'utf8'
    # etree.HTML parses the text into a tree that can be queried with XPath
    tree = etree.HTML(response.text)
    # Select every chapter <a> tag, for example:
    # <a target="_blank" href="/chapter/3015690/38259921.html" title="第一章 和龙王谈交易
    w_list = tree.xpath('//html/body/div[@class="Main List"]/dl[@class="Volume"]/dd/a')
    url = []
    for i in w_list:
        href = i.xpath('./@href')[0]  # value of the href attribute
        a = 'http://www.17k.com'  # no trailing slash: href already starts with '/'
        html = a + href
        url.append(html)
    return url


def write_(html_list, start):
    # start is the number of chapters handled before this slice, so the two
    # processes do not overwrite each other's 1.txt, 2.txt, ...
    b = start
    for html in html_list:
        res = requests.get(html)
        res.encoding = 'utf8'
        # Regex alternative: re.findall('<p>(.*?)</p>', res.text)
        tree1 = etree.HTML(res.text)
        b_list = tree1.xpath('//html/body/div[@class="area"]/div[2]/div[2]/div[1]/div[2]/p/text()')
        # Join the paragraphs; str(b_list) would write the list's repr instead
        txt = '\n'.join(b_list)
        b += 1
        with open('E:/python/Homework05/novel/' + str(b) + '.txt', mode='w', encoding='utf8') as f:
            f.write(txt)


if __name__ == "__main__":
    # Fetch the chapter list once, in the parent process only
    html_list = novel()
    x = len(html_list) // 2
    p1 = multiprocessing.Process(target=write_, args=(html_list[0:x], 0))
    p2 = multiprocessing.Process(target=write_, args=(html_list[x:], x))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    print("Over")
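Both programs split their list into two halves by hand. The same idea extends to any number of processes. The sketch below is one possible generalization, not the method the homework asks for: `split_evenly` cuts a list into n contiguous chunks, and `run_in_processes` starts one `multiprocessing.Process` per chunk and waits for all of them. Both names are hypothetical.

```python
import multiprocessing


def split_evenly(items, n):
    """Split items into n contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(items), n)
    chunks, start = [], 0
    for i in range(n):
        # The first `extra` chunks take one additional item each
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def run_in_processes(worker, items, n=4):
    """Run worker(chunk) in n separate processes and wait for all of them."""
    processes = [
        multiprocessing.Process(target=worker, args=(chunk,))
        for chunk in split_evenly(items, n)
    ]
    for p in processes:
        p.start()
    for p in processes:
        p.join()
```

Here worker is assumed to be a module-level function that accepts a single list, for example a list of (chapter number, URL) pairs, so that each process can name its output files without colliding with the others. As in the code above, the call to `run_in_processes` belongs under the `if __name__ == "__main__":` guard.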

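This post shows how to download songs with Python: read the song URLs from a local file and save each one as an MP3 file. It then takes a first look at crawling web content, using the chapter detail URLs of a novel on the 17k site, covering how to split the work across processes and store the page content locally.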
