MapReduce分布式计算系统

一、编程调试WordCount程序

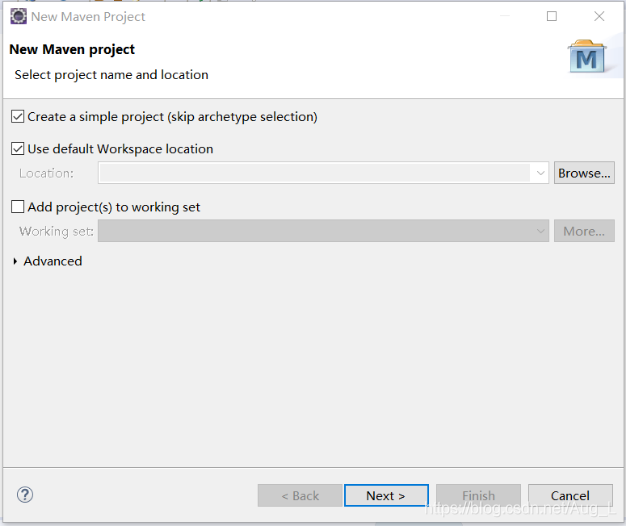

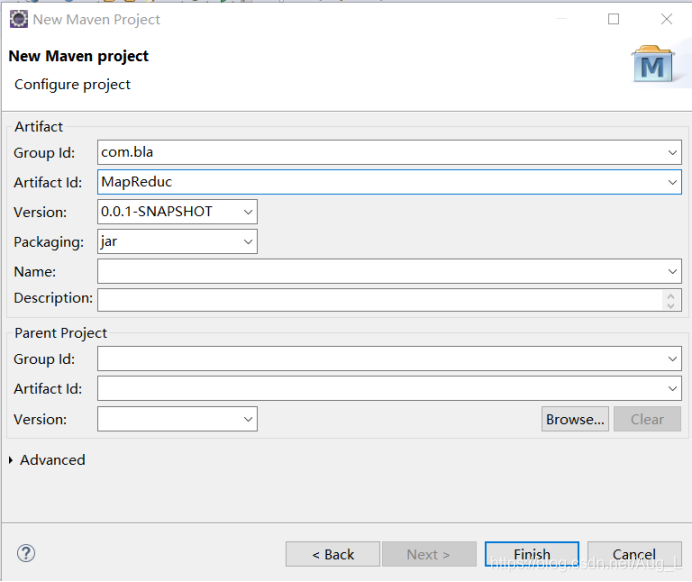

新建maven项目

修改pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.bla</groupId>

<artifactId>MapReduce</artifactId>

<version>0.0.1-SNAPSHOT</version>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.3</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<artifactId> maven-assembly-plugin </artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

<archive>

<manifest>

<mainClass>wordcount.WordCountDriver</mainClass>

</manifest>

</archive>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

编写Wordcount程序

package wordcount;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

public class WordCountDriver {

public static class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable>{

//对数据进行打散

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//输入数据 hello world love work

String line = value.toString();

//对数据切分

String[] words=line.split(" ");

//写出<hello, 1>

for(String w:words) {

//写出reducer端

context.write(new Text(w), new IntWritable(1));

}

}

}

public static class WordCountReducer extends Reducer <Text, IntWritable, Text, IntWritable>{

protected void reduce(Text Key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

//记录出现的次数

int sum=0;

//累加求和输出

for(IntWritable v:values) {

sum +=v.get();

}

context.write(Key, new IntWritable(sum));

}

}

public static void main(String[] args) throws IllegalArgumentException, IOException, ClassNotFoundException, InterruptedException {

// 设置root权限

System.setProperty("HADOOP_USER_NAME", "root");

//创建job任务

Configuration conf=new Configuration();

Job job=Job.getInstance(conf);

//指定jar包位置

job.setJarByClass(WordCountDriver.class);

//关联使用的Mapper类

job.setMapperClass(WordCountMapper.class);

//关联使用的Reducer类

job.setReducerClass(WordCountReducer.class);

//设置Mapper阶段输出的数据类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

//设置Reducer阶段输出的数据类型

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

//设置数据输入路径和文件名

FileInputFormat.setInputPaths(job, new Path("/usr/local/hdfs/input/cc.txt"));

//设置数据输出路径

FileOutputFormat.setOutputPath(job, new Path("/usr/local/hdfs/output"));

//提交任务

Boolean rs=job.waitForCompletion(true);

//退出

System.exit(rs?0:1);

}

}

二、生成jar包,在虚拟机上运行

1、程序运行成功后在cmd中打包

2、mvn assembly:assembly

3、上传到xshell上运行

4、创建的input文件,在input中写入内容

hdfs -mkdir /output

5、运行Java程序

6、查看结果:

hdfs -ls /output

hdfs -cat /output/part-r-00000

本文详细介绍了如何使用MapReduce编程调试WordCount程序,从新建Maven项目、配置pom.xml,到编写WordCount代码。接着,文章指导读者如何生成jar包,并在虚拟机上运行该程序,包括在CMD中打包、通过mvn assembly命令、上传到xshell、准备input数据以及最终查看运行结果。

本文详细介绍了如何使用MapReduce编程调试WordCount程序,从新建Maven项目、配置pom.xml,到编写WordCount代码。接着,文章指导读者如何生成jar包,并在虚拟机上运行该程序,包括在CMD中打包、通过mvn assembly命令、上传到xshell、准备input数据以及最终查看运行结果。

751

751

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?