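When there are many prompts, you can call the large model asynchronously to cut the total running time. The ordinary synchronous version, with 20 prompts: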

import requests

import time

from openai import OpenAI

client = OpenAI(

api_key="",

base_url="https://open.bigmodel.cn/api/paas/v4/"

)

def call_large_model(prompt):

completion = client.chat.completions.create(

model="glm-4-plus",

messages=[

{"role": "system", "content": "你是一个聪明且富有创造力的小说作家"},

{"role": "user",

"content": prompt}

],

top_p=0.7,

temperature=0.9

)

return completion.choices[0].message

def main_sync():

prompts = [f"说出数字,不用说其他内容 {i}" for i in range(20)]

start_time = time.time()

for prompt in prompts:

size = call_large_model(prompt)

print(f"{size}")

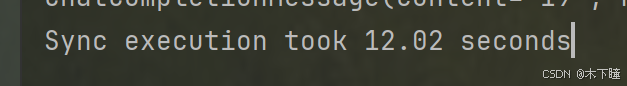

print(f"Sync execution took {time.time() - start_time:.2f} seconds")

if __name__ == "__main__":

main_sync()

Asynchronous version:

import aiohttp

import asyncio

import time

API_KEY = ""

BASE_URL = "https://open.bigmodel.cn/api/paas/v4"

async def call_large_model(prompt, session):

url = f"{BASE_URL}/chat/completions"

headers = {

"Authorization": f"Bearer {API_KEY}",

"Content-Type": "application/json"

}

messages = [

{"role": "system", "content": "你是一个聪明且富有创造力的小说作家"},

{"role": "user", "content": prompt}

]

payload = {

"model": "glm-4-plus",

"messages": messages,

"top_p": 0.7,

"temperature": 0.9

}

async with session.post(url, json=payload, headers=headers) as response:

completion = await response.json()

return completion['choices'][0]['message']

async def main_async():

prompts = [f"说出数字,不用说其他内容 {i}" for i in range(20)]

start_time = time.time()

async with aiohttp.ClientSession() as session:

tasks = [call_large_model(prompt, session) for prompt in prompts]

results = await asyncio.gather(*tasks)

for result in results:

print(f"{result}")

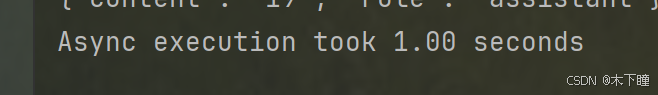

print(f"Async execution took {time.time() - start_time:.2f} seconds")

if __name__ == "__main__":

asyncio.run(main_async())
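The difference between the two versions above can be reproduced without any API key. The following is only a minimal sketch: `fake_model_call` is a hypothetical stand-in that replaces the network request with `asyncio.sleep`, and the 0.2-second latency is an arbitrary assumption. It shows that awaiting calls one by one takes roughly the sum of the latencies, while `asyncio.gather` takes roughly the longest single latency and still returns results in input order.

```python
import asyncio
import time


async def fake_model_call(prompt: str, latency: float = 0.2) -> str:
    """Hypothetical stand-in for a model request: wait, then echo the prompt."""
    await asyncio.sleep(latency)
    return f"reply to {prompt}"


async def run_sequential(prompts):
    # Each call is awaited before the next one starts, so latencies add up.
    return [await fake_model_call(p) for p in prompts]


async def run_concurrent(prompts):
    # All calls are started together; gather keeps the input order.
    return await asyncio.gather(*(fake_model_call(p) for p in prompts))


def timed(coro_fn, prompts):
    """Run an async function to completion and return (results, seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(prompts))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    prompts = [f"prompt {i}" for i in range(5)]
    _, seq_time = timed(run_sequential, prompts)
    _, con_time = timed(run_concurrent, prompts)
    print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

With real requests the gain depends on how many concurrent calls the service accepts, so the numbers from this simulation should not be read as a benchmark.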

Asynchronous version with the openai SDK:

    import asyncio
    import time

    from openai import AsyncOpenAI

    # Create the asynchronous client.
    client = AsyncOpenAI(
        base_url="",  # fill in the endpoint's base URL
        api_key="",  # ModelScope Token
    )

    # Extra request parameters.
    extra_body = {
        "enable_thinking": False,
        # "thinking_budget": 4096
    }


    async def process_request(user_query: str):
        """Handle a single request asynchronously."""
        response = await client.chat.completions.create(
            model="Qwen/Qwen3-235B-A22B",
            messages=[{"role": "user", "content": user_query}],
            stream=False,
            extra_body=extra_body,
        )
        # No tools are passed in the request, so read the text reply
        # (tool_calls would be None here).
        return response.choices[0].message.content


    async def process_batch_requests(queries: list):
        """Handle a batch of requests concurrently."""
        tasks = [process_request(query) for query in queries]
        results = await asyncio.gather(*tasks)
        return results


    # Usage example
    if __name__ == "__main__":
        queries = [
            "9.9和9.11谁大",  # "Which is larger, 9.9 or 9.11?"
            "10.1和10.10哪个更大",  # "Which is larger, 10.1 or 10.10?"
            "比较5.5和5.55的大小",  # "Compare 5.5 and 5.55"
        ]

        # Run the asynchronous batch.
        start = time.time()
        results = asyncio.run(process_batch_requests(queries))

        # Print all results.
        for query, answer in zip(queries, results):
            print(f"Result for query {query}:")
            print(answer)
            print("-" * 50)

        end = time.time()
        print(f"Done in {end - start:.2f} seconds")
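All the asynchronous examples above start every request at once. If the service limits concurrent requests (an assumption, since the original does not mention limits), one possible refinement is to cap the number of requests in flight with `asyncio.Semaphore`. The sketch below is illustrative only: `bounded_gather`, `fake_request`, and `InFlightCounter` are hypothetical names, and the request is simulated with `asyncio.sleep` so the block runs without credentials.

```python
import asyncio
from functools import partial


async def bounded_gather(coro_fns, limit: int):
    """Run zero-argument coroutine functions with at most `limit` in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def run_one(fn):
        async with semaphore:
            return await fn()

    # return_exceptions=True returns a failure as a value in its slot,
    # so one failed request does not discard the other results.
    return await asyncio.gather(
        *(run_one(fn) for fn in coro_fns), return_exceptions=True
    )


class InFlightCounter:
    """Tracks how many simulated requests run at the same time."""

    def __init__(self):
        self.current = 0
        self.peak = 0


async def fake_request(i: int, counter: InFlightCounter) -> int:
    """Hypothetical stand-in for a model call; request 3 fails on purpose."""
    counter.current += 1
    counter.peak = max(counter.peak, counter.current)
    try:
        await asyncio.sleep(0.05)
        if i == 3:
            raise RuntimeError("simulated failure")
        return i * 10
    finally:
        counter.current -= 1


if __name__ == "__main__":
    counter = InFlightCounter()
    jobs = [partial(fake_request, i, counter) for i in range(8)]
    results = asyncio.run(bounded_gather(jobs, limit=3))
    print(results)
    print(f"peak concurrency: {counter.peak}")
```

Applied to the code above, the jobs could be built as `[partial(process_request, q) for q in queries]`; a suitable `limit` depends on the provider and would need to be found by experiment.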
