论文:《PVANET: Deep but Lightweight Neural Networks for Real-time Object Detection》

论文链接:https://arxiv.org/abs/1608.08021

代码链接:https://github.com/sanghoon/pva-faster-rcnn

参考原文:https://blog.youkuaiyun.com/u014380165/article/details/79502113

https://www.cnblogs.com/fariver/p/7449563.html

1、论述

RCNN系列的object detection算法总体上分为特征提取、RPN网络和分类回归三大部分,Faster RCNN的效果虽好,但是速度较慢,这篇文章的出发点是改进Faster CNN的特征提取网络,也就是用PVANET来提取特征作为Faster RCNN网络中RPN部分和RoI Pooling部分的输入,改进以后的Faster RCNN可以在基本不影响准确率的前提下减少运行时间。

加宽和加深网络向来是提升网络效果的两个主要方式,因为要提速,所以肯定做不到同时加宽和加深网络,因此PVANET网络的总体设计原则是:less channels with more layers,深层网络的训练问题可以通过residual(残差网络)结构来解决。

2、创新点

PVAnet是RCNN系列目标方向,基于Faster-RCNN进行改进,Faster-RCNN基础网络可以使用ZF、VGG、Resnet等,但精度与速度难以同时提高。PVAnet的含义应该为:Performance Vs Accuracy,意为加速模型性能,同时不丢失精度的含义。主要的工作再使用了高效的自己设计的基础网络。

该网络使用了C.ReLU、Inception、HyperNet以及residual模块等技巧。

整体网络结构如图1所示:

3、C.ReLU

C.ReLU(concatenated ReLU)主要是用来减少计算量。

作者观察CNN网络的前面一些基础网络卷积层参数,发现低层卷积核成对出现(参数互为相反数),因此,作者减小输出特征图个数为原始一半,另一半直接取相反数得到,再将两部分特征图连接,从而减少了卷积核数目。

另外在本文中,和原始的C.ReLU相比,作者还额外添加了scale/shift层用来做尺度变换和平移操作,相当于一个线性变换,改变原来完全对称的数据分布。

C.ReLU的模块结构如图2所示。

4、Inception模块

作者发现googlenet中Inception模块由于具有多种感受野的卷积核组合,因此能够适应多尺度目标的检测,作者使用基于Inception模块组合并且组合跳级路特征进行基础网络后部分特征的提取。

Figure3是作者使用的Inception结构,其中右边和左边相比多了stride=2,所以输出的feature map的size减半。

5、HyperNet

多层特征融合可以尽可能利用细节和抽象特征,这种做法在object detection领域也常常用到,比如SSD,但是在融合的时候需要注意融合的特征要尽量不冗余,否则就白白增加计算量了。

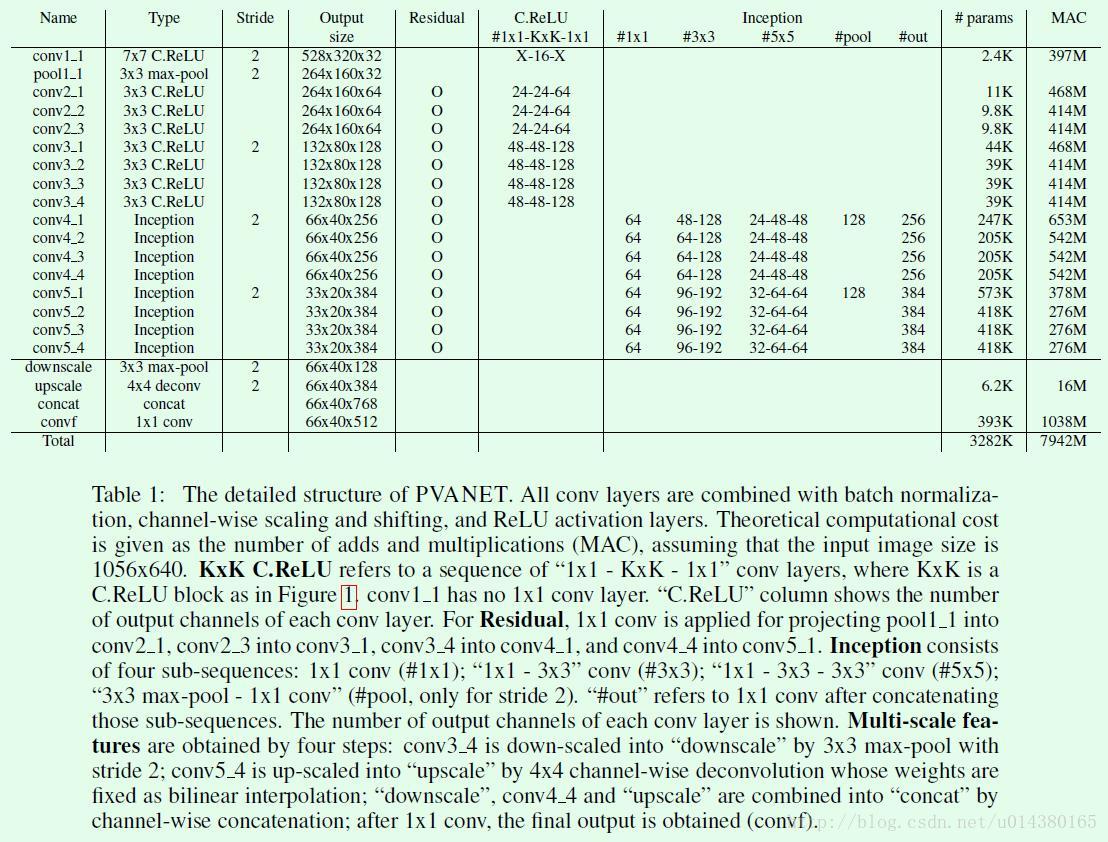

Table1是PVANET的网路结构图。前半部分采用常规的卷积,后半部分采用Inception结构,另外residual结构的思想也贯穿了这两部分结构。之所以引入residual结构,主要还是回到作者一开始提到的网络结构设计原则:less channels with more layers。受限于深层网络的训练瓶颈,所以引入residual结构。C.ReLU那一列包含1*1、K*K和1*1卷积,其中K*K部分就是前面Figure1的C.ReLU结构,而前后的两个1*1卷积是做通道的缩减和还原,主要还是为了减少计算量。强调下关于特征层融合的操作:将conv3_4进downscale、将conv5_4进行upscale,这样这两层feature map的size就和conv4_4的输出size一样,然后将二者和conv4_4进行concate得到融合以后的特征。

6、实验过程

除了以上基础网络的区别:

(1) PVAnet使用的anchor与faster-rcnn不同,PVA在每个特征点上使用了25个anchor(5种尺度,5种形状)。

(2) 并且RPN网络不使用全部特征图就能达到很好的定位精度,RPN网络只用生成200个proposals;

(3) 使用VOC2007、VOC2012、COCO一起训练模型;

(4) 可以使用类似于Fast-RCNN的truncated SVD来加速全连接层的速度;

(5) 使用投票机制增加训练精度,投票机制应该参考于R-FCN

7、实验结果

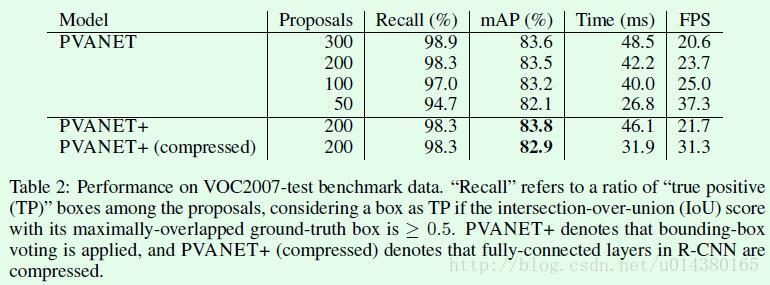

Table2是在VOC2007数据集上的不同配置的PVANET网络实验结果。

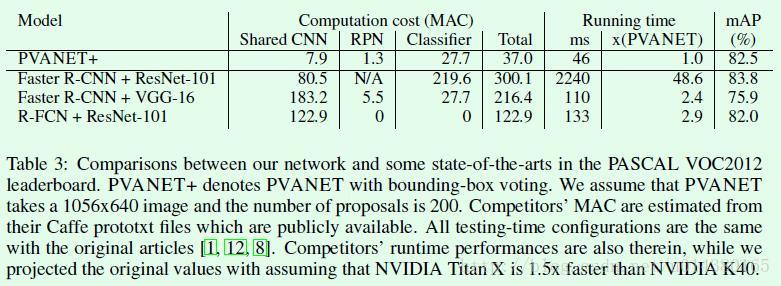

Table3是在VOC2012数据集上的PVANET网络和Faster RCNN、RFCN网络的对比。

PVANet是一种改进的Faster RCNN网络,通过优化特征提取网络实现速度与精度的平衡。它采用C.ReLU、Inception模块和Residual结构,结合多层特征融合,使模型在保持高精度的同时大幅提速。

PVANet是一种改进的Faster RCNN网络,通过优化特征提取网络实现速度与精度的平衡。它采用C.ReLU、Inception模块和Residual结构,结合多层特征融合,使模型在保持高精度的同时大幅提速。

6603

6603

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?