Purchase a machine

URL: https://www.aliyun.com/product/ecs?spm=5176.12825654.eofdhaal5.2.e9392c4acVBgPk

Log in to the machine as root

Create a user

    $ useradd hadoop
    $ vi /etc/sudoers
    # Find the line "root ALL=(ALL) ALL" and add the following line:
    hadoop ALL=(ALL) NOPASSWD:ALL
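Editing /etc/sudoers by hand is easy to get wrong. As an alternative sketch (not part of the original steps), the same rule can be written to a separate file, assuming the system's /etc/sudoers includes the /etc/sudoers.d directory. The helper name `write_sudoers_rule` and the target file name are hypothetical.

```shell
# Hypothetical helper: write a passwordless-sudo rule for one user to a file.
# $1 = user name
# $2 = target file (for example /etc/sudoers.d/hadoop when run as root)
write_sudoers_rule() (
    user=$1
    target=$2
    printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$user" > "$target" || exit 1
    # 0440 is the conventional mode for sudoers files
    chmod 0440 "$target"
)
```

Run as root, `write_sudoers_rule hadoop /etc/sudoers.d/hadoop` would create the rule, and `visudo -cf /etc/sudoers.d/hadoop` can then check the file's syntax before relying on it.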

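Create directories

Create the following directories in the hadoop user's home directory:

    $ mkdir app data lib maven_repos software script source tmp
    # app          final install location for software
    # data         test data
    # lib          JARs built during development
    # maven_repos  local Maven repository
    # software     software packages
    # script       scripts (flume, hive, project)
    # source       source code
    # tmp          temporary files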
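Plain `mkdir` fails if any of the directories already exists, so rerunning the step stops with an error. A minimal sketch of a repeatable version follows; the function name `make_workdirs` is hypothetical, and the base directory defaults to `$HOME` as an assumption.

```shell
# Create the working directories under a base directory (default: $HOME).
# mkdir -p makes the call safe to repeat: existing directories are left alone.
make_workdirs() (
    base=${1:-$HOME}
    for d in app data lib maven_repos software script source tmp; do
        mkdir -p "$base/$d" || exit 1
    done
)
```

Calling `make_workdirs` as the hadoop user with no argument creates the same eight directories as the command above.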

Download and extract the JDK

JDK installation

- Download

https://download.oracle.com/otn/java/jdk/8u221-b11/230deb18db3e4014bb8e3e8324f81b43/jdk-8u221-linux-x64.tar.gz

- Extract and configure

    # /usr/java is owned by root: run the next two commands as root or prefix them with sudo
    $ mkdir /usr/java
    $ tar -zxvf ./jdk-8u221-linux-x64.tar.gz -C /usr/java/
    # Configure environment variables
    $ sudo vim /etc/profile
    export JAVA_HOME=/usr/java/jdk1.8.0_221
    export PATH=$JAVA_HOME/bin:$PATH
    $ source /etc/profile
    # Verify
    $ java
    Usage: java [-options] class [args...]
               (to execute a class)
       or  java [-options] -jar jarfile [args...]
               (to execute a jar file)
    where options include:
        -d32          use a 32-bit data model if available
        -d64          use a 64-bit data model if available
        -server       to select the "server" VM
                      The default VM is server,
                      because you are running on a server-class machine.
        -cp <class search path of directories and zip/jar files>
        -classpath <class search path of directories and zip/jar files>
                      A : separated list of directories, JAR archives,
                      and ZIP archives to search for class files.
        -D<name>=<value>
                      set a system property
        -verbose:[class|gc|jni]
                      enable verbose output
        -version      print product version and exit
        -version:<value>
                      Warning: this feature is deprecated and will be removed
                      in a future release.
                      require the specified version to run
        -showversion  print product version and continue
        -jre-restrict-search | -no-jre-restrict-search
                      Warning: this feature is deprecated and will be removed
                      in a future release.
                      include/exclude user private JREs in the version search
        -? -help      print this help message
        -X            print help on non-standard options
        -ea[:<packagename>...|:<classname>]
        -enableassertions[:<packagename>...|:<classname>]
                      enable assertions with specified granularity
        -da[:<packagename>...|:<classname>]
        -disableassertions[:<packagename>...|:<classname>]
                      disable assertions with specified granularity
        -esa | -enablesystemassertions
                      enable system assertions
        -dsa | -disablesystemassertions
                      disable system assertions
        -agentlib:<libname>[=<options>]
                      load native agent library <libname>, e.g. -agentlib:hprof
                      see also, -agentlib:jdwp=help and -agentlib:hprof=help
        -agentpath:<pathname>[=<options>]
                      load native agent library by full pathname
        -javaagent:<jarpath>[=<options>]
                      load Java programming language agent, see java.lang.instrument
        -splash:<imagepath>
                      show splash screen with specified image
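If the profile is edited more than once, the same `export` lines can end up duplicated. The sketch below appends a line only when it is not already present; the helper name `append_once` is hypothetical and not part of the original steps.

```shell
# Append a line to a file only if that exact line is not already there.
# $1 = line to add, $2 = file to modify
append_once() (
    line=$1
    file=$2
    touch "$file" || exit 1
    # -x matches whole lines, -F treats the text literally, -q suppresses output
    grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
)
```

Run as root, `append_once 'export JAVA_HOME=/usr/java/jdk1.8.0_221' /etc/profile` followed by `append_once 'export PATH=$JAVA_HOME/bin:$PATH' /etc/profile` would reproduce the two lines above. After `source /etc/profile`, running `java -version` is a shorter check than bare `java`, since it prints only the version information.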

Configure passwordless SSH login

    $ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
    Created directory '/home/hadoop/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/hadoop/.ssh/id_rsa.
    Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:8ZhB5+0Mu1WPhe85fijGI08Dctp/V+wjLXxtR1x+5Vw hadoop@bigdata
    The key's randomart image is:
    +---[RSA 2048]----+
    | . . |
    | . o . . |
    | o o . o .|
    | * = . =E|
    | S = + .**|
    | = + .@|
    | . o.+ .*=|
    | .o==++O|
    | +o++++|
    +----[SHA256]-----+
    $ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    $ chmod 0600 ~/.ssh/authorized_keys
    $ ssh bigdata
    The authenticity of host 'bigdata (172.26.19.x)' can't be established.
    ECDSA key fingerprint is SHA256:yoQ6N2QsIscrIYJiSJ7MnHIWdQ8T/zAaTIcXB0zZJNY.
    ECDSA key fingerprint is MD5:ba:ed:b5:0a:c2:f0:0a:52:e1:8d:76:1f:4d:b0:72:28.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'bigdata,172.26.19.x' (ECDSA) to the list of known hosts.
    Last login: Mon Aug 26 22:26:45 2019 from 124.64.74.89
    Welcome to Alibaba Cloud Elastic Compute Service !
    $ ssh localhost date
    The authenticity of host 'localhost (127.0.0.1)' can't be established.
    ECDSA key fingerprint is SHA256:yoQ6N2QsIscrIYJiSJ7MnHIWdQ8T/zAaTIcXB0zZJNY.
    ECDSA key fingerprint is MD5:ba:ed:b5:0a:c2:f0:0a:52:e1:8d:76:1f:4d:b0:72:28.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
    Mon Aug 26 22:56:14 CST 2019
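Appending the public key with `>>` every time the step is repeated leaves duplicate entries in authorized_keys, and sshd can refuse key login when the directory or file permissions are too open. The sketch below handles both; the helper name `authorize_key` is hypothetical, and the default directory `$HOME/.ssh` is an assumption matching the session above.

```shell
# Hypothetical helper: add a public key to authorized_keys, skipping
# duplicates, with restrictive permissions (directory 700, file 600).
# $1 = public key file, $2 = ssh directory (default: $HOME/.ssh)
authorize_key() (
    pub=$1
    ssh_dir=${2:-$HOME/.ssh}
    auth=$ssh_dir/authorized_keys
    mkdir -p "$ssh_dir" || exit 1
    chmod 700 "$ssh_dir" || exit 1
    touch "$auth" || exit 1
    chmod 600 "$auth" || exit 1
    # -f reads the key line(s) from the .pub file as literal whole-line patterns
    grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
)
```

The key pair itself can also be generated without prompts: `ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa` sets an empty passphrase and the output file on the command line, after which `authorize_key ~/.ssh/id_rsa.pub` registers it.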

Download and extract Hadoop

Installation

- Download

http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1.tar.gz

- Extract and configure

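    $ tar -zxvf hadoop-2.6.0-cdh5.15.1.tar.gz -C ../app/
    # Create a symbolic link (the link path is given in full so that it matches HADOOP_HOME below)
    $ ln -s /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/ /home/hadoop/app/hadoop
    # Configure environment variables for the current user
    $ vim ~/.bash_profile
    export HADOOP_HOME=/home/hadoop/app/hadoop
    export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
    $ source ~/.bash_profile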
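The symbolic link keeps HADOOP_HOME stable when a different Hadoop version is unpacked later. A minimal sketch of a link step that can be rerun to switch versions is shown here; the helper name `link_install` is hypothetical.

```shell
# Point a stable link name at a versioned install directory.
# $1 = versioned directory, $2 = link path
link_install() (
    target=$1
    link=$2
    [ -d "$target" ] || { echo "missing directory: $target" >&2; exit 1; }
    # -s symbolic link, -f replace an existing link,
    # -n treat an existing link to a directory as a file instead of following it
    ln -sfn "$target" "$link"
)
```

With the paths used above, the call would be `link_install /home/hadoop/app/hadoop-2.6.0-cdh5.15.1 /home/hadoop/app/hadoop`.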

Basic Hadoop configuration

    $ vim /home/hadoop/app/hadoop/etc/hadoop/core-site.xml
    <configuration>
        <property>
            <name>fs.defaultFS</name>
            <!-- replace with the IP of your own machine -->
            <value>hdfs://172.26.19.217:9000</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/home/hadoop/data/hadoop/tmp</value>
        </property>
    </configuration>

    $ vim /home/hadoop/app/hadoop/etc/hadoop/hdfs-site.xml
    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
    </configuration>
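Because the IP address in fs.defaultFS differs per machine, the file can also be generated instead of edited by hand. The sketch below prints a core-site.xml with the two properties shown above; the function name `render_core_site` and its parameters are hypothetical.

```shell
# Hypothetical helper: print a core-site.xml for a single-node setup.
# $1 = NameNode host or IP, $2 = value for hadoop.tmp.dir
render_core_site() {
    cat <<EOF
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://$1:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>$2</value>
    </property>
</configuration>
EOF
}
```

For the machine in this walkthrough, `render_core_site 172.26.19.217 /home/hadoop/data/hadoop/tmp > /home/hadoop/app/hadoop/etc/hadoop/core-site.xml` would write the same configuration.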

- Start and verify

    $ hdfs namenode -format
    19/08/26 23:18:47 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG:   user = hadoop
    STARTUP_MSG:   host = bigdata/172.26.19.x
    STARTUP_MSG:   args = [-format]
    STARTUP_MSG:   version = 2.6.0-cdh5.15.1
    $ start-dfs.sh
    Starting namenodes on [bigdata]
    bigdata: starting namenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-namenode-bigdata.out
    localhost: starting datanode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-datanode-bigdata.out
    Starting secondary namenodes [0.0.0.0]
    0.0.0.0: starting secondarynamenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/hadoop-hadoop-secondarynamenode-bigdata.out
    $ jps
    3970 Jps
    3588 NameNode
    3861 SecondaryNameNode
    3708 DataNode

Configure YARN

    $ cd /home/hadoop/app/hadoop/etc/hadoop
    $ cp mapred-site.xml.template mapred-site.xml
    $ vim mapred-site.xml
    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
    </configuration>

    $ vim yarn-site.xml
    <configuration>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
    </configuration>

    $ start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/yarn-hadoop-resourcemanager-bigdata.out
    localhost: starting nodemanager, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs/yarn-hadoop-nodemanager-bigdata.out
    $ jps
    4161 NodeManager
    4066 ResourceManager
    3588 NameNode
    3861 SecondaryNameNode
    4454 Jps
    3708 DataNode
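Reading the `jps` listing by eye works for a single node, but the check can also be scripted. The sketch below reads `jps` output on standard input and reports any expected daemon that is missing; the function name `check_daemons` is hypothetical. It compares the whole class name, so `SecondaryNameNode` is not mistaken for `NameNode`.

```shell
# Read `jps` output on stdin and report each expected daemon that is missing.
# Arguments: the daemon names to look for.
# Exit status is 0 only when every name is present.
check_daemons() (
    listing=$(cat)
    missing=0
    for name in "$@"; do
        # jps prints "<pid> <class name>"; compare the second field exactly
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "not running: $name" >&2
            missing=1
        fi
    done
    exit "$missing"
)
```

After `start-dfs.sh` and `start-yarn.sh`, the call would be `jps | check_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager`.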

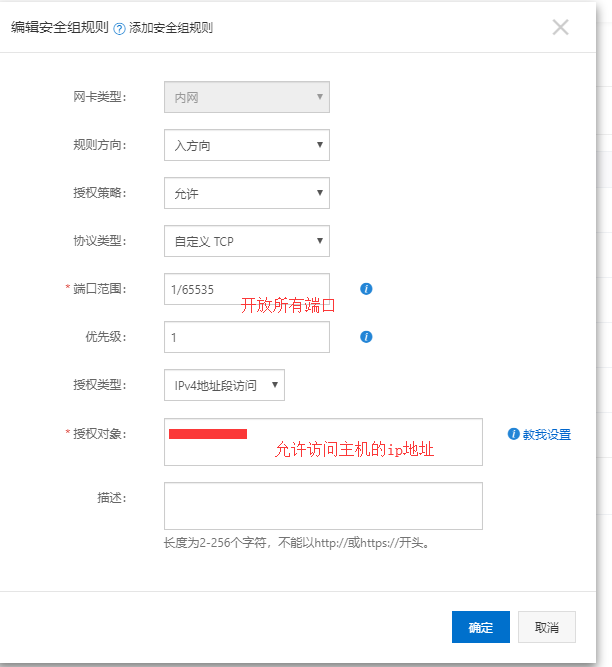

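Alibaba Cloud security group settings

Test access from a browser

In the address bar, enter the machine's IP address followed by port 50070, in the form ip-address:50070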
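When the page does not load, it helps to know whether the port is reachable at all. The sketch below builds the web UI address and probes it with curl; both function names are hypothetical, and it assumes curl is installed on the machine running the check.

```shell
# Build the NameNode web UI address for a host (port 50070, as used above).
namenode_ui_url() {
    printf 'http://%s:50070\n' "$1"
}

# Return 0 if the UI answers within 5 seconds.
# -s silences progress output, -o /dev/null discards the page body.
ui_reachable() {
    curl -s -o /dev/null --max-time 5 "$(namenode_ui_url "$1")"
}
```

If `ui_reachable localhost` succeeds on the server itself while the same check against the server's address fails from another machine, the security group rule is a likely place to look.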

- Reference: http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1/hadoop-project-dist/hadoop-common/SingleCluster.html
