Abstract

The paper argues that large language models (LLMs) still perform poorly on text classification.

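Reasons:

1. They lack the reasoning ability needed to handle complex linguistic phenomena (e.g., analogy and sarcasm).

2. The number of tokens available for in-context learning is limited.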

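The paper proposes its own strategy:

**Clue And Reasoning Prompting (CARP)**

1. It uses a prompt to make the LLM first find clues (contextual cues) in the input.

2. It uses k-nearest-neighbor (kNN) search to select demonstrations for in-context learning, which lets it exploit both the LLM's generalization ability and the task-specific evidence in the full labeled training set.

ICL stands for in-context learning.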

Introduction

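Why LLMs with ICL underperform fine-tuned text classification models:

1. LLMs' reasoning ability is insufficient.

2. In-context learning is limited by the context length: the longest context allowed for GPT-3 is 4,096 subtokens.

As a result, ICL performs somewhat worse than supervised learning (fine-tuned text classification models).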
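To make the context-length limit concrete, here is a back-of-the-envelope sketch: with a fixed token budget, only a handful of demonstrations fit in the prompt, no matter how large the labeled training set is. The function name `max_demonstrations` and all per-part token counts are assumptions for illustration, not numbers from the paper.

```python
# Hypothetical budget calculation; real token counts depend on the tokenizer and data.
CONTEXT_LIMIT = 4096  # GPT-3 limit quoted in the notes


def max_demonstrations(limit: int, instruction_tokens: int, test_tokens: int,
                       answer_tokens: int, tokens_per_demo: int) -> int:
    """Return how many fixed-size demonstrations fit in the context window."""
    # Tokens left after the instruction, the test input and room for the answer.
    remaining = limit - instruction_tokens - test_tokens - answer_tokens
    if remaining <= 0:
        return 0
    return remaining // tokens_per_demo


# Assumed sizes: 100-token instruction, 150-token test input,
# 50 tokens reserved for the answer, 200 tokens per demonstration.
print(max_demonstrations(CONTEXT_LIMIT, 100, 150, 50, 200))  # 18
```

Under these assumed sizes, at most 18 demonstrations fit, while a supervised model can learn from every training example.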

Related Work

LLMs can be broadly divided into three categories:

1. Encoder-only: BERT

2. Decoder-only: GPT

3. Encoder-decoder: T5
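One simplified way to see how the three families differ is the attention mask each uses. The sketch below is an illustration, not any library's implementation: it ignores padding masks and all model-specific details, and the function names are hypothetical. `mask[i][j] == 1` means position `i` may attend to position `j`.

```python
from typing import Dict, List

Mask = List[List[int]]  # mask[i][j] == 1: query position i may attend to key position j


def bidirectional_mask(n: int) -> Mask:
    """Encoder-only (BERT-style): every token attends to every token."""
    return [[1] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder-only (GPT-style): token i attends only to tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def encoder_decoder_masks(n_src: int, n_tgt: int) -> Dict[str, Mask]:
    """Encoder-decoder (T5-style): bidirectional encoder self-attention,
    causal decoder self-attention, and cross-attention from every
    decoder position to every encoder position."""
    return {
        "encoder_self": bidirectional_mask(n_src),
        "decoder_self": causal_mask(n_tgt),
        "cross": [[1] * n_src for _ in range(n_tgt)],
    }


for row in causal_mask(3):
    print(row)
# [1, 0, 0]
# [1, 1, 0]
# [1, 1, 1]
```

The causal mask is what makes decoder-only models like GPT generate left to right, which is why they are the natural fit for prompting and ICL.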

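Prompt Construction (optimizing the prompt structure)

The prompt guides the LLM by stating the judgment to make about the input (e.g., whether its sentiment is positive or negative). Showing that this works only requires the few-shot setting, i.e., a handful of demonstrations in the prompt.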

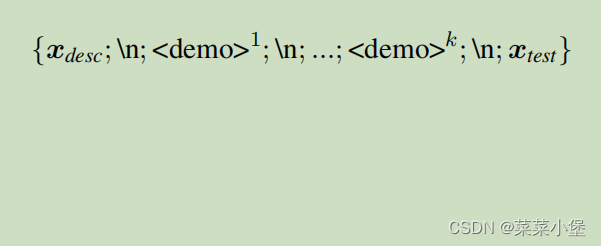

Example prompt (shown above).
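The prompt layout described above (task description, demonstrations, then the test input) can be sketched as a small string builder. The `Input:`/`Sentiment:` field names, the function `build_prompt`, and the example sentences are all hypothetical; this is not the paper's exact template.

```python
from typing import List, Tuple


def build_prompt(task_description: str,
                 demonstrations: List[Tuple[str, str]],
                 test_input: str) -> str:
    """Assemble a few-shot classification prompt (assumed layout):
    task description, one Input/Sentiment pair per demonstration,
    then the test input with the label left blank for the LLM."""
    parts = [task_description.strip(), ""]
    for text, label in demonstrations:
        parts.append(f"Input: {text}")
        parts.append(f"Sentiment: {label}")
        parts.append("")  # blank line between demonstrations
    parts.append(f"Input: {test_input}")
    parts.append("Sentiment:")  # the LLM completes this line
    return "\n".join(parts)


print(build_prompt(
    "Classify the overall sentiment of the input as Positive or Negative.",
    [("A gorgeous, witty film.", "Positive"),
     ("Flat characters and a dull plot.", "Negative")],
    "I laughed the whole way through.",
))
```

With an empty demonstration list the same function produces a zero-shot prompt.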

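Selecting demonstrations

1. Random: nothing special; demonstrations are sampled at random.

2. kNN Sampling: encode $x_{test}$ with an encoder model and select the k training examples closest to $x_{test}$. Similar sentences can be retrieved in two ways:

   1. SimCSE: retrieves semantically similar examples with a sentence-embedding model, but the retrieved examples do not necessarily share the test input's label.

   2. Fine-tuned model: CARP uses a model fine-tuned on the training set as the kNN encoder.

My understanding: an encoder model is placed in front of the LLM and used to retrieve targeted demonstrations (input/label pairs similar to the test input), which are then put into the LLM's prompt.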
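The two selection strategies can be sketched side by side. This is a minimal illustration, assuming an `embed` callable that stands in for the encoder (SimCSE or the fine-tuned model); `toy_embed` is a hypothetical bag-of-words stand-in, not a real encoder, and all function names are made up for the example.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (text, label)
Embedder = Callable[[str], Sequence[float]]


def random_sampling(train: List[Example], k: int, seed: int = 0) -> List[Example]:
    """Baseline: pick k demonstrations uniformly at random."""
    return random.Random(seed).sample(train, k)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def knn_sampling(train: List[Example], x_test: str, k: int,
                 embed: Embedder) -> List[Example]:
    """Return the k training examples most similar (cosine) to x_test.
    Embeds every training text on each call; a real system would precompute them."""
    q = embed(x_test)
    ranked = sorted(train, key=lambda ex: cosine(embed(ex[0]), q), reverse=True)
    return ranked[:k]


def toy_embed(text: str) -> List[float]:
    """Hypothetical stand-in encoder: word counts over a tiny vocabulary."""
    vocab = ["great", "love", "boring", "awful", "plot", "acting"]
    words = text.lower().split()
    return [float(words.count(w)) for w in vocab]


train = [
    ("great acting", "Positive"),
    ("awful plot", "Negative"),
    ("boring plot", "Negative"),
    ("love it great", "Positive"),
]
print(knn_sampling(train, "great acting overall", 2, toy_embed))
# [('great acting', 'Positive'), ('love it great', 'Positive')]
```

Swapping `toy_embed` for a real encoder is where the SimCSE vs. fine-tuned choice matters: an encoder fine-tuned on the task tends to place same-label examples closer together, so the retrieved neighbors are more likely to share the test input's label.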

Clue Collecting and Reasoning

The whole process mimics the steps a human follows when making a decision.

Overview

Clue

Clues mostly capture shallow, surface-level meaning of individual words and phrases.

Reasoning

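Reasoning is logical inference based on understanding the sentence as a whole, a deeper level of argument, so the resulting decision is closer to how a human would decide.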
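The clue, then reasoning, then decision pipeline can be expressed as one prompt plus a small parser for the answer. A minimal sketch, assuming the LLM is called separately (no API client is shown) and that it ends its answer with a line like `SENTIMENT: <label>`; the template wording, `build_carp_prompt`, `parse_label` and the sample completion are hypothetical, a paraphrase of the idea rather than the paper's exact prompt.

```python
import re
from typing import Optional, Sequence


def build_carp_prompt(test_input: str, labels: Sequence[str]) -> str:
    """Zero-shot prompt asking the LLM to (1) list clues, (2) reason from them,
    (3) output a label. Wording is an assumed paraphrase, not the paper's template."""
    label_list = " or ".join(labels)
    return (
        "This is a sentiment classifier.\n"
        "First, list CLUES (keywords, phrases, tone, contextual information) "
        "in the input that support the sentiment decision.\n"
        "Second, write the REASONING process that goes from the clues and "
        "the input to a decision.\n"
        "Third, based on the clues, the reasoning and the input, give the "
        f"SENTIMENT as {label_list}.\n\n"
        f"INPUT: {test_input}\n"
        "CLUES:"
    )


def parse_label(completion: str, labels: Sequence[str]) -> Optional[str]:
    """Return the label named after the last 'SENTIMENT:' marker, or None."""
    matches = re.findall(r"SENTIMENT:\s*(\w+)", completion, flags=re.IGNORECASE)
    if not matches:
        return None
    answer = matches[-1].lower()
    for label in labels:
        if label.lower() == answer:
            return label
    return None


labels = ["Positive", "Negative"]
print(build_carp_prompt("I laughed the whole way through.", labels))

# Made-up completion, only to show the parser.
completion = ("CLUES: 'laughed', 'the whole way through'\n"
              "REASONING: Laughing throughout suggests the viewer enjoyed it.\n"
              "SENTIMENT: Positive")
print(parse_label(completion, labels))  # Positive
```

Asking for clues before reasoning, and reasoning before the label, is what makes the final decision depend on the intermediate steps rather than on surface words alone.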
