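Preface

This post introduces the Deformable Large Kernel Attention (D-LKA Attention) mechanism, which targets two weaknesses of Transformers in medical image segmentation: high computational cost and the loss of inter-slice information. It uses large convolution kernels to obtain a large receptive field at a controlled computational cost, and deformable convolutions to adjust the sampling grid dynamically so that it fits the patterns in the data. The authors provide 2D and 3D versions (the 3D version is better at understanding data across depth) and build a hierarchical vision Transformer, D-LKA Net, from these components. In their experiments on medical segmentation datasets, the model outperforms existing methods and improves segmentation accuracy. In this post, D-LKA Attention is integrated into YOLOv8.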


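Introduction

Abstract

Medical image segmentation has improved markedly with Transformer models, which excel at capturing long-range dependencies and global context. However, their computational cost grows quadratically with the number of tokens, which limits their depth and resolution. Most existing methods process 3D volumetric image data slice by slice (so-called pseudo-3D), which discards important inter-slice information and lowers overall performance. To address these challenges, we introduce Deformable Large Kernel Attention (D-LKA Attention), a simplified attention mechanism that uses large convolution kernels to make full use of volumetric context. The mechanism operates within a receptive field comparable to that of self-attention while avoiding its computational overhead. In addition, the proposed attention mechanism uses deformable convolutions to warp the sampling grid flexibly, so the model can adapt to diverse data patterns. We design 2D and 3D versions of D-LKA Attention, with the 3D version excelling at cross-depth data understanding. Together, these components form our novel hierarchical Vision Transformer architecture, D-LKA Net. Evaluations on popular medical segmentation datasets (Synapse, NIH Pancreas, and skin lesion) show that our model outperforms existing methods. Our code is publicly available on GitHub.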

Links

Paper: paper link

Code: code link

基本原理

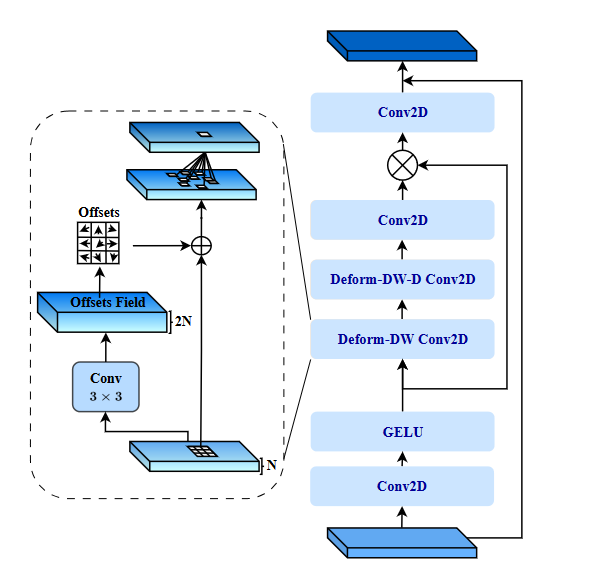

可变形大核注意力(Deformable Large Kernel Attention, D-LKA Attention)是一种简化的注意力机制,采用大卷积核来充分利用体积上下文信息。该机制在类似于自注意力的感受野内运行,同时避免了计算开销。通过可变形卷积灵活变形采样网格,使模型能够适应多样的数据模式,增强对病灶或器官变形的表征能力。同时设计了D-LKA Attention的2D和3D版本,其中3D版本在跨深度数据理解方面表现出色。这些组件共同构成了新颖的分层视觉Transformer架构,即D-LKA Net,在多种医学分割数据集上的表现优于现有方法。:

-

大卷积核:

D-LKA Attention利用大卷积核来捕捉类似于自注意力机制的感受野。这些大卷积核可以通过深度卷积、深度膨胀卷积和1×1卷积构建,从而在保持参数和计算复杂性较低的同时,获得较大的感受野。这使得模型能够更好地理解体积上下文信息,提升分割精度。 -

可变形卷积:

D-LKA Attention引入了可变形卷积,使得卷积核可以灵活地调整采样网格,从而适应不同的数据模式。这种适应性使得模型能够更准确地表征病变或器官的变形,提高对象边界的定义精度。可变形卷积通过一个额外的卷积层学习偏移场,使得卷积核可以动态调整以适应输入特征。 -

2D和3D版本:

D-LKA Attention分别设计了2D和3D版本,以适应不同的分割任务需求。2D版本在处理二维图像时,通过变形卷积提高对不规则形状和大小对象的捕捉能力。3D版本则在处理三维体积数据时,通过在现有框架中引入单个变形卷积层来增强跨深度数据的理解能力。这种设计在保持计算效率的同时,能够有效地处理片间信息,提高分割精度和上下文整合能力。
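The three ideas can be summarized in one set of equations. The following is read off the PyTorch listing in the Core code section of this post, not quoted from the paper; the symbols $F$ (input feature map), $F'$ (projected features), $A$ (attention map), and the operator names DDW-Conv (deformable depth-wise convolution) and DDW-D-Conv (deformable depth-wise dilated convolution) are notation chosen here for illustration.

$$
\begin{aligned}
F' &= \mathrm{GELU}\big(\mathrm{Conv}_{1\times 1}(F)\big) \\
A &= \mathrm{Conv}_{1\times 1}\big(\mathrm{DDW\text{-}D\text{-}Conv}(\mathrm{DDW\text{-}Conv}(F'))\big) \\
\mathrm{Output} &= \mathrm{Conv}_{1\times 1}(A \otimes F') + F
\end{aligned}
$$

Here $\otimes$ is element-wise multiplication: the large-kernel branch produces a per-position, per-channel weight $A$ that gates $F'$, and the final addition of $F$ is a residual connection.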

Large kernels:

The large kernel in D-LKA Attention is assembled from several convolutions so that it reaches a receptive field similar to that of self-attention with fewer parameters and less computation. The building blocks are:

- Depth-wise convolution: convolves each input channel independently, which reduces the parameter count and computational complexity.

- Depth-wise dilated convolution: inserts gaps between the kernel elements to enlarge the receptive field while staying computationally cheap.

- 1×1 convolution: mixes information across channels and can adjust the number of channels.

For a target kernel size $K$ and dilation rate $d$, the kernel sizes of the depth-wise (DW) and depth-wise dilated (DW-D) convolutions are:

$$DW = (2d-1) \times (2d-1)$$

$$DW\text{-}D = \left\lceil \frac{K}{d} \right\rceil \times \left\lceil \frac{K}{d} \right\rceil$$

where $K$ is the kernel size and $d$ is the dilation rate. With $C$ channels and a feature map of size $H \times W$, the number of parameters $P$ and the number of floating-point operations $F$ are:

$$P(K, d) = C \left( \left\lceil \frac{K}{d} \right\rceil^2 + (2d-1)^2 + 3 + C \right)$$

$$F(K, d) = P(K, d) \times H \times W$$

In the 3D case, with a volume of size $H \times W \times D$, the formulas become:

$$P_{3d}(K, d) = C \left( \left\lceil \frac{K}{d} \right\rceil^3 + (2d-1)^3 + 3 + C \right)$$

$$F_{3d}(K, d) = P_{3d}(K, d) \times H \times W \times D$$

With this large-kernel design, D-LKA Attention obtains a wide receptive field at low computational cost, which helps it produce more accurate results in medical image segmentation.
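To make the formulas concrete, here is a minimal sketch that evaluates them in plain Python. The function names are hypothetical, and the values K = 21, d = 3 are an assumption chosen because they reproduce the 5×5 and 7×7 (dilation 3) kernels used in the code of this post. The dense-convolution baseline is added only for comparison and counts weights without bias.

```python
import math


def lka_kernel_sizes(K, d):
    """Per-axis kernel sizes (DW, DW-D) for target kernel size K and dilation d."""
    return 2 * d - 1, math.ceil(K / d)


def lka_params(K, d, C, ndim=2):
    """P(K, d) for ndim=2, P_3d(K, d) for ndim=3."""
    dw, dwd = lka_kernel_sizes(K, d)
    return C * (dwd ** ndim + dw ** ndim + 3 + C)


def lka_flops(K, d, C, spatial):
    """F = P * H * W (2D) or P * H * W * D (3D); `spatial` is the feature-map shape."""
    return lka_params(K, d, C, ndim=len(spatial)) * math.prod(spatial)


def dense_conv_weights(K, C, ndim=2):
    """Weights of one ordinary K^ndim convolution with C input and C output channels."""
    return C * C * K ** ndim


if __name__ == "__main__":
    K, d, C = 21, 3, 64  # assumed example values
    print("kernel sizes (DW, DW-D):", lka_kernel_sizes(K, d))
    print("2D params:", lka_params(K, d, C))
    print("2D FLOPs on 80x80:", lka_flops(K, d, C, (80, 80)))
    print("3D params:", lka_params(K, d, C, ndim=3))
    print("dense 21x21 conv weights:", dense_conv_weights(K, C))
```

These counts cover the plain large-kernel decomposition only. The offset-prediction convolutions of the deformable variant add parameters on top of them.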

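Deformable convolution:

The deformable convolution in D-LKA Attention is an extension of standard convolution that improves the network's ability to capture objects of irregular shape and size. It adds learned offsets to the standard convolution so that each kernel tap can move its sampling position and follow geometric deformations in the input features.

The basic idea is to learn offsets that let the kernel adapt its sampling grid at every output location. The steps are:

- Offset learning: a convolution layer predicts the offsets from the input feature map. Its output is an offset map with the same spatial size as the feature map and two channels (a vertical and a horizontal shift) per kernel tap.

- Offset application: the learned offsets are added to the regular sampling positions of the kernel, so the sampling points differ from location to location.

- Convolution: the usual weighted sum is computed over the values sampled at the shifted positions.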
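The three steps above can be sketched for a single output location in plain Python. This is an illustrative toy, not the torchvision implementation: the offsets are supplied by hand instead of being predicted by a convolution, the helper names are hypothetical, and fractional positions are read with bilinear interpolation (zero outside the map).

```python
import math


def bilinear(feat, y, x):
    """Sample a 2D list-of-lists at a fractional position (y, x)."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Weight shrinks linearly with distance to the neighbour.
                value += (1 - abs(y - yy)) * (1 - abs(x - xx)) * feat[yy][xx]
    return value


def deform_conv_at(feat, weight, offsets, cy, cx):
    """k x k deformable convolution output at location (cy, cx).

    offsets[i][j] is the (dy, dx) shift applied to kernel tap (i, j).
    """
    k = len(weight)
    r = k // 2
    out = 0.0
    for i in range(k):
        for j in range(k):
            dy, dx = offsets[i][j]
            # Regular grid position plus the per-tap offset.
            out += weight[i][j] * bilinear(feat, cy + i - r + dy, cx + j - r + dx)
    return out


if __name__ == "__main__":
    feat = [[5 * y + x for x in range(5)] for y in range(5)]  # linear ramp
    weight = [[1 / 9] * 3 for _ in range(3)]                   # 3x3 mean filter
    zero = [[(0.0, 0.0)] * 3 for _ in range(3)]
    shifted = [[(0.0, 0.5)] * 3 for _ in range(3)]             # half a pixel right
    print(deform_conv_at(feat, weight, zero, 2, 2))     # approx. 12.0, same as a standard conv
    print(deform_conv_at(feat, weight, shifted, 2, 2))  # approx. 12.5, the window moved right
```

With all offsets at zero the result equals an ordinary convolution, which is why a deformable layer can fall back to standard behaviour when the predicted offsets are small.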

Core code

import torch
import torch.nn as nn
import torchvision


class DeformConv(nn.Module):
    """Deformable convolution whose offsets are predicted from the input itself."""

    def __init__(self, in_channels, groups, kernel_size=(3, 3), padding=1, stride=1, dilation=1, bias=True):
        # `bias` is accepted but unused: the offset conv always has a bias, the deformable conv never does.
        super().__init__()
        # Predicts two offsets (dy, dx) per kernel tap at every output location.
        self.offset_net = nn.Conv2d(in_channels=in_channels,
                                    out_channels=2 * kernel_size[0] * kernel_size[1],
                                    kernel_size=kernel_size,
                                    padding=padding,
                                    stride=stride,
                                    dilation=dilation,
                                    bias=True)
        self.deform_conv = torchvision.ops.DeformConv2d(in_channels=in_channels,
                                                        out_channels=in_channels,
                                                        kernel_size=kernel_size,
                                                        padding=padding,
                                                        groups=groups,
                                                        stride=stride,
                                                        dilation=dilation,
                                                        bias=False)

    def forward(self, x):
        offsets = self.offset_net(x)
        out = self.deform_conv(x, offsets)
        return out


class DeformConv_3x3(nn.Module):
    """Same as DeformConv, but the offsets are always predicted by a 3x3 convolution."""

    def __init__(self, in_channels, groups, kernel_size=(3, 3), padding=1, stride=1, dilation=1, bias=True):
        # Fixed: the original called super(DeformConv, self).__init__(), which raises a TypeError here.
        super().__init__()
        self.offset_net = nn.Conv2d(in_channels=in_channels,
                                    out_channels=2 * kernel_size[0] * kernel_size[1],
                                    kernel_size=3,
                                    padding=1,
                                    stride=1,
                                    bias=True)
        self.deform_conv = torchvision.ops.DeformConv2d(in_channels=in_channels,
                                                        out_channels=in_channels,
                                                        kernel_size=kernel_size,
                                                        padding=padding,
                                                        groups=groups,
                                                        stride=stride,
                                                        dilation=dilation,
                                                        bias=False)

    def forward(self, x):
        offsets = self.offset_net(x)
        out = self.deform_conv(x, offsets)
        return out


class DeformConv_experimental(nn.Module):
    """Cheaper offset branch: a 1x1 conv sets the channel count, then a depth-wise 3x3 conv predicts offsets."""

    def __init__(self, in_channels, groups, kernel_size=(3, 3), padding=1, stride=1, dilation=1, bias=True):
        super().__init__()
        offset_channels = 2 * kernel_size[0] * kernel_size[1]
        self.conv_channel_adjust = nn.Conv2d(
            in_channels=in_channels, out_channels=offset_channels, kernel_size=(1, 1))
        self.offset_net = nn.Conv2d(in_channels=offset_channels,
                                    out_channels=offset_channels,
                                    kernel_size=3,
                                    padding=1,
                                    stride=1,
                                    groups=offset_channels,
                                    bias=True)
        self.deform_conv = torchvision.ops.DeformConv2d(in_channels=in_channels,
                                                        out_channels=in_channels,
                                                        kernel_size=kernel_size,
                                                        padding=padding,
                                                        groups=groups,
                                                        stride=stride,
                                                        dilation=dilation,
                                                        bias=False)

    def forward(self, x):
        x_chan = self.conv_channel_adjust(x)
        offsets = self.offset_net(x_chan)
        out = self.deform_conv(x, offsets)
        return out


class deformable_LKA(nn.Module):
    """Large-kernel branch: deformable 5x5 DW conv, deformable 7x7 DW conv (dilation 3), 1x1 conv."""

    def __init__(self, dim):
        super().__init__()
        self.conv0 = DeformConv(dim, kernel_size=(5, 5), padding=2, groups=dim)
        self.conv_spatial = DeformConv(dim, kernel_size=(7, 7), stride=1, padding=9, groups=dim, dilation=3)
        self.conv1 = nn.Conv2d(dim, dim, 1)

    def forward(self, x):
        u = x.clone()
        attn = self.conv0(x)
        attn = self.conv_spatial(attn)
        attn = self.conv1(attn)
        # The attention map gates the input element-wise.
        return u * attn


class deformable_LKA_experimental(nn.Module):
    """Same as deformable_LKA, built on DeformConv_experimental."""

    def __init__(self, dim):
        super().__init__()
        self.conv0 = DeformConv_experimental(dim, kernel_size=(5, 5), padding=2, groups=dim)
        self.conv_spatial = DeformConv_experimental(dim, kernel_size=(7, 7), stride=1, padding=9, groups=dim, dilation=3)
        self.conv1 = nn.Conv2d(dim, dim, 1)

    def forward(self, x):
        u = x.clone()
        attn = self.conv0(x)
        attn = self.conv_spatial(attn)
        attn = self.conv1(attn)
        return u * attn


class deformable_LKA_Attention(nn.Module):
    """Attention block: 1x1 projection, GELU, deformable LKA gating, 1x1 projection, residual."""

    def __init__(self, d_model):
        super().__init__()
        self.proj_1 = nn.Conv2d(d_model, d_model, 1)
        self.activation = nn.GELU()
        self.spatial_gating_unit = deformable_LKA(d_model)
        self.proj_2 = nn.Conv2d(d_model, d_model, 1)

    def forward(self, x):
        shortcut = x.clone()
        x = self.proj_1(x)
        x = self.activation(x)
        x = self.spatial_gating_unit(x)
        x = self.proj_2(x)
        x = x + shortcut
        return x


class deformable_LKA_Attention_experimental(nn.Module):
    """Same as deformable_LKA_Attention, built on deformable_LKA_experimental."""

    def __init__(self, d_model):
        super().__init__()
        self.proj_1 = nn.Conv2d(d_model, d_model, 1)
        self.activation = nn.GELU()
        self.spatial_gating_unit = deformable_LKA_experimental(d_model)
        self.proj_2 = nn.Conv2d(d_model, d_model, 1)

    def forward(self, x):
        shortcut = x.clone()
        x = self.proj_1(x)
        x = self.activation(x)
        x = self.spatial_gating_unit(x)
        x = self.proj_2(x)
        x = x + shortcut
        return x
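The padding values in deformable_LKA (2 for the 5×5 kernel, 9 for the 7×7 kernel with dilation 3) are what keeps the feature map size unchanged, which matters because torchvision.ops.DeformConv2d expects the offset tensor to have the same spatial size as its output. The sketch below checks this with the standard convolution output-size formula; the helper names are hypothetical and no PyTorch is needed to run it.

```python
def conv_out_size(n, kernel, padding=0, stride=1, dilation=1):
    """Output length of a convolution along one axis (standard formula)."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def same_padding(kernel, dilation=1):
    """Padding that preserves the size for stride 1 and an odd kernel."""
    return dilation * (kernel - 1) // 2


def stacked_receptive_field(layers):
    """Receptive field of stride-1 convolutions given as (kernel, dilation) pairs."""
    return 1 + sum(d * (k - 1) for k, d in layers)


if __name__ == "__main__":
    n = 80  # assumed feature-map side length
    print(conv_out_size(n, 5, padding=2))              # conv0 keeps the size
    print(conv_out_size(n, 7, padding=9, dilation=3))  # conv_spatial keeps the size
    print(same_padding(5), same_padding(7, 3))         # paddings used in the listing
    print(stacked_receptive_field([(5, 1), (7, 3)]))   # span of the undeformed sampling grid
```

The last value is the extent covered before any offsets are applied. The learned offsets can move individual sampling points beyond it.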

Adding the code

Under ultralytics/nn/ in the project root, create a directory named attention, create a file named deformable_LKA_Attention.py inside it, and copy the code from the Core code section above into it. The directory has to sit directly under ultralytics/nn/ rather than under ultralytics/nn/modules/, so that it matches the import path ultralytics.nn.attention.deformable_LKA_Attention used in the registration step below.

tasks注册

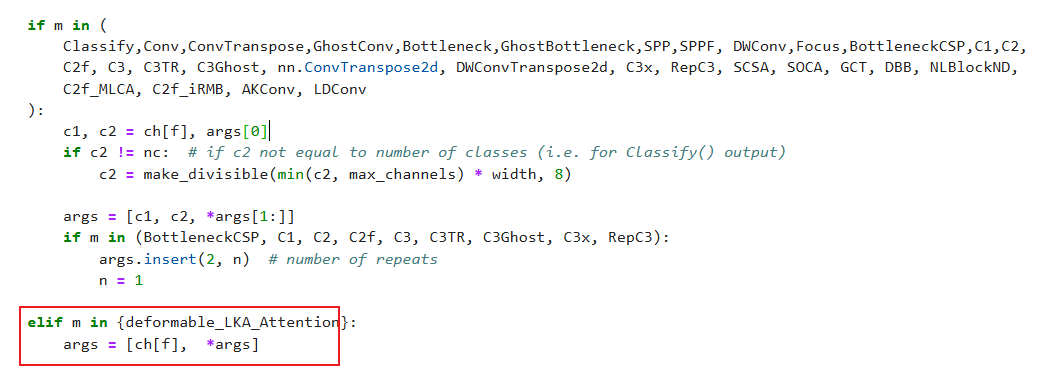

在ultralytics/nn/tasks.py中进行如下操作:

步骤1:

from ultralytics.nn.attention.deformable_LKA_Attention import deformable_LKA_Attention

步骤2

修改def parse_model(d, ch, verbose=True):

elif m in {deformable_LKA_Attention}:

args = [ch[f], *args]
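What that branch does can be shown with a toy stand-in. This is a simplified imitation of the argument handling only, not the Ultralytics parser: `build_args`, `FakeAttention`, and the channel list are hypothetical names and values used for illustration.

```python
def build_args(ch, f, yaml_args):
    """Imitate `args = [ch[f], *args]`: prepend the channels of the source layer."""
    return [ch[f], *yaml_args]


class FakeAttention:
    """Stand-in for deformable_LKA_Attention that only records d_model."""

    def __init__(self, d_model):
        self.d_model = d_model


if __name__ == "__main__":
    ch = [16, 32, 64]  # hypothetical output channels of the layers built so far
    f = -1             # "from" index in the YAML: the previous layer
    args = build_args(ch, f, [])  # the YAML entry passes an empty list
    layer = FakeAttention(*args)
    print(args, layer.d_model)
```

Because the module keeps the channel count unchanged, the layer after it sees the same number of channels as the layer before it.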

Configuring yolov8-deformable-LKA.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024]
  s: [0.33, 0.50, 1024]
  m: [0.67, 0.75, 768]
  l: [1.00, 1.00, 512]
  x: [1.00, 1.25, 512]

backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, C2f, [512]] # 12

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, C2f, [256]] # 15 (P3/8-small)
  - [-1, 3, deformable_LKA_Attention, []] # 16

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 12], 1, Concat, [1]] # cat head P4
  - [-1, 3, C2f, [512]] # 19 (P4/16-medium)
  - [-1, 3, deformable_LKA_Attention, []] # 20

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 9], 1, Concat, [1]] # cat head P5
  - [-1, 3, C2f, [1024]] # 23 (P5/32-large)
  - [-1, 3, deformable_LKA_Attention, []] # 24

  - [[16, 20, 24], 1, Detect, [nc]] # Detect(P3, P4, P5)

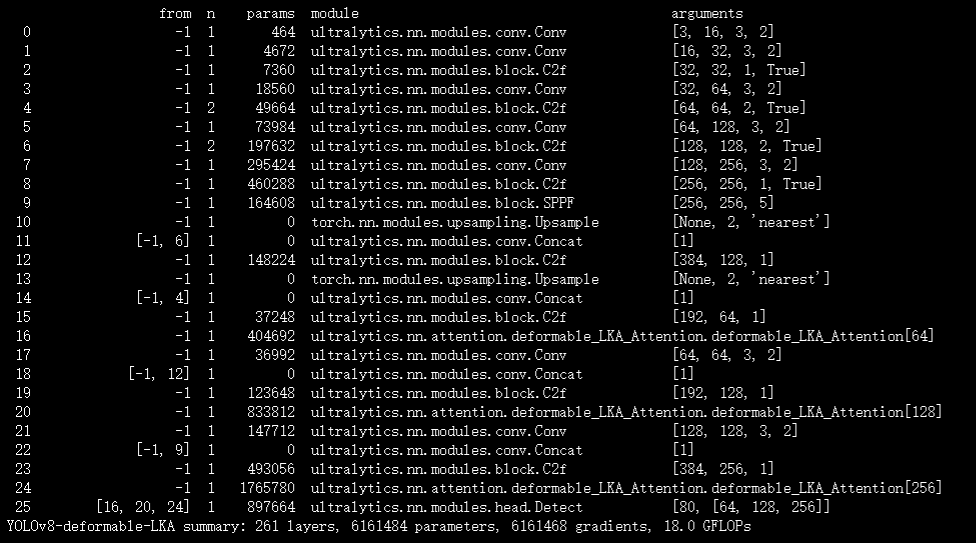
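The repeat count 3 and the channel widths in the YAML are nominal values that are scaled by the chosen model size. The sketch below mirrors the depth and width scaling rule as I understand it from the Ultralytics parser (repeats are multiplied by depth and rounded, channels are capped at max_channels, multiplied by width, and rounded up to a multiple of 8); treat the function names and the rule itself as assumptions to verify against your installed version.

```python
import math

# [depth, width, max_channels], copied from the YAML above
SCALES = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}


def scaled_repeats(repeats, depth):
    """Number of stacked copies of a layer after depth scaling."""
    return max(round(repeats * depth), 1) if repeats > 1 else repeats


def scaled_channels(channels, width, max_channels, divisor=8):
    """Channel count after width scaling, rounded up to a multiple of `divisor`."""
    return math.ceil(min(channels, max_channels) * width / divisor) * divisor


if __name__ == "__main__":
    for name, (depth, width, max_ch) in SCALES.items():
        reps = scaled_repeats(3, depth)  # each attention entry asks for 3 repeats
        chans = [scaled_channels(c, width, max_ch) for c in (256, 512, 1024)]
        print(f"yolov8{name}: {reps} attention block(s) per scale, d_model = {chans}")
```

Under these assumptions the n and s models stack a single attention block at each of the three scales, and the full three blocks appear only in the l and x models.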

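Experiments

Script

from ultralytics import YOLO

# Model definition created in the configuration section above
cfg = 'ultralytics/cfg/models/v8/yolov8-deformable-LKA.yaml'

if __name__ == "__main__":
    # Build the model inside the main guard so that data-loader worker
    # processes do not rebuild it when they import this script.
    model = YOLO(cfg)
    model.info()  # print a summary of layers and parameters

    # Short training run on the small COCO128 dataset
    results = model.train(data='coco128.yaml',
                          name='deformable-LKA',
                          epochs=10,
                          workers=8,
                          batch=1)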

Results
