第 57 天: kMeans 聚类 (续)

获得虚拟中心后, 换成与其最近的点作为实际中心, 再聚类.

//当前临时实际中心点与平均中心点的距离

double[] tempNearestDistanceArray = new double[numClusters];

//当前距离平均中心最近的实际点

double[][] tempActualCenters = new double[numClusters][dataset.numAttributes() - 1];

Arrays.fill(tempNearestDistanceArray, Double.MAX_VALUE);

for (int i = 0; i < dataset.numInstances(); i++) {

//用当前数据去与其分类的中心比较距离

if (tempNearestDistanceArray[tempClusterArray[i]] > distance(i, tempCenters[tempClusterArray[i]])) {

tempNearestDistanceArray[tempClusterArray[i]] = distance(i, tempCenters[tempClusterArray[i]]);

//暂时存储当前距离平均中心最近的实际点

for (int j = 0; j < dataset.numAttributes() - 1; j++) {

tempActualCenters[tempClusterArray[i]][j] = dataset.instance((i)).value(j);

}

}

}

for (int i = 0; i < tempNewCenters.length; i++) {

tempNewCenters[i] = tempActualCenters[i];

}

运行结果:

New loop ..

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4,0.2],[6.3,3.3,6.0,2.5]]

New loop ..

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4,0.2],[6.0,2.7,5.1,1.6]]

New loop ...

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4,0.2],[5.7,2.8,4.5,1.3]]

New loop ...

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4, 0.2],[5.5,2.3,4.0,1.3]]

New loop ...

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4,e.2],[5.5,2.3,4.0,1.3]]

New loop ...

Now the new centers are: [[7.0,3.2,4.7,1.4],[5.1,3.5,1.4,e.2],[5.5,2.3,4.0,1.3]]第58天:符号型数据的 NB 算法

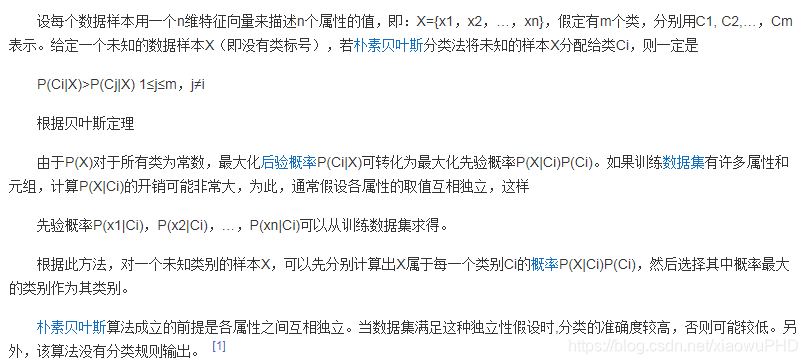

NB(朴素贝叶斯)算法是一种分类算法。朴素贝叶斯思想:对于给出的待分类项,求出在此项条件(特征)下各个类别出现的概率,那个最大就认为其属于哪个类别。这就是朴素贝叶斯的思想基础。

代码如下:

import weka.core.Instance;

import weka.core.Instances;

import java.io.FileReader;

import java.util.Arrays;

/**

* @description:朴素贝叶斯算法

* @author: Qing Zhang

* @time: 2021/7/6

*/

public class NaiveBayes {

//存储擦参数的内部类

private class GaussianParamters {

double mu;

double sigma;

public GaussianParamters(double paraMu, double paraSigma) {

mu = paraMu;

sigma = paraSigma;

}

public String toString() {

return "(" + mu + ", " + sigma + ")";

}

}

//数据

Instances dataset;

//类数量。例如二分类就是2

int numClasses;

//实例数量

int numInstances;

//属性数量

int numConditions;

//预测,包含被查询和预测的标签

int[] predicts;

//类分布

double[] classDistribution;

//具有拉普拉斯平滑的类分布

double[] classDistributionLaplacian;

//所有类的所有属性对所有值的条件概率

double[][][] conditionalProbabilities;

//具有拉普拉斯平滑的条件概率

double[][][] conditionalProbabilitiesLaplacian;

//高斯参数

GaussianParamters[][] gaussianParameters;

//数据类型

int dataType;

//符号型

public static final int NOMINAL = 0;

//数值型

public static final int NUMERICAL = 1;

public NaiveBayes(String paraFilename) {

dataset = null;

try {

FileReader fileReader = new FileReader(paraFilename);

dataset = new Instances(fileReader);

fileReader.close();

} catch (Exception ee) {

System.out.println("Cannot read the file: " + paraFilename + "\r\n" + ee);

System.exit(0);

}

dataset.setClassIndex(dataset.numAttributes() - 1);

numConditions = dataset.numAttributes() - 1;

numInstances = dataset.numInstances();

numClasses = dataset.attribute(numConditions).numValues();

}

/**

* @Description: 设置数据类型

* @Param: [paraDataType]

* @return: void

*/

public void setDataType(int paraDataType) {

dataType = paraDataType;

}

/**

* @Description: 计算拉普拉斯平滑的类分布

* @Param: []

* @return: void

*/

public void calculateClassDistribution() {

classDistribution = new double[numClasses];

classDistributionLaplacian = new double[numClasses];

double[] tempCounts = new double[numClasses];

for (int i = 0; i < numInstances; i++) {

int tempClassValue = (int) dataset.instance(i).classValue();

tempCounts[tempClassValue]++;

}

for (int i = 0; i < numClasses; i++) {

classDistribution[i] = tempCounts[i] / numInstances;

classDistributionLaplacian[i] = (tempCounts[i] + 1) / (numInstances + numClasses);

}

System.out.println("Class distribution: " + Arrays.toString(classDistribution));

System.out.println(

"Class distribution Laplacian: " + Arrays.toString(classDistributionLaplacian));

}

/**

* @Description: 用拉普拉斯平滑法计算条件概率。只扫描一次数据集

* @Param: []

* @return: void

*/

public void calculateConditionalProbabilities() {

conditionalProbabilities = new double[numClasses][numConditions][];

conditionalProbabilitiesLaplacian = new double[numClasses][numConditions][];

// 分配空间

for (int i = 0; i < numClasses; i++) {

for (int j = 0; j < numConditions; j++) {

int tempNumValues = (int) dataset.attribute(j).numValues();

conditionalProbabilities[i][j] = new double[tempNumValues];

conditionalProbabilitiesLaplacian[i][j] = new double[tempNumValues];

}

}

// 计数

int[] tempClassCounts = new int[numClasses];

for (int i = 0; i < numInstances; i++) {

int tempClass = (int) dataset.instance(i).classValue();

tempClassCounts[tempClass]++;

for (int j = 0; j < numConditions; j++) {

int tempValue = (int) dataset.instance(i).value(j);

conditionalProbabilities[tempClass][j][tempValue]++;

}

}

// 拉普拉斯函数的真实概率

for (int i = 0; i < numClasses; i++) {

for (int j = 0; j < numConditions; j++) {

int tempNumValues = (int) dataset.attribute(j).numValues();

for (int k = 0; k < tempNumValues; k++) {

conditionalProbabilitiesLaplacian[i][j][k] = (conditionalProbabilities[i][j][k]

+ 1) / (tempClassCounts[i] + numClasses);

}

}

}

System.out.println(Arrays.deepToString(conditionalProbabilities));

}

/**

* @Description: 用拉普拉斯平滑法计算条件概率

* @Param: []

* @return: void

*/

public void calculateGausssianParameters() {

gaussianParameters = new GaussianParamters[numClasses][numConditions];

double[] tempValuesArray = new double[numInstances];

int tempNumValues = 0;

double tempSum = 0;

for (int i = 0; i < numClasses; i++) {

for (int j = 0; j < numConditions; j++) {

tempSum = 0;

//获取该类的值。

tempNumValues = 0;

for (int k = 0; k < numInstances; k++) {

if ((int) dataset.instance(k).classValue() != i) {

continue;

}

tempValuesArray[tempNumValues] = dataset.instance(k).value(j);

tempSum += tempValuesArray[tempNumValues];

tempNumValues++;

}

// 获得参数。

double tempMu = tempSum / tempNumValues;

double tempSigma = 0;

for (int k = 0; k < tempNumValues; k++) {

tempSigma += (tempValuesArray[k] - tempMu) * (tempValuesArray[k] - tempMu);

}

tempSigma /= tempNumValues;

tempSigma = Math.sqrt(tempSigma);

gaussianParameters[i][j] = new GaussianParamters(tempMu, tempSigma);

}

}

System.out.println(Arrays.deepToString(gaussianParameters));

}

/**

* @Description: 给所有实例分类,结果将存储在predict[]中

* @Param: []

* @return: void

*/

public void classify() {

predicts = new int[numInstances];

for (int i = 0; i < numInstances; i++) {

predicts[i] = classify(dataset.instance(i));

}

}

/**

* @Description: 给所有样本分类

* @Param: [paraInstance]

* @return: int

*/

public int classify(Instance paraInstance) {

if (dataType == NOMINAL) {

return classifyNominal(paraInstance);

} else if (dataType == NUMERICAL) {

return classifyNumerical(paraInstance);

}

return -1;

}

/**

* @Description: 符号型数据分类

* @Param: [paraInstance]

* @return: int

*/

public int classifyNominal(Instance paraInstance) {

// 找到最大的一个

double tempBiggest = -10000;

int resultBestIndex = 0;

for (int i = 0; i < numClasses; i++) {

double tempPseudoProbability = Math.log(classDistributionLaplacian[i]);

for (int j = 0; j < numConditions; j++) {

int tempAttributeValue = (int) paraInstance.value(j);

// 拉普拉斯平滑

tempPseudoProbability += Math

.log(conditionalProbabilities[i][j][tempAttributeValue]);

}

if (tempBiggest < tempPseudoProbability) {

tempBiggest = tempPseudoProbability;

resultBestIndex = i;

}

}

return resultBestIndex;

}

/**

* @Description: 数值型数据分类

* @Param: [paraInstance]

* @return: int

*/

public int classifyNumerical(Instance paraInstance) {

// 找到最大的一个

double tempBiggest = -10000;

int resultBestIndex = 0;

for (int i = 0; i < numClasses; i++) {

double tempPseudoProbability = Math.log(classDistributionLaplacian[i]);

for (int j = 0; j < numConditions; j++) {

double tempAttributeValue = paraInstance.value(j);

double tempSigma = gaussianParameters[i][j].sigma;

double tempMu = gaussianParameters[i][j].mu;

tempPseudoProbability += -Math.log(tempSigma) - (tempAttributeValue - tempMu)

* (tempAttributeValue - tempMu) / (2 * tempSigma * tempSigma);

}

if (tempBiggest < tempPseudoProbability) {

tempBiggest = tempPseudoProbability;

resultBestIndex = i;

}

}

return resultBestIndex;

}

/**

* @Description: 计算准确率

* @Param: []

* @return: double

*/

public double computeAccuracy() {

double tempCorrect = 0;

for (int i = 0; i < numInstances; i++) {

if (predicts[i] == (int) dataset.instance(i).classValue()) {

tempCorrect++;

}

}

double resultAccuracy = tempCorrect / numInstances;

return resultAccuracy;

}

/**

* @Description: 符号型数据测试

* @Param: []

* @return: void

*/

public static void testNominal() {

System.out.println("Hello, Naive Bayes. I only want to test the nominal data.");

String tempFilename = "F:\\研究生\\研0\\学习\\Java_Study\\data_set\\mushrooms.arff";

NaiveBayes tempLearner = new NaiveBayes(tempFilename);

tempLearner.setDataType(NOMINAL);

tempLearner.calculateClassDistribution();

tempLearner.calculateConditionalProbabilities();

tempLearner.classify();

System.out.println("The accuracy is: " + tempLearner.computeAccuracy());

}

/**

* @Description: 数值型数据测试

* @Param: []

* @return: void

*/

public static void testNumerical() {

System.out.println(

"Hello, Naive Bayes. I only want to test the numerical data with Gaussian assumption.");

String tempFilename = "D:\\Java\\Java学习\\data_set\\iris.arff";

NaiveBayes tempLearner = new NaiveBayes(tempFilename);

tempLearner.setDataType(NUMERICAL);

tempLearner.calculateClassDistribution();

tempLearner.calculateGausssianParameters();

tempLearner.classify();

System.out.println("The accuracy is: " + tempLearner.computeAccuracy());

}

public static void main(String[] args) {

testNominal();

testNumerical();

}

}

本文介绍了KMeans聚类算法的迭代过程,通过不断更新实际中心点来接近数据分布。在朴素贝叶斯算法部分,详细阐述了如何计算类概率和条件概率,并提供了数值型和符号型数据的分类方法。最后,展示了算法的测试结果及准确性计算。

本文介绍了KMeans聚类算法的迭代过程,通过不断更新实际中心点来接近数据分布。在朴素贝叶斯算法部分,详细阐述了如何计算类概率和条件概率,并提供了数值型和符号型数据的分类方法。最后,展示了算法的测试结果及准确性计算。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?