1. Detecting the encoding of a text file

Dependency for the encoding detection library

<!-- File encoding detection library -->
<dependency>
    <groupId>com.ibm.icu</groupId>
    <artifactId>icu4j</artifactId>
    <version>4.6</version>
</dependency>

Getting the encoding of a text file

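// Requires: com.ibm.icu.text.CharsetDetector, com.ibm.icu.text.CharsetMatch,
// org.springframework.web.multipart.MultipartFile, java.io.IOException, java.util.Objects
private static String getCharset(MultipartFile file) throws IOException {
    if (Objects.isNull(file)) {
        // Fail fast instead of returning an error message as if it were a charset name
        throw new IllegalArgumentException("file is null");
    }
    CharsetDetector detector = new CharsetDetector();
    // getBytes() can throw IOException, hence the throws clause
    detector.setText(file.getBytes());
    // detect() returns the best match, or null if no charset fits
    CharsetMatch match = detector.detect();
    return match == null ? null : match.getName();
}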

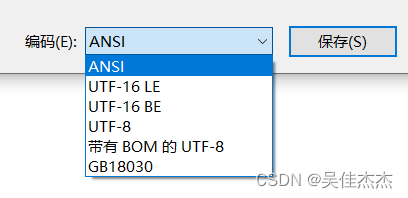

Input: text files in the following encodings: ANSI, UTF-16 LE, UTF-16 BE, UTF-8, UTF-8 with BOM, GB18030

Output: the detected encodings, in the same order: GB18030, UTF-16 LE, UTF-16 BE, UTF-8, UTF-8, GB18030
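As the results show, UTF-8 files with and without a BOM are both reported as UTF-8, so the detected name alone cannot tell them apart. A minimal sketch of checking the first three bytes directly, assuming a hypothetical helper `hasUtf8Bom` (not part of ICU4J or the original code):

```java
import java.nio.charset.StandardCharsets;

class BomCheck {
    // Hypothetical helper: true if the data starts with the UTF-8 BOM (EF BB BF).
    static boolean hasUtf8Bom(byte[] data) {
        return data != null
                && data.length >= 3
                && data[0] == (byte) 0xEF
                && data[1] == (byte) 0xBB
                && data[2] == (byte) 0xBF;
    }

    public static void main(String[] args) {
        byte[] plain = "hello".getBytes(StandardCharsets.UTF_8);
        // U+FEFF encodes to the three BOM bytes in UTF-8.
        byte[] withBom = "\uFEFFhello".getBytes(StandardCharsets.UTF_8);
        System.out.println(hasUtf8Bom(plain));   // false
        System.out.println(hasUtf8Bom(withBom)); // true
    }
}
```

With a `MultipartFile`, the same check could be applied to `file.getBytes()` before deciding whether the BOM needs to be stripped.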

2. Converting a text file in any encoding to UTF-8

Note: UTF-16 LE and UTF-16 BE files are converted to UTF-8 with a BOM.
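This behavior follows from how Java decodes: the `UTF-16LE` and `UTF-16BE` charsets do not consume a leading byte order mark, so it survives as the character U+FEFF and is written back out as EF BB BF. The sketch below demonstrates this on an in-memory sample and shows one possible way to drop the mark; the helper name `toUtf8WithoutBom` is hypothetical and not part of the original code.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class Utf16BomDemo {
    // Hypothetical helper: decode with the given charset, drop a leading U+FEFF,
    // then encode the remaining text as UTF-8.
    static byte[] toUtf8WithoutBom(byte[] source, Charset sourceCharset) {
        String text = new String(source, sourceCharset);
        if (!text.isEmpty() && text.charAt(0) == '\uFEFF') {
            text = text.substring(1);
        }
        return text.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // The letter "A" in UTF-16 LE, preceded by the BOM bytes FF FE.
        byte[] utf16le = {(byte) 0xFF, (byte) 0xFE, 0x41, 0x00};

        String decoded = new String(utf16le, StandardCharsets.UTF_16LE);
        System.out.println(decoded.length()); // 2: U+FEFF plus 'A'

        byte[] naive = decoded.getBytes(StandardCharsets.UTF_8);
        System.out.println(naive.length); // 4: EF BB BF 41

        byte[] stripped = toUtf8WithoutBom(utf16le, StandardCharsets.UTF_16LE);
        System.out.println(stripped.length); // 1: 41
    }
}
```

For large files the same idea would apply to a `Reader`: read the first character and skip it if it is U+FEFF, rather than loading the whole file into a `String`.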

/**
 * Converts a text file in any encoding to UTF-8.
 *
 * @param originalPath path of the source file
 * @param targetPath   path of the converted file
 * @param charsetName  current encoding of the source file
 */
// Requires: java.io.*, java.nio.charset.StandardCharsets
public static void saveUtf8File(String originalPath, String targetPath, String charsetName) throws IOException {
    try (InputStream inputStream = new FileInputStream(originalPath);
         Reader reader = new InputStreamReader(inputStream, charsetName);
         Writer writer = new OutputStreamWriter(new FileOutputStream(targetPath), StandardCharsets.UTF_8)) {
        // Decode with the source charset and re-encode as UTF-8, one chunk at a time
        char[] buffer = new char[1024];
        int readCount;
        while ((readCount = reader.read(buffer)) != -1) {
            writer.write(buffer, 0, readCount);
        }
    }
}

3. Converting UTF-8 with BOM to UTF-8 without BOM

/**
 * Converts a UTF-8 file with a BOM to UTF-8 without a BOM.
 *
 * @param originalPath path of the source file
 * @param targetPath   path of the converted file
 */
public static void saveUtf8File(String originalPath, String targetPath) throws IOException {
    try (InputStream in = new FileInputStream(originalPath);
         OutputStream out = new FileOutputStream(targetPath)) {
        byte[] head = new byte[3];
        int headLen = 0;
        // read() may return fewer bytes than requested, or -1 for an empty file,
        // so loop until 3 bytes are available or the end of the file is reached
        while (headLen < head.length) {
            int n = in.read(head, headLen, head.length - headLen);
            if (n == -1) {
                break;
            }
            headLen += n;
        }
        // Check whether the file starts with the BOM
        boolean hasBom = headLen == 3
                && head[0] == (byte) 0xEF && head[1] == (byte) 0xBB && head[2] == (byte) 0xBF;
        if (!hasBom) {
            // Not a BOM: these bytes are regular content
            out.write(head, 0, headLen);
        }
        // Copy the rest of the data to the new file
        byte[] buffer = new byte[1024];
        int bytesRead;
        while ((bytesRead = in.read(buffer)) != -1) {
            out.write(buffer, 0, bytesRead);
        }
    }
}
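When the data arrives as a stream rather than a file path (for example from an upload), the BOM check can also be done without writing an intermediate file. The following is a sketch under that assumption, using `java.io.PushbackInputStream`; the helper name `skipUtf8Bom` is hypothetical and not part of the original code.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.PushbackInputStream;
import java.nio.charset.StandardCharsets;

class BomSkippingStream {
    // Hypothetical helper: returns a stream positioned after a UTF-8 BOM if one
    // is present, otherwise a stream that still yields every original byte.
    static InputStream skipUtf8Bom(InputStream in) throws IOException {
        PushbackInputStream pushback = new PushbackInputStream(in, 3);
        byte[] head = new byte[3];
        int len = 0;
        // read() may return fewer bytes than requested, so loop until 3 bytes or EOF.
        while (len < head.length) {
            int n = pushback.read(head, len, head.length - len);
            if (n == -1) {
                break;
            }
            len += n;
        }
        boolean hasBom = len == 3
                && head[0] == (byte) 0xEF
                && head[1] == (byte) 0xBB
                && head[2] == (byte) 0xBF;
        if (!hasBom && len > 0) {
            // Not a BOM: put the bytes back so the caller sees them again.
            pushback.unread(head, 0, len);
        }
        return pushback;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = {(byte) 0xEF, (byte) 0xBB, (byte) 0xBF, 'o', 'k'};
        try (InputStream in = skipUtf8Bom(new ByteArrayInputStream(data))) {
            // readAllBytes() requires Java 9 or later.
            System.out.println(new String(in.readAllBytes(), StandardCharsets.UTF_8)); // ok
        }
    }
}
```

The returned stream can then be copied to the target with the same buffer loop used in the file-based method.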
