Related link:

https://mp.weixin.qq.com/s/nhL-dF1ezvf91q_xcW383g

1. Environment

Admittedly, these versions are a bit (OK, a lot) dated:

- xxl-job-admin application: 2.0.2
    - xxl-job-core 2.0.2
    - xxl-rpc-core 1.4.0
- Executor web application: Spring Boot 2.5.2
    - xxl-job-core 2.0.2
    - xxl-rpc-core 1.4.0

2. Symptoms

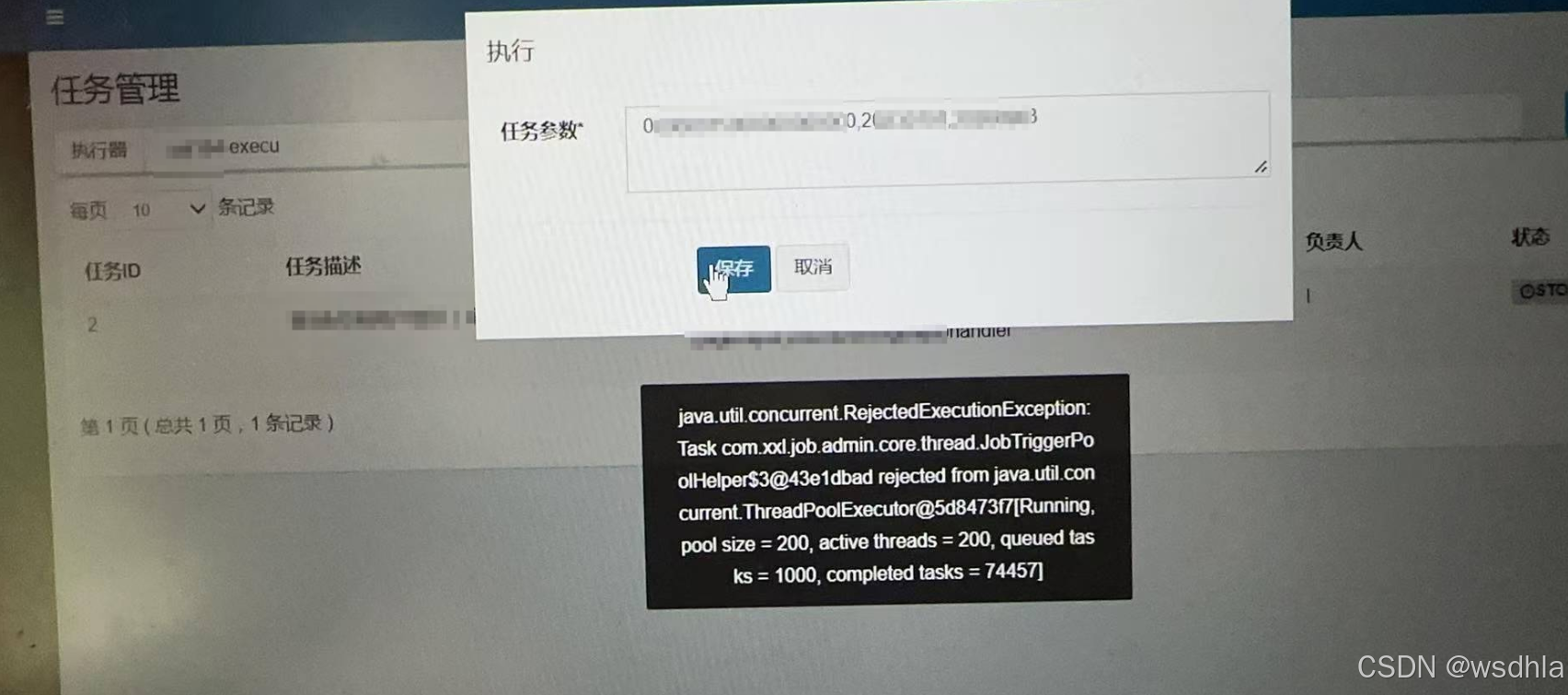

In the test environment, triggering a task from xxl-job-admin suddenly failed with a "thread pool is full" error.

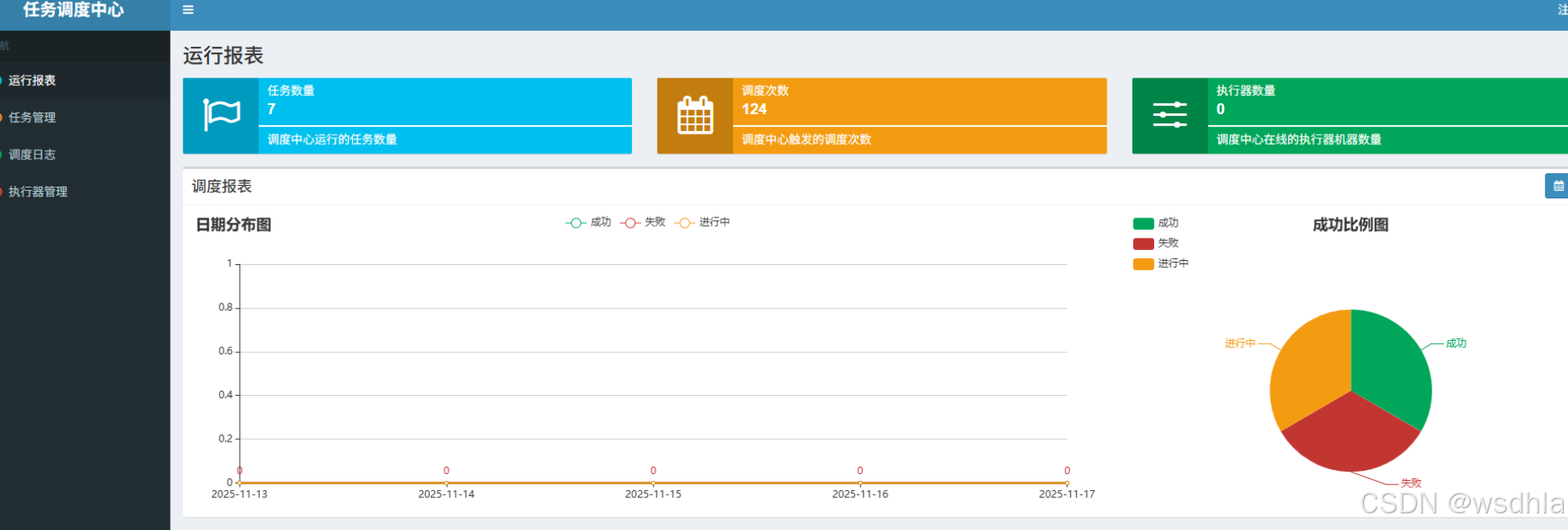

The monitoring view on the admin home page doesn't show anything about the thread pools either.

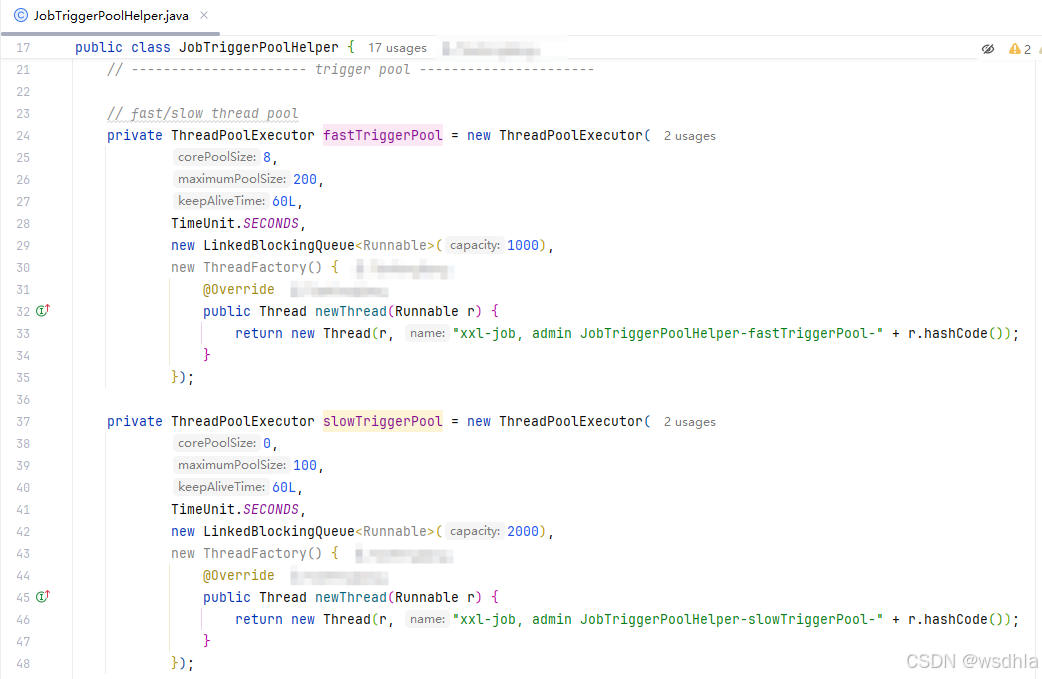

A quick look through the source turns up the pool's default configuration.
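Whatever the exact numbers, a "pool is full" failure follows the standard `ThreadPoolExecutor` rules: new work first goes to core threads, then to the bounded queue, then to extra threads up to the maximum, and anything beyond that goes to the rejection handler. The minimal sketch below reproduces this with deliberately tiny sizes and the JDK's `AbortPolicy`; the class name and sizes are illustrative assumptions, not xxl-job's actual defaults or rejection policy.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PoolSaturationDemo {

    /**
     * Submits `tasks` blocking tasks to a bounded pool and returns how many were rejected.
     * The sizes passed in are illustrative, not xxl-job's real defaults.
     */
    public static int countRejected(int core, int max, int queueCapacity, int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core, max, 60L, TimeUnit.SECONDS,
                new LinkedBlockingQueue<Runnable>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy()); // throws RejectedExecutionException when saturated
        int rejected = 0;
        try {
            for (int i = 0; i < tasks; i++) {
                try {
                    pool.execute(() -> {
                        try {
                            release.await(); // simulate a slow job that keeps its thread busy
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    });
                } catch (RejectedExecutionException e) {
                    rejected++;
                }
            }
        } finally {
            release.countDown(); // let the blocked and queued tasks finish
            pool.shutdown();
            try {
                pool.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return rejected;
    }

    public static void main(String[] args) {
        // capacity = 4 threads + 10 queue slots = 14, so 20 - 14 = 6 submissions are rejected
        System.out.println("rejected = " + countRejected(2, 4, 10, 20));
    }
}
```

Running `main` prints `rejected = 6`. Enlarging the pool only moves that ceiling; if the tasks holding the threads never finish, the larger pool fills up as well.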

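There are several ways to fix this, such as changing the thread-pool configuration (though the pool can't grow without limit, and enlarging it may just hide the root cause) or scaling out xxl-job-admin horizontally with more nodes.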

But I wanted the root cause: which tasks were filling up the pool, and was some business logic misbehaving? Let's get to it!
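One useful view for "which tasks filled the pool" is what each busy thread is executing right now. A minimal sketch using only the public JDK API `Thread.getAllStackTraces()`: it keeps the top frames of every live thread whose name starts with a given prefix. It assumes the pool's threads carry a recognizable name; the `demo-trigger-` prefix and the class name are hypothetical, so check the thread names your xxl-job version actually uses (for example in a `jstack` dump) before relying on a prefix.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

public class BusyThreadDump {

    /** Top `depth` frames of every live thread whose name starts with `prefix`, keyed by thread name. */
    public static Map<String, List<StackTraceElement>> dump(String prefix, int depth) {
        Map<String, List<StackTraceElement>> out = new TreeMap<>();
        for (Map.Entry<Thread, StackTraceElement[]> e : Thread.getAllStackTraces().entrySet()) {
            String name = e.getKey().getName();
            if (name.startsWith(prefix)) {
                StackTraceElement[] frames = e.getValue();
                out.put(name, Arrays.asList(frames).subList(0, Math.min(depth, frames.length)));
            }
        }
        return out;
    }

    public static void main(String[] args) throws InterruptedException {
        // Hypothetical naming scheme: give pool threads a recognizable prefix when creating the pool
        AtomicInteger seq = new AtomicInteger();
        ThreadFactory named = r -> new Thread(r, "demo-trigger-" + seq.getAndIncrement());
        ExecutorService pool = Executors.newFixedThreadPool(2, named);

        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < 2; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // stand-in for a job that is stuck
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        Thread.sleep(200); // give the workers time to reach await()

        dump("demo-trigger-", 3).forEach((name, frames) -> System.out.println(name + " -> " + frames));

        release.countDown();
        pool.shutdown();
    }
}
```

This avoids reflecting into `ThreadPoolExecutor`'s private fields, which newer JDKs block unless the module is opened with `--add-opens`.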

3. Finding a way to monitor the pools

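The code below was only tested locally and is meant as a starting point; if you have a better approach, see you in the comments.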
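Whichever side you monitor, once you hold a `ThreadPoolExecutor` reference the useful numbers come from its public getters. A minimal sketch of a reusable snapshot helper (`PoolSnapshot` is a hypothetical name); reading several pools through one helper also avoids copy-paste slips between them.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PoolSnapshot {

    /** Collects the public ThreadPoolExecutor metrics into an insertion-ordered map. */
    public static Map<String, Object> snapshot(ThreadPoolExecutor pool) {
        Map<String, Object> m = new LinkedHashMap<>();
        m.put("corePoolSize", pool.getCorePoolSize());
        m.put("maximumPoolSize", pool.getMaximumPoolSize());
        m.put("poolSize", pool.getPoolSize());
        m.put("largestPoolSize", pool.getLargestPoolSize());       // high-water mark of live threads
        m.put("activeCount", pool.getActiveCount());               // approximate
        m.put("queueSize", pool.getQueue().size());
        m.put("queueRemainingCapacity", pool.getQueue().remainingCapacity());
        m.put("completedTaskCount", pool.getCompletedTaskCount()); // approximate
        return m;
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 60L, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>(100));
        System.out.println(snapshot(pool));
        pool.shutdown();
    }
}
```

`largestPoolSize` equal to `maximumPoolSize` means the pool has reached its ceiling at least once, and `queueRemainingCapacity` at 0 means the queue is full right now; together they are the "pool is full" condition.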

3.1 xxl-job-admin side

First, on the xxl-job-admin side, expose the state of the trigger thread pools:

    import cn.hutool.json.JSONObject;
    import com.xxl.job.admin.core.thread.JobTriggerPoolHelper;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.lang.reflect.Field;
    import java.util.concurrent.ThreadPoolExecutor;

    @RestController
    public class HealthController {

        @GetMapping("/health/thread-pool")
        public JSONObject threadPoolHealth() throws Exception {
            // JobTriggerPoolHelper keeps its singleton in the private static field "helper"
            Field helperField = JobTriggerPoolHelper.class.getDeclaredField("helper");
            helperField.setAccessible(true);
            JobTriggerPoolHelper helper = (JobTriggerPoolHelper) helperField.get(null);

            JSONObject result = new JSONObject();
            result.put("fastTriggerPool", describe(readPool(helper, "fastTriggerPool")));
            result.put("slowTriggerPool", describe(readPool(helper, "slowTriggerPool")));
            return result;
        }

        // Reads one of the helper's private ThreadPoolExecutor fields by name
        private static ThreadPoolExecutor readPool(JobTriggerPoolHelper helper, String fieldName) throws Exception {
            Field field = JobTriggerPoolHelper.class.getDeclaredField(fieldName);
            field.setAccessible(true);
            return (ThreadPoolExecutor) field.get(helper);
        }

        // Public ThreadPoolExecutor metrics for one pool
        private static JSONObject describe(ThreadPoolExecutor executor) {
            JSONObject json = new JSONObject();
            json.put("activeCount", executor.getActiveCount());
            json.put("poolSize", executor.getPoolSize());
            json.put("queueSize", executor.getQueue().size());
            json.put("completedTaskCount", executor.getCompletedTaskCount());
            return json;
        }

        // Disabled experiment: map each worker thread of a pool to its current stack trace,
        // to see which jobs are holding the threads. "workers" is a private JDK field guarded by
        // the pool's mainLock (iterating without it can race), and on JDK 16+ reading it needs
        // --add-opens java.base/java.util.concurrent=ALL-UNNAMED. Also needs imports for Set and Arrays.
        //
        // private static JSONObject workerStacks(ThreadPoolExecutor executor) throws Exception {
        //     JSONObject stacks = new JSONObject();
        //     Field workersField = ThreadPoolExecutor.class.getDeclaredField("workers");
        //     workersField.setAccessible(true);
        //     Set<?> workers = (Set<?>) workersField.get(executor);
        //     for (Object worker : workers) {
        //         // each ThreadPoolExecutor.Worker wraps the Thread it runs on
        //         Field threadField = worker.getClass().getDeclaredField("thread");
        //         threadField.setAccessible(true);
        //         Thread thread = (Thread) threadField.get(worker);
        //         stacks.put(thread.getName(), Arrays.toString(thread.getStackTrace()));
        //     }
        //     return stacks;
        // }
    }

3.2 Executor web application side

The code is as follows:

    import com.xxl.job.core.executor.XxlJobExecutor;
    import com.xxl.job.core.executor.impl.XxlJobSpringExecutor;
    import com.xxl.job.core.handler.IJobHandler;
    import com.xxl.job.core.thread.JobThread;
    import org.springframework.beans.factory.support.DefaultListableBeanFactory;
    import org.springframework.context.ApplicationContext;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    import javax.annotation.Resource;
    import java.lang.ref.Reference;
    import java.lang.reflect.Field;
    import java.util.*;
    import java.util.stream.Collectors;

    @RestController
    public class MoniXxlThreadController {

        @Resource
        private ApplicationContext applicationContext;

        @GetMapping("/health/xxl-thread")
        @SuppressWarnings("unchecked")
        public List<Map<String, Integer>> threadPoolHealth() throws Exception {
            // Exploration via private reflection (spring-beans 5.3.x, DefaultListableBeanFactory;
            // see registerBeanDefinition() and preInstantiateSingletons()). The static map
            // "serializableFactories" is keyed by the serialization id, i.e. the context id
            // ("application" by default in Spring Boot).
            Field serializableFactoriesField = DefaultListableBeanFactory.class.getDeclaredField("serializableFactories");
            serializableFactoriesField.setAccessible(true);
            Map<String, Reference<DefaultListableBeanFactory>> serializableFactories =
                    (Map<String, Reference<DefaultListableBeanFactory>>) serializableFactoriesField.get(null);
            // Reference.get() instead of reflecting on "referent", which JDK 12+ hides from reflection
            DefaultListableBeanFactory beanFactory = serializableFactories.get(applicationContext.getId()).get();
            Field beanDefinitionNamesField = DefaultListableBeanFactory.class.getDeclaredField("beanDefinitionNames");
            beanDefinitionNamesField.setAccessible(true);
            List<String> beanDefinitionNames = (List<String>) beanDefinitionNamesField.get(beanFactory);
            // Inspect these in a debugger to find the executor's bean name
            // (the public applicationContext.getBeanDefinitionNames() returns the same names)
            List<String> executorBeans = beanDefinitionNames.stream().filter(x -> x.toUpperCase().contains("EXECUTOR")).collect(Collectors.toList());
            List<String> jobBeans = beanDefinitionNames.stream().filter(x -> x.toUpperCase().contains("JOB")).collect(Collectors.toList());

            // Equivalent to (XxlJobSpringExecutor) applicationContext.getBean("xxlJobExecutor")
            XxlJobSpringExecutor xxlJobSpringExecutor = applicationContext.getBean(XxlJobSpringExecutor.class);

            // Both repositories are private fields declared on the parent class XxlJobExecutor
            Field jobHandlerRepositoryField = XxlJobExecutor.class.getDeclaredField("jobHandlerRepository");
            jobHandlerRepositoryField.setAccessible(true);
            Map<String, IJobHandler> jobHandlerRepository = (Map<String, IJobHandler>) jobHandlerRepositoryField.get(xxlJobSpringExecutor);
            Field jobThreadRepositoryField = XxlJobExecutor.class.getDeclaredField("jobThreadRepository");
            jobThreadRepositoryField.setAccessible(true);
            Map<Integer, JobThread> jobThreadRepository = (Map<Integer, JobThread>) jobThreadRepositoryField.get(xxlJobSpringExecutor);

            // Count live JobThreads per handler class
            Map<String, Integer> result = new HashMap<>();
            jobThreadRepository.forEach((jobId, jobThread) ->
                    result.merge(jobThread.getHandler().getClass().getName(), 1, Integer::sum));

            // Sort handlers by thread count, descending
            return result.entrySet().stream()
                    .sorted(Map.Entry.<String, Integer>comparingByValue().reversed())
                    .map(entry -> Collections.singletonMap(entry.getKey(), entry.getValue()))
                    .collect(Collectors.toList());
        }
    }

Give it a try.
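Stripped of the reflection, the executor-side endpoint boils down to "count live job threads per handler class, then sort by count, highest first". A minimal sketch of just that aggregation on plain data, so it can be tested without a running executor; the class name and handler names are hypothetical sample values, and `Map.merge` replaces the get-or-default-then-put pattern.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class HandlerThreadCount {

    /** Counts entries per handler name and returns them sorted by count, highest first. */
    public static List<Map.Entry<String, Integer>> countByHandler(Collection<String> handlerNamePerThread) {
        Map<String, Integer> counts = new HashMap<>();
        for (String handlerName : handlerNamePerThread) {
            counts.merge(handlerName, 1, Integer::sum); // insert 1, or add 1 to the existing count
        }
        return counts.entrySet().stream()
                .sorted(Map.Entry.<String, Integer>comparingByValue().reversed())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical sample: the handler class name of each live JobThread
        List<String> handlerNamePerThread = Arrays.asList(
                "com.demo.OrderSyncHandler", "com.demo.ReportHandler",
                "com.demo.OrderSyncHandler", "com.demo.OrderSyncHandler");
        System.out.println(countByHandler(handlerNamePerThread));
    }
}
```

Running `main` prints `[com.demo.OrderSyncHandler=3, com.demo.ReportHandler=1]`. Since `jobThreadRepository` holds one `JobThread` per integer key (presumably the job ID), a handler shared by several jobs is counted once per job that currently has a live thread.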
