Blog: http://www.fanlegefan.com

Article: http://www.fanlegefan.com/archives/azkabanjob/

Jobs without parameters

All of the jobs here are placeholders that just print output instead of doing real work.

1. o2o_2_hive.job: load the cleaned data into Hive

2. o2o_clean_data.job: run a MapReduce job to clean the data on HDFS

3. o2o_up_2_hdfs.job: upload the files to HDFS

4. o2o_get_file_ftp1.job: fetch logs from ftp1

5. o2o_get_file_ftp2.job: fetch logs from ftp2

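Dependencies:

Job 3 depends on jobs 4 and 5, job 2 depends on job 3, and job 1 depends on job 2. Jobs 4 and 5 have no dependencies, so they can run independently of each other.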
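The dependency rules above form a small directed graph, and a valid run order can be checked locally before anything is uploaded. The sketch below is not part of the original post: it assumes the standard `tsort` utility is available and feeds it one "prerequisite dependent" pair per line, using the job names from the list above. Azkaban works out the order itself; this is only a sanity check.

```shell
#!/usr/bin/env bash

# Print one valid execution order for the flow.
# Each input line reads "A B", meaning job A must finish before job B starts.
flow_order() {
  tsort <<'EOF'
o2o_get_file_ftp1 o2o_up_2_hdfs
o2o_get_file_ftp2 o2o_up_2_hdfs
o2o_up_2_hdfs o2o_clean_data
o2o_clean_data o2o_2_hive
EOF
}

flow_order
```

The two FTP jobs come first in either order, followed by o2o_up_2_hdfs, o2o_clean_data, and finally o2o_2_hive.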

1. o2o_2_hive.job

type=command

command=sh /job/o2o_2_hive.sh

dependencies=o2o_clean_data

2. o2o_clean_data.job

type=command

command=sh /job/o2o_clean_data.sh

dependencies=o2o_up_2_hdfs

3. o2o_up_2_hdfs.job

type=command

command=echo "hadoop fs -put /data/*"

# Multiple dependencies are separated by commas

dependencies=o2o_get_file_ftp1,o2o_get_file_ftp2

4. o2o_get_file_ftp1.job

type=command

command=echo "wget ftp://file1 -O /data/file1"

5. o2o_get_file_ftp2.job

type=command

command=echo "wget ftp://file2 -O /data/file2"

Directory structure

~/test/azkaban/flow$ tree

.

├── job

│ ├── o2o_2_hive.sh

│ └── o2o_clean_data.sh

├── o2o_2_hive.job

├── o2o_clean_data.job

├── o2o_get_file_ftp1.job

├── o2o_get_file_ftp2.job

└── o2o_up_2_hdfs.job
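A misspelled name in a `dependencies=` line is easy to miss until the upload fails or the flow comes out wrong. As a hedged sketch (the `check_deps` helper is hypothetical and not from the original post), the function below scans every `.job` file in a directory and reports any dependency that has no matching `<name>.job` file next to it.

```shell
#!/usr/bin/env bash

# check_deps DIR
# For every DIR/*.job, read its "dependencies=" line, split it on commas,
# and verify that DIR/<name>.job exists for each listed name.
# Returns non-zero if at least one dependency is missing.
check_deps() {
  local dir=$1 status=0 job line dep
  local -a deps
  for job in "$dir"/*.job; do
    [ -e "$job" ] || continue            # no .job files at all
    line=$(grep '^dependencies=' "$job" | tail -n 1)
    line=${line#dependencies=}
    IFS=',' read -r -a deps <<< "$line"
    for dep in "${deps[@]}"; do
      dep=${dep//[[:space:]]/}           # drop stray spaces and CR characters
      if [ -z "$dep" ]; then
        continue
      fi
      if [ ! -f "$dir/$dep.job" ]; then
        echo "$(basename "$job"): missing dependency '$dep'" >&2
        status=1
      fi
    done
  done
  return "$status"
}

# Example: run from the flow directory before packaging.
#   check_deps . && echo "dependencies look consistent"
```

The check is purely about file names; it does not detect cycles or validate the `command=` lines.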

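Packaging

zip -r flow.zip *

Jobs with parameters

Modify o2o_2_hive.job

Accept day as a parameter, assign it to the job property d, and pass that value to o2o_2_hive.sh as its first argument.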

type=command

d=${day}

command=sh job/o2o_2_hive.sh ${d}

dependencies=o2o_clean_data

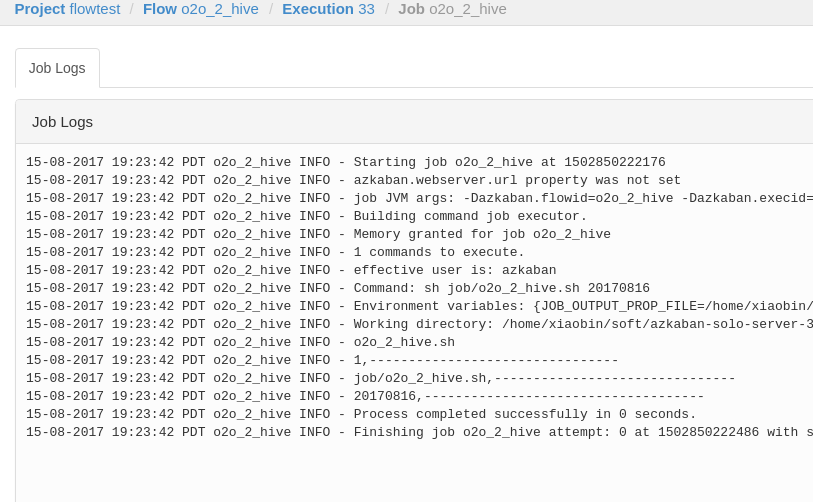

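Modify o2o_2_hive.sh

#!/usr/bin/env bash

# Print the script name, the argument count ($#), the path the script was invoked with ($0), and the first argument ($1, the day value)

echo "o2o_2_hive.sh"

echo "$#,--------------------------------"

echo "$0,-------------------------------"

echo "$1,------------------------------------"

Repackage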

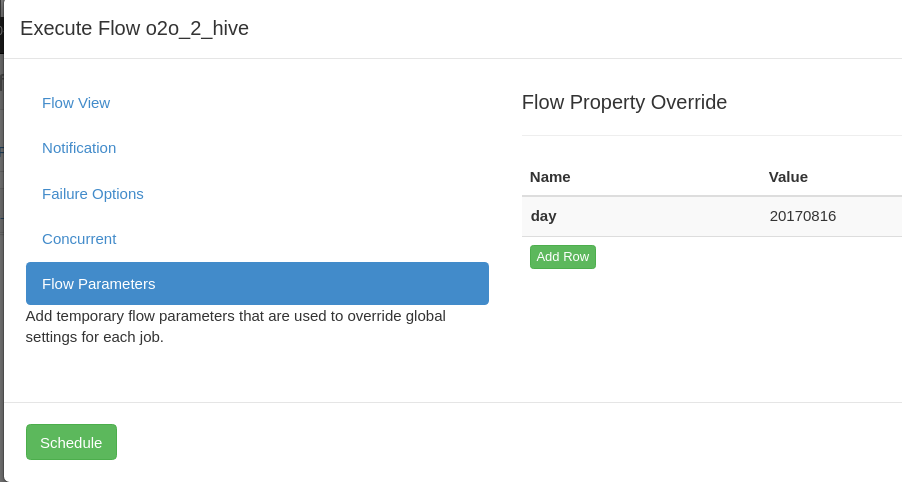

zip -r flow.zip *

Pass the parameter when executing the job
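In the Azkaban web UI, the value for day is supplied as a flow parameter when the flow is executed. Azkaban then substitutes it into `${day}`, so the job effectively runs `sh job/o2o_2_hive.sh <day>`. The sketch below is not from the original post: it reproduces that final command line locally, with no Azkaban involved, to show what the script prints. The `run_with_day` helper and the value 20170101 are hypothetical.

```shell
#!/usr/bin/env bash

# run_with_day DAY
# Recreate job/o2o_2_hive.sh in a temporary directory and invoke it the way
# the job's command line looks after ${d} has been replaced by DAY.
run_with_day() {
  local day=$1 workdir
  workdir=$(mktemp -d)
  mkdir -p "$workdir/job"
  cat > "$workdir/job/o2o_2_hive.sh" <<'EOF'
#!/usr/bin/env bash
echo "o2o_2_hive.sh"
echo "$#,--------------------------------"
echo "$0,-------------------------------"
echo "$1,------------------------------------"
EOF
  # Stand-in for: command=sh job/o2o_2_hive.sh ${d}
  (cd "$workdir" && sh job/o2o_2_hive.sh "$day")
  rm -rf "$workdir"
}

run_with_day 20170101   # hypothetical day value
```

The second output line begins with 1 (one argument was received), the third with job/o2o_2_hive.sh, and the fourth with the day value that was passed in.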

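This article describes an Azkaban-based job scheduling setup. It covers the configuration of jobs with and without parameters, how dependencies are declared, and how shell scripts automate the full pipeline: fetching data from FTP, uploading it to HDFS, cleaning it, and loading it into Hive.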
