需求

获取博客文章的链接、标题、类型、简介内容。

环境

Win7 x64,Python 3.7

实现

# coding: UTF-8

from bs4 import BeautifulSoup

import urllib.request as urlrequest

import re

class Spider(object):

def __init__(self):

self.pages=[]

self.datas=[]

self.root="https://blog.youkuaiyun.com/wingrez"

def claw(self, startpage, endpage):

for i in range(startpage, endpage+1):

self.pages.append(self.root+"/article/list/%d?" % i )

for url in self.pages:

self.getDatas(url)

def download(self, url): # 下载当前网页内容

if url is None:

print("链接为空!")

return None

response=urlrequest.urlopen(url)

if response.getcode()!=200:

print("访问失败!")

return None

return response.read()

def getDatas(self, url): # 获取当前页所有文章信息

html_cont=self.download(url)

soup=BeautifulSoup(html_cont, 'html.parser', from_encoding='UTF-8')

articles=soup.find_all('div', class_='article-item-box csdn-tracking-statistics')

for article in articles:

data={}

tag_a=article.find('h4').find('a')

data['url']=tag_a['href']

tag_span=tag_a.find('span')

data['type']=tag_span.string

data['title']=tag_span.next_element.next_element

data['summary']=article.find('p').find('a').string

self.datas.append(data)

def output(self): # 输出数据

fout=open('output.html','w',encoding="UTF-8")

fout.write("<html>")

fout.write("<body>")

fout.write("<table>")

for data in self.datas:

fout.write("<tr>")

fout.write("<td>%s</td>" % data['type'])

fout.write("<td>%s</td>" % data['url'])

fout.write("<td>%s</td>" % data['title'])

fout.write("<td>%s</td>" % data['summary'])

fout.write("</tr>")

fout.write("</table>")

fout.write("</body>")

fout.write("</html>")

fout.close()

if __name__=="__main__":

spider=Spider()

spider.claw(1,12)

spider.output()

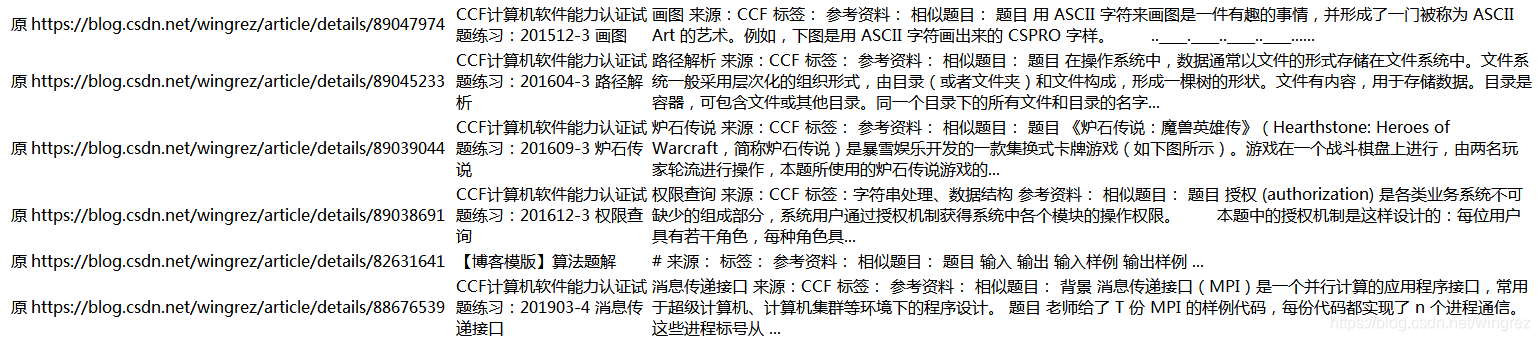

效果

本文介绍了一个使用Python和BeautifulSoup实现的简单爬虫,用于抓取优快云博客上的文章链接、标题、类型及简介。该爬虫在Windows 7 x64环境下运行,针对Python 3.7版本进行编写。通过定义Spider类,实现了从指定页面范围内的优快云博客抓取数据,并将结果输出到HTML文件中。

本文介绍了一个使用Python和BeautifulSoup实现的简单爬虫,用于抓取优快云博客上的文章链接、标题、类型及简介。该爬虫在Windows 7 x64环境下运行,针对Python 3.7版本进行编写。通过定义Spider类,实现了从指定页面范围内的优快云博客抓取数据,并将结果输出到HTML文件中。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?