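Preface

This article is a re-implementation of Attention by an expert at 前桥大学 (Maebashi University). I translated it while studying it, to pass the favor along. Re-implementing Attention and the Transformer step by step alongside the paper was very rewarding and deepened my understanding. If you spot any problems, please leave a comment.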

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import math, copy, time
from torch.autograd import Variable
import matplotlib.pyplot as plt
import seaborn
seaborn.set_context(context="talk")
%matplotlib inline

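Model Architecture

Most competitive neural sequence transduction models have an encoder-decoder structure (cite). Here, the encoder maps an input sequence of symbol representations $(x_1, \dots, x_n)$ to a sequence of continuous representations $z = (z_1, \dots, z_n)$. Given $z$, the decoder then generates an output sequence of symbols $(y_1, \dots, y_m)$ one element at a time. At each step the model is auto-regressive (cite): it consumes all previously generated symbols as additional input when generating the next one.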

class EncoderDecoder(nn.Module):
    """
    A standard Encoder-Decoder architecture. Base for this and many other models.
    """
    def __init__(self, encoder, decoder, src_embed, tgt_embed, generator):
        super(EncoderDecoder, self).__init__()
        self.encoder = encoder
        self.decoder = decoder
        self.src_embed = src_embed
        self.tgt_embed = tgt_embed
        self.generator = generator

    def forward(self, src, tgt, src_mask, tgt_mask):
        """Take in and process masked src and target sequences."""
        return self.decode(self.encode(src, src_mask), src_mask, tgt, tgt_mask)

    def encode(self, src, src_mask):
        return self.encoder(self.src_embed(src), src_mask)

    def decode(self, memory, src_mask, tgt, tgt_mask):
        return self.decoder(self.tgt_embed(tgt), memory, src_mask, tgt_mask)

class Generator(nn.Module):
    """Define standard linear + softmax generation step."""
    def __init__(self, d_model, vocab):
        super(Generator, self).__init__()
        self.proj = nn.Linear(d_model, vocab)

    def forward(self, x):
        return F.log_softmax(self.proj(x), dim=-1)
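The auto-regressive behaviour described above can be sketched as a simple greedy loop. This is only an illustration using the standard library: `greedy_decode`, `toy_next_token`, and the `START`/`END` token ids are hypothetical names, and the toy function stands in for the full encoder, decoder, and generator.

```python
from typing import Callable, List

def greedy_decode(next_token: Callable[[List[int], List[int]], int],
                  src: List[int], start: int, end: int, max_len: int) -> List[int]:
    """Generate tokens one at a time, feeding every earlier output back in."""
    ys = [start]
    for _ in range(max_len):
        tok = next_token(src, ys)   # depends on src and on all tokens so far
        ys.append(tok)
        if tok == end:              # stop once the end symbol is produced
            break
    return ys

# Hypothetical stand-in for the real model: copy src, then emit END.
START, END = 0, 1

def toy_next_token(src: List[int], ys: List[int]) -> int:
    pos = len(ys) - 1               # number of tokens generated so far
    return src[pos] if pos < len(src) else END

out = greedy_decode(toy_next_token, [5, 6, 7], START, END, max_len=10)
print(out)
```

In a real model, `next_token` would run the decoder on `ys` and take the argmax of the generator's log-probabilities at the last position.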

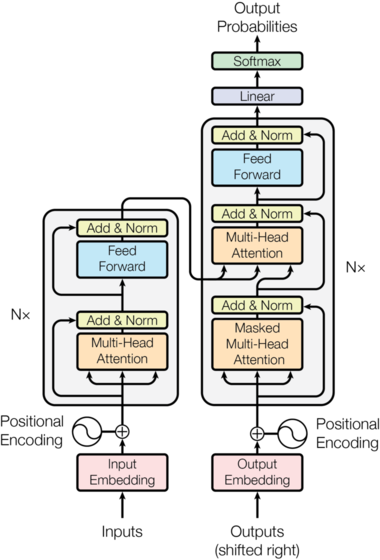

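The Transformer follows this overall architecture, using stacked self-attention and point-wise, fully connected layers for both the encoder and the decoder, shown in the left and right halves of the figure below, respectively: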

from IPython.display import Image
Image(filename='images/ModalNet-21.png')

Encoder and Decoder Stacks

Encoder

The encoder is composed of a stack of $N = 6$ identical layers.

def clones(module, N):
    "Produce N identical layers."
    return nn.ModuleList([copy.deepcopy(module) for _ in range(N)])

class Encoder(nn.Module):
    "Core encoder is a stack of N layers"
    def __init__(self, layer, N):
        super(Encoder, self).__init__()
        self.layers = clones(layer, N)
        self.norm = LayerNorm(layer.size)

    def forward(self, x, mask):
        "Pass the input (and mask) through each layer in turn."
        for layer in self.layers:
            x = layer(x, mask)
        return self.norm(x)

A residual connection (cite) is employed around each of the two sub-layers, followed by layer normalization (cite).

class LayerNorm(nn.Module):
    """Construct a layernorm module (see citation for details)."""
    def __init__(self, features, eps=1e-6):
        super(LayerNorm, self).__init__()
        self.a_2 = nn.Parameter(torch.ones(features))   # learned gain
        self.b_2 = nn.Parameter(torch.zeros(features))  # learned bias
        self.eps = eps

    def forward(self, x):
        mean = x.mean(-1, keepdim=True)
        std = x.std(-1, keepdim=True)
        return self.a_2 * (x - mean) / (std + self.eps) + self.b_2
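To see what this normalization does numerically, here is a minimal standard-library sketch for a single feature vector. It assumes a scalar `gain` and `bias` in place of the learned per-feature vectors `a_2` and `b_2`; the function name `layer_norm` is illustrative only.

```python
import statistics

def layer_norm(x, gain=1.0, bias=0.0, eps=1e-6):
    """Normalize one feature vector the way LayerNorm.forward does."""
    mean = statistics.mean(x)
    # Sample standard deviation (divides by n - 1), which matches the
    # default of torch.Tensor.std used in the class above.
    std = statistics.stdev(x)
    return [gain * (v - mean) / (std + eps) + bias for v in x]

y = layer_norm([1.0, 2.0, 3.0, 4.0])
print(y)
print(statistics.mean(y), statistics.stdev(y))
```

With the default gain and bias, the output has mean 0 and a sample standard deviation just under 1; the small shortfall comes from adding `eps` to the standard deviation rather than to the variance.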

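That is, the output of each sub-layer is $\mathrm{LayerNorm}(x + \mathrm{Sublayer}(x))$, where $\mathrm{Sublayer}(x)$ is the function implemented by the sub-layer itself. Dropout (cite) is applied to the output of each sub-layer before it is added to the sub-layer input and normalized.

To facilitate these residual connections, all sub-layers in the model, as well as the embedding layers, produce outputs of dimension $d_{model} = 512$.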

class SublayerConnection(nn.Module):
    """
    A residual connection followed by a layer norm.
    Note for code simplicity the norm is first as opposed to last.
    """
    def __init__(self, size, dropout):
        super(SublayerConnection, self).__init__()
        self.norm = LayerNorm(size)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, sublayer):
        """Apply residual connection to any sublayer with the same size."""
        return x + self.dropout(sublayer(self.norm(x)))
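The docstring above notes that the code normalizes first, whereas the formula in the text normalizes last. The following standard-library sketch contrasts the two orderings on a toy vector. It omits dropout and the learned gain and bias, and `double` is a hypothetical stand-in for a real sub-layer.

```python
import statistics

def norm(x, eps=1e-6):
    """Layer normalization without learned gain and bias."""
    mean, std = statistics.mean(x), statistics.stdev(x)
    return [(v - mean) / (std + eps) for v in x]

def add(a, b):
    return [p + q for p, q in zip(a, b)]

def post_norm(x, sublayer):
    """Ordering in the text: LayerNorm(x + Sublayer(x))."""
    return norm(add(x, sublayer(x)))

def pre_norm(x, sublayer):
    """Ordering in SublayerConnection: x + Sublayer(LayerNorm(x))."""
    return add(x, sublayer(norm(x)))

def double(v):
    """Hypothetical sub-layer: multiply every element by 2."""
    return [2.0 * t for t in v]

x = [1.0, 2.0, 3.0, 4.0]
a = post_norm(x, double)   # normalized output, mean 0
b = pre_norm(x, double)    # residual path keeps the mean of x
print(statistics.mean(a), statistics.mean(b))
```

Because the norm-first variant leaves its output unnormalized, a final normalization after the last layer is a natural companion to it, which is consistent with `Encoder.forward` returning `self.norm(x)`.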

Each layer has two sub-layers. The first is a multi-head self-attention mechanism, and the second is a simple, position-wise fully connected feed-forward network.

class EncoderLayer(nn.Module):
    """Encoder is made up of self-attention and feed forward (defined below)"""
    def __init__(self, size, self_attn, feed_forward, dropout):
        super(EncoderLayer, self).__init__()
        self.self_attn = self_attn
        self.feed_forward = feed_forward
        self.sublayer = clones(SublayerConnection(size, dropout), 2)
        self.size = size

    def forward(self, x, mask):
        """Follow Figure 1 (left) for connections."""
        x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, mask))
        return self.sublayer[1](x, self.feed_forward)

Decoder

The decoder is also composed of a stack of $N = 6$ identical layers.

class Decoder(nn.Module):
    """Generic N layer decoder with masking."""
    def __init__(self, layer, N):
        super(Decoder, self).__init__()
        self.layers = clones(layer, N)
        self.norm = LayerNorm(layer.size)

    def forward(self, x, memory, src_mask, tgt_mask):
        for layer in self.layers:
            x = layer(x, memory, src_mask, tgt_mask)
        return self.norm(x)

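In addition to the two sub-layers found in each encoder layer, each decoder layer inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. As in the encoder, a residual connection is used around each sub-layer, followed by layer normalization.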

class DecoderLayer(nn.Module):
    """Decoder is made of self-attn, src-attn, and feed forward (defined below)"""
    def __init__(self, size, self_attn, src_attn, feed_forward, dropout):
        super(DecoderLayer, self).__init__()
        self.size = size
        self.self_attn = self_attn
        self.src_attn = src_attn
        self.feed_forward = feed_forward
        self.sublayer = clones(SublayerConnection(size, dropout), 3)

    def forward(self, x, memory, src_mask, tgt_mask):
        """Follow Figure 1 (right) for connections."""
        m = memory
        x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, tgt_mask))
        x = self.sublayer[1](x, lambda x: self.src_attn(x, m, m, src_mask))
        return self.sublayer[2](x, self.feed_forward)

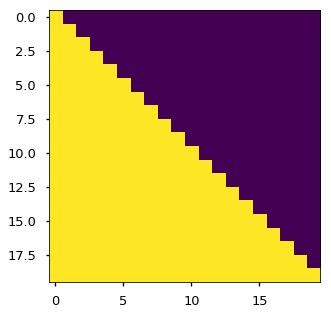

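The self-attention sub-layer in the decoder stack is modified to prevent positions from attending to subsequent positions. This masking, combined with the fact that the output embeddings are offset by one position, ensures that the prediction for position $i$ can depend only on the known outputs at positions less than $i$.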

def subsequent_mask(size):
    """Mask out subsequent positions."""
    attn_shape = (1, size, size)
    subsequent_mask = np.triu(np.ones(attn_shape), k=1).astype('uint8')
    return torch.from_numpy(subsequent_mask) == 0

plt.figure(figsize=(5, 5))
plt.imshow(subsequent_mask(20)[0])
None

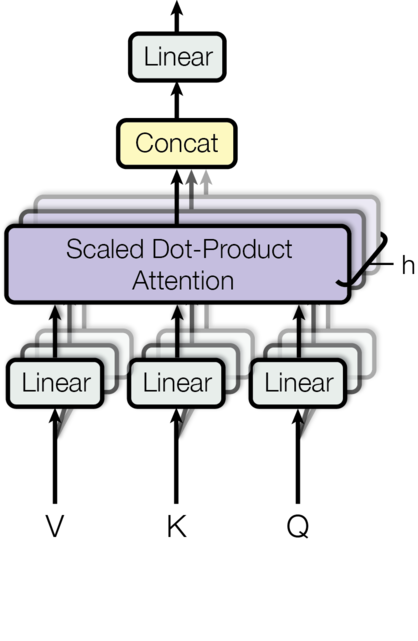
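For a small size the same mask can be written out by hand. The sketch below rebuilds it with plain Python lists rather than NumPy and PyTorch; `causal_mask` is an illustrative name, not part of the original code.

```python
def causal_mask(size):
    """True where position i (row) may attend to position j (column), i.e. j <= i."""
    return [[j <= i for j in range(size)] for i in range(size)]

mask = causal_mask(4)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
# 1000
# 1100
# 1110
# 1111
```

Each row is a target position and each column is a position it is allowed to look at, so the first position sees only itself and the last sees everything before it.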

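Attention

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.

The particular attention used here is called "Scaled Dot-Product Attention". The input consists of queries and keys of dimension $d_k$, and values of dimension $d_v$. We compute the dot products of the query with all keys, divide each by $\sqrt{d_k}$, and apply a softmax function to obtain the weights on the values. The $\sqrt{d_k}$ factor keeps the dot products from growing too large (otherwise the softmax output becomes nearly one-hot and is no longer "soft").

In practice, the attention function is computed on a set of queries simultaneously, packed together into a matrix $Q$. The keys and values are likewise packed into matrices $K$ and $V$. The output is then computed as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$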

def attention(query, key, value, mask=None, dropout=None):
    """Compute 'Scaled Dot Product Attention'."""
    d_k = query.size(-1)
    # matmul performs (batched) matrix multiplication
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
    if mask is not None:
        scores = scores.masked_fill(mask == 0, -1e9)
    p_attn = F.softmax(scores, dim=-1)
    if dropout is not None:
        p_attn = dropout(p_attn)
    return torch.matmul(p_attn, value), p_attn
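A worked example with one query and two key-value pairs may make the formula more concrete. This is a standard-library sketch without masking or dropout; `softmax` and `attend` are illustrative helpers, not the tensor version above.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d_k = len(query)
    # One score per key: dot(query, key) / sqrt(d_k)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    d_v = len(values[0])
    # Output is the weighted sum of the value vectors.
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)]
    return output, weights

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attend([4.0, 0.0], keys, values)
print(weights, output)
```

The query points in the direction of the first key, so most of the weight goes to the first value, and the output is a blend dominated by it.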

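The two most commonly used attention functions are additive attention (cite) and dot-product (multiplicative) attention.

The implementation here is dot-product attention, except for the scaling factor of $\frac{1}{\sqrt{d_k}}$. Additive attention computes the compatibility function using a feed-forward network with a single hidden layer.

The two are similar in theoretical complexity, but dot-product attention is faster and more space-efficient in practice, since it can be implemented using highly optimized matrix multiplication code.

For small values of $d_k$ the two mechanisms perform similarly, but for larger values of $d_k$ additive attention outperforms dot-product attention without scaling.

Analysis: the suspicion is that for large values of $d_k$, the dot products grow large in magnitude, pushing the softmax function into regions where it has extremely small gradients, which hurts performance. (To see why the dot products get large, assume that the components of $q$ and $k$ are independent random variables with mean 0 and variance 1. Then their dot product $q \cdot k = \sum_{i=1}^{d_k} q_i k_i$ has mean 0 and variance $d_k$.) To counteract this effect, the dot products are scaled by $\frac{1}{\sqrt{d_k}}$.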
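The variance argument above can be checked empirically. The sketch below is a small Monte Carlo estimate using only the standard library; the dimension `d_k = 16`, the sample count, and the seed are arbitrary choices for illustration.

```python
import random
import statistics

random.seed(0)
d_k, n_samples = 16, 4000

def random_dot(d):
    """Dot product of two vectors with independent N(0, 1) components."""
    return sum(random.gauss(0, 1) * random.gauss(0, 1) for _ in range(d))

raw = [random_dot(d_k) for _ in range(n_samples)]
scaled = [s / d_k ** 0.5 for s in raw]

var_raw = statistics.variance(raw)        # expected to be close to d_k
var_scaled = statistics.variance(scaled)  # expected to be close to 1
print(var_raw, var_scaled)
```

Dividing by $\sqrt{d_k}$ divides the variance by $d_k$, which brings the scores back to roughly unit variance regardless of the dimension.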

from IPython.display import Image
Image("images/ModalNet-20.png")

Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. With a single attention head, averaging inhibits this. In formulas:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)W^O$$

where $\mathrm{head}_i = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)$, with projection matrices $W_i^Q \in \mathbb{R}^{d_{model} \times d_k}$, $W_i^K \in \mathbb{R}^{d_{model} \times d_k}$, $W_i^V \in \mathbb{R}^{d_{model} \times d_v}$, and $W^O \in \mathbb{R}^{hd_v \times d_{model}}$.
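The shapes of these projection matrices can be tallied with a few lines of arithmetic. The sketch below takes $d_{model} = 512$ from the text and assumes $h = 8$ heads with $d_k = d_v = d_{model} / h$, which is the base configuration in the paper rather than something stated in this section. Biases are ignored.

```python
d_model, h = 512, 8            # h = 8 is an assumption (the paper's base setting)
d_k = d_v = d_model // h       # 64 dimensions per head

shapes = {
    "W_i^Q": (d_model, d_k),
    "W_i^K": (d_model, d_k),
    "W_i^V": (d_model, d_v),
    "W^O": (h * d_v, d_model),
}

# Weights in the three per-head projections, then across all heads plus W^O.
per_head = sum(r * c for name, (r, c) in shapes.items() if name != "W^O")
total = h * per_head + shapes["W^O"][0] * shapes["W^O"][1]

for name, shape in shapes.items():
    print(name, shape)
print("total weights:", total)
```

Under these assumptions the total equals that of four $d_{model} \times d_{model}$ matrices, so splitting into heads changes how the weights are organized, not how many there are.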

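This article walks through implementing the Transformer model from the paper "Attention Is All You Need", covering the encoder and decoder stacks, multi-head attention, positional encoding, and other key components, along with training techniques such as label smoothing and multi-GPU training.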
