ZooKeeper version: zookeeper-3.4.11

JDK version: 1.8.0_161

Server: 192.168.1.150 (all three ZooKeeper nodes run on this single machine, each with its own ports)

| Server node | Config file | dataDir | dataLogDir | clientPort | myid |
| --- | --- | --- | --- | --- | --- |
| 192.168.1.150:2181 | /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg | /usr/local/zookeeper-3.4.11/data_1 | /usr/local/zookeeper-3.4.11/logs_1 | 2181 | 1 |
| 192.168.1.150:2182 | /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg | /usr/local/zookeeper-3.4.11/data_2 | /usr/local/zookeeper-3.4.11/logs_2 | 2182 | 2 |
| 192.168.1.150:2183 | /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg | /usr/local/zookeeper-3.4.11/data_3 | /usr/local/zookeeper-3.4.11/logs_3 | 2183 | 3 |

All three configuration files contain the same server list:

server.1=192.168.1.150:2287:3387

server.2=192.168.1.150:2288:3388

server.3=192.168.1.150:2289:3389

Node N (N = 1, 2, 3) is initialized with echo "N" > /usr/local/zookeeper-3.4.11/data_N/myid.

It is started with /usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_N.cfg.

Note: for a production deployment, use internal network addresses. Open ports 2181/2182/2183 in the firewall, and also allow these three ports in the security group's inbound rules.
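Why three nodes? A ZooKeeper ensemble only serves requests while a strict majority (quorum) of the servers listed in the server.N entries is running. The sketch below computes the quorum size and the number of node failures an ensemble of a given size can tolerate. `QuorumMath` is a hypothetical helper written for illustration, not part of ZooKeeper.

```java
/** Hypothetical helper illustrating ZooKeeper's majority-quorum rule. */
class QuorumMath {

    /** Smallest number of servers that forms a strict majority of the ensemble. */
    static int majority(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    /** How many servers can be down while a majority is still available. */
    static int toleratedFailures(int ensembleSize) {
        return ensembleSize - majority(ensembleSize);
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 5; n++) {
            System.out.printf("ensemble=%d quorum=%d tolerated failures=%d%n",
                    n, majority(n), toleratedFailures(n));
        }
    }
}
```

With the three nodes above, the quorum is 2, so the cluster keeps working with one node down. A fourth node would raise the quorum to 3 without tolerating any additional failure, which is why odd ensemble sizes are the usual choice.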

1. Extract the archive

Extract it into the /usr/local/ directory.

Command:

tar -zxvf zookeeper-3.4.11.tar.gz -C /usr/local/


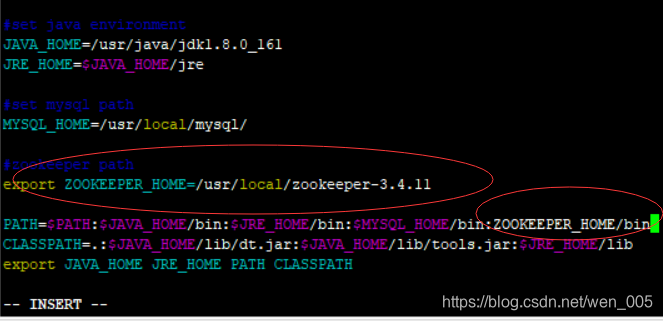

2. Configure environment variables

vim /etc/profile

#zookeeper path

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.11

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$MYSQL_HOME/bin:$ZOOKEEPER_HOME/bin

After saving and exiting, run

source /etc/profile

to apply the new environment variables.

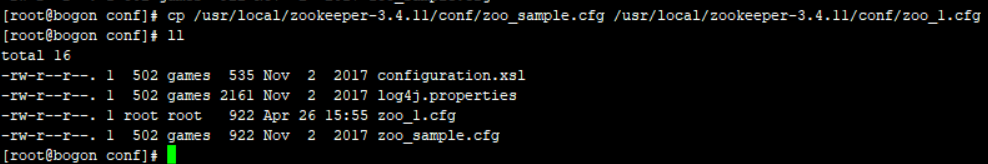
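To confirm that the profile change took effect in a new shell, you can check that `$ZOOKEEPER_HOME/bin` is on `PATH`. The hypothetical `EnvCheck` class below does this from Java, using the JDK already installed for ZooKeeper. It only reads the environment and changes nothing.

```java
import java.io.File;

/** Hypothetical check that $ZOOKEEPER_HOME/bin is an entry of PATH. */
class EnvCheck {

    /** Returns true if dir appears as one entry of a PATH-style string. */
    static boolean pathContains(String path, String dir) {
        if (path == null || dir == null) {
            return false;
        }
        // File.pathSeparator is ":" on Linux
        for (String entry : path.split(File.pathSeparator)) {
            if (entry.equals(dir)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String home = System.getenv("ZOOKEEPER_HOME");
        String path = System.getenv("PATH");
        System.out.println("ZOOKEEPER_HOME=" + home);
        boolean ok = home != null && pathContains(path, home + "/bin");
        System.out.println(ok ? "ZooKeeper bin directory is on PATH"
                              : "ZooKeeper bin directory is NOT on PATH");
    }
}
```

A PATH entry written as `ZOOKEEPER_HOME/bin` (without the `$`) is kept literally by the shell, so it would fail this check.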

3. Create the zoo.cfg configuration files

(1) Create the configuration file for node 1:

cp /usr/local/zookeeper-3.4.11/conf/zoo_sample.cfg /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg

vim /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg

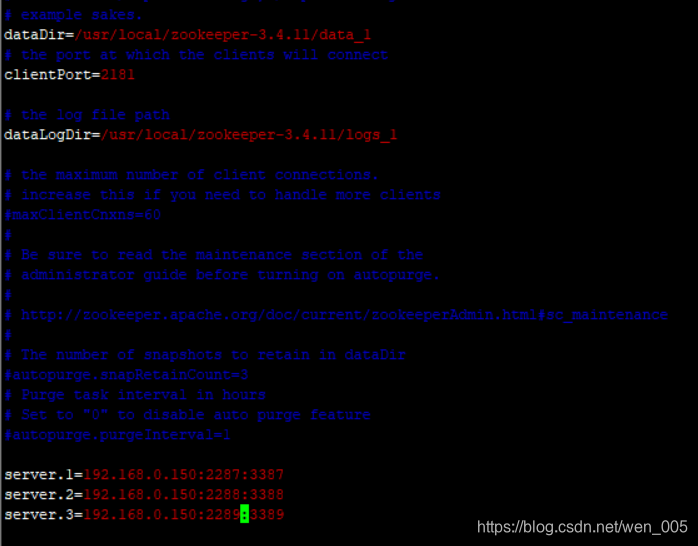

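Edit the following settings. The remaining defaults copied from zoo_sample.cfg, such as tickTime, initLimit and syncLimit, stay as they are.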

# the directory where the snapshot is stored.

dataDir=/usr/local/zookeeper-3.4.11/data_1

# the port at which the clients will connect

clientPort=2181

# the directory where the transaction logs are written

dataLogDir=/usr/local/zookeeper-3.4.11/logs_1

# the server node

server.1=127.0.0.1:2287:3387

server.2=127.0.0.1:2288:3388

server.3=127.0.0.1:2289:3389
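Every node's config must list each server exactly once, with a unique id N in server.N. A copy-paste slip, such as repeating `server.2` where `server.3` was intended, is easy to miss.

The hypothetical `ServerListCheck` class below parses the `server.N=host:quorumPort:electionPort` lines of a config text. It reports duplicate ids, and ports reused on the same host. It is a simplified sketch: it ignores any optional suffix after the election port and treats different host strings as different machines.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sanity check for the server.N entries of a zoo.cfg text. */
class ServerListCheck {

    /** Returns human-readable problems found in the server.N lines. */
    static List<String> check(String cfgText) {
        List<String> problems = new ArrayList<>();
        Set<String> ids = new HashSet<>();
        Set<String> endpoints = new HashSet<>();
        for (String raw : cfgText.split("\\r?\\n")) {
            String line = raw.trim();
            if (!line.startsWith("server.")) {
                continue; // comments, dataDir, clientPort, ...
            }
            int eq = line.indexOf('=');
            if (eq < 0) {
                problems.add("malformed line: " + line);
                continue;
            }
            String id = line.substring("server.".length(), eq);
            String[] parts = line.substring(eq + 1).split(":");
            if (parts.length < 3) {
                problems.add("expected host:quorumPort:electionPort in: " + line);
                continue;
            }
            if (!ids.add(id)) {
                problems.add("duplicate server id " + id);
            }
            // Quorum and election ports must not collide on the same host
            for (int i = 1; i <= 2; i++) {
                String endpoint = parts[0] + ":" + parts[i];
                if (!endpoints.add(endpoint)) {
                    problems.add("port reused: " + endpoint);
                }
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        String cfg = "server.1=192.168.1.150:2287:3387\n"
                + "server.2=192.168.1.150:2288:3388\n"
                + "server.2=192.168.1.150:2289:3389\n";
        System.out.println(check(cfg)); // prints [duplicate server id 2]
    }
}
```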

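(2) Create the configuration file for node 2:

cp /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg

vim /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg

Change the following settings (the server list stays the same):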

# the directory where the snapshot is stored.

dataDir=/usr/local/zookeeper-3.4.11/data_2

# the port at which the clients will connect

clientPort=2182

# the directory where the transaction logs are written

dataLogDir=/usr/local/zookeeper-3.4.11/logs_2

# the server node

server.1=127.0.0.1:2287:3387

server.2=127.0.0.1:2288:3388

server.3=127.0.0.1:2289:3389

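(3) Create the configuration file for node 3:

cp /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg

vim /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg

Change the following settings (the server list stays the same):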

# the directory where the snapshot is stored.

dataDir=/usr/local/zookeeper-3.4.11/data_3

# the port at which the clients will connect

clientPort=2183

# the directory where the transaction logs are written

dataLogDir=/usr/local/zookeeper-3.4.11/logs_3

# the server node

server.1=127.0.0.1:2287:3387

server.2=127.0.0.1:2288:3388

server.3=127.0.0.1:2289:3389
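The three files differ only in dataDir, dataLogDir and clientPort. The hypothetical `ZooCfgGenerator` below renders them from the node number, so the copies cannot drift apart.

The tickTime/initLimit/syncLimit values are an assumption: they are taken to match the zoo_sample.cfg defaults (2000, 10, 5). Keep whatever your copied sample file contains.

```java
/** Hypothetical generator for the zoo_N.cfg files described above. */
class ZooCfgGenerator {

    static final String ZK_HOME = "/usr/local/zookeeper-3.4.11";

    /** Renders the zoo_N.cfg content for node n (1..3). */
    static String render(int n) {
        StringBuilder sb = new StringBuilder();
        // Timing settings assumed to be the zoo_sample.cfg defaults
        sb.append("tickTime=2000\n");
        sb.append("initLimit=10\n");
        sb.append("syncLimit=5\n");
        sb.append("dataDir=").append(ZK_HOME).append("/data_").append(n).append('\n');
        sb.append("dataLogDir=").append(ZK_HOME).append("/logs_").append(n).append('\n');
        sb.append("clientPort=").append(2180 + n).append('\n');
        // The server list is identical in every node's file
        for (int id = 1; id <= 3; id++) {
            sb.append("server.").append(id).append("=127.0.0.1:")
              .append(2286 + id).append(':').append(3386 + id).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 3; n++) {
            System.out.println("# zoo_" + n + ".cfg");
            System.out.println(render(n));
        }
    }
}
```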

4. Create the directories for ZooKeeper data and transaction logs

mkdir -p /usr/local/zookeeper-3.4.11/data_1

mkdir -p /usr/local/zookeeper-3.4.11/data_2

mkdir -p /usr/local/zookeeper-3.4.11/data_3

mkdir -p /usr/local/zookeeper-3.4.11/logs_1

mkdir -p /usr/local/zookeeper-3.4.11/logs_2

mkdir -p /usr/local/zookeeper-3.4.11/logs_3

# Set permissions (can be skipped when running as root)

sudo chmod -R 777 /usr/local/zookeeper-3.4.11/data_{1,2,3}

sudo chmod -R 777 /usr/local/zookeeper-3.4.11/logs_{1,2,3}
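The same directories can also be created from Java with `Files.createDirectories`. Like `mkdir -p`, it creates missing parents and does nothing if the directory already exists.

In this hypothetical `DataDirSetup` sketch the base directory is a parameter, so it can be tried in a scratch location first.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical Java equivalent of the mkdir -p commands above. */
class DataDirSetup {

    /** Creates data_1..data_n and logs_1..logs_n under base; safe to run repeatedly. */
    static List<Path> createDirs(Path base, int nodes) throws IOException {
        List<Path> created = new ArrayList<>();
        for (int n = 1; n <= nodes; n++) {
            created.add(Files.createDirectories(base.resolve("data_" + n)));
            created.add(Files.createDirectories(base.resolve("logs_" + n)));
        }
        return created;
    }

    public static void main(String[] args) throws IOException {
        Path base = Paths.get(args.length > 0 ? args[0] : "/usr/local/zookeeper-3.4.11");
        for (Path p : createDirs(base, 3)) {
            System.out.println("ok: " + p);
        }
    }
}
```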

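5. In each of data_1, data_2 and data_3, create a myid file containing 1, 2 or 3 respectively (the number must match N in the corresponding server.N entry)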

echo "1" > /usr/local/zookeeper-3.4.11/data_1/myid

echo "2" > /usr/local/zookeeper-3.4.11/data_2/myid

echo "3" > /usr/local/zookeeper-3.4.11/data_3/myid

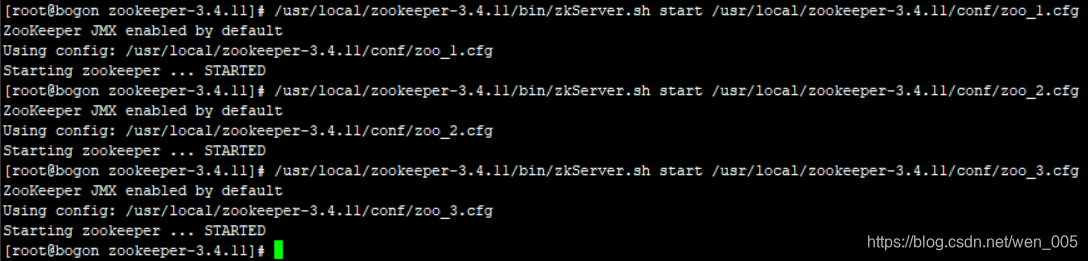
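A node whose myid does not match any server.N id cannot take part in the ensemble, so it is worth reading the files back. The hypothetical `MyIdCheck` below writes myid the same way as the echo commands (the number followed by a newline). It then reads each file back, trimming whitespace, and confirms the id is one of the configured ids.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper for writing and verifying myid files. */
class MyIdCheck {

    /** Writes the id like `echo "N" > myid` does: the number plus a newline. */
    static void writeMyId(Path dataDir, int id) throws IOException {
        Files.write(dataDir.resolve("myid"), (id + "\n").getBytes(StandardCharsets.US_ASCII));
    }

    /** Reads myid back, ignoring surrounding whitespace such as the trailing newline. */
    static int readMyId(Path dataDir) throws IOException {
        byte[] raw = Files.readAllBytes(dataDir.resolve("myid"));
        return Integer.parseInt(new String(raw, StandardCharsets.US_ASCII).trim());
    }

    /** True if the id appears among the configured server.N ids. */
    static boolean isConfigured(int id, List<Integer> serverIds) {
        return serverIds.contains(id);
    }

    public static void main(String[] args) throws IOException {
        List<Integer> serverIds = Arrays.asList(1, 2, 3);
        for (int n = 1; n <= 3; n++) {
            Path dataDir = Paths.get("/usr/local/zookeeper-3.4.11/data_" + n);
            int id = readMyId(dataDir);
            System.out.println(dataDir + " myid=" + id + " configured=" + isConfigured(id, serverIds));
        }
    }
}
```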

6. Start the nodes

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg

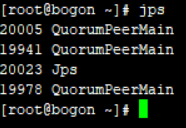

Verify that the nodes started (each running node appears as a QuorumPeerMain process):

Command:

jps

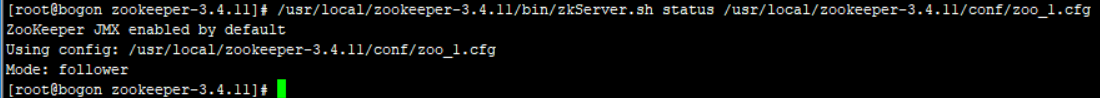

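Check the status. Repeat the command with zoo_2.cfg and zoo_3.cfg; one node should report Mode: leader and the other two Mode: follower.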

/usr/local/zookeeper-3.4.11/bin/zkServer.sh status /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg
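The same information can be requested from each node directly by sending the `srvr` four-letter command to its client port. The reply includes a `Mode:` line (leader, follower or standalone).

The hypothetical `ModeProbe` below sends `srvr` to ports 2181 to 2183 on 192.168.1.150 and extracts that line. It assumes four-letter commands are allowed by the server; releases from 3.4.10 on can restrict them with the `4lw.commands.whitelist` setting.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.Reader;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical probe that asks each node for its mode via the srvr command. */
class ModeProbe {

    /** Returns the value of the "Mode:" line in a srvr reply, or null if absent. */
    static String parseMode(Reader reply) throws IOException {
        BufferedReader in = new BufferedReader(reply);
        String line;
        while ((line = in.readLine()) != null) {
            if (line.startsWith("Mode:")) {
                return line.substring("Mode:".length()).trim();
            }
        }
        return null;
    }

    /** Sends srvr and parses the reply; the server closes the connection after answering. */
    static String probe(String host, int port) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 3000);
            socket.setSoTimeout(3000);
            OutputStream out = socket.getOutputStream();
            out.write("srvr".getBytes(StandardCharsets.US_ASCII));
            out.flush();
            return parseMode(new InputStreamReader(socket.getInputStream(), StandardCharsets.US_ASCII));
        }
    }

    public static void main(String[] args) {
        for (int port = 2181; port <= 2183; port++) {
            try {
                System.out.println(port + " -> " + probe("192.168.1.150", port));
            } catch (IOException e) {
                System.out.println(port + " -> unreachable: " + e.getMessage());
            }
        }
    }
}
```

In a healthy three-node ensemble, one port should report leader and the other two follower.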

To simplify startup, you can put the start commands in a shell script:

vim start-zk-cluster.sh

Contents:

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg

Create a script to stop the cluster:

vim stop-zk-cluster.sh

Contents:

/usr/local/zookeeper-3.4.11/bin/zkServer.sh stop /usr/local/zookeeper-3.4.11/conf/zoo_1.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh stop /usr/local/zookeeper-3.4.11/conf/zoo_2.cfg

/usr/local/zookeeper-3.4.11/bin/zkServer.sh stop /usr/local/zookeeper-3.4.11/conf/zoo_3.cfg

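Make both scripts executable:

chmod +x start-zk-cluster.sh

chmod +x stop-zk-cluster.sh

After that, run ./start-zk-cluster.sh to start the cluster and ./stop-zk-cluster.sh to stop it.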

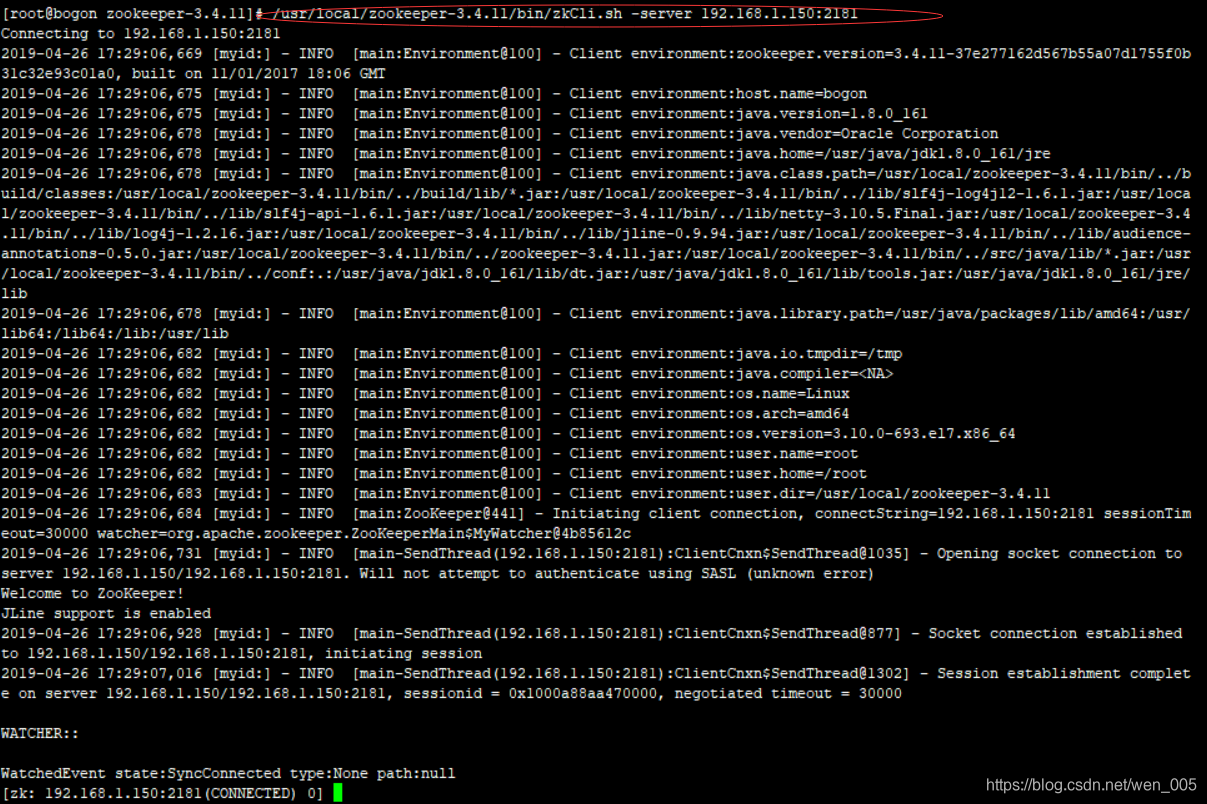
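If you prefer to drive the cluster from Java (for example, from a test harness), the same three calls can be issued with `ProcessBuilder`. `ClusterControl` is a hypothetical sketch that builds the `zkServer.sh start|stop|status <config>` commands used in the scripts above. It runs them one after another and forwards their output to the console.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical Java counterpart of start-zk-cluster.sh / stop-zk-cluster.sh. */
class ClusterControl {

    static final String ZK_HOME = "/usr/local/zookeeper-3.4.11";

    /** Builds one zkServer.sh command per node for the given action. */
    static List<List<String>> commands(String action, int nodes) {
        List<List<String>> cmds = new ArrayList<>();
        for (int n = 1; n <= nodes; n++) {
            cmds.add(Arrays.asList(ZK_HOME + "/bin/zkServer.sh", action,
                    ZK_HOME + "/conf/zoo_" + n + ".cfg"));
        }
        return cmds;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String action = args.length > 0 ? args[0] : "status";
        for (List<String> cmd : commands(action, 3)) {
            // inheritIO() forwards the script's output to this console
            int exit = new ProcessBuilder(cmd).inheritIO().start().waitFor();
            System.out.println(String.join(" ", cmd) + " exited with " + exit);
        }
    }
}
```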

7. Connect with the command-line client and test

/usr/local/zookeeper-3.4.11/bin/zkCli.sh -server 192.168.1.150:2181

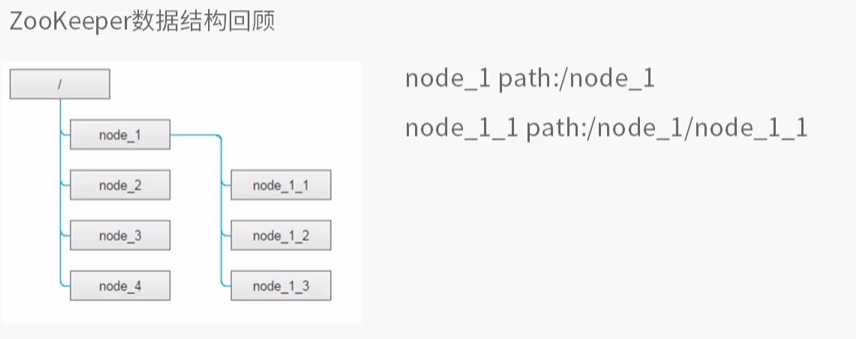

ls / : lists all child nodes under the root node


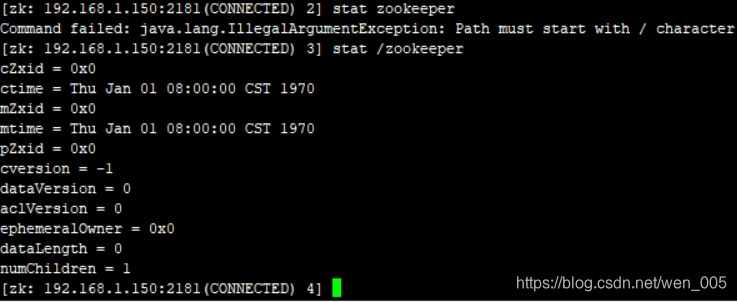

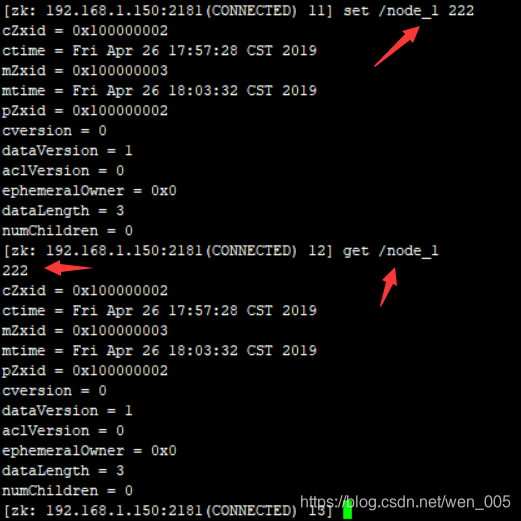

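stat path : gets the status information (metadata) of the given node. The fields are:

cZxid: the ID of the transaction that created the node

ctime: the time the node was created

mZxid: the ID of the transaction that last modified the node's data

mtime: the time the node's data was last modified

pZxid: the ID of the transaction that last changed the node's list of children (adding or deleting a child)

cversion: the version number of the node's children (the number of changes to its child list)

dataVersion: the version number of the node's data

aclVersion: the version number of the node's ACL

ephemeralOwner: if the node is ephemeral, the session ID of the client that owns it; 0 otherwise

dataLength: the length of the node's data

numChildren: the number of children of the node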

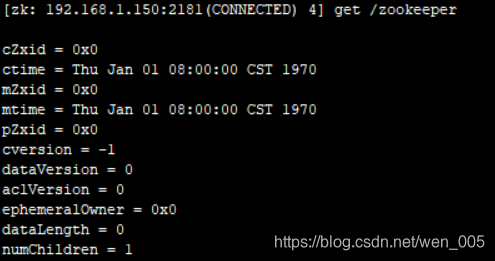
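The zxid fields printed by `stat` (cZxid, mZxid, pZxid) are 64-bit numbers shown in hex. The high 32 bits are the leader epoch and the low 32 bits are a counter within that epoch, so decoding a zxid shows which leader term a change belongs to.

The hypothetical `ZxidDecoder` below parses the `name = value` lines of `stat` output and splits each zxid. The sample values in `main` are illustrative, not captured from the cluster above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical decoder for zkCli stat output and zxid values. */
class ZxidDecoder {

    /** Parses "name = value" lines as printed by zkCli's stat command. */
    static Map<String, String> parseStat(String output) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : output.split("\\r?\\n")) {
            int eq = line.indexOf(" = ");
            if (eq > 0) {
                fields.put(line.substring(0, eq).trim(), line.substring(eq + 3).trim());
            }
        }
        return fields;
    }

    /** Parses a zxid such as "0x100000002". */
    static long parseZxid(String hex) {
        return Long.parseLong(hex.startsWith("0x") ? hex.substring(2) : hex, 16);
    }

    /** Leader epoch: the high 32 bits of the zxid. */
    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    /** Counter within the epoch: the low 32 bits of the zxid. */
    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    public static void main(String[] args) {
        String sample = "cZxid = 0x100000002\nmZxid = 0x200000005\ndataVersion = 1\n";
        for (Map.Entry<String, String> e : parseStat(sample).entrySet()) {
            if (e.getKey().endsWith("Zxid")) {
                long z = parseZxid(e.getValue());
                System.out.println(e.getKey() + ": epoch=" + epoch(z) + " counter=" + counter(z));
            }
        }
    }
}
```

For the sample, cZxid decodes to epoch 1, counter 2. The later mZxid belongs to epoch 2, meaning a new leader had been elected before that change.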

get path : gets the data stored in the given node

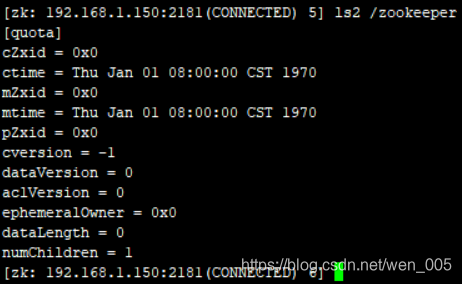

ls2 path : combines ls and stat, listing the node's children together with its status

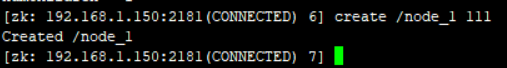

create [-s] [-e] path data acl

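-s : creates a sequential node

-e : creates an ephemeral node (note: an ephemeral node is deleted automatically when the session of the client that created it ends)

path : node path

data : node data

acl : node permissions (optional)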
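With `-s`, ZooKeeper appends a counter, formatted as a 10-digit zero-padded number (`%010d`), to the requested path. For example, `create -s /node_1/seq- data` yields a name such as `/node_1/seq-0000000000`. The counter is maintained per parent node.

The hypothetical `SequentialName` sketch reproduces only the naming. It shows why the padding matters: plain string sorting then matches creation order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical illustration of ZooKeeper's sequential-node naming. */
class SequentialName {

    /** Appends the sequence number the way ZooKeeper formats it: %010d. */
    static String name(String requestedPath, int counter) {
        return requestedPath + String.format("%010d", counter);
    }

    public static void main(String[] args) {
        List<String> names = new ArrayList<>();
        for (int i : new int[] {10, 2, 1}) {
            names.add(name("/node_1/seq-", i));
        }
        Collections.sort(names); // string sort gives numeric order thanks to the padding
        System.out.println(names);
        // prints [/node_1/seq-0000000001, /node_1/seq-0000000002, /node_1/seq-0000000010]
    }
}
```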

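set path data [version] : updates the data of the given node. If a version is specified, it must equal the node's current dataVersion, otherwise the update is rejected.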

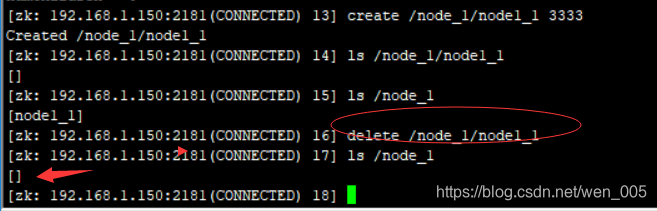
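The optional version turns `set` into a compare-and-set. The write only succeeds if the node's dataVersion still equals the given value, and each successful write increments dataVersion. In ZooKeeper's Java API, passing -1 skips the check.

The hypothetical in-memory `VersionedNode` class below models only this rule (it is not ZooKeeper code) to show why a writer holding a stale version is rejected.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical in-memory model of versioned set semantics. */
class VersionedNode {

    private byte[] data;
    private int dataVersion; // starts at 0 when the node is created

    VersionedNode(byte[] data) {
        this.data = data;
    }

    /** Sets data if expectedVersion matches (or is -1); returns the new dataVersion. */
    synchronized int set(byte[] newData, int expectedVersion) {
        if (expectedVersion != -1 && expectedVersion != dataVersion) {
            // ZooKeeper reports this case as a BadVersion error
            throw new IllegalStateException("version mismatch: expected "
                    + expectedVersion + " but node is at " + dataVersion);
        }
        data = newData;
        return ++dataVersion;
    }

    synchronized String get() {
        return new String(data, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        VersionedNode node = new VersionedNode("node001".getBytes(StandardCharsets.UTF_8));
        System.out.println(node.set("v1".getBytes(StandardCharsets.UTF_8), 0)); // prints 1
        try {
            node.set("stale".getBytes(StandardCharsets.UTF_8), 0); // writer still holding version 0
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
        System.out.println(node.get()); // prints v1
    }
}
```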

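delete path [version] : deletes the node at the given path. A node that has children cannot be deleted until its children have been deleted.

Command: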

delete /node_1/node1_1

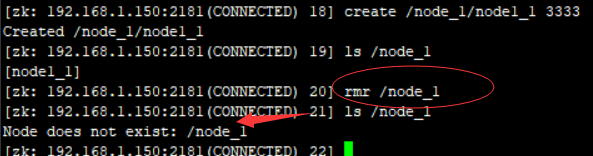

rmr path : deletes the node at the given path together with all of its descendants

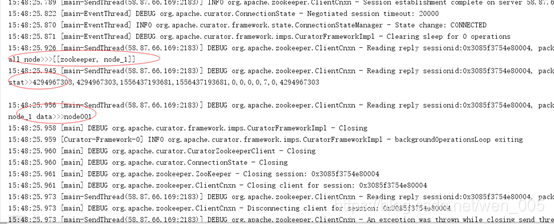
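`delete` refuses to remove a node that still has children, while `rmr` removes the whole subtree. The hypothetical in-memory `ZNodeTree` below shows the difference; it models the rule only and is not ZooKeeper's implementation. Its `rmr` deletes every child before its parent, which is the same order you would have to follow with `delete` by hand.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Hypothetical in-memory znode tree contrasting delete and rmr. */
class ZNodeTree {

    // path -> data; TreeMap keeps iteration order deterministic
    private final TreeMap<String, String> nodes = new TreeMap<>();

    ZNodeTree() {
        nodes.put("/", "");
    }

    void create(String path, String data) {
        if (!nodes.containsKey(parent(path))) {
            throw new IllegalStateException("no parent for " + path);
        }
        nodes.put(path, data);
    }

    /** Direct children only (one path segment below the node). */
    List<String> children(String path) {
        String prefix = path.equals("/") ? "/" : path + "/";
        List<String> result = new ArrayList<>();
        for (String p : nodes.keySet()) {
            if (!p.equals(path) && p.startsWith(prefix) && p.indexOf('/', prefix.length()) < 0) {
                result.add(p);
            }
        }
        return result;
    }

    /** Like zkCli delete: fails if the node has children. */
    void delete(String path) {
        if (!children(path).isEmpty()) {
            throw new IllegalStateException("Node not empty: " + path);
        }
        nodes.remove(path);
    }

    /** Like zkCli rmr: deletes all descendants first, then the node itself. */
    void rmr(String path) {
        for (String child : children(path)) {
            rmr(child);
        }
        delete(path);
    }

    boolean exists(String path) {
        return nodes.containsKey(path);
    }

    private static String parent(String path) {
        int i = path.lastIndexOf('/');
        return i == 0 ? "/" : path.substring(0, i);
    }

    public static void main(String[] args) {
        ZNodeTree t = new ZNodeTree();
        t.create("/node_1", "node001");
        t.create("/node_1/node1_1", "child");
        try {
            t.delete("/node_1");
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // prints Node not empty: /node_1
        }
        t.rmr("/node_1");
        System.out.println(t.exists("/node_1")); // prints false
    }
}
```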

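8. Java connection test

The test uses the Apache Curator client (curator-framework dependency); the Curator 2.x releases support ZooKeeper 3.4.x servers.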

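```java
import java.nio.charset.StandardCharsets;
import java.util.List;

import org.apache.curator.RetryPolicy;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.retry.ExponentialBackoffRetry;
import org.apache.curator.utils.CloseableUtils;
import org.apache.zookeeper.data.Stat;

public class ZookeeperClusterTest {

    public static void main(String[] args) throws Exception {
        // Connection string listing all three nodes of the ensemble
        String zkAddress = "192.168.1.150:2181,192.168.1.150:2182,192.168.1.150:2183";
        CuratorFramework client = null;
        try {
            // Retry up to 5 times, starting from a 1000 ms base sleep with exponential backoff
            RetryPolicy retryPolicy = new ExponentialBackoffRetry(1000, 5);
            client = CuratorFrameworkFactory.builder()
                    .connectString(zkAddress)
                    .retryPolicy(retryPolicy)
                    .sessionTimeoutMs(20000)
                    .connectionTimeoutMs(6000)
                    .build();
            client.start();

            // List the children of the root node
            List<String> list = client.getChildren().forPath("/");
            System.out.println("all node>>>" + list);

            // checkExists() returns null when the node does not exist
            Stat stat = client.checkExists().forPath("/node_1");
            System.out.println("stat>>" + stat);
            if (stat == null) {
                client.create().forPath("/node_1", "node001".getBytes(StandardCharsets.UTF_8));
            }
            // getData() returns raw bytes; decode them before printing
            System.out.println("node_1 data>>>"
                    + new String(client.getData().forPath("/node_1"), StandardCharsets.UTF_8));
        } finally {
            // Always close the client, otherwise the connection stays open
            CloseableUtils.closeQuietly(client);
        }
    }
}
```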

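This article walks through building a ZooKeeper 3.4.11 cluster step by step, covering environment configuration, node setup and startup. It also demonstrates client operations and connection testing from the command line and from Java.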
