Spring AI Customer-Service Bot: Fixing a Function Calling Compatibility Issue

Background

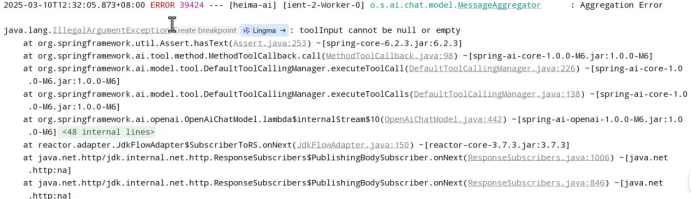

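While building an intelligent customer-service system with the Spring AI framework, we ran into a serious compatibility problem. When we used a model on Alibaba Cloud's Bailian platform for Function Calling, the system threw the following error: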

AggregationError java.lang.IllegalArgumentException: toolInput cannot be null or empty

Problem Analysis

Root Cause

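Although Alibaba Cloud Bailian advertises compatibility with the OpenAI protocol, in practice that compatibility is only partial, especially for agent features. This incomplete compatibility is what prevents Function Calling from working correctly.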

Technical Setup

Our customer-service system uses the following stack:

- Spring AI: wraps the Function Calling implementation

- Spring WebFlux: reactive programming model that provides streaming output

- Alibaba Cloud Bailian: the underlying LLM provider

The core service code:

public Flux<String> service(String prompt, String chatId) {
    // 1. Save the conversation ID
    Long userId = 1L; // in a real project, obtain this from the request context
    chatHistoryRepository.save("service", userId, chatId, prompt);

    // 2. Call the model and return a streaming response
    return serviceChatClient.prompt()
            .user(prompt)
            .advisors(advisorSpec ->
                    advisorSpec.param(CHAT_MEMORY_CONVERSATION_ID_KEY, chatId))
            .stream()
            .content();
}

Digging into the Source Code

Locating the Problem

Tracing through the source, we found that the problem lies in the buildGeneration method of the OpenAiChatModel class:

private Generation buildGeneration(OpenAiApi.ChatCompletion.Choice choice,
        Map<String, Object> metadata,
        OpenAiApi.ChatCompletionRequest request) {
    List<AssistantMessage.ToolCall> toolCalls = choice.message().toolCalls() == null ?
            List.of() :
            choice.message().toolCalls().stream().map((toolCall) -> {
                return new AssistantMessage.ToolCall(
                        toolCall.id(),
                        "function",
                        toolCall.function().name(),
                        toolCall.function().arguments() // the problem: each fragment becomes its own ToolCall
                );
            }).toList();
    // ... other code
}
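To make the failure concrete, here is a plain-Java sketch with no Spring AI dependency that imitates this one-to-one mapping. `Fragment` and `requireToolInput` are hypothetical stand-ins: `Fragment` mirrors the id, name, and arguments of a tool call, and `requireToolInput` only imitates a tool callback that rejects empty input with the same message as the error above. Because every fragment is treated as a separate tool call, the fragment whose arguments are null triggers the exception.

```java
import java.util.ArrayList;
import java.util.List;

public class OneToOneMapping {

    // Hypothetical stand-in for one tool-call fragment (id, name, arguments).
    record Fragment(String id, String name, String arguments) {}

    // Hypothetical check that imitates the error message above; not Spring AI code.
    static void requireToolInput(String toolInput) {
        if (toolInput == null || toolInput.isEmpty()) {
            throw new IllegalArgumentException("toolInput cannot be null or empty");
        }
    }

    // Treats every fragment as a separate tool call, then validates and "runs" each one.
    static List<String> executeEach(List<Fragment> fragments) {
        List<String> executed = new ArrayList<>();
        for (Fragment f : fragments) {
            requireToolInput(f.arguments());
            executed.add(f.name() + "(" + f.arguments() + ")");
        }
        return executed;
    }

    public static void main(String[] args) {
        List<Fragment> fragments = List.of(
                new Fragment("call_1", "queryCourse", "{\"type\": "),
                new Fragment("", null, "\"programming\"}"),
                new Fragment("", null, null));
        try {
            executeEach(fragments);
        } catch (IllegalArgumentException e) {
            System.out.println("Failed: " + e.getMessage()); // prints "Failed: toolInput cannot be null or empty"
        }
    }
}
```

Even before the null fragment is reached, the first two "calls" carry broken JSON and a null tool name, so the one-to-one mapping cannot work with fragmented input.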

Symptoms

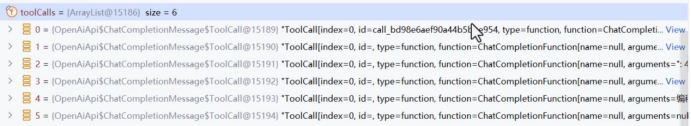

Stepping through with the debugger revealed two key problems:

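- Unexpected number of entries: under the OpenAI standard this method should receive one complete ToolCall, but with Bailian it received 6 fragments.

- Incomplete data: each of those 6 entries held only a partial piece of the tool call.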

The data actually returned looked like this:

ToolCall[index=0, id=call_bd98e6aef90a44b5bbe954, type=function, function=ChatCompletionFunction[name=queryCourse, arguments=(]]
ToolCall[index=, id=, type=function, function=ChatCompletionFunction[name=null, arguments=query:{edu]]
ToolCall[index=0, id=, type=function, function=ChatCompletionFunction[name=null, arguments=*: 4,]]
ToolCall[index=0, id=, type=function, function=ChatCompletionFunction[name=null, arguments="type*: *]]
ToolCall[index=0, id=, type=function, function=ChatCompletionFunction[name=null, arguments=编程程*]]
ToolCall[index=0, id=, type=function, function=ChatCompletionFunction[name=null, arguments=null]]

In other words, an arguments value that should have arrived whole was split into several fragments, each stored in a separate ToolCall object.
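Note that only the first fragment carries an id and a name; the later ones have an empty id (`id=`). One plausible explanation, which is our assumption and has not been verified here against the Spring AI source: in OpenAI's streaming format, the follow-up deltas of a tool call omit the id entirely, so a chunk merger that starts a new tool call whenever the id is non-null would join OpenAI's deltas but treat each of Bailian's empty-string ids as a new call. The hypothetical `IdBasedMerge.merge` below models only that rule.

```java
import java.util.ArrayList;
import java.util.List;

public class IdBasedMerge {

    // Hypothetical stand-in for one streamed tool-call delta.
    record Delta(String id, String name, String arguments) {}

    // Simplified model (not Spring AI code): a non-null id starts a new tool call,
    // a null id continues the previous one by appending its arguments.
    static List<Delta> merge(List<Delta> deltas) {
        List<Delta> calls = new ArrayList<>();
        for (Delta d : deltas) {
            if (d.id() != null || calls.isEmpty()) {
                calls.add(d);
            } else {
                Delta last = calls.remove(calls.size() - 1);
                String args = (last.arguments() == null ? "" : last.arguments())
                        + (d.arguments() == null ? "" : d.arguments());
                calls.add(new Delta(last.id(), last.name(), args));
            }
        }
        return calls;
    }

    public static void main(String[] args) {
        // OpenAI-style: follow-up deltas omit the id (null).
        List<Delta> openAi = List.of(
                new Delta("call_1", "queryCourse", "{\"edu\":"),
                new Delta(null, null, " 4}"));
        // Bailian-style, as seen in the debugger: follow-up deltas carry an empty-string id.
        List<Delta> bailian = List.of(
                new Delta("call_1", "queryCourse", "{\"edu\":"),
                new Delta("", null, " 4}"));
        System.out.println(merge(openAi).size());  // 1
        System.out.println(merge(bailian).size()); // 2
    }
}
```

Under this model, an empty string is "present" as far as a null check is concerned, which would reproduce the one-ToolCall-per-fragment shape seen above.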

Comparing Solutions

Option 1: Simplified implementation (not recommended)

Approach: give up the reactive Flux stream and switch to traditional blocking calls.

Pros:

- Simple to implement; no changes to the underlying code

- Sidesteps the compatibility problem

Cons:

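- Noticeably worse user experience

- Loses the benefits of streaming responses

- Goes against modern asynchronous programming best practices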

Option 2: Custom compatibility layer (recommended)

Approach: rewrite OpenAiChatModel so that it stitches the argument fragments back together.

Implementation Details

Core Idea

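OpenAiChatModel's buildGeneration method is private, so we cannot modify it directly, and because private methods are not inherited, a subclass cannot override it either. Given Java's encapsulation rules, the practical route is to create a custom compatibility class that contains its own copy of the model logic.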
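The plain-Java sketch below (class names are hypothetical) shows why subclassing cannot help: a `private` method redeclared in a subclass is a new, unrelated method, so the parent's public methods keep calling the original.

```java
public class PrivateNoOverride {

    static class Base {
        // Private: not inherited, so subclasses cannot override it.
        private String buildGeneration() {
            return "base";
        }

        // Public entry point that calls the private helper.
        public String call() {
            return buildGeneration();
        }
    }

    static class Patched extends Base {
        // Declares a NEW method; it does not override Base.buildGeneration().
        private String buildGeneration() {
            return "patched";
        }
    }

    public static void main(String[] args) {
        System.out.println(new Patched().call()); // prints "base"
    }
}
```

Adding `@Override` to `Patched.buildGeneration()` would not compile, which is the quickest way to confirm that the only option is a separate class with its own copy of the logic.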

Key Code

Create a custom AlibabaOpenAiChatModel class and rewrite the argument-handling logic:

private Generation buildGeneration(OpenAiApi.ChatCompletion.Choice choice,
        Map<String, Object> metadata,
        OpenAiApi.ChatCompletionRequest request) {
    List<AssistantMessage.ToolCall> toolCalls = choice.message().toolCalls() == null ?
            List.of() :
            choice.message()
                    .toolCalls()
                    .stream()
                    .map(toolCall -> new AssistantMessage.ToolCall(
                            toolCall.id(),
                            "function",
                            toolCall.function().name(),
                            toolCall.function().arguments()
                    ))
                    // Key change: merge the arguments of all fragments into a single ToolCall
                    .reduce((tc1, tc2) -> new AssistantMessage.ToolCall(
                            StringUtils.hasText(tc1.id()) ? tc1.id() : tc2.id(), // keep the first non-empty ID (null-safe)
                            "function",
                            tc1.name() != null ? tc1.name() : tc2.name(), // keep the first non-null name
                            (tc1.arguments() != null ? tc1.arguments() : "") +
                                    (tc2.arguments() != null ? tc2.arguments() : "") // concatenate the arguments
                    ))
                    .stream()
                    .toList();
    // ... remaining logic
}
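The `reduce` above folds every fragment of a response into one ToolCall, which matches the single-call case in the debugger output. If the model ever requests several tools in one turn (parallel function calling), the arguments of different calls would be glued together. A hedged alternative, assuming that a fragment with a non-empty id always starts a new tool call (consistent with the debugger output above, but not verified for every Bailian model), is to append each id-less fragment to the most recent call. `ToolCallPart` is a hypothetical stand-in for `AssistantMessage.ToolCall`, kept free of Spring AI so the logic can be tested on its own.

```java
import java.util.ArrayList;
import java.util.List;

public class FragmentMerger {

    // Hypothetical stand-in for AssistantMessage.ToolCall (id, name, arguments).
    record ToolCallPart(String id, String name, String arguments) {}

    static boolean hasText(String s) {
        return s != null && !s.isBlank();
    }

    // A fragment with a non-empty id opens a new tool call; any other fragment is
    // appended to the most recent call. Null arguments are treated as empty strings.
    static List<ToolCallPart> merge(List<ToolCallPart> parts) {
        List<ToolCallPart> calls = new ArrayList<>();
        for (ToolCallPart p : parts) {
            String args = p.arguments() == null ? "" : p.arguments();
            if (hasText(p.id()) || calls.isEmpty()) {
                calls.add(new ToolCallPart(p.id(), p.name(), args));
            } else {
                ToolCallPart last = calls.remove(calls.size() - 1);
                String name = hasText(last.name()) ? last.name() : p.name();
                calls.add(new ToolCallPart(last.id(), name, last.arguments() + args));
            }
        }
        return calls;
    }

    public static void main(String[] args) {
        // "querySchool" is a hypothetical second tool used only to show two calls in one turn.
        List<ToolCallPart> parts = List.of(
                new ToolCallPart("call_a", "queryCourse", "{\"edu\":"),
                new ToolCallPart("", null, " 4}"),
                new ToolCallPart("", null, null),
                new ToolCallPart("call_b", "querySchool", "{}"));
        merge(parts).forEach(System.out::println);
    }
}
```

Inside AlibabaOpenAiChatModel, the same loop could replace the `reduce(...)` step, with `AssistantMessage.ToolCall` in place of `ToolCallPart`.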

Complete Code

package com.example.model;

import io.micrometer.observation.Observation;
import io.micrometer.observation.ObservationRegistry;
import io.micrometer.observation.contextpropagation.ObservationThreadLocalAccessor;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.ai.chat.messages.AssistantMessage;
import org.springframework.ai.chat.messages.MessageType;
import org.springframework.ai.chat.messages.ToolResponseMessage;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.metadata.*;
import org.springframework.ai.chat.model.*;
import org.springframework.ai.chat.observation.ChatModelObservationContext;
import org.springframework.ai.chat.observation.ChatModelObservationConvention;
import org.springframework.ai.chat.observation.ChatModelObservationDocumentation;
import org.springframework.ai.chat.observation.DefaultChatModelObservationConvention;
import org.springframework.ai.chat.prompt.ChatOptions;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.model.Media;
import org.springframework.ai.model.ModelOptionsUtils;
import org.springframework.ai.model.function.FunctionCallback;
import org.springframework.ai.model.function.FunctionCallbackResolver;
import org.springframework.ai.model.function.FunctionCallingOptions;
import org.springframework.ai.model.tool.LegacyToolCallingManager;
import org.springframework.ai.model.tool.ToolCallingChatOptions;
import org.springframework.ai.model.tool.ToolCallingManager;
import org.springframework.ai.model.tool.ToolExecutionResult;
import org.springframework.ai.openai.OpenAiChatOptions;
import org.springframework.ai.openai.api.OpenAiApi;
import org.springframework.ai.openai.api.common.OpenAiApiConstants;
import org.springframework.ai.openai.metadata.support.OpenAiResponseHeaderExtractor;
import org.springframework.ai.retry.RetryUtils;
import org.springframework.ai.tool.definition.ToolDefinition;
import org.springframework.core.io.ByteArrayResource;
import org.springframework.core.io.Resource;
import org.springframework.http.ResponseEntity;
import org.springframework.lang.Nullable;
import org.springframework.retry.support.RetryTemplate;
import org.springframework.util.*;
import reactor.core.publisher.Flux;
import reactor.core.publisher.Mono;

import java.util.*;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

public class AlibabaOpenAiChatModel extends AbstractToolCallSupport implements ChatModel {

    private static final Logger logger = LoggerFactory.getLogger(AlibabaOpenAiChatModel.class);

    private static final ChatModelObservationConvention DEFAULT_OBSERVATION_CONVENTION = new DefaultChatModelObservationConvention();

    private static final ToolCallingManager DEFAULT_TOOL_CALLING_MANAGER = ToolCallingManager.builder().build();

    /**
     * The default options used for the chat completion requests.
     */
    private final OpenAiChatOptions defaultOptions;

    /**
     * The retry template used to retry the OpenAI API calls.
     */
    private final RetryTemplate retryTemplate;

    /**
     * Low-level access to the OpenAI API.
     */
    private final OpenAiApi openAiApi;

    /**
     * Observation registry used for instrumentation.
     */
    private final ObservationRegistry observationRegistry;

    private final ToolCallingManager toolCallingManager;

    /**
     * Conventions to use for generating observations.
     */
    private ChatModelObservationConvention observationConvention = DEFAULT_OBSERVATION_CONVENTION;

    /**
     * Creates an instance of the AlibabaOpenAiChatModel.
     * @param openAiApi The OpenAiApi instance to be used for interacting with the OpenAI
     * Chat API.
     * @throws IllegalArgumentException if openAiApi is null
     * @deprecated Use AlibabaOpenAiChatModel.Builder.
     */
    @Deprecated
    public AlibabaOpenAiChatModel(OpenAiApi openAiApi) {
        this(openAiApi, OpenAiChatOptions.builder().model(OpenAiApi.DEFAULT_CHAT_MODEL).temperature(0.7).build());
    }

    /**
     * Initializes an instance of the AlibabaOpenAiChatModel.
     * @param openAiApi The OpenAiApi instance to be used for interacting with the OpenAI
     * Chat API.
     * @param options The OpenAiChatOptions to configure the chat model.
     * @deprecated Use AlibabaOpenAiChatModel.Builder.
     */
    @Deprecated
    public AlibabaOpenAiChatModel(OpenAiApi openAiApi, OpenAiChatOptions options) {
        this(openAiApi, options, null, RetryUtils.DEFAULT_RETRY_TEMPLATE);
    }

    /**
     * Initializes a new instance of the AlibabaOpenAiChatModel.
     * @param openAiApi The OpenAiApi instance to be used for interacting with the OpenAI
     * Chat API.
     * @param options The OpenAiChatOptions to configure the chat model.
     * @param functionCallbackResolver The function callback resolver.
     * @param retryTemplate The retry template.
     * @deprecated Use AlibabaOpenAiChatModel.Builder.
     */
    @Deprecated
    public AlibabaOpenAiChatModel(OpenAiApi openAiApi, OpenAiChatOptions options,
            @Nullable FunctionCallbackResolver functionCallbackResolver, RetryTemplate retryTemplate) {
        this(openAiApi, options, functionCallbackResolver, List.of(), retryTemplate);
    }

    /**
     * Initializes a new instance of the AlibabaOpenAiChatModel.
     * @param openAiApi The OpenAiApi instance to be used for interacting with the OpenAI
     * Chat API.
     * @param options The OpenAiChatOptions to configure the chat model.
     * @param functionCallbackResolver The function callback resolver.
     * @param toolFunctionCallbacks The tool function callbacks.
     * @param retryTemplate The retry template.
     * @deprecated Use AlibabaOpenAiChatModel.Builder.
     */
    @Deprecated
    public AlibabaOpenAiChatModel(OpenAiApi openAiApi, OpenAiChatOptions options,
            @Nullable FunctionCallbackResolver functionCallbackResolver,
            @Nullable List<FunctionCallback> toolFunctionCallbacks, RetryTemplate retryTemplate) {
        this(openAiApi, options, functionCallbackResolver, toolFunctionCallbacks, retryTemplate,
                ObservationRegistry.NOOP);
    }

    /**
     * Initializes a new instance of the AlibabaOpenAiChatModel.
     * @param openAiApi The OpenAiApi instance to be used for interacting with the OpenAI
     * Chat API.
     * @param options The OpenAiChatOptions to configure the chat model.
     * @param functionCallbackResolver The function callback resolver.
     * @param toolFunctionCallbacks The tool function callbacks.
     * @param retryTemplate The retry template.
     * @param observationRegistry The ObservationRegistry used for instrumentation.
     * @deprecated Use AlibabaOpenAiChatModel.Builder or AlibabaOpenAiChatModel(OpenAiApi,
     * OpenAiChatOptions, ToolCallingManager, RetryTemplate, ObservationRegistry).
     */
    @Deprecated
    public AlibabaOpenAiChatModel(OpenAiApi openAiApi, OpenAiChatOptions options,
            @Nullable FunctionCallbackResolver functionCallbackResolver,
            @Nullable List<FunctionCallback> toolFunctionCallbacks, RetryTemplate retryTemplate,
            ObservationRegistry observationRegistry) {
        this(openAiApi, options,
                LegacyToolCallingManager.builder()
                        .functionCallbackResolver(functionCallbackResolver)
                        .functionCallbacks(toolFunctionCallbacks)
                        .build(),
                retryTemplate, observationRegistry);
        logger.warn("This constructor is deprecated and will be removed in the next milestone. "
                + "Please use the AlibabaOpenAiChatModel.Builder or the new constructor accepting ToolCallingManager instead.");
    }

    public AlibabaOpenAiChatModel(OpenAiApi openAiApi, OpenAiChatOptions defaultOptions, ToolCallingManager toolCallingManager,
            RetryTemplate retryTemplate, ObservationRegistry observationRegistry) {
        // We do not pass the 'defaultOptions' to the AbstractToolSupport,
        // because it modifies them. We are using ToolCallingManager instead,
        // so we just pass empty options here.
        super(null, OpenAiChatOptions.builder().build(), List.of());
        Assert.notNull(openAiApi, "openAiApi cannot be null");
        Assert.notNull(defaultOptions, "defaultOptions cannot be null");
        Assert.notNull(toolCallingManager, "toolCallingManager cannot be null");
        Assert.notNull(retryTemplate, "retryTemplate cannot be null");
        Assert.notNull(observationRegistry, "observationRegistry cannot be null");
        this.openAiApi = openAiApi;
        this.defaultOptions = defaultOptions;
        this.toolCallingManager = toolCallingManager;
        this.retryTemplate = retryTemplate;
        this.observationRegistry = observationRegistry;
    }

    @Override
    public ChatResponse call(Prompt prompt) {
        // Before moving any further, build the final request Prompt,
        // merging runtime and default options.
        Prompt requestPrompt = buildRequestPrompt(prompt);
        return this.internalCall(requestPrompt, null);
    }

    public ChatResponse internalCall(Prompt prompt, ChatResponse previousChatResponse) {
        OpenAiApi.ChatCompletionRequest request = createRequest(prompt, false);

        ChatModelObservationContext observationContext = ChatModelObservationContext.builder()
                .prompt(prompt)
                .provider(OpenAiApiConstants.PROVIDER_NAME)
                .requestOptions(prompt.getOptions())
                .build();

        ChatResponse response = ChatModelObservationDocumentation.CHAT_MODEL_OPERATION
                .observation(this.observationConvention, DEFAULT_OBSERVATION_CONVENTION, () -> observationContext,
                        this.observationRegistry)
                .observe(() -> {
                    ResponseEntity<OpenAiApi.ChatCompletion> completionEntity = this.retryTemplate
                            .execute(ctx -> this.openAiApi.chatCompletionEntity(request, getAdditionalHttpHeaders(prompt)));

                    var chatCompletion = completionEntity.getBody();
                    if (chatCompletion == null) {
                        logger.warn("No chat completion returned for prompt: {}", prompt);
                        return new ChatResponse(List.of());
                    }

                    List<OpenAiApi.ChatCompletion.Choice> choices = chatCompletion.choices();
                    if (choices == null) {
                        logger.warn("No choices returned for prompt: {}", prompt);
                        return new ChatResponse(List.of());
                    }

                    List<Generation> generations = choices.stream().map(choice -> {
                        // @formatter:off
                        Map<String, Object> metadata = Map.of(
                                "id", chatCompletion.id() != null ? chatCompletion.id() : "",
                                "role", choice.message().role() != null ? choice.message().role().name() : "",
                                "index", choice.index(),
                                "finishReason", choice.finishReason() != null ? choice.finishReason().name() : "",
                                "refusal", StringUtils.hasText(choice.message().refusal()) ? choice.message().refusal() : "");
                        // @formatter:on
                        return buildGeneration(choice, metadata, request);
                    }).toList();

                    RateLimit rateLimit = OpenAiResponseHeaderExtractor.extractAiResponseHeaders(completionEntity);

                    // Current usage
                    OpenAiApi.Usage usage = completionEntity.getBody().usage();
                    Usage currentChatResponseUsage = usage != null ? getDefaultUsage(usage) : new EmptyUsage();
                    Usage accumulatedUsage = UsageUtils.getCumulativeUsage(currentChatResponseUsage, previousChatResponse);
                    ChatResponse chatResponse = new ChatResponse(generations,
                            from(completionEntity.getBody(), rateLimit, accumulatedUsage));

                    observationContext.setResponse(chatResponse);
                    return chatResponse;
                });

        if (ToolCallingChatOptions.isInternalToolExecutionEnabled(prompt.getOptions()) && response != null
                && response.hasToolCalls()) {
            var toolExecutionResult = this.toolCallingManager.executeToolCalls(prompt, response);
            if (toolExecutionResult.returnDirect()) {
                // Return tool execution result directly to the client.
                return ChatResponse.builder()
                        .from(response)
                        .generations(ToolExecutionResult.buildGenerations(toolExecutionResult))
                        .build();
            }
            else {
                // Send the tool execution result back to the model.
                return this.internalCall(new Prompt(toolExecutionResult.conversationHistory(), prompt.getOptions()),
                        response);
            }
        }

        return response;
    }

    @Override
    public Flux<ChatResponse> stream(Prompt prompt) {
        // Before moving any further, build the final request Prompt,
        // merging runtime and default options.
        Prompt requestPrompt = buildRequestPrompt(prompt);
        return internalStream(requestPrompt, null);
    }

    public Flux<ChatResponse> internalStream(Prompt prompt, ChatResponse previousChatResponse) {
        return Flux.deferContextual(contextView -> {
            OpenAiApi.ChatCompletionRequest request = createRequest(prompt, true);

            if (request.outputModalities() != null) {
                if (request.outputModalities().stream().anyMatch(m -> m.equals("audio"))) {
                    logger.warn("Audio output is not supported for streaming requests. Removing audio output.");
                    throw new IllegalArgumentException("Audio output is not supported for streaming requests.");
                }
            }
            if (request.audioParameters() != null) {
                logger.warn("Audio parameters are not supported for streaming requests. Removing audio parameters.");
                throw new IllegalArgumentException("Audio parameters are not supported for streaming requests.");
            }

            Flux<OpenAiApi.ChatCompletionChunk> completionChunks = this.openAiApi.chatCompletionStream(request,
                    getAdditionalHttpHeaders(prompt));

            // For chunked responses, only the first chunk contains the choice role.
            // The rest of the chunks with same ID share the same role.
            ConcurrentHashMap<String, String> roleMap = new ConcurrentHashMap<>();

            final ChatModelObservationContext observationContext = ChatModelObservationContext.builder()
                    .prompt(prompt)
                    .provider(OpenAiApiConstants.PROVIDER_NAME)
                    .requestOptions(prompt.getOptions())
                    .build();

            Observation observation = ChatModelObservationDocumentation.CHAT_MODEL_OPERATION.observation(
                    this.observationConvention, DEFAULT_OBSERVATION_CONVENTION, () -> observationContext,
                    this.observationRegistry);

            observation.parentObservation(contextView.getOrDefault(ObservationThreadLocalAccessor.KEY, null)).start();

            // Convert the ChatCompletionChunk into a ChatCompletion to be able to reuse
            // the function call handling logic.
            Flux<ChatResponse> chatResponse = completionChunks.map(this::chunkToChatCompletion)
                    .switchMap(chatCompletion -> Mono.just(chatCompletion).map(chatCompletion2 -> {
                        try {
                            @SuppressWarnings("null")
                            String id = chatCompletion2.id();

                            List<Generation> generations = chatCompletion2.choices().stream().map(choice -> { // @formatter:off
                                if (choice.message().role() != null) {
                                    roleMap.putIfAbsent(id, choice.message().role().name());
                                }
                                Map<String, Object> metadata = Map.of(
                                        "id", chatCompletion2.id(),
                                        "role", roleMap.getOrDefault(id, ""),
                                        "index", choice.index(),
                                        "finishReason", choice.finishReason() != null ? choice.finishReason().name() : "",
                                        "refusal", StringUtils.hasText(choice.message().refusal()) ? choice.message().refusal() : "");
                                return buildGeneration(choice, metadata, request);
                            }).toList();
                            // @formatter:on

                            OpenAiApi.Usage usage = chatCompletion2.usage();
                            Usage currentChatResponseUsage = usage != null ? getDefaultUsage(usage) : new EmptyUsage();
                            Usage accumulatedUsage = UsageUtils.getCumulativeUsage(currentChatResponseUsage,
                                    previousChatResponse);
                            return new ChatResponse(generations, from(chatCompletion2, null, accumulatedUsage));
                        }
                        catch (Exception e) {
                            logger.error("Error processing chat completion", e);
                            return new ChatResponse(List.of());
                        }
                        // When in stream mode and enabled to include the usage, the OpenAI
                        // Chat completion response would have the usage set only in its
                        // final response. Hence, the following overlapping buffer is
                        // created to store both the current and the subsequent response
                        // to accumulate the usage from the subsequent response.
                    }))
                    .buffer(2, 1)
                    .map(bufferList -> {
                        ChatResponse firstResponse = bufferList.get(0);
                        if (request.streamOptions() != null && request.streamOptions().includeUsage()) {
                            if (bufferList.size() == 2) {
                                ChatResponse secondResponse = bufferList.get(1);
                                if (secondResponse != null && secondResponse.getMetadata() != null) {
                                    // This is the usage from the final Chat response for a
                                    // given Chat request.
                                    Usage usage = secondResponse.getMetadata().getUsage();
                                    if (!UsageUtils.isEmpty(usage)) {
                                        // Store the usage from the final response to the
                                        // penultimate response for accumulation.
                                        return new ChatResponse(firstResponse.getResults(),
                                                from(firstResponse.getMetadata(), usage));
                                    }
                                }
                            }
                        }
                        return firstResponse;
                    });

            // @formatter:off
            Flux<ChatResponse> flux = chatResponse.flatMap(response -> {
                if (ToolCallingChatOptions.isInternalToolExecutionEnabled(prompt.getOptions()) && response.hasToolCalls()) {
                    var toolExecutionResult = this.toolCallingManager.executeToolCalls(prompt, response);
                    if (toolExecutionResult.returnDirect()) {
                        // Return tool execution result directly to the client.
                        return Flux.just(ChatResponse.builder().from(response)
                                .generations(ToolExecutionResult.buildGenerations(toolExecutionResult))
                                .build());
                    } else {
                        // Send the tool execution result back to the model.
                        return this.internalStream(new Prompt(toolExecutionResult.conversationHistory(), prompt.getOptions()),
                                response);
                    }
                }
                else {
                    return Flux.just(response);
                }
            })
            .doOnError(observation::error)
            .doFinally(s -> observation.stop())
            .contextWrite(ctx -> ctx.put(ObservationThreadLocalAccessor.KEY, observation));
            // @formatter:on

            return new MessageAggregator().aggregate(flux, observationContext::setResponse);
        });
    }

    private MultiValueMap<String, String> getAdditionalHttpHeaders(Prompt prompt) {
        Map<String, String> headers = new HashMap<>(this.defaultOptions.getHttpHeaders());
        if (prompt.getOptions() != null && prompt.getOptions() instanceof OpenAiChatOptions chatOptions) {
            headers.putAll(chatOptions.getHttpHeaders());
        }
        return CollectionUtils.toMultiValueMap(
                headers.entrySet().stream().collect(Collectors.toMap(Map.Entry::getKey, e -> List.of(e.getValue()))));
    }

    private Generation buildGeneration(OpenAiApi.ChatCompletion.Choice choice, Map<String, Object> metadata, OpenAiApi.ChatCompletionRequest request) {
        List<AssistantMessage.ToolCall> toolCalls = choice.message().toolCalls() == null ? List.of()
                : choice.message()
                        .toolCalls()
                        .stream()
                        .map(toolCall -> new AssistantMessage.ToolCall(toolCall.id(), "function",
                                toolCall.function().name(), toolCall.function().arguments()))
                        // Merge the fragments returned by Bailian into one ToolCall.
                        // Null checks prevent a null fragment from being appended as the text "null".
                        .reduce((tc1, tc2) -> new AssistantMessage.ToolCall(
                                StringUtils.hasText(tc1.id()) ? tc1.id() : tc2.id(),
                                "function",
                                tc1.name() != null ? tc1.name() : tc2.name(),
                                (tc1.arguments() != null ? tc1.arguments() : "")
                                        + (tc2.arguments() != null ? tc2.arguments() : "")))
                        .stream()
                        .toList();

        String finishReason = (choice.finishReason() != null ? choice.finishReason().name() : "");
        var generationMetadataBuilder = ChatGenerationMetadata.builder().finishReason(finishReason);

        List<Media> media = new ArrayList<>();
        String textContent = choice.message().content();
        var audioOutput = choice.message().audioOutput();
        if (audioOutput != null) {
            String mimeType = String.format("audio/%s", request.audioParameters().format().name().toLowerCase());
            byte[] audioData = Base64.getDecoder().decode(audioOutput.data());
            Resource resource = new ByteArrayResource(audioData);
            media.add(Media.builder()
                    .mimeType(MimeTypeUtils.parseMimeType(mimeType))
                    .data(resource)
                    .id(audioOutput.id())
                    .build());
            if (!StringUtils.hasText(textContent)) {
                textContent = audioOutput.transcript();
            }
            generationMetadataBuilder.metadata("audioId", audioOutput.id());
            generationMetadataBuilder.metadata("audioExpiresAt", audioOutput.expiresAt());
        }

        var assistantMessage = new AssistantMessage(textContent, metadata, toolCalls, media);
        return new Generation(assistantMessage, generationMetadataBuilder.build());
    }

    private ChatResponseMetadata from(OpenAiApi.ChatCompletion result, RateLimit rateLimit, Usage usage) {
        Assert.notNull(result, "OpenAI ChatCompletionResult must not be null");
        var builder = ChatResponseMetadata.builder()
                .id(result.id() != null ? result.id() : "")
                .usage(usage)
                .model(result.model() != null ? result.model() : "")
                .keyValue("created", result.created() != null ? result.created() : 0L)
                .keyValue("system-fingerprint", result.systemFingerprint() != null ? result.systemFingerprint() : "");
        if (rateLimit != null) {
            builder.rateLimit(rateLimit);
        }
        return builder.build();
    }

    private ChatResponseMetadata from(ChatResponseMetadata chatResponseMetadata, Usage usage) {
        Assert.notNull(chatResponseMetadata, "OpenAI ChatResponseMetadata must not be null");
        var builder = ChatResponseMetadata.builder()
                .id(chatResponseMetadata.getId() != null ? chatResponseMetadata.getId() : "")
                .usage(usage)
                .model(chatResponseMetadata.getModel() != null ? chatResponseMetadata.getModel() : "");
        if (chatResponseMetadata.getRateLimit() != null) {
            builder.rateLimit(chatResponseMetadata.getRateLimit());
        }
        return builder.build();
    }

    /**
     * Convert the ChatCompletionChunk into a ChatCompletion. The Usage is set to null.
     * @param chunk the ChatCompletionChunk to convert
     * @return the ChatCompletion
     */
    private OpenAiApi.ChatCompletion chunkToChatCompletion(OpenAiApi.ChatCompletionChunk chunk) {
        List<OpenAiApi.ChatCompletion.Choice> choices = chunk.choices()
                .stream()
                .map(chunkChoice -> new OpenAiApi.ChatCompletion.Choice(chunkChoice.finishReason(), chunkChoice.index(), chunkChoice.delta(),
                        chunkChoice.logprobs()))
                .toList();
        return new OpenAiApi.ChatCompletion(chunk.id(), choices, chunk.created(), chunk.model(), chunk.serviceTier(),
                chunk.systemFingerprint(), "chat.completion", chunk.usage());
    }

    private DefaultUsage getDefaultUsage(OpenAiApi.Usage usage) {
        return new DefaultUsage(usage.promptTokens(), usage.completionTokens(), usage.totalTokens(), usage);
    }

    Prompt buildRequestPrompt(Prompt prompt) {
        // Process runtime options
        OpenAiChatOptions runtimeOptions = null;
        if (prompt.getOptions() != null) {
            if (prompt.getOptions() instanceof ToolCallingChatOptions toolCallingChatOptions) {
                runtimeOptions = ModelOptionsUtils.copyToTarget(toolCallingChatOptions, ToolCallingChatOptions.class,
                        OpenAiChatOptions.class);
            }
            else if (prompt.getOptions() instanceof FunctionCallingOptions functionCallingOptions) {
                runtimeOptions = ModelOptionsUtils.copyToTarget(functionCallingOptions, FunctionCallingOptions.class,
                        OpenAiChatOptions.class);
            }
            else {
                runtimeOptions = ModelOptionsUtils.copyToTarget(prompt.getOptions(), ChatOptions.class,
                        OpenAiChatOptions.class);
            }
        }

        // Define request options by merging runtime options and default options
        OpenAiChatOptions requestOptions = ModelOptionsUtils.merge(runtimeOptions, this.defaultOptions,
                OpenAiChatOptions.class);

        // Merge @JsonIgnore-annotated options explicitly since they are ignored by
        // Jackson, used by ModelOptionsUtils.
        if (runtimeOptions != null) {
            requestOptions.setHttpHeaders(
                    mergeHttpHeaders(runtimeOptions.getHttpHeaders(), this.defaultOptions.getHttpHeaders()));
            requestOptions.setInternalToolExecutionEnabled(
                    ModelOptionsUtils.mergeOption(runtimeOptions.isInternalToolExecutionEnabled(),
                            this.defaultOptions.isInternalToolExecutionEnabled()));
            requestOptions.setToolNames(ToolCallingChatOptions.mergeToolNames(runtimeOptions.getToolNames(),
                    this.defaultOptions.getToolNames()));
            requestOptions.setToolCallbacks(ToolCallingChatOptions.mergeToolCallbacks(runtimeOptions.getToolCallbacks(),
                    this.defaultOptions.getToolCallbacks()));
            requestOptions.setToolContext(ToolCallingChatOptions.mergeToolContext(runtimeOptions.getToolContext(),
                    this.defaultOptions.getToolContext()));
        }
        else {
            requestOptions.setHttpHeaders(this.defaultOptions.getHttpHeaders());
            requestOptions.setInternalToolExecutionEnabled(this.defaultOptions.isInternalToolExecutionEnabled());
            requestOptions.setToolNames(this.defaultOptions.getToolNames());
            requestOptions.setToolCallbacks(this.defaultOptions.getToolCallbacks());
            requestOptions.setToolContext(this.defaultOptions.getToolContext());
        }

        ToolCallingChatOptions.validateToolCallbacks(requestOptions.getToolCallbacks());

        return new Prompt(prompt.getInstructions(), requestOptions);
    }

    private Map<String, String> mergeHttpHeaders(Map<String, String> runtimeHttpHeaders,
            Map<String, String> defaultHttpHeaders) {
        var mergedHttpHeaders = new HashMap<>(defaultHttpHeaders);
        mergedHttpHeaders.putAll(runtimeHttpHeaders);
        return mergedHttpHeaders;
    }

    /**
     * Accessible for testing.
     */
    OpenAiApi.ChatCompletionRequest createRequest(Prompt prompt, boolean stream) {
        List<OpenAiApi.ChatCompletionMessage> chatCompletionMessages = prompt.getInstructions().stream().map(message -> {
            if (message.getMessageType() == MessageType.USER || message.getMessageType() == MessageType.SYSTEM) {
                Object content = message.getText();
                if (message instanceof UserMessage userMessage) {
                    if (!CollectionUtils.isEmpty(userMessage.getMedia())) {
                        List<OpenAiApi.ChatCompletionMessage.MediaContent> contentList = new ArrayList<>(List.of(new OpenAiApi.ChatCompletionMessage.MediaContent(message.getText())));
                        contentList.addAll(userMessage.getMedia().stream().map(this::mapToMediaContent).toList());
                        content = contentList;
                    }
                }
                return List.of(new OpenAiApi.ChatCompletionMessage(content,
                        OpenAiApi.ChatCompletionMessage.Role.valueOf(message.getMessageType().name())));
            }
            else if (message.getMessageType() == MessageType.ASSISTANT) {
                var assistantMessage = (AssistantMessage) message;
                List<OpenAiApi.ChatCompletionMessage.ToolCall> toolCalls = null;
                if (!CollectionUtils.isEmpty(assistantMessage.getToolCalls())) {
                    toolCalls = assistantMessage.getToolCalls().stream().map(toolCall -> {
                        var function = new OpenAiApi.ChatCompletionMessage.ChatCompletionFunction(toolCall.name(), toolCall.arguments());
                        return new OpenAiApi.ChatCompletionMessage.ToolCall(toolCall.id(), toolCall.type(), function);
                    }).toList();
                }
                OpenAiApi.ChatCompletionMessage.AudioOutput audioOutput = null;
                if (!CollectionUtils.isEmpty(assistantMessage.getMedia())) {
                    Assert.isTrue(assistantMessage.getMedia().size() == 1,
                            "Only one media content is supported for assistant messages");
                    audioOutput = new OpenAiApi.ChatCompletionMessage.AudioOutput(assistantMessage.getMedia().get(0).getId(), null, null, null);
                }
                return List.of(new OpenAiApi.ChatCompletionMessage(assistantMessage.getText(),
                        OpenAiApi.ChatCompletionMessage.Role.ASSISTANT, null, null, toolCalls, null, audioOutput));
            }
            else if (message.getMessageType() == MessageType.TOOL) {
                ToolResponseMessage toolMessage = (ToolResponseMessage) message;
                toolMessage.getResponses()
                        .forEach(response -> Assert.isTrue(response.id() != null, "ToolResponseMessage must have an id"));
                return toolMessage.getResponses()
                        .stream()
                        .map(tr -> new OpenAiApi.ChatCompletionMessage(tr.responseData(), OpenAiApi.ChatCompletionMessage.Role.TOOL, tr.name(),
                                tr.id(), null, null, null))
                        .toList();
            }
            else {
                throw new IllegalArgumentException("Unsupported message type: " + message.getMessageType());
            }
        }).flatMap(List::stream).toList();

        OpenAiApi.ChatCompletionRequest request = new OpenAiApi.ChatCompletionRequest(chatCompletionMessages, stream);

        OpenAiChatOptions requestOptions = (OpenAiChatOptions) prompt.getOptions();
        request = ModelOptionsUtils.merge(requestOptions, request, OpenAiApi.ChatCompletionRequest.class);

        // Add the tool definitions to the request's tools parameter.
        List<ToolDefinition> toolDefinitions = this.toolCallingManager.resolveToolDefinitions(requestOptions);
        if (!CollectionUtils.isEmpty(toolDefinitions)) {
            request = ModelOptionsUtils.merge(
                    OpenAiChatOptions.builder().tools(this.getFunctionTools(toolDefinitions)).build(), request,
                    OpenAiApi.ChatCompletionRequest.class);
        }

        // Remove `streamOptions` from the request if it is not a streaming request
        if (request.streamOptions() != null && !stream) {
            logger.warn("Removing streamOptions from the request as it is not a streaming request!");
            request = request.streamOptions(null);
        }

        return request;
    }

    private OpenAiApi.ChatCompletionMessage.MediaContent mapToMediaContent(Media media) {
        var mimeType = media.getMimeType();
        if (MimeTypeUtils.parseMimeType("audio/mp3").equals(mimeType) || MimeTypeUtils.parseMimeType("audio/mpeg").equals(mimeType)) {
            return new OpenAiApi.ChatCompletionMessage.MediaContent(
                    new OpenAiApi.ChatCompletionMessage.MediaContent.InputAudio(fromAudioData(media.getData()), OpenAiApi.ChatCompletionMessage.MediaContent.InputAudio.Format.MP3));
        }
        if (MimeTypeUtils.parseMimeType("audio/wav").equals(mimeType)) {
            return new OpenAiApi.ChatCompletionMessage.MediaContent(
                    new OpenAiApi.ChatCompletionMessage.MediaContent.InputAudio(fromAudioData(media.getData()), OpenAiApi.ChatCompletionMessage.MediaContent.InputAudio.Format.WAV));
        }
        else {
            return new OpenAiApi.ChatCompletionMessage.MediaContent(
                    new OpenAiApi.ChatCompletionMessage.MediaContent.ImageUrl(this.fromMediaData(media.getMimeType(), media.getData())));
        }
    }

    private String fromAudioData(Object audioData) {
        if (audioData instanceof byte[] bytes) {
            return String.format("data:;base64,%s", Base64.getEncoder().encodeToString(bytes));
        }
        throw new IllegalArgumentException("Unsupported audio data type: " + audioData.getClass().getSimpleName());
    }

    private String fromMediaData(MimeType mimeType, Object mediaContentData) {
        if (mediaContentData instanceof byte[] bytes) {
            // Assume the bytes are an image. So, convert the bytes to a base64-encoded
            // data URL following the prefix pattern.
            return String.format("data:%s;base64,%s", mimeType.toString(), Base64.getEncoder().encodeToString(bytes));
        }
        else if (mediaContentData instanceof String text) {
            // Assume the text is a URL or a base64-encoded image prefixed by the user.
            return text;
        }
        else {
            throw new IllegalArgumentException(
                    "Unsupported media data type: " + mediaContentData.getClass().getSimpleName());
        }
    }

    private List<OpenAiApi.FunctionTool> getFunctionTools(List<ToolDefinition> toolDefinitions) {
        return toolDefinitions.stream().map(toolDefinition -> {
            var function = new OpenAiApi.FunctionTool.Function(toolDefinition.description(), toolDefinition.name(),
                    toolDefinition.inputSchema());
            return new OpenAiApi.FunctionTool(function);
        }).toList();
    }

    @Override
    public ChatOptions getDefaultOptions() {
        return OpenAiChatOptions.fromOptions(this.defaultOptions);
    }

    @Override
    public String toString() {
        return "AlibabaOpenAiChatModel [defaultOptions=" + this.defaultOptions + "]";
    }

    /**
     * Use the provided convention for reporting observation data
     * @param observationConvention The provided convention
     */
    public void setObservationConvention(ChatModelObservationConvention observationConvention) {
        Assert.notNull(observationConvention, "observationConvention cannot be null");
        this.observationConvention = observationConvention;
    }

    public static Builder builder() {
        return new Builder();
    }

    public static final class Builder {

        private OpenAiApi openAiApi;
        private OpenAiChatOptions defaultOptions = OpenAiChatOptions.builder()
                .model(OpenAiApi.DEFAULT_CHAT_MODEL)
                .temperature(0.7)
                .build();
        private ToolCallingManager toolCallingManager;
        private FunctionCallbackResolver functionCallbackResolver;
        private List<FunctionCallback> toolFunctionCallbacks;
        private RetryTemplate retryTemplate = RetryUtils.DEFAULT_RETRY_TEMPLATE;
        private ObservationRegistry observationRegistry = ObservationRegistry.NOOP;

        private Builder() {
        }

        public Builder openAiApi(OpenAiApi openAiApi) {
            this.openAiApi = openAiApi;
            return this;
        }

        public Builder defaultOptions(OpenAiChatOptions defaultOptions) {
            this.defaultOptions = defaultOptions;
            return this;
        }

        public Builder toolCallingManager(ToolCallingManager toolCallingManager) {
            this.toolCallingManager = toolCallingManager;
            return this;
        }

        @Deprecated
        public Builder functionCallbackResolver(FunctionCallbackResolver functionCallbackResolver) {
            this.functionCallbackResolver = functionCallbackResolver;
            return this;
        }

        @Deprecated
        public Builder toolFunctionCallbacks(List<FunctionCallback> toolFunctionCallbacks) {
            this.toolFunctionCallbacks = toolFunctionCallbacks;
            return this;
        }

        public Builder retryTemplate(RetryTemplate retryTemplate) {
            this.retryTemplate = retryTemplate;
            return this;
        }

        public Builder observationRegistry(ObservationRegistry observationRegistry) {
            this.observationRegistry = observationRegistry;
            return this;
        }

        public AlibabaOpenAiChatModel build() {
            if (toolCallingManager != null) {
                Assert.isNull(functionCallbackResolver,
                        "functionCallbackResolver cannot be set when toolCallingManager is set");
                Assert.isNull(toolFunctionCallbacks,
                        "toolFunctionCallbacks cannot be set when toolCallingManager is set");
                return new AlibabaOpenAiChatModel(openAiApi, defaultOptions, toolCallingManager, retryTemplate,
                        observationRegistry);
            }
            if (functionCallbackResolver != null) {
                Assert.isNull(toolCallingManager,
                        "toolCallingManager cannot be set when functionCallbackResolver is set");
                List<FunctionCallback> toolCallbacks = this.toolFunctionCallbacks != null ? this.toolFunctionCallbacks
                        : List.of();
                return new AlibabaOpenAiChatModel(openAiApi, defaultOptions, functionCallbackResolver, toolCallbacks,
                        retryTemplate, observationRegistry);
            }
            return new AlibabaOpenAiChatModel(openAiApi, defaultOptions, DEFAULT_TOOL_CALLING_MANAGER, retryTemplate,
                    observationRegistry);
        }

    }

}

Bean Configuration and Registration

Register the custom model as a Spring bean:

@Bean
public AlibabaOpenAiChatModel alibabaOpenAiChatModel(
        OpenAiConnectionProperties commonProperties,
        OpenAiChatProperties chatProperties,
        ObjectProvider<RestClient.Builder> restClientBuilderProvider,
        ObjectProvider<WebClient.Builder> webClientBuilderProvider,
        ToolCallingManager toolCallingManager,
        RetryTemplate retryTemplate,
        ResponseErrorHandler responseErrorHandler,
        ObjectProvider<ObservationRegistry> observationRegistry,
        ObjectProvider<ChatModelObservationConvention> observationConvention) {

    // Resolve the basic connection parameters; chat-specific properties take precedence over the common ones
    String baseUrl = StringUtils.hasText(chatProperties.getBaseUrl())
            ? chatProperties.getBaseUrl() : commonProperties.getBaseUrl();
    String apiKey = StringUtils.hasText(chatProperties.getApiKey())
            ? chatProperties.getApiKey() : commonProperties.getApiKey();
    String projectId = StringUtils.hasText(chatProperties.getProjectId())
            ? chatProperties.getProjectId() : commonProperties.getProjectId();
    String organizationId = StringUtils.hasText(chatProperties.getOrganizationId())
            ? chatProperties.getOrganizationId() : commonProperties.getOrganizationId();

    // Build the connection headers
    Map<String, List<String>> connectionHeaders = new HashMap<>();
    if (StringUtils.hasText(projectId)) {
        connectionHeaders.put("OpenAI-Project", List.of(projectId));
    }
    if (StringUtils.hasText(organizationId)) {
        connectionHeaders.put("OpenAI-Organization", List.of(organizationId));
    }

    // Obtain the HTTP client builders, falling back to default builders if none are registered
    RestClient.Builder restClientBuilder = restClientBuilderProvider.getIfAvailable(RestClient::builder);
    WebClient.Builder webClientBuilder = webClientBuilderProvider.getIfAvailable(WebClient::builder);

    // Build the OpenAI-compatible API client
    OpenAiApi openAiApi = OpenAiApi.builder()
            .baseUrl(baseUrl)
            .apiKey(new SimpleApiKey(apiKey))
            .headers(CollectionUtils.toMultiValueMap(connectionHeaders))
            .completionsPath(chatProperties.getCompletionsPath())
            .embeddingsPath("/v1/embeddings")
            .restClientBuilder(restClientBuilder)
            .webClientBuilder(webClientBuilder)
            .responseErrorHandler(responseErrorHandler)
            .build();

    // Build the custom chat model
    AlibabaOpenAiChatModel chatModel = AlibabaOpenAiChatModel.builder()
            .openAiApi(openAiApi)
            .defaultOptions(chatProperties.getOptions())
            .toolCallingManager(toolCallingManager)
            .retryTemplate(retryTemplate)
            .observationRegistry(observationRegistry.getIfUnique(() -> ObservationRegistry.NOOP))
            .build();

    // Apply the observation convention, if one is available
    observationConvention.ifAvailable(chatModel::setObservationConvention);

    return chatModel;
}
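For the fix to take effect, the serviceChatClient used by the service method must be built on the custom model rather than on the auto-configured one. The sketch below is a minimal illustration, assuming AlibabaOpenAiChatModel implements Spring AI's ChatModel interface just as the OpenAiChatModel it was adapted from does. The class name ServiceChatClientConfig and the system prompt are hypothetical placeholders, and the chat-memory advisor and tool registrations used in the real project are omitted.

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

// Hypothetical configuration class; the name is illustrative only.
@Configuration
public class ServiceChatClientConfig {

    // Inject the concrete AlibabaOpenAiChatModel type rather than the ChatModel interface,
    // because the auto-configured OpenAiChatModel may also be registered. Injecting by
    // the interface could then be ambiguous.
    @Bean
    public ChatClient serviceChatClient(AlibabaOpenAiChatModel alibabaOpenAiChatModel) {
        return ChatClient.builder(alibabaOpenAiChatModel)
                .defaultSystem("You are a customer-service assistant.") // placeholder system prompt
                .build();
    }
}
```

Injecting by concrete type keeps the choice of model explicit without marking either bean as primary.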

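Technical Principles in Depth

Why does the fragmentation occur?

- Streaming delivery: in a streaming request, Alibaba Cloud Bailian does not send a tool call in one piece. It sends a series of chunks, each carrying only a fragment of the arguments, which explains the 6 fragments observed above.

- Protocol differences: although the platform claims OpenAI compatibility, the implementation differs in subtle ways. In the captured data, the follow-up chunks carry an empty id instead of omitting it, and this appears to stop SpringAI from recognizing them as continuations of the first tool call.

- Data serialization: platforms split the JSON arguments into chunks differently, so the fragments must be concatenated in order before they form valid JSON.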
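In OpenAI-style streaming, only the first chunk of a tool call carries its id and name, and later chunks carry argument fragments. The simulation below shows why an empty id matters. It assumes a client that opens a new tool call whenever a chunk "has an id" and otherwise appends the fragment to the current call. This is a simplification, not SpringAI's actual merge code. The Chunk and Call types and the argument fragments are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

public class ChunkMergeDemo {

    // Simplified stand-in for one streamed tool-call delta (hypothetical type, not a Spring AI class).
    record Chunk(String id, String name, String arguments) {}

    // A tool call accumulated from one or more chunks.
    record Call(String id, String name, String arguments) {}

    // A chunk for which startsNewCall is true opens a new call; any other chunk
    // appends its argument fragment to the most recent call.
    static List<Call> accumulate(List<Chunk> chunks, Predicate<Chunk> startsNewCall) {
        List<Call> calls = new ArrayList<>();
        for (Chunk c : chunks) {
            if (calls.isEmpty() || startsNewCall.test(c)) {
                calls.add(new Call(c.id(), c.name(), c.arguments()));
            } else {
                Call last = calls.remove(calls.size() - 1);
                calls.add(new Call(last.id(), last.name(), last.arguments() + c.arguments()));
            }
        }
        return calls;
    }

    // Checks only for null: an empty-string id still counts as the start of a new call.
    static final Predicate<Chunk> ID_NOT_NULL = c -> c.id() != null;

    // Treats an empty id like a missing one, so such chunks are continuations.
    static final Predicate<Chunk> ID_HAS_TEXT = c -> c.id() != null && !c.id().isEmpty();

    // Illustrative chunks: the first carries id and name, the follow-ups carry an empty id.
    static final List<Chunk> CHUNKS = List.of(
            new Chunk("call_1", "queryCourse", "{\"query\": {"),
            new Chunk("", null, "\"edu\": 4, "),
            new Chunk("", null, "\"type\": \"programming\"}}"));

    public static void main(String[] args) {
        System.out.println(accumulate(CHUNKS, ID_NOT_NULL).size()); // 3: separate, incomplete calls
        System.out.println(accumulate(CHUNKS, ID_HAS_TEXT).size()); // 1: one complete call
    }
}
```

With the null-only rule, every empty-id chunk becomes its own call with a null name and a partial argument string. This is consistent with the symptoms captured during debugging.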

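Key Technical Points of the Solution

- Stream API: aggregate the fragments with the Java 8 Stream API

- reduce operation: merge the fragmented ToolCall objects into a single complete object

- Null handling: correctly handle null values and empty strings in the fragments

- Bean replacement: substitute the default implementation through Spring's dependency injection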
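The reduce and null-handling points can be illustrated with a standalone sketch. It declares a local ToolCall record that only mirrors the shape of Spring AI's AssistantMessage.ToolCall(id, type, name, arguments), so it runs without Spring AI. The helpers firstNonEmpty and nullToEmpty are hypothetical names introduced for this example. This is not the exact code of the custom model.

```java
import java.util.List;
import java.util.Optional;

public class ToolCallReducer {

    // Local stand-in mirroring AssistantMessage.ToolCall(id, type, name, arguments).
    record ToolCall(String id, String type, String name, String arguments) {}

    // Returns a if it is neither null nor empty, otherwise b.
    private static String firstNonEmpty(String a, String b) {
        return (a != null && !a.isEmpty()) ? a : b;
    }

    // Treats a null fragment as empty so concatenation never yields the literal "null".
    private static String nullToEmpty(String s) {
        return s == null ? "" : s;
    }

    // Merges the fragments of ONE tool call: id and name come from the first fragment
    // that has them, and the argument fragments are concatenated in arrival order.
    static Optional<ToolCall> merge(List<ToolCall> fragments) {
        return fragments.stream()
                .reduce((acc, next) -> new ToolCall(
                        firstNonEmpty(acc.id(), next.id()),
                        "function",
                        firstNonEmpty(acc.name(), next.name()),
                        nullToEmpty(acc.arguments()) + nullToEmpty(next.arguments())));
    }

    public static void main(String[] args) {
        List<ToolCall> fragments = List.of(
                new ToolCall("call_1", "function", "queryCourse", "{\"edu\": "),
                new ToolCall("", "function", null, "4}"),
                new ToolCall("", "function", null, null));
        merge(fragments).ifPresent(System.out::println);
        // prints: ToolCall[id=call_1, type=function, name=queryCourse, arguments={"edu": 4}]
    }
}
```

A single reduce assumes that all fragments belong to one tool call. If the model issues parallel tool calls in one response, the fragments would first need to be grouped, for example by their index, and each group reduced separately.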

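Summary

This article analyzed the Function Calling compatibility problem between SpringAI and the Alibaba Cloud Bailian platform in detail and presented a complete solution. A custom compatibility layer resolved the argument-fragmentation problem while preserving the benefits of reactive programming and keeping the system stable and the user experience intact.

This case is a reminder that, when choosing and integrating third-party services, we should not rely solely on "compatibility claims". Compatibility should be verified through actual testing, with a suitable adaptation plan ready.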

