
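1. Abstract

This article walks through the deployment of a highly available k8s cluster. It does not go into the underlying principles; look them up as needed.

The cluster is built in a Hyper-V sandbox environment on Windows 11 Pro. The network is a local LAN, the sandbox must be able to reach the internet, and every virtual machine needs a static IP address.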

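2. Environment

- 3 CentOS Stream 10 virtual machines as Master nodes, each with 20 GB of disk and 4 GB of memory

- 2 CentOS Stream 10 virtual machines as Worker nodes, each with 20 GB of disk and 3 GB of memory

- k8s version: v1.33.2

- Container runtime: containerd v2.1.2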

3. Software requirements

- containerd on all nodes

- kubeadm, kubelet and kubectl on all nodes

- Load-balancing software (HAProxy + Keepalived) on the control-plane nodes

4. Cluster deployment

4.1 Install the container runtime

Install containerd on every machine.

# Disable the firewall
systemctl disable --now firewalld

# Enable IPv4 packet forwarding
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
EOF

# Apply the setting
sudo sysctl --system
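
To confirm that the forwarding setting took effect, the kernel's live value can be read back. The following is a minimal sketch; the helper name check_ip_forward is an illustrative assumption and not part of any tool used in this article.

```shell
#!/bin/bash
# check_ip_forward [FILE]: succeed if FILE holds the value 1.
# FILE defaults to the kernel's live setting under /proc.
check_ip_forward() {
    local file="${1:-/proc/sys/net/ipv4/ip_forward}"
    [ "$(cat "$file" 2>/dev/null)" = "1" ]
}

if check_ip_forward; then
    echo "IPv4 forwarding is enabled"
else
    echo "IPv4 forwarding is NOT enabled"
fi
```

Running this on each node after sysctl --system gives a quick yes/no answer before moving on.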

# Download the container runtime archive (-L follows the redirect that GitHub release URLs return)
curl -LO https://github.com/containerd/containerd/releases/download/v2.1.2/containerd-2.1.2-linux-amd64.tar.gz

# Extract it
tar Cxzvf /usr/local containerd-2.1.2-linux-amd64.tar.gz
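
A downloaded release archive can be checked against the SHA-256 value published with the release. The sketch below assumes you copy that value from the release page yourself; the helper verify_sha256 and the EXPECTED_SHA256 variable are hypothetical names used only for illustration.

```shell
#!/bin/bash
# verify_sha256 FILE EXPECTED: succeed if FILE's SHA-256 digest equals EXPECTED.
verify_sha256() {
    local file="$1" expected="$2" actual
    actual="$(sha256sum "$file" | awk '{print $1}')"
    [ "$actual" = "$expected" ]
}

archive=containerd-2.1.2-linux-amd64.tar.gz
# EXPECTED_SHA256 is a placeholder: export it with the value published for this release.
if [ -f "$archive" ] && [ -n "${EXPECTED_SHA256:-}" ]; then
    if verify_sha256 "$archive" "$EXPECTED_SHA256"; then
        echo "checksum OK"
    else
        echo "checksum MISMATCH" >&2
    fi
fi
```

The same helper works for the runc binary and the CNI plugin archive.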

# Download the containerd.service unit file, then enable and start containerd
mkdir -p /usr/local/lib/systemd/system/ && \
curl -L https://raw.githubusercontent.com/containerd/containerd/main/containerd.service -o /usr/local/lib/systemd/system/containerd.service && \
systemctl daemon-reload && \
systemctl enable --now containerd

# Install runc
curl -LO https://github.com/opencontainers/runc/releases/download/v1.3.0/runc.amd64
install -m 755 runc.amd64 /usr/local/sbin/runc

# Install the CNI plugins (without them, containers may crash and restart repeatedly)
curl -JLO https://github.com/containernetworking/plugins/releases/download/v1.7.1/cni-plugins-linux-amd64-v1.7.1.tgz
mkdir -p /opt/cni/bin
tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.7.1.tgz

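# View container logs
sudo crictl --runtime-endpoint=unix:///run/containerd/containerd.sock logs <container_id>

# If containerd was installed from a package (for example RPM or .deb), the CRI integration plugin is disabled by default. Make sure cri does not appear in the disabled_plugins list in /etc/containerd/config.toml.

4.2 Configure containerd registry mirrors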

# Generate the default configuration
mkdir -p /etc/containerd && \
containerd config default > /etc/containerd/config.toml

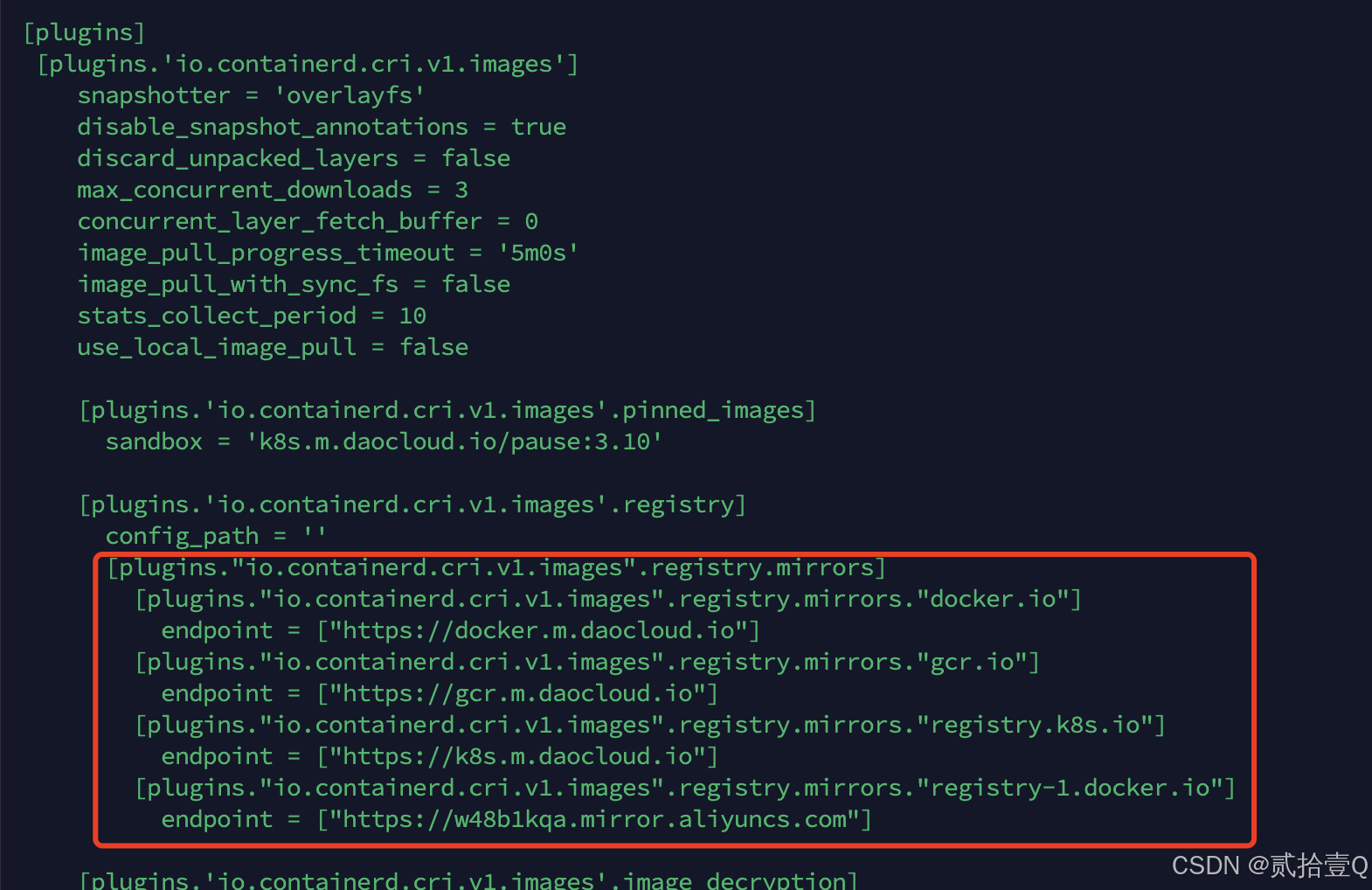

# In containerd 2.x, the mirror settings go under [plugins.'io.containerd.cri.v1.images'.registry]

[plugins."io.containerd.cri.v1.images".registry.mirrors]

[plugins."io.containerd.cri.v1.images".registry.mirrors."docker.io"]

endpoint = ["https://docker.m.daocloud.io"]

[plugins."io.containerd.cri.v1.images".registry.mirrors."gcr.io"]

endpoint = ["https://gcr.m.daocloud.io"]

[plugins."io.containerd.cri.v1.images".registry.mirrors."registry.k8s.io"]

endpoint = ["https://k8s.m.daocloud.io"]

# Restart containerd
systemctl restart containerd

# Verify the change
/usr/local/bin/containerd config dump | grep -A 10 "registry.mirrors"

An example of the resulting mirror configuration is shown below:

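4.3 Install the k8s components

Install k8s on every machine.

# Set the hostname on each of the five hosts (run the matching command on its own host)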

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-master2

hostnamectl set-hostname k8s-master3

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

# Configure the host mappings (add these entries to /etc/hosts on every node)

192.168.1.100 k8s-master1

192.168.1.103 k8s-master2

192.168.1.104 k8s-master3

192.168.1.101 k8s-node1

192.168.1.102 k8s-node2
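
The mappings above can be appended to /etc/hosts by hand. As a rough sketch, the helper below adds an entry only when the name is not mapped yet, so it is safe to rerun; add_host_entry is a hypothetical name introduced here for illustration.

```shell
#!/bin/bash
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless NAME already appears as a hostname in FILE.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every node):
#   add_host_entry 192.168.1.100 k8s-master1
#   add_host_entry 192.168.1.103 k8s-master2
#   add_host_entry 192.168.1.104 k8s-master3
#   add_host_entry 192.168.1.101 k8s-node1
#   add_host_entry 192.168.1.102 k8s-node2
```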

# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Disable swap
echo "Disabling swap..."
swapoff -a
sed -i '/swap/s/^/#/' /etc/fstab

# Add the Kubernetes yum repository
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.33/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

# Install kubelet, kubeadm and kubectl, and enable kubelet so that it starts at boot
sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
sudo systemctl enable --now kubelet

4.4 Install keepalived and haproxy

# Install keepalived and haproxy on all control-plane nodes
yum install -y keepalived haproxy

4.5 Configure Keepalived

# Keepalived configuration on the primary node (the primary Master)

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

# HAProxy health check (killall -0 succeeds while an haproxy process exists)

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

# Priority (the primary node uses the highest value)

priority 101

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# VIP address (the highly available IP of the control plane)

virtual_ipaddress {

192.168.1.200

}

track_script {

chk_haproxy

}

# Script called when the VIP moves

notify "/etc/keepalived/notify.sh"

}

virtual_server 192.168.1.200 6443 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.1.100 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.1.103 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.1.104 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

EOF

# Keepalived configuration on the backup nodes (the backup Masters)

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_BACKUP

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

# Priority (lower than on the primary node)

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# VIP address (the highly available IP of the control plane)

virtual_ipaddress {

192.168.1.200

}

track_script {

chk_haproxy

}

# Script called when the VIP moves

notify "/etc/keepalived/notify.sh"

}

virtual_server 192.168.1.200 6443 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.1.100 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.1.103 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.1.104 6443 {

weight 1

SSL_GET {

url {

path /healthz

digest ff20ad2481f8d01119d450f37f0175b

}

url {

path /

digest 9b3a0c85a887a256d6939da88aabd8cd

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

EOF

4.6 Configure HAProxy

# Configure HAProxy (all control-plane nodes)

cat > /etc/haproxy/haproxy.cfg << EOF

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /var/lib/haproxy/stats

user haproxy

group haproxy

daemon

defaults

log global

mode tcp

option tcplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

# Frontend: the address HAProxy listens on

frontend kubernetes-frontend

bind 192.168.1.200:16443

mode tcp

option tcplog

default_backend kubernetes-backend

# Backend: the addresses HAProxy forwards to

backend kubernetes-backend

mode tcp

balance roundrobin

server kube-master-1 192.168.1.100:6443 check

server kube-master-2 192.168.1.103:6443 check

server kube-master-3 192.168.1.104:6443 check

EOF4.7 启用keepalived haproxy

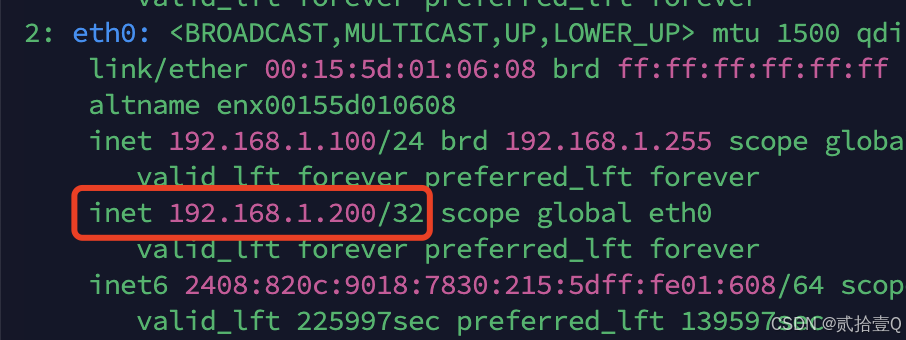

systemctl enable --now keepalived haproxykeepalived 启动后,会随机挑选一个virtual_server作为VIP节点,并生成如下图所示的虚拟IP
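
To see from the command line which node currently holds the VIP, the output of ip addr can be searched for the address. This is a minimal sketch using the VIP from this article; holds_vip is a hypothetical helper name.

```shell
#!/bin/bash
# holds_vip VIP: read `ip -o -4 addr show` output on stdin and
# succeed if VIP is assigned to any interface.
holds_vip() {
    grep -Fq "inet $1/"
}

if command -v ip >/dev/null 2>&1; then
    if ip -o -4 addr show | holds_vip 192.168.1.200; then
        echo "this node holds the VIP"
    else
        echo "this node does not hold the VIP"
    fi
fi
```

Run it on each control-plane node; exactly one of them should report that it holds the VIP.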

4.8 Create the HAProxy notify script

keepalived calls this script when the VIP moves. Create it on all control-plane nodes as /etc/keepalived/notify.sh.

#!/bin/bash
# keepalived passes three arguments: the type, the instance name and the new state
TYPE=$1
NAME=$2
STATE=$3

case "$STATE" in
    "MASTER") # this node acquired the VIP
        systemctl start haproxy
        exit 0
        ;;
    "BACKUP") # this node lost the VIP
        systemctl stop haproxy
        exit 0
        ;;
    "FAULT") # this node is in a fault state
        systemctl stop haproxy
        exit 0
        ;;
    *)
        echo "Unknown state: $STATE"
        exit 1
        ;;
esac

# Make the script executable
chmod +x /etc/keepalived/notify.sh

# Restart the service
systemctl daemon-reload
systemctl restart keepalived

4.9 Initialize k8s

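# Initialize the primary node (VIP node)
sudo kubeadm init \
--apiserver-advertise-address=192.168.1.100 \
--control-plane-endpoint "192.168.1.200:16443" \
--upload-certs \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12

# Once initialization has finished, a new --certificate-key can be generated on the primary node (VIP node) if the one printed by kubeadm init has expired (it is valid for two hours)
kubeadm init phase upload-certs --upload-certs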

# Install the network plugin (Calico) on the primary node (VIP node)
kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

# Generate the join command for worker nodes
kubeadm token create --print-join-command

#初始化备份master节点(主节点api-server需要正常运行,相关参数在主节点初始化完成后会生成,或者手动通过工作节点初始化指令生成指令后,手动添加相关参数)

kubeadm join 192.168.1.200:16443 --token iqrauq.fsts6uhy8041fm1u \

--discovery-token-ca-cert-hash sha256:8f3c1bedd2d16bffab46b758d248d1318de4d2cfe7ed3727826d87a0c6c93842 \

--control-plane --certificate-key 6fcf0e5baefa7899902191964e6d7746a7fcd25b5af35aa9c8998bdc5697d3bb

#worker节点初始化(相关参数在主节点初始化完成后会生成,或者手动通过工作节点初始化指令生成指令)

kubeadm join 192.168.1.200:16443 --token iqrauq.fsts6uhy8041fm1u \

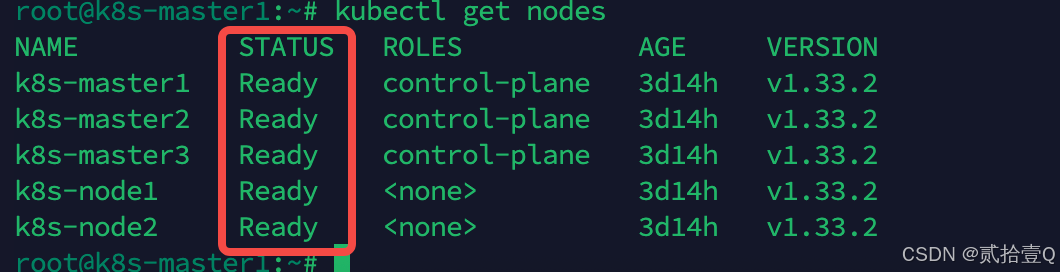

--discovery-token-ca-cert-hash sha256:8f3c1bedd2d16bffab46b758d248d1318de4d2cfe7ed3727826d87a0c6c93842所有节点初始化完之后需要保证所有节点状态都为Ready时才代表着该节点已正常加入集群并处于可用状态
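
Checking that every node is Ready can be scripted by filtering the output of kubectl get nodes. The sketch below is only an illustration: not_ready_nodes is a hypothetical helper, and it treats any status other than exactly "Ready" (including "Ready,SchedulingDisabled") as not ready.

```shell
#!/bin/bash
# not_ready_nodes: read `kubectl get nodes --no-headers` output on stdin and
# print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

if command -v kubectl >/dev/null 2>&1; then
    if nodes=$(kubectl get nodes --no-headers 2>/dev/null); then
        pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
        if [ -z "$pending" ]; then
            echo "all listed nodes are Ready"
        else
            echo "not Ready: $pending"
        fi
    else
        echo "could not query the cluster" >&2
    fi
fi
```

Nodes usually stay NotReady until the network plugin is running, so it is worth rerunning the check a few minutes after applying Calico.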

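4.10 Deploy ingress-nginx

On any master node, create ingress-controller-deploy.yaml with the content below.

Deploy ingress-nginx with the command kubectl apply -f ingress-controller-deploy.yaml.

The purpose of Ingress is not covered here; look it up as needed.

# Deploy the ingress controller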

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-nginx-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

externalTrafficPolicy: Local

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: LoadBalancer

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

spec:

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-nginx-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: k8s.m.daocloud.io/ingress-nginx/controller:v1.8.1@sha256:e5c4824e7375fcf2a393e1c03c293b69759af37a9ca6abdb91b13d78a93da8bd

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: k8s.m.daocloud.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: k8s.m.daocloud.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.1

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

4.11 Deploy MetalLB

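MetalLB is an open-source project that provides load balancing for bare-metal Kubernetes clusters. On bare metal, Kubernetes' native LoadBalancer Services are not assigned an external IP automatically; MetalLB solves this problem.

# Deploy MetalLB on the primary node (VIP node)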

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.7/config/manifests/metallb-native.yaml

#主节点(VIP节点)配置MetalLB IP池,metalLB-conf.yaml

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

namespace: metallb-system

spec:

addresses:

- 192.168.1.110-192.168.1.150 # 可用IP范围

- 192.168.2.0/24 # 整个网段

autoAssign: true # 自动分配IP

avoidBuggyIPs: true # 避免分配有问题的IP

---

#创建 L2Advertisement 资源

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

namespace: metallb-system

spec:

ipAddressPools:

- first-pool

#配置实施

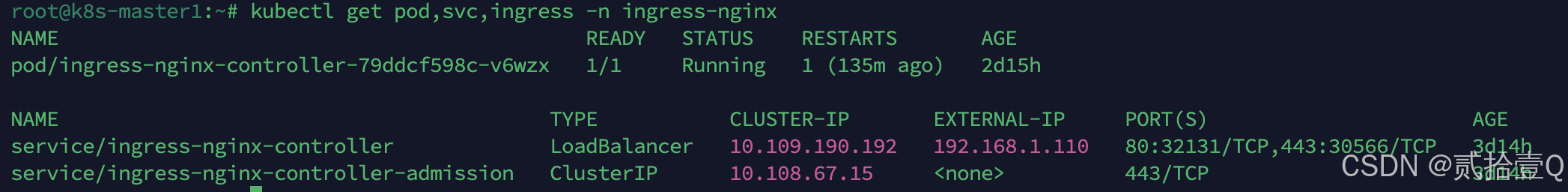

kubectl apply -f metalLB-conf.yaml部署完MetalLB后,应当能看到ingress-nginx-controller能正常分配EXTERNAL-IP
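
The MetalLB address range should not overlap with addresses already in use, such as the node IPs or the keepalived VIP. As a rough sanity check, the sketch below tests the addresses used in this article against the 192.168.1.110-192.168.1.150 range; ip_to_int and in_range are hypothetical helpers written for this illustration.

```shell
#!/bin/bash
# ip_to_int A.B.C.D: print the IPv4 address as a single integer.
ip_to_int() {
    local old_ifs="$IFS"
    IFS=.
    set -- $1
    IFS="$old_ifs"
    echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# in_range IP START END: succeed if START <= IP <= END.
in_range() {
    local ip start end
    ip=$(ip_to_int "$1")
    start=$(ip_to_int "$2")
    end=$(ip_to_int "$3")
    [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

# Node addresses and the VIP from this article.
for addr in 192.168.1.100 192.168.1.101 192.168.1.102 192.168.1.103 192.168.1.104 192.168.1.200; do
    if in_range "$addr" 192.168.1.110 192.168.1.150; then
        echo "conflict: $addr is inside the pool"
    else
        echo "ok: $addr is outside the pool"
    fi
done
```

With the addresses used here the loop reports no conflicts; adjust the list and the range if your network differs.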

4.12 Test the cluster

On any master node, create test-service.yaml with the content below, then run kubectl apply -f test-service.yaml.

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

app: nginx

name: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

strategy: {}

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: nginx

name: nginx

imagePullPolicy: Always

resources: {}

status: {}

---

apiVersion: v1

kind: Service

metadata:

name: nginx-service # Service 名称

spec:

selector:

app: nginx # 必须匹配 Pod 的标签

ports:

- protocol: TCP

port: 80 # Service 暴露的端口

targetPort: 80 # Pod 的端口

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: my-ingress

spec:

ingressClassName: nginx

rules:

- host: my-app.example.com # 替换为你的域名(或使用通配符 *)

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx-service # 指向你的 Service

port:

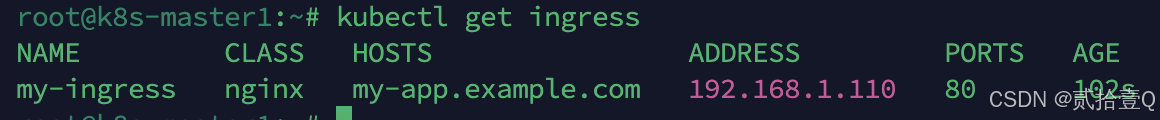

number: 80 # Service 的端口获取ingress

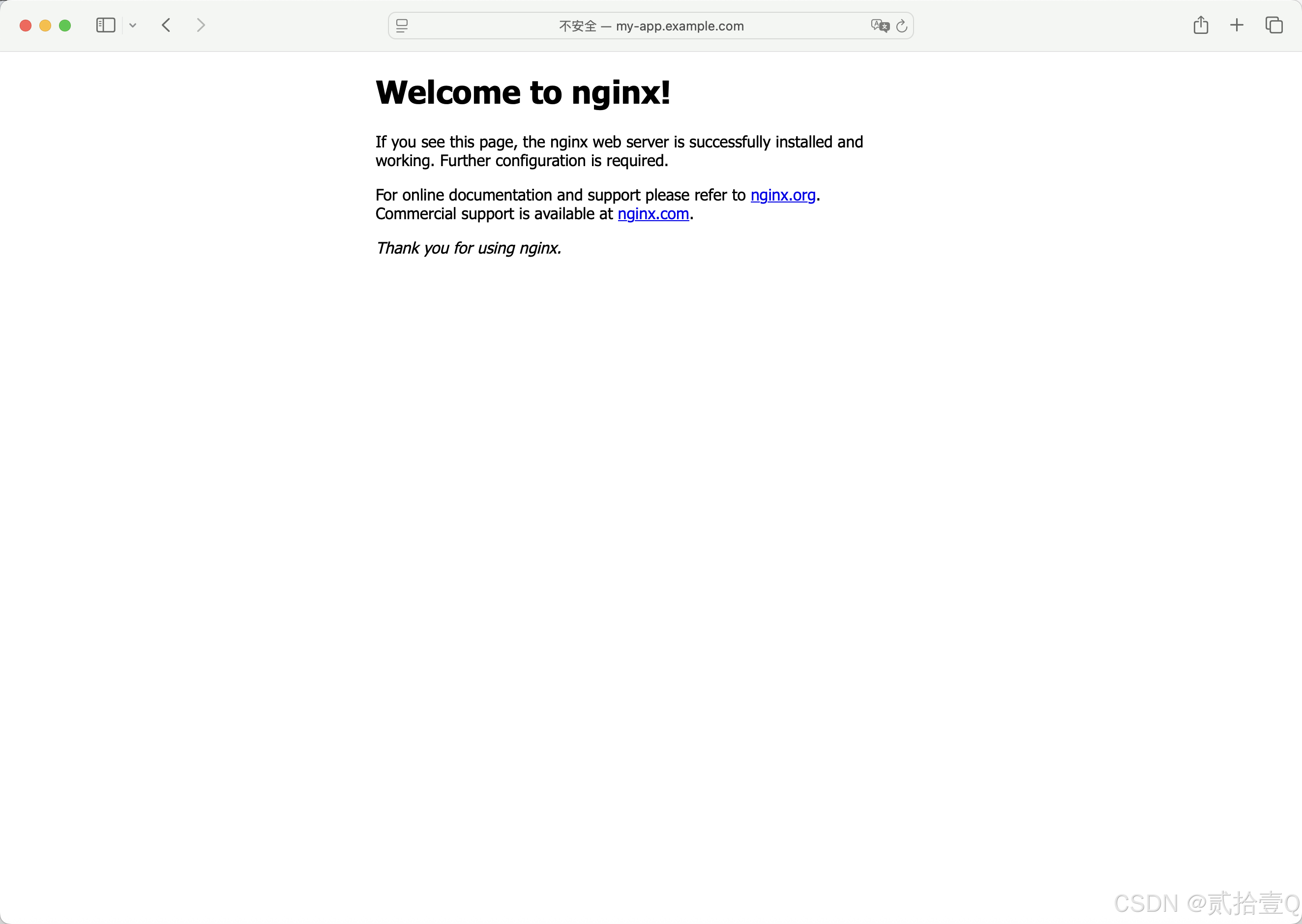

访问my-app.example.com

Note

4.13 Cluster resource monitoring


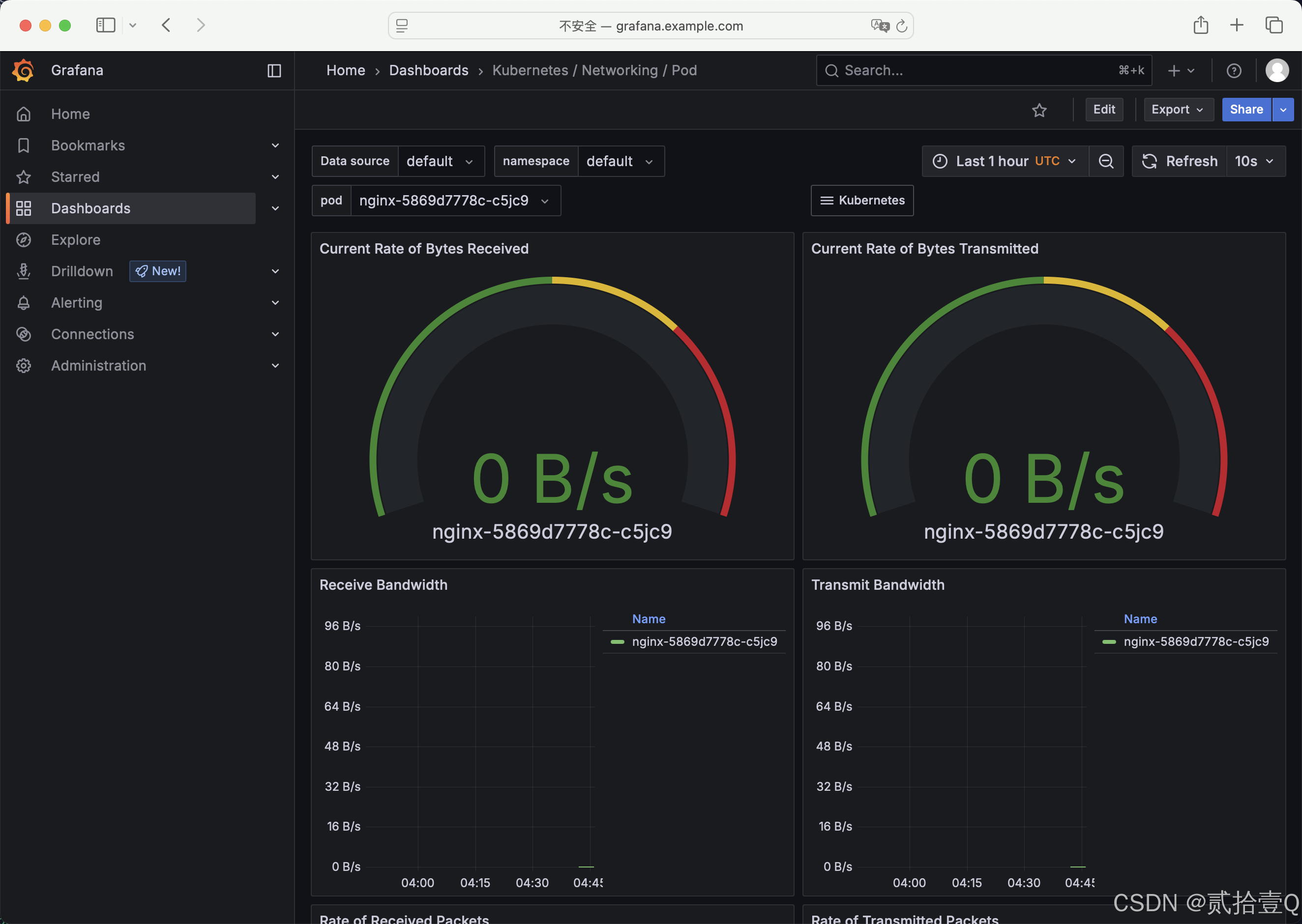

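This article uses Prometheus + Grafana to build the monitoring platform and installs both with Helm.

Prometheus: an open-source monitoring platform that periodically scrapes the state of the monitored components, usually over HTTP. Its main features include monitoring and alerting.

Grafana: an open-source data analysis and visualization tool that supports many data sources.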

# Add the Prometheus community Helm repository
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update

# Create the configuration file prometheus-values.yaml

server:

ingress:

enabled: true

ingressClassName: nginx # 指定Ingress类名,与你的控制器匹配

hosts:

- prometheus.example.com # 替换为你的域名或IP

paths:

- /

annotations:

nginx.ingress.kubernetes.io/ssl-redirect: "false" # 可选

persistentVolume:

enabled: true

size: 10Gi

storageClass: "standard" # 修改为你的存储类

service:

type: LoadBalancer # 使用LoadBalancer或NodePort暴露服务

alertmanager:

enabled: true # 启用Alertmanager

ingress:

enabled: true

ingressClassName: nginx # 指定Ingress类名

hosts:

- alertmanager.example.com

paths:

- /

kubeStateMetrics:

enabled: true # 启用Kubernetes状态指标收集

nodeExporter:

enabled: true # 启用节点指标收集

pushgateway:

enabled: false # 禁用Push Gateway(按需启用)

grafana:

ingress:

enabled: true

ingressClassName: nginx # 指定Ingress类名

hosts:

- grafana.example.com

paths:

- /#部署prometheus + granfa

helm install prometheus prometheus-community/kube-prometheus-stack \

-f prometheus-values.yaml \

-n monitoring \

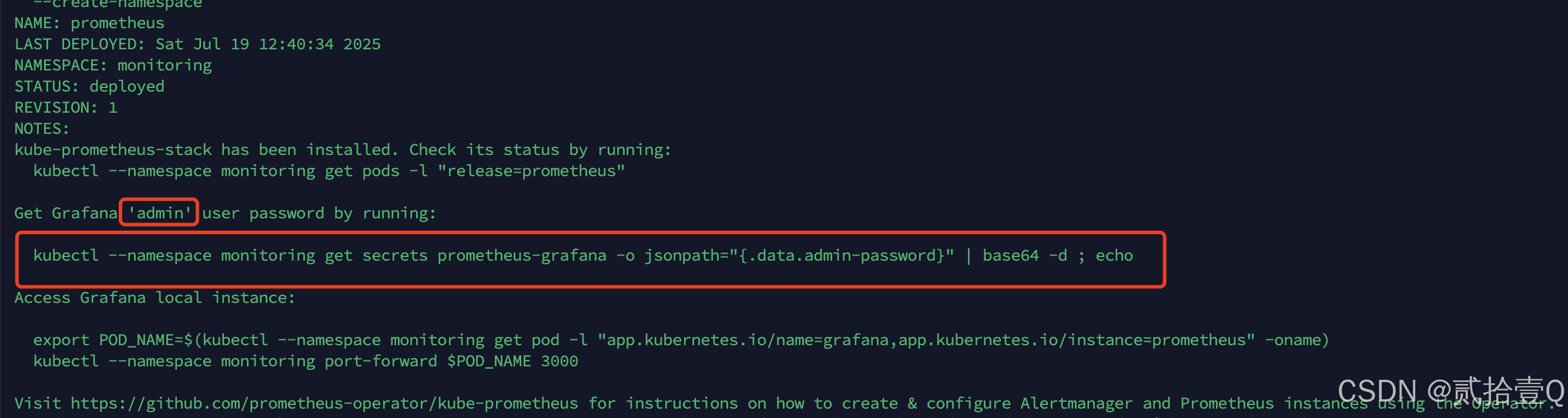

--create-namespaceprometheus + grafana部署完成后,通过下图中命令获取admin用户默认密码
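
For readers who cannot see the figure, the admin password can typically be read from the Secret that the Grafana sub-chart creates. The sketch below assumes the Secret is named prometheus-grafana (the release name used above plus "-grafana") and that it stores the password under the admin-password key; both are assumptions to verify with kubectl get secret -n monitoring. decode_b64 is a hypothetical helper name.

```shell
#!/bin/bash
# decode_b64 STRING: decode a base64 string (Kubernetes stores Secret values base64-encoded).
decode_b64() {
    printf '%s' "$1" | base64 -d
}

if command -v kubectl >/dev/null 2>&1; then
    # Secret name and key are assumptions; adjust them to what your cluster shows.
    encoded=$(kubectl get secret -n monitoring prometheus-grafana \
        -o jsonpath='{.data.admin-password}' 2>/dev/null) || encoded=""
    if [ -n "$encoded" ]; then
        decode_b64 "$encoded"
        echo
    else
        echo "secret not found; list secrets with: kubectl get secret -n monitoring" >&2
    fi
fi
```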
