大数据学习第19天:

熟悉hive参数的使用

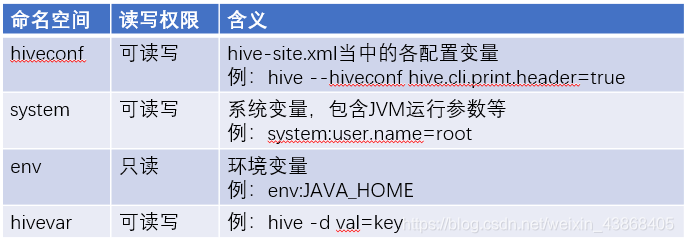

hive当中的参数、变量,都是以命名空间开头

通过${}方式进行引用,其中system、env下的变量必须以前缀开头

hive 参数设置方式

1、修改配置文件 ${HIVE_HOME}/conf/hive-site.xml

2、启动hive cli时,通过–hiveconf key=value的方式进行设置

例:hive --hiveconf hive.cli.print.header=true

3、进入cli之后,通过使用set命令设置

hive set命令

在hive CLI控制台可以通过set对hive中的参数进行查询、设置

set设置:

set hive.cli.print.header=true;

set查看

set hive.cli.print.header

hive参数初始化配置

当前用户家目录下的.hiverc文件

如: ~/.hiverc

如果没有,可直接创建该文件,将需要设置的参数写到该文件中,hive启动运行时,会加载改文件中的配置。

hive历史操作命令集

~/.hivehistory

熟练使用hive的动态分区

hive中支持两种类型的分区:

静态分区SP(static partition)

动态分区DP(dynamic partition)

静态分区与动态分区的主要区别在于静态分区是手动指定,而动态分区是通过数据来进行判断。详细来说,静态分区的列实在编译时期,通过用户传递来决定的;动态分区只有在SQL执行时才能决定。

Hive分区的创建

Hive分区是在创建表的时候用Partitioned by 关键字定义的,但要注意,Partitioned by子句中定义的列是表中正式的列,但是Hive下的数据文件中并不包含这些列,因为它们是目录名。

静态分区

创建一张静态分区表par_tab,单个分区

create table par_tab (name string,nation string) partitioned by (sex string) row format delimited fields terminated by ',';

这时候通过desc查看的表结构如下

hive> desc par_tab;

OK

name string

nation string

sex string

# Partition Information

# col_name data_type comment

sex string

Time taken: 0.038 seconds, Fetched: 8 row(s)

准备本地数据文件par_tab.txt,内容 “名字/国籍”,将以性别(sex)作为分区

jan,china

mary,america

lilei,china

heyong,china

yiku,japan

emoji,japan

把数据插入到表(其实load操作相当于把文件移动到HDFS的Hive目录下)

load data local inpath '/home/hadoop/files/par_tab.txt' into table par_tab partition (sex='man');

这时候在hive下查询par_tab表,变成了3列,注意。

hive> select * from par_tab;

OK

jan china man

mary america man

lilei china man

heyong china man

yiku japan man

emoji japan man

Time taken: 0.076 seconds, Fetched: 6 row(s)

查看par_tab目录结构

[hadoop@hadoop001 files]$ hadoop dfs -lsr /user/hive/warehouse/par_tab

drwxr-xr-x - hadoop supergroup 0 2017-03-29 08:25 /user/hive/warehouse/par_tab/sex=man

-rwxr-xr-x 1 hadoop supergroup 71 2017-03-29 08:25 /user/hive/warehouse/par_tab/sex=man/par_tab.txt

可以看到,在新建分区表的时候,系统会在hive数据仓库默认路径/user/hive/warehouse/下创建一个目录(表名),再创建目录的子目录sex=man(分区名),最后在分区名下存放实际的数据文件。

如果再插入另一个数据文件数据,如文件

lily,china

nancy,china

hanmeimei,america

插入数据

load data local inpath '/home/hadoop/files/par_tab_wm.txt' into table par_tab partition (sex='woman');

查看par_tab表目录结构

[hadoop@hadoop001 files]$ hadoop dfs -lsr /user/hive/warehouse/par_tab

drwxr-xr-x - hadoop supergroup 0 2017-03-29 08:25 /user/hive/warehouse/par_tab/sex=man

-rwxr-xr-x 1 hadoop supergroup 71 2017-03-29 08:25 /user/hive/warehouse/par_tab/sex=man/par_tab.txt

drwxr-xr-x - hadoop supergroup 0 2017-03-29 08:35 /user/hive/warehouse/par_tab/sex=woman

-rwxr-xr-x 1 hadoop supergroup 41 2017-03-29 08:35 /user/hive/warehouse/par_tab/sex=woman/par_tab_wm.txt

最后查看两次插入的结果,包含了man和woman

hive> select * from par_tab;

OK

jan china man

mary america man

lilei china man

heyong china man

yiku japan man

emoji japan man

lily china woman

nancy china woman

hanmeimei america woman

Time taken: 0.136 seconds, Fetched: 9 row(s)

因为分区列是表实际定义的列,所以查询分区数据时

hive> select * from par_tab where sex='woman';

OK

lily china woman

nancy china woman

hanmeimei america woman

Time taken: 0.515 seconds, Fetched: 3 row(s)

下面创建一张静态分区表par_tab_muilt,多个分区(性别+日期)

hive> create table par_tab_muilt (name string, nation string) partitioned by (sex string,dt string) row format delimited fields terminated by ',' ;

hive> load data local inpath '/home/hadoop/files/par_tab.txt' into table par_tab_muilt partition (sex='man',dt='2017-03-29');

[hadoop@hadoop001 files]$ hadoop dfs -lsr /user/hive/warehouse/par_tab_muilt

drwxr-xr-x - hadoop supergroup 0 2017-03-29 08:45 /user/hive/warehouse/par_tab_muilt/sex=man

drwxr-xr-x - hadoop supergroup 0 2017-03-29 08:45 /user/hive/warehouse/par_tab_muilt/sex=man/dt=2017-03-29

-rwxr-xr-x 1 hadoop supergroup 71 2017-03-29 08:45 /user/hive/warehouse/par_tab_muilt/sex=man/dt=2017-03-29/par_tab.txt

可见,新建表的时候定义的分区顺序,决定了文件目录顺序(谁是父目录谁是子目录),正因为有了这个层级关系,当我们查询所有man的时候,man以下的所有日期下的数据都会被查出来。如果只查询日期分区,但父目录sex=man和sex=woman都有该日期的数据,那么Hive会对输入路径进行修剪,从而只扫描日期分区,性别分区不作过滤(即查询结果包含了所有性别)。

动态分区

如果用上述的静态分区,插入的时候必须首先要知道有什么分区类型,而且每个分区写一个load data,太烦人。使用动态分区可解决以上问题,其可以根据查询得到的数据动态分配到分区里。其实动态分区与静态分区区别就是不指定分区目录,由系统自己选择。

首先,启动动态分区功能

hive> set hive.exec.dynamic.partition=true;

假设已有一张表par_tab,前两列是名称name和国籍nation,后两列是分区列,性别sex和日期dt,数据如下

hive> select * from par_tab;

OK

lily china man 2013-03-28

nancy china man 2013-03-28

hanmeimei america man 2013-03-28

jan china man 2013-03-29

mary america man 2013-03-29

lilei china man 2013-03-29

heyong china man 2013-03-29

yiku japan man 2013-03-29

emoji japan man 2013-03-29

Time taken: 1.141 seconds, Fetched: 9 row(s)

现在我把这张表的内容直接插入到另一张表par_dnm中,并实现sex为静态分区,dt动态分区(不指定到底是哪日,让系统自己分配决定)

hive> insert overwrite table par_dnm partition(sex='man',dt)

> select name, nation, dt from par_tab;

插入后看下目录结构

drwxr-xr-x - hadoop supergroup 0 2017-03-29 10:32 /user/hive/warehouse/par_dnm/sex=man

drwxr-xr-x - hadoop supergroup 0 2017-03-29 10:32 /user/hive/warehouse/par_dnm/sex=man/dt=2013-03-28

-rwxr-xr-x 1 hadoop supergroup 41 2017-03-29 10:32 /user/hive/warehouse/par_dnm/sex=man/dt=2013-03-28/000000_0

drwxr-xr-x - hadoop supergroup 0 2017-03-29 10:32 /user/hive/warehouse/par_dnm/sex=man/dt=2013-03-29

-rwxr-xr-x 1 hadoop supergroup 71 2017-03-29 10:32 /user/hive/warehouse/par_dnm/sex=man/dt=2013-03-29/000000_0

再查看分区数

hive> show partitions par_dnm;

OK

sex=man/dt=2013-03-28

sex=man/dt=2013-03-29

Time taken: 0.065 seconds, Fetched: 2 row(s)

证明动态分区成功。

注意,动态分区不允许主分区采用动态列而副分区采用静态列,这样将导致所有的主分区都要创建副分区静态列所定义的分区。

动态分区可以允许所有的分区列都是动态分区列,但是要首先设置一个参数hive.exec.dynamic.partition.mode :

hive> set hive.exec.dynamic.partition.mode;

hive.exec.dynamic.partition.mode=strict

它的默认值是strick,即不允许分区列全部是动态的,这是为了防止用户有可能原意是只在子分区内进行动态建分区,但是由于疏忽忘记为主分区列指定值了,这将导致一个dml语句在短时间内创建大量的新的分区(对应大量新的文件夹),对系统性能带来影响。

所以我们要设置:

hive> set hive.exec.dynamic.partition.mode=nostrick;

’

深刻理解分桶机制

对于每一个表(table)或者分区, Hive可以进一步组织成桶,也就是说桶是更为细粒度的数据范围划分。Hive也是 针对某一列进行桶的组织。Hive采用对列值哈希,然后除以桶的个数求余的方式决定该条记录存放在哪个桶当中。

把表(或者分区)组织成桶(Bucket)有两个理由:

(1)获得更高的查询处理效率。桶为表加上了额外的结构,Hive 在处理有些查询时能利用这个结构。具体而言,连接两个在(包含连接列的)相同列上划分了桶的表,可以使用 Map 端连接 (Map-side join)高效的实现。比如JOIN操作。对于JOIN操作两个表有一个相同的列,如果对这两个表都进行了桶操作。那么将保存相同列值的桶进行JOIN操作就可以,可以大大较少JOIN的数据量。

(2)使取样(sampling)更高效。在处理大规模数据集时,在开发和修改查询的阶段,如果能在数据集的一小部分数据上试运行查询,会带来很多方便。

1.

创建带桶的 table :

create tablebucketed_user(id int,name string)clustered by (id) sorted by(name) into 4buckets row format delimited fields terminated by '\t' stored as textfile;

首先,我们来看如何告诉Hive—个表应该被划分成桶。我们使用CLUSTERED BY 子句来指定划分桶所用的列和要划分的桶的个数:

CREATE TABLE bucketed_user(id INT) name STRING) CLUSTERED BY (id) INTO 4BUCKETS;

在这里,我们使用用户ID来确定如何划分桶(Hive使用对值进行哈希并将结果除 以桶的个数取余数。这样,任何一桶里都会有一个随机的用户集合(PS:其实也能说是随机,不是吗?)。

对于map端连接的情况,两个表以相同方式划分桶。处理左边表内某个桶的 mapper知道右边表内相匹配的行在对应的桶内。因此,mapper只需要获取那个桶 (这只是右边表内存储数据的一小部分)即可进行连接。这一优化方法并不一定要求两个表必须桶的个数相同,两个表的桶个数是倍数关系也可以。用HiveQL对两个划分了桶的表进行连接,可参见“map连接”部分(P400)。

桶中的数据可以根据一个或多个列另外进行排序。由于这样对每个桶的连接变成了高效的归并排序(merge-sort),

因此可以进一步提升map端连接的效率。以下语法声明一个表使其使用排序桶:

CREATE TABLE bucketed_users(id INT, name STRING)

CLUSTERED BY (id) SORTED BY(id ASC) INTO 4 BUCKETS;

我们如何保证表中的数据都划分成桶了呢?把在Hive外生成的数据加载到划分成 桶的表中,当然是可以的。其实让Hive来划分桶更容易。这一操作通常针对已有的表。

Hive并不检查数据文件中的桶是否和表定义中的桶一致(无论是对于桶的数量或用于划分桶的列)。如果两者不匹配,在査询时可能会碰到错 误或未定义的结果。因此,建议让Hive来进行划分桶的操作。

-

往表中插入数据:

INSERT OVERWRITE TABLEbucketed_users SELECT * FROM users; -

物理上,每个桶就是表(或分区)目录里的一个文件。它的文件名并不重要,但是桶 n 是按照字典序排列的第 n 个文件。事实上,桶对应于 MapReduce的输出文件分区:一个作业产生的桶(输出文件)和reduce任务个数相同.

-

对桶中的数据进行采样:

hive> SELECT * FROMbucketed_users

> TABLESAMPLE(BUCKET 1 OUT OF 4 ON id);

>

> 0 Nat

>

> 4 Ann

- 桶的个数从1开始计数。因此,前面的查询从4个桶的第一个中获取所有的用户。 对于一个大规模的、均匀分布的数据集,这会返回表中约四分之一的数据行。我们 也可以用其他比例对若干个桶进行取样(因为取样并不是一个精确的操作,因此这个 比例不一定要是桶数的整数倍)。

注:tablesample是抽样语句,语法:TABLESAMPLE(BUCKET x OUTOF y)

y必须是table总bucket数的倍数或者因子。hive根据y的大小,决定抽样的比例。例如,table总共分了64份,当y=32时,抽取(64/32=)2个bucket的数据,当y=128时,抽取(64/128=)1/2个bucket的数据。x表示从哪个bucket开始抽取。例如,table总bucket数为32,tablesample(bucket 3 out of 16),表示总共抽取(32/16=)2个bucket的数据,分别为第3个bucket和第(3+16=)19个bucket的数据。

熟练操作hive Lateral View、视图和索引

一、Hive Lateral View

Lateral View用于和UDTF函数(explode、split)结合来使用。

首先通过UDTF函数拆分成多行,再将多行结果组合成一个支持别名的虚拟表。

主要解决在select使用UDTF做查询过程中,查询只能包含单个UDTF,不能包含其他字段、以及多个UDTF的问题

语法:

LATERAL VIEW udtf(expression) tableAlias AS columnAlias (’,’ columnAlias)

举例:统计人员表中共有多少种爱好、多少个城市?

hive> select * from psn2;

OK

psn2.id psn2.name psn2.likes psn2.address psn2.age

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

Time taken: 0.138 seconds, Fetched: 12 row(s)

hive> select explode(likes) from psn2;

OK

col

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

lol

book

movie

Time taken: 0.294 seconds, Fetched: 36 row(s)

hive> select count(distinct(myCol1)), count(distinct(myCol2)) from psn2

> LATERAL VIEW explode(likes) myTable1 AS myCol1

> LATERAL VIEW explode(address) myTable2 AS myCol2, myCol3;

Query ID = root_20190216171853_af297af9-dcc6-4e1e-8674-fa0969727b23

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1548397153910_0012, Tracking URL = http://PCS102:8088/proxy/application_1548397153910_0012/

Kill Command = /usr/local/hadoop-3.1.1/bin/mapred job -kill job_1548397153910_0012

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2019-02-16 17:19:00,480 Stage-1 map = 0%, reduce = 0%

2019-02-16 17:19:04,582 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.08 sec

2019-02-16 17:19:09,693 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.24 sec

MapReduce Total cumulative CPU time: 7 seconds 240 msec

Ended Job = job_1548397153910_0012

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 7.24 sec HDFS Read: 15860 HDFS Write: 103 SUCCESS

Total MapReduce CPU Time Spent: 7 seconds 240 msec

OK

_c0 _c1

2

Time taken: 16.894 seconds, Fetched: 1 row(s)

hive>

hive View视图

和关系型数据库中的普通视图一样,hive也支持视图

特点:

不支持物化视图(oracle支持)

只能查询,不能做加载数据操作

视图的创建,只是保存一份元数据,查询视图时才执行对应的子查询

view定义中若包含了ORDER BY/LIMIT语句,当查询视图时也进行ORDER BY/LIMIT语句操作,view当中定义的优先级更高

view支持迭代视图

View语法

创建视图:

CREATE VIEW [IF NOT EXISTS] [db_name.]view_name

[(column_name [COMMENT column_comment], ...) ]

[COMMENT view_comment]

[TBLPROPERTIES (property_name = property_value, ...)]

AS SELECT ... ;

举例:注意 视图在HDFS下不存在文件

hive> create view v_psn2 as select id,name from psn2;

OK

id name

Time taken: 0.127 seconds

hive> show tables;

OK

tab_name

cell_drop_monitor

cell_monitor

docs

logtbl

person

person3

psn2

psn21

psn22

psn3

psn31

psn4

psnbucket

student

test01

v_psn2

wc

Time taken: 0.02 seconds, Fetched: 17 row(s)

hive> select * from v_psn2;

OK

v_psn2.id v_psn2.name

小明1

小明2

小明3

小明4

小明5

小明6

小明1

小明2

小明3

小明4

小明5

小明6

Time taken: 0.11 seconds, Fetched: 12 row(s)

hive> drop view v_psn2;

OK

Time taken: 0.08 seconds

hive> select * from v_psn2;

FAILED: SemanticException [Error 10001]: Line 1:14 Table not found 'v_psn2'

hive>

Hive 索引

目的:优化查询以及检索性能

给表psn2创建索引:create index t1_index on table psn2(name) as ‘org.apache.hadoop.hive.ql.index.compact.CompactIndexHandler’ with deferred rebuild in table t1_index_table;

as:指定索引器;

in table:指定索引表,若不指定默认生成在default__psn2_t1_index__表中

create index t1_index on table psn2(name)

as ‘org.apache.hadoop.hive.ql.index.compact.CompactIndexHandler’ with deferred rebuild;

查询索引

show index on psn2;

重建索引(建立索引之后必须重建索引才能生效)

ALTER INDEX t1_index ON psn2 REBUILD;

删除索引

DROP INDEX IF EXISTS t1_index ON psn2;

熟悉hive运行方式

1、命令行方式cli:控制台模式

–与hdfs交互

hive> dfs -ls /;

Found 3 items

drwxr-xr-x - root supergroup 0 2019-01-25 16:44 /root

drwxrwx--- - root supergroup 0 2019-01-25 16:18 /tmp

drwxr-xr-x - root supergroup 0 2019-02-01 09:46 /usr

hive> dfs -cat /root/hive_remote/warehouse/person/*;

1,小明1,18,lol-book-movie,beijing:shangxuetang-shanghai:pudong

2,小明2,20,lol-book-movie,beijing:shangxuetang-shanghai:pudong

3,小明3,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong

4,小明4,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong

5,小明5,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong

6,小明6,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong

hive>

-与Linux交互 :!开头

hive> !pwd;

/root

2、-脚本运行方式(实际生产环境中用最多)

#直接按照入参执行 输出结果到linux控制台

[root@PCS102 ~]# hive -e "select * from psn2"

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = b243b1f6-0b67-416f-8b9a-3da0304cb88b

Logging initialized using configuration in jar:file:/usr/local/apache-hive-3.1.1-bin/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true

Hive Session ID = 0a2ced87-5509-44bb-927e-17ab4d993b91

OK

psn2.id psn2.name psn2.likes psn2.address psn2.age

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

Time taken: 2.416 seconds, Fetched: 12 row(s)

#直接按照入参执行 输出结果重定向到文件

[root@PCS102 ~]# hive -e "select * from psn2" > aaa

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 1ee55846-3df2-4fc0-8ce8-501d2202a617

Logging initialized using configuration in jar:file:/usr/local/apache-hive-3.1.1-bin/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true

Hive Session ID = 7549c4cf-d416-406b-82f7-f5012c3f1173

OK

Time taken: 2.59 seconds, Fetched: 12 row(s)

[root@PCS102 ~]# cat aaa

psn2.id psn2.name psn2.likes psn2.address psn2.age

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

#直接按照入参执行 输出结果重定向到文件 -S静默执行

[root@PCS102 ~]# hive -S -e "select * from psn2" > bbb

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 991dd630-b1ae-448d-a43c-5870fb7508cc

Hive Session ID = ed0b4ba8-c8ec-4c9b-acba-4815e3e5762a

[root@PCS102 ~]# cat bbb

psn2.id psn2.name psn2.likes psn2.address psn2.age

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

#直接按照入参执行 输出结果重定向到文件 如果sql有问题 会报错 报错信息输出到linux控制台

[root@PCS102 ~]# hive -e "select * from psn55" > ccc

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 83a20df1-6f19-414a-a247-cf7dbc6ee58c

Logging initialized using configuration in jar:file:/usr/local/apache-hive-3.1.1-bin/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true

Hive Session ID = 8d5bfc04-7e76-46b5-b2a2-13e8ccfc890a

FAILED: SemanticException [Error 10001]: Line 1:14 Table not found 'psn55'

[root@PCS102 ~]# cat ccc

#-f 执行文件中的sql 结果输出到linux控制台

[root@PCS102 ~]# hive -f test

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = b5831035-da17-4260-95aa-10c68f729327

Logging initialized using configuration in jar:file:/usr/local/apache-hive-3.1.1-bin/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true

Hive Session ID = 19738ea4-0c4b-473f-8f05-171a16f8ec04

OK

psn2.id psn2.name psn2.likes psn2.address psn2.age

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

Time taken: 2.569 seconds, Fetched: 12 row(s)

#-i 执行文件中的sql 会进入hive CLI

[root@PCS102 ~]# hive -i test

which: no hbase in (/usr/local/jdk1.8.0_65/bin:/home/cluster/subversion-1.10.3/bin:/home/cluster/apache-storm-0.9.2/bin:/usr/local/hadoop-3.1.1/bin:/usr/local/hadoop-3.1.1/sbin:/usr/local/apache-hive-3.1.1-bin/bin:/usr/local/sbin:/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/openssh/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-3.1.1-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = d720d685-5547-4469-a07d-f47d4d078bd7

Logging initialized using configuration in jar:file:/usr/local/apache-hive-3.1.1-bin/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true

Hive Session ID = eb4cbb84-8174-4432-8be6-dd38bac70f2d

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

#在Hive CLI里执行外面的包含sql的文件

hive> source test;

OK

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10

小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 20

Time taken: 0.151 seconds, Fetched: 12 row(s)

hive>

3、JDBC方式:hiveserver2

4、web GUI接口 :hwi操作麻烦、基本不用、hue要好一下

(1)下载源码包apache-hive-*-src.tar.gz (注意:新版本里没有hwi,这里举例apache-hive-1.2.1-src.tar.gz)

(2)将hwi/web/*里面所有的文件打成war包

cd /usr/local/apache-hive-1.2.1-src/hwi/web && jar -cvf hive-hwi.war ./

(3)将hwi war包放在$HIVE_HOME/lib/

cp /usr/local/apache-hive-1.2.1-src/hwi/web/hive-hwi.war /usr/local/apache-hive-3.1.1-bin/lib/

(4)复制tools.jar(在jdk的lib目录下)到$HIVE_HOME/lib下

cp /usr/local/jdk1.8.0_65/lib/tools.jar /usr/local/apache-hive-3.1.1-bin/lib

(5)修改hive-site.xml

(5)修改hive-site.xml

<property>

<name>hive.hwi.listen.host</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hive.hwi.listen.port</name>

<value>9999</value>

</property>

<property>

<name>hive.hwi.war.file</name>

<value>lib/hive-hwi.war</value>

</property>

(6)启动hwi服务(端口号9999)

hive --service hwi

(7)浏览器通过以下链接来访问

http://PCS102:9999/hwi/

理解权限管理并熟练操作

、Hive 权限管理

1、三种授权模型:

(1)Storage Based Authorization in the Metastore Server

基于存储的授权 - 可以对Metastore中的元数据进行保护,但是没有提供更加细粒度的访问控制(例如:列级别、行级别)。

(2)SQL Standards Based Authorization in HiveServer2

基于SQL标准的Hive授权 - 完全兼容SQL的授权模型,推荐使用该模式。

(3)Default Hive Authorization (Legacy Mode)

hive默认授权 - 设计目的仅仅只是为了防止用户产生误操作,而不是防止恶意用户访问未经授权的数据。

重点看一下第(2)种授权:Hive - SQL Standards Based Authorization in HiveServer2

–完全兼容SQL的授权模型

–除支持对于用户的授权认证,还支持角色role的授权认证

·role可理解为是一组权限的集合,通过role为用户授权

·一个用户可以具有一个或多个角色

·默认包含两种角色:public、admin

2、限制

(1)启用当前认证方式之后,dfs, add, delete, compile, and reset等命令被禁用。

(2)通过set命令设置hive configuration的方式被限制某些用户使用。

(可通过修改配置文件hive-site.xml中hive.security.authorization.sqlstd.confwhitelist进行配置)

(3)添加、删除函数以及宏的操作,仅为具有admin的用户开放。

(4)用户自定义函数(开放支持永久的自定义函数),可通过具有admin角色的用户创建,其他用户都可以使用。

(5)Transform功能被禁用。

<property>

<name>hive.security.authorization.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.server2.enable.doAs</name>

<value>false</value>

</property>

<property>

<name>hive.users.in.admin.role</name>

<value>root</value>

</property>

<property>

<name>hive.security.authorization.manager</name>

<value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>

</property>

<property>

<name>hive.security.authenticator.manager</name>

<value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>

</property>

服务端启动hiveserver2;客户端通过beeline进行连接

4、角色的添加、删除、查看、设置

CREATE ROLE role_name; -- 创建角色

DROP ROLE role_name; -- 删除角色

SET ROLE (role_name|ALL|NONE); -- 设置角色

SHOW CURRENT ROLES; -- 查看当前具有的角色

SHOW ROLES; -- 查看所有存在的角色

角色的授予、移除、查看#将角色授予某个用户、角色

GRANT role_name [, role_name] ...

TO principal_specification [, principal_specification] ...

[ WITH ADMIN OPTION ];

principal_specification

: USER user

| ROLE role

#移除某个用户、角色的角色

REVOKE [ADMIN OPTION FOR] role_name [, role_name] ...

FROM principal_specification [, principal_specification] ... ;

principal_specification

: USER user

| ROLE role

#查看授予某个用户、角色的角色列表

SHOW ROLE GRANT (USER|ROLE) principal_name;

#查看属于某种角色的用户、角色列表

SHOW PRINCIPALS role_name;

Hive权限管理

权限:

SELECT privilege – gives read access to an object.

INSERT privilege – gives ability to add data to an object (table).

UPDATE privilege – gives ability to run update queries on an object (table).

DELETE privilege – gives ability to delete data in an object (table).

ALL PRIVILEGES – gives all privileges (gets translated into all the above privileges).

权限的授予、移除、查看:

#将权限授予某个用户、角色:

GRANT

priv_type [, priv_type ] ...

ON table_or_view_name

TO principal_specification [, principal_specification] ...

[WITH GRANT OPTION];

#移除某个用户、角色的权限:

REVOKE [GRANT OPTION FOR]

priv_type [, priv_type ] ...

ON table_or_view_name

FROM principal_specification [, principal_specification] ... ;

principal_specification

: USER user

| ROLE role

priv_type

: INSERT | SELECT | UPDATE | DELETE | ALL

#查看某个用户、角色的权限:

SHOW GRANT [principal_name] ON (ALL| ([TABLE] table_or_view_name)

理解hive调优

符合SQL语法的字符串解析生成可以在Hadoop上执行的MapReduce的工具。理解Hadoop的核心能力,是Hive 优化的根本。使用Hive尽量按照分布式计算的一些特点来设计SQL,Hive的调优原则主要包括以下几点:

原子化操作

尽量原子化操作,避免一个SQL包含复杂逻辑,可以使用中间表来完成复杂的逻辑,从而提高整体执行效率。一般来说,单个SQL所起的JOB个数尽量控制在5个以下。如果Union All的部分个数大于2,或者每个Union部分数据量大,应该拆分成多个Insert Into语句

充分利用服务器资源

让服务器尽可能的多做事情,充分利用服务器的计算资源,以实现最高的系统吞吐量为目标。比如一个作业能做完的事情就不要拆开两个作业来完成。Reduce个数过少不能真正发挥Hadoop并行计算的优势,但Reduce个数过多,会造成大量小文件问题,所以需要根据数据量和资源情况找到一个折衷点。

Hive可以将没有依赖关系的多次MR过程(例如Union All语义中的多个子查询)并发提交。使用hive.exec.parallel参数控制在同一个SQL中的不同作业是否可以同时运行,从而提高作业的并发,充分利用服务器的资源:

SET hive.exec.parallel=true;

SET hive.exec.parallel.thread.number=最大并发job数;

选择最优的执行路径

让服务器尽量少做事情,选择最优的执行路径,以资源消耗最少为目标。比如

注意Join的使用

两表关联时,若其中有一个表很小,则使用Map Join,否则使用普通的Reduce Join

SET hive.auto.convert.join=true ;

SET hive.smalltable.filesize=25000000L(默认是25M);

注意小文件的问题

在Hive里有两种比较常见的处理办法。第一是使用Combinefileinputformat,将多个小文件打包作为一个整体的Inputsplit,减少Map任务数;

SET mapred.max.split.size=128000000;

SET mapred.min.split.size=128000000;

SET mapred.min.split.size.per.node=128000000;

SET mapred.min.split.size.per.rack=128000000;

SET hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat;

第二是文件数目小,容易在文件存储端造成瓶颈,给 HDFS 带来压力,影响处理效率。对此,可以通过合并Map和Reduce的结果文件来消除这样的影响。

设置Hive参数,将额外启动一个MR Job打包小文件。

用于设置合并属性的参数有:

是否合并Map输出文件:SET hive.merge.mapfiles=true(默认值为真)

是否合并Reduce 端输出文件:SET hive.merge.mapredfiles= true(默认值为假)

合并文件的大小:SET hive.merge.size.per.task=25610001000(默认值为 256000000)

注意执行过程中的数据倾斜问题

在Hive调优中比较常用的处理办法有两种:

第一,通过hive.groupby.skewindata = true控制生成两个MR Job,第一个MR Job Map的输出结果随机分配到Reduce做次预汇总,减少某些key值条数过多或过小造成的数据倾斜问题。

第二,通过hive.map.aggr = true(默认为true)在Map端做Combiner,假如map各条数据基本上不一样,聚合没什么意义,做Combiner反而画蛇添足,Hive会通过以下两个参数:

hive.groupby.mapaggr.checkinterval = 100000 (默认)

hive.map.aggr.hash.min.reduction = 0.5(默认)

预先取100000条数据聚合,如果聚合后的条数/100000>0.5,则不再做聚合。

合理设置Map与Reduce的个数

增加Map数

同时可执行的Map数是有限的,通常情况下,作业会通过Input的目录产生一个或者多个Map任务,而主要的决定因素是Input的文件总个数和Input的文件大小。

如果表A只有一个文件,且大小超过100M,包含上千万记录,任务较为耗时,可以考虑用多个Map任务完成,有效提升性能

合理设置Reduce数

增加map数可以通过控制一个作业的Reduce数来加以控制。Reduce个数的设定会极大影响执行效率,一般基于以下参数设定:

hive.exec.reducers.bytes.per.reducer(每个reduce任务处理的数据量,如1G)

hive.exec.reducers.max(每个任务最大的reduce数)

如果Reduce的输入(Map的输出)总大小不超过1G,那么只会有一个Reduce任务。可以根据实际情况,通过缩小每个Reduce任务处理的数据量来提高执行性能。

Hive SQL调优

Hive查询生成多个Map Reduce作业,一个Map Reduce作业又有map,reduce,spill,shuffle,sort等多个阶段,所以针对Hive SQL的优化可以大致分为针对MR中单个步骤的优化,针对MR全局的优化以及针对整个查询的优化。Hive SQL的调优贯穿所有阶段,主要是解决运行过程中数据倾斜问题,减少作业数,对小文件进行合并处理,合理设置Map Reduce的任务数等,根据数据量和模型情况,通过迭代调测来有效提升性能。以下是常见的Hive SQL调优方法:

Hive join优化

减少不必要的关联

Hive SQL和其他SQL一样,是一种功能强大的说明性语言,对于同一个业务功能,可以通过不同的写法来实现,而不同的写法会产生不同的性能特点。

例如一个点击率表,包括了访问时间、SessionID、网址和来源IP地址共四个字段:

CREATE TABLE clicks (

timestamp date, sessionID string, url string, source_ip string)

STORED as ORC tblproperties (“orc.compress” = “SNAPPY”);

假设我们需要查找每个SessionID最后一次的访问网址:

方法一:

SELECT clicks.* FROM clicks inner join

(select sessionID, max(timestamp) as max_ts from clicks group by sessionID) latest

ON clicks.sessionID = latest.sessionID and clicks.timestamp = latest.max_ts;

该方法使用了子查询方法去收集每个SessionID的最后访问时间,然后通过Inner Join自关联去排除掉之前的点击访问记录,效率较低。

方法二:

SELECT * FROM (SELECT *, RANK() over (partition by sessionID,order by timestamp desc) as rank FROM clicks) ranked_clicks

WHERE ranked_clicks.rank=1;

这里使用了OLAP的排位函数去实现相同的业务查询,关键不需要表关联,仅为单表操作,删除不必要的关联在大数据开发上意义重大,能大幅提高性能。

带表分区的Join

Hive是先关联再进行Where条件判断,如果在右表b中找不到左表a表的记录,b表中的所以列都会显示为NULL,这种情况下left outer的查询结果与where子句无关,解决办法是将Where条件放在JOIN ON的关联判断条件中。

SQL编写需要尽早过滤数据,以减少各个阶段的数据量,只选择需要用到的字段。

Skew Join优化

Hive是将优化Skewed Join Key为Map Join。开启hive.optimize.skewjoin=true可优化处理过程中倾斜的数据。但需要注意Skew Join优化需要额外的Map Join操作,且不能节省Shuffle的代价。

利用随机数减少数据倾斜

大表之间Join容易因为空值而产生数据倾斜,除了通过过滤方法排除空值,还可以利用随机数分散到不同的Reduce上,例如:

select a.uid from big_table_a a left outer join big_table_b b on b.uid = case when a.uid is null or length(a.uid)=0 then concat(‘rd_sid’,rand()) else a.uid end;

把空值的Key变成一个字符串加上随机数,就能把倾斜的数据分到不同的Reduce上,解决数据倾斜问题。因为空值不参与关联,即使分到不同的Reduce上,也不影响最终的结果,而且IO减少,作业数也减少了,执行效率更优。

Group by

Group By是在Reduce阶段的操作,防止数据倾斜:

Map端聚合,提前一部分计算:Hive.map.aggr = true

hive.groupby.skewindata为ture的时候,生成的查询计划会有两个MRJob:

第一个MRJob 中,Map的输出结果集合会随机分布到Reduce中,每个Reduce做部分聚合操作,并输出结果,这样处理的结果是相同的GroupBy Key有可能被分发到不同的Reduce中,从而达到负载均衡的目的。

第二个MRJob再根据预处理的数据结果按照GroupBy Key分布到Reduce中(这个过程可以保证相同的GroupBy Key被分布到同一个Reduce中),最后完成最终的聚合操作。

Count Distinct

数据量小的时候无所谓,数据量大的情况下,由于COUNT DISTINCT操作需要用一个Reduce Task来完成,这一个Reduce需要处理的数据量太大,就会导致整个Job很难完成,一般COUNT DISTINCT使用先GROUP BY再COUNT的方式替换:

SELECT day,

COUNT(DISTINCT id)AS uv

FROM test

GROUP BY day

可以转换成:

SELECT day,

COUNT(id) AS uv

FROM (SELECTday,id FROM test GROUP BY day,id) a

GROUP BY day;

虽然会多用一个Job来完成,但在数据量大的情况下,这个绝对是值得的。

Order by VS Sort by

Order by是在全局的排序,只用一个Reduce去运行,所以在SET Hive.mapred.mode=strict 模式下,不允许执行以下查询:

没有limit限制的order by语句

分区表上没有指定分区

笛卡尔积(JOIN时没有ON语句)。

而Sort by是在每个Reduce内排序,只保证同一个Reduce下排序正确。

通用Hive SQL优化方法

1、尽量利用分区,比如按时间进行分区。

2、关联条件不能忽略,避免Select *。

3、关联字段的类型保持一致,避免字段的强制转换。

4、避免使用LIKE 进行模糊匹配的查询。

5、对查询进行优化,要尽量避免全表扫描

常用参数

(常用的一些可设置参数,具体数值按照需要进行调整!)

SET hive.optimize.skewjoin = true;

SET hive.skewjoin.key = 100000;

SET hive.exec.dynamic.partition.mode = nonstrict;

SET mapred.reducer.tasks = 50;

// Hive中间结果压缩和压缩输出

SET hive.exec.compress.output = true; – 默认false

SET hive.exec.compress.intermediate = true; – 默认false

SET mapred.output.compression.codec = org.apache.hadoop.io.compress.SnappyCodec; – 默认org.apache.hadoop.io.compress.DefaultCodec

SET mapred.output.compression.type = BLOCK; – 默认BLOCK

// 输出合并小文件

SET hive.merge.mapfiles = true; – 默认true,在map-only任务结束时合并小文件

SET hive.merge.mapredfiles = true; – 默认false,在map-reduce任务结束时合并小文件

SET hive.merge.size.per.task = 268435456; – 默认256M

SET hive.merge.smallfiles.avgsize = 16777216; – 当输出文件的平均大小小于该值时,启动一个独立的map-reduce任务进行文件merge

// 设置map和reduce数量

SET mapred.max.split.size = 256000000;

SET mapred.min.split.size = 64000000;

SET mapred.min.split.size.per.node = 64000000;

SET mapred.min.split.size.per.rack = 64000000;

SET hive.input.format = org.apache.hadoop.hive.ql.io.CombineHiveInputFormat;

SET hive.exec.reducers.bytes.per.reducer = 256000000; – 默认64M,每个reducer处理的数据量大小

// 设置数据倾斜和并行化

SET hive.exec.parallel = true; – 并行执行

SET hive.exec.parallel.thread.number = 16;

SET mapred.job.reuse.jvm.num.tasks = 10;

SET hive.exec.dynamic.partition = true;

SET hive.optimize.cp = true; – 列裁剪

SET hive.optimize.pruner = true; – 分区裁剪

SET hive.groupby.skewindata = true; – groupby数据倾斜

SET hive.exec.mode.local.auto = true; --本地执行

SET hive.exec.mode.local.auto.input.files.max = 10; //map数默认是4,当map数小于10就会启动任务本地执行

SET hive.exec.mode.local.auto.inputbytes.max = 128000000 --默认是128M

//关闭以下两个参数来完成关闭Hive任务的推测执行

SET mapred.map.tasks.speculative.execution=false;

SET mapred.reduce.tasks.speculative.execution=false;

本文深入探讨Hive大数据处理的优化策略,涵盖参数配置、动态分区、分桶机制、索引、视图、LateralView及SQL调优技巧,旨在提升Hive查询效率和系统性能。

本文深入探讨Hive大数据处理的优化策略,涵盖参数配置、动态分区、分桶机制、索引、视图、LateralView及SQL调优技巧,旨在提升Hive查询效率和系统性能。

2万+

2万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?