For instance, epoch = 10 means that the neural network goes through 10 repeated training processes with the same dataset.
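The minimal Python/NumPy sketch below makes the notion of an epoch concrete. It assumes a single-layer network with linear output nodes, trained by the plain delta rule (w_ij ← w_ij + α e_i x_j) with one weight update per training point. The function name `train_delta_rule` and its default values are hypothetical. The outer `epochs` loop is the part that matches the text: each iteration is one full pass over the same dataset.

```python
import numpy as np

def train_delta_rule(weights, inputs, targets, alpha=0.1, epochs=10):
    """Hypothetical helper: train a single-layer network with linear output
    nodes using the delta rule w_ij <- w_ij + alpha * e_i * x_j.
    weights: (n_out, n_in); inputs: (N, n_in); targets: (N, n_out).
    One epoch = one pass over all N training points."""
    weights = np.array(weights, dtype=float)  # work on a float copy
    for _ in range(epochs):                   # repeat the same dataset `epochs` times
        for x, d in zip(inputs, targets):     # one training point at a time
            v = weights @ x                   # weighted sum of each output node
            y = v                             # linear activation: phi(v) = v
            e = d - y                         # error of each output node
            weights += alpha * np.outer(e, x) # delta-rule update
    return weights

X = np.array([[1.0, 0.0], [0.0, 1.0]])
D = np.array([[2.0], [-1.0]])
print(train_delta_rule(np.zeros((1, 2)), X, D, epochs=1))
print(train_delta_rule(np.zeros((1, 2)), X, D, epochs=200))
```

With these two orthogonal training points, a single epoch moves the weights only partway toward the targets. Repeating the same dataset over many epochs lets them converge.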

Are you able to follow this section so far?

If so, you have learned most of the key concepts of neural network training.

Although the equations may vary depending on the learning rule, the essential concepts remain largely the same.

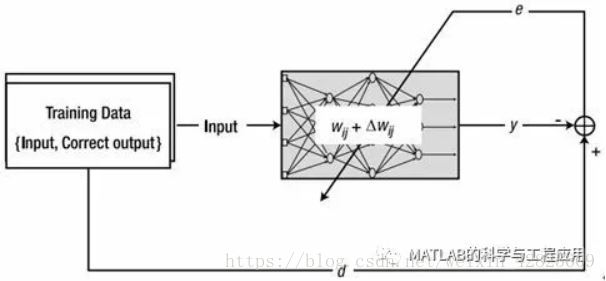

Figure 2-13 illustrates the training process described in this section.

Figure 2-13. The training process

Generalized Delta Rule

This section touches on some theoretical aspects of the delta rule.

However, there is no need to feel discouraged.

We will cover the most essential topics without going too deeply into the specifics.

The delta rule of the previous section is rather obsolete.

Later studies have shown that there exists a more generalized form of the delta rule.

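For an arbitrary activation function, the delta rule is expressed as the following equation.

$$
w_{ij} \leftarrow w_{ij} + \alpha \, \delta_i \, x_j \qquad \text{(Equation 2.3)}
$$

It is the same as the delta rule of the previous section, except that $e_i$ is replaced with $\delta_i$, which is defined as

$$
\delta_i = \varphi'(v_i) \, e_i
$$

where $e_i$ is the error of output node $i$, $v_i$ is the weighted sum of output node $i$, and $\varphi'$ is the derivative of the activation function $\varphi$ of output node $i$.

For the linear activation function $\varphi(x) = x$, the derivative is $\varphi'(x) = 1$, so $\delta_i = e_i$. Plugging this into Equation 2.3 results in the same formula as the delta rule in Equation 2.2. This fact indicates that the delta rule in Equation 2.2 is only valid for linear activation functions.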
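The sketch below checks this claim numerically. It implements one step of the generalized delta rule, δ_i = φ'(v_i) e_i followed by w_ij ← w_ij + α δ_i x_j, for a single-layer network. With a linear activation the step should match the plain delta rule w_ij ← w_ij + α e_i x_j. The names `generalized_delta_update`, `linear`, and `dlinear` are hypothetical and used only for illustration.

```python
import numpy as np

def generalized_delta_update(weights, x, d, phi, dphi, alpha=0.1):
    """Hypothetical helper: one generalized-delta-rule step.
        delta_i = phi'(v_i) * e_i
        w_ij   <- w_ij + alpha * delta_i * x_j
    phi is the activation function and dphi its derivative."""
    v = weights @ x              # weighted sums v_i of the output nodes
    y = phi(v)                   # outputs y_i = phi(v_i)
    e = d - y                    # errors e_i
    delta = dphi(v) * e          # delta_i = phi'(v_i) * e_i
    return weights + alpha * np.outer(delta, x)

def linear(v):
    """Linear activation phi(v) = v."""
    return v

def dlinear(v):
    """Derivative of the linear activation: phi'(v) = 1."""
    return np.ones_like(v)

w = np.array([[0.5, -0.3], [0.1, 0.2]])
x = np.array([1.0, 2.0])
d = np.array([1.0, 0.0])
generalized = generalized_delta_update(w, x, d, linear, dlinear, alpha=0.1)
plain = w + 0.1 * np.outer(d - w @ x, x)   # plain delta rule with e_i = d_i - v_i
print(np.allclose(generalized, plain))
```

Passing a different `phi`/`dphi` pair changes only the δ_i computation; the update step itself stays the same.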

Now we can derive the delta rule for the sigmoid function, which is widely used as an activation function.

The sigmoid function is defined as shown in Figure 2-14.
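Figure 2-14 is not reproduced in this text, so the sketch below assumes the standard logistic sigmoid φ(x) = 1 / (1 + e^(-x)). Its derivative can be written as φ'(x) = φ(x)(1 - φ(x)). Substituting this into δ_i = φ'(v_i) e_i gives δ_i = y_i(1 - y_i) e_i, where y_i = φ(v_i) is the node's output. The function `sigmoid_delta_update` and its default `alpha` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid: phi(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_delta_update(weights, x, d, alpha=0.9):
    """Hypothetical helper: generalized delta rule with sigmoid output nodes.
    Uses phi'(v) = phi(v) * (1 - phi(v)), so delta_i = y_i * (1 - y_i) * e_i."""
    v = weights @ x              # weighted sums v_i
    y = sigmoid(v)               # outputs y_i
    e = d - y                    # errors e_i
    delta = y * (1.0 - y) * e    # delta_i for sigmoid nodes
    return weights + alpha * np.outer(delta, x)

# Sanity check of the derivative identity with a central finite difference
h, v0 = 1e-6, 0.7
numeric = (sigmoid(v0 + h) - sigmoid(v0 - h)) / (2 * h)
analytic = sigmoid(v0) * (1 - sigmoid(v0))
print(numeric, analytic)
```

Because the derivative is expressed through the output y_i itself, the update needs no separate evaluation of φ'. This is one reason the sigmoid is convenient for the delta rule.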

This article is translated from *MATLAB Deep Learning* by Phil Kim.
