INFO 09-12 08:36:18 __init__.py:207] Automatically detected platform cuda.

INFO 09-12 08:36:18 api_server.py:912] vLLM API server version 0.7.3

INFO 09-12 08:36:18 api_server.py:913] args: Namespace(host=None, port=8003, uvicorn_log_level='info', allow_credentials=False, allowed_origins=['*'], allowed_methods=['*'], allowed_headers=['*'], api_key=None, lora_modules=None, prompt_adapters=None, chat_template=None, chat_template_content_format='auto', response_role='assistant', ssl_keyfile=None, ssl_certfile=None, ssl_ca_certs=None, ssl_cert_reqs=0, root_path=None, middleware=[], return_tokens_as_token_ids=False, disable_frontend_multiprocessing=False, enable_request_id_headers=False, enable_auto_tool_choice=False, enable_reasoning=False, reasoning_parser=None, tool_call_parser=None, tool_parser_plugin='', model='/models/DeepSeek-R1-Distill-Llama-70B', task='auto', tokenizer=None, skip_tokenizer_init=False, revision=None, code_revision=None, tokenizer_revision=None, tokenizer_mode='auto', trust_remote_code=False, allowed_local_media_path=None, download_dir=None, load_format='auto', config_format=<ConfigFormat.AUTO: 'auto'>, dtype='auto', kv_cache_dtype='auto', max_model_len=84320, guided_decoding_backend='xgrammar', logits_processor_pattern=None, model_impl='auto', distributed_executor_backend=None, pipeline_parallel_size=1, tensor_parallel_size=4, max_parallel_loading_workers=None, ray_workers_use_nsight=False, block_size=None, enable_prefix_caching=None, disable_sliding_window=False, use_v2_block_manager=True, num_lookahead_slots=0, seed=0, swap_space=4, cpu_offload_gb=0, gpu_memory_utilization=0.9, num_gpu_blocks_override=None, max_num_batched_tokens=512, max_num_partial_prefills=1, max_long_partial_prefills=1, long_prefill_token_threshold=0, max_num_seqs=1, max_logprobs=20, disable_log_stats=False, quantization=None, rope_scaling=None, rope_theta=None, hf_overrides=None, enforce_eager=True, max_seq_len_to_capture=8192, disable_custom_all_reduce=False, tokenizer_pool_size=0, tokenizer_pool_type='ray', tokenizer_pool_extra_config=None, limit_mm_per_prompt=None, mm_processor_kwargs=None, disable_mm_preprocessor_cache=False, enable_lora=True, enable_lora_bias=False, max_loras=1, max_lora_rank=8, lora_extra_vocab_size=256, lora_dtype='auto', long_lora_scaling_factors=None, max_cpu_loras=4, fully_sharded_loras=False, enable_prompt_adapter=False, max_prompt_adapters=1, max_prompt_adapter_token=0, device='auto', num_scheduler_steps=1, multi_step_stream_outputs=True, scheduler_delay_factor=0.0, enable_chunked_prefill=True, speculative_model=None, speculative_model_quantization=None, num_speculative_tokens=None, speculative_disable_mqa_scorer=False, speculative_draft_tensor_parallel_size=None, speculative_max_model_len=None, speculative_disable_by_batch_size=None, ngram_prompt_lookup_max=None, ngram_prompt_lookup_min=None, spec_decoding_acceptance_method='rejection_sampler', typical_acceptance_sampler_posterior_threshold=None, typical_acceptance_sampler_posterior_alpha=None, disable_logprobs_during_spec_decoding=None, model_loader_extra_config=None, ignore_patterns=[], preemption_mode=None, served_model_name=['DeepSeek-R1'], qlora_adapter_name_or_path=None, otlp_traces_endpoint=None, collect_detailed_traces=None, disable_async_output_proc=False, scheduling_policy='fcfs', scheduler_cls='vllm.core.scheduler.Scheduler', override_neuron_config=None, override_pooler_config=None, compilation_config=None, kv_transfer_config=None, worker_cls='auto', generation_config=None, override_generation_config=None, enable_sleep_mode=False, calculate_kv_scales=False, additional_config=None, disable_log_requests=False, max_log_len=None, disable_fastapi_docs=False, enable_prompt_tokens_details=False)

INFO 09-12 08:36:18 api_server.py:209] Started engine process with PID 76

INFO 09-12 08:36:22 __init__.py:207] Automatically detected platform cuda.

INFO 09-12 08:36:24 config.py:549] This model supports multiple tasks: {'generate', 'classify', 'embed', 'score', 'reward'}. Defaulting to 'generate'.

INFO 09-12 08:36:24 config.py:1382] Defaulting to use mp for distributed inference

INFO 09-12 08:36:24 config.py:1555] Chunked prefill is enabled with max_num_batched_tokens=512.

WARNING 09-12 08:36:24 cuda.py:95] To see benefits of async output processing, enable CUDA graph. Since, enforce-eager is enabled, async output processor cannot be used

WARNING 09-12 08:36:24 config.py:685] Async output processing is not supported on the current platform type cuda.

WARNING 09-12 08:36:24 config.py:2224] LoRA with chunked prefill is still experimental and may be unstable.

INFO 09-12 08:36:27 config.py:549] This model supports multiple tasks: {'generate', 'classify', 'score', 'reward', 'embed'}. Defaulting to 'generate'.

INFO 09-12 08:36:27 config.py:1382] Defaulting to use mp for distributed inference

INFO 09-12 08:36:27 config.py:1555] Chunked prefill is enabled with max_num_batched_tokens=512.

WARNING 09-12 08:36:27 cuda.py:95] To see benefits of async output processing, enable CUDA graph. Since, enforce-eager is enabled, async output processor cannot be used

WARNING 09-12 08:36:27 config.py:685] Async output processing is not supported on the current platform type cuda.

WARNING 09-12 08:36:27 config.py:2224] LoRA with chunked prefill is still experimental and may be unstable.

INFO 09-12 08:36:27 llm_engine.py:234] Initializing a V0 LLM engine (v0.7.3) with config: model='/models/DeepSeek-R1-Distill-Llama-70B', speculative_config=None, tokenizer='/models/DeepSeek-R1-Distill-Llama-70B', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, tokenizer_revision=None, trust_remote_code=False, dtype=torch.bfloat16, max_seq_len=84320, download_dir=None, load_format=LoadFormat.AUTO, tensor_parallel_size=4, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=None, enforce_eager=True, kv_cache_dtype=auto, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='xgrammar'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=DeepSeek-R1, num_scheduler_steps=1, multi_step_stream_outputs=True, enable_prefix_caching=False, chunked_prefill_enabled=True, use_async_output_proc=False, disable_mm_preprocessor_cache=False, mm_processor_kwargs=None, pooler_config=None, compilation_config={"splitting_ops":[],"compile_sizes":[],"cudagraph_capture_sizes":[],"max_capture_size":0}, use_cached_outputs=True,

WARNING 09-12 08:36:28 multiproc_worker_utils.py:300] Reducing Torch parallelism from 64 threads to 1 to avoid unnecessary CPU contention. Set OMP_NUM_THREADS in the external environment to tune this value as needed.

INFO 09-12 08:36:28 custom_cache_manager.py:19] Setting Triton cache manager to: vllm.triton_utils.custom_cache_manager:CustomCacheManager

�[1;36m(VllmWorkerProcess pid=348)�[0;0m INFO 09-12 08:36:28 multiproc_worker_utils.py:229] Worker ready; awaiting tasks

�[1;36m(VllmWorkerProcess pid=349)�[0;0m INFO 09-12 08:36:28 multiproc_worker_utils.py:229] Worker ready; awaiting tasks

�[1;36m(VllmWorkerProcess pid=350)�[0;0m INFO 09-12 08:36:28 multiproc_worker_utils.py:229] Worker ready; awaiting tasks

INFO 09-12 08:36:29 cuda.py:229] Using Flash Attention backend.

�[1;36m(VllmWorkerProcess pid=350)�[0;0m INFO 09-12 08:36:29 cuda.py:229] Using Flash Attention backend.

�[1;36m(VllmWorkerProcess pid=348)�[0;0m INFO 09-12 08:36:29 cuda.py:229] Using Flash Attention backend.

�[1;36m(VllmWorkerProcess pid=349)�[0;0m INFO 09-12 08:36:29 cuda.py:229] Using Flash Attention backend.

�[1;36m(VllmWorkerProcess pid=348)�[0;0m INFO 09-12 08:36:29 utils.py:916] Found nccl from library libnccl.so.2

�[1;36m(VllmWorkerProcess pid=348)�[0;0m INFO 09-12 08:36:29 pynccl.py:69] vLLM is using nccl==2.21.5

INFO 09-12 08:36:29 utils.py:916] Found nccl from library libnccl.so.2

INFO 09-12 08:36:29 pynccl.py:69] vLLM is using nccl==2.21.5

�[1;36m(VllmWorkerProcess pid=349)�[0;0m INFO 09-12 08:36:29 utils.py:916] Found nccl from library libnccl.so.2

�[1;36m(VllmWorkerProcess pid=349)�[0;0m INFO 09-12 08:36:29 pynccl.py:69] vLLM is using nccl==2.21.5

�[1;36m(VllmWorkerProcess pid=350)�[0;0m INFO 09-12 08:36:29 utils.py:916] Found nccl from library libnccl.so.2

�[1;36m(VllmWorkerProcess pid=350)�[0;0m INFO 09-12 08:36:29 pynccl.py:69] vLLM is using nccl==2.21.5

�[1;36m(VllmWorkerProcess pid=350)�[0;0m WARNING 09-12 08:36:29 custom_all_reduce.py:136] Custom allreduce is disabled because it's not supported on more than two PCIe-only GPUs. To silence this warning, specify disable_custom_all_reduce=True explicitly.

�[1;36m(VllmWorkerProcess pid=349)�[0;0m WARNING 09-12 08:36:29 custom_all_reduce.py:136] Custom allreduce is disabled because it's not supported on more than two PCIe-only GPUs. To silence this warning, specify disable_custom_all_reduce=True explicitly.

WARNING 09-12 08:36:29 custom_all_reduce.py:136] Custom allreduce is disabled because it's not supported on more than two PCIe-only GPUs. To silence this warning, specify disable_custom_all_reduce=True explicitly.

�[1;36m(VllmWorkerProcess pid=348)�[0;0m WARNING 09-12 08:36:29 custom_all_reduce.py:136] Custom allreduce is disabled because it's not supported on more than two PCIe-only GPUs. To silence this warning, specify disable_custom_all_reduce=True explicitly.

INFO 09-12 08:36:29 shm_broadcast.py:258] vLLM message queue communication handle: Handle(connect_ip='127.0.0.1', local_reader_ranks=[1, 2, 3], buffer_handle=(3, 4194304, 6, 'psm_1f0b3033'), local_subscribe_port=41965, remote_subscribe_port=None)

INFO 09-12 08:36:29 model_runner.py:1110] Starting to load model /models/DeepSeek-R1-Distill-Llama-70B...

�[1;36m(VllmWorkerProcess pid=350)�[0;0m INFO 09-12 08:36:29 model_runner.py:1110] Starting to load model /models/DeepSeek-R1-Distill-Llama-70B...

�[1;36m(VllmWorkerProcess pid=348)�[0;0m INFO 09-12 08:36:29 model_runner.py:1110] Starting to load model /models/DeepSeek-R1-Distill-Llama-70B...

�[1;36m(VllmWorkerProcess pid=349)�[0;0m INFO 09-12 08:36:29 model_runner.py:1110] Starting to load model /models/DeepSeek-R1-Distill-Llama-70B...

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Exception in worker VllmWorkerProcess while processing method load_model.

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Traceback (most recent call last):

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/multiproc_worker_utils.py", line 236, in _run_worker_process

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] output = run_method(worker, method, args, kwargs)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/utils.py", line 2196, in run_method

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/worker.py", line 183, in load_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model_runner.load_model()

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/model_runner.py", line 1112, in load_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = get_model(vllm_config=self.vllm_config)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/__init__.py", line 14, in get_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return loader.load_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 406, in load_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] model = _initialize_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 125, in _initialize_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return model_class(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 496, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = self._init_model(vllm_config=vllm_config,

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 533, in _init_model

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return LlamaModel(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/compilation/decorators.py", line 151, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] old_init(self, vllm_config=vllm_config, prefix=prefix, **kwargs)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 326, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.start_layer, self.end_layer, self.layers = make_layers(

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/utils.py", line 558, in make_layers

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] maybe_offload_to_cpu(layer_fn(prefix=f"{prefix}.{idx}"))

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 328, in <lambda>

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] lambda prefix: layer_type(config=config,

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 254, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.mlp = LlamaMLP(

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 70, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.gate_up_proj = MergedColumnParallelLinear(

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 441, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] super().__init__(input_size=input_size,

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 314, in __init__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.quant_method.create_weights(

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 129, in create_weights

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] weight = Parameter(torch.empty(sum(output_partition_sizes),

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/torch/utils/_device.py", line 106, in __torch_function__

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=350)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] torch.OutOfMemoryError: CUDA out of memory. Tried to allocate 224.00 MiB. GPU 3 has a total capacity of 23.64 GiB of which 164.81 MiB is free. Process 25379 has 23.47 GiB memory in use. Of the allocated memory 22.91 GiB is allocated by PyTorch, and 24.14 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Exception in worker VllmWorkerProcess while processing method load_model.

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Traceback (most recent call last):

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/multiproc_worker_utils.py", line 236, in _run_worker_process

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] output = run_method(worker, method, args, kwargs)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/utils.py", line 2196, in run_method

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/worker.py", line 183, in load_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model_runner.load_model()

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/model_runner.py", line 1112, in load_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = get_model(vllm_config=self.vllm_config)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/__init__.py", line 14, in get_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return loader.load_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 406, in load_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] model = _initialize_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 125, in _initialize_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return model_class(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 496, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = self._init_model(vllm_config=vllm_config,

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 533, in _init_model

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return LlamaModel(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/compilation/decorators.py", line 151, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] old_init(self, vllm_config=vllm_config, prefix=prefix, **kwargs)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 326, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.start_layer, self.end_layer, self.layers = make_layers(

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/utils.py", line 558, in make_layers

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] maybe_offload_to_cpu(layer_fn(prefix=f"{prefix}.{idx}"))

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 328, in <lambda>

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] lambda prefix: layer_type(config=config,

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 254, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.mlp = LlamaMLP(

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 70, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.gate_up_proj = MergedColumnParallelLinear(

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 441, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] super().__init__(input_size=input_size,

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 314, in __init__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.quant_method.create_weights(

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 129, in create_weights

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] weight = Parameter(torch.empty(sum(output_partition_sizes),

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/torch/utils/_device.py", line 106, in __torch_function__

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=348)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] torch.OutOfMemoryError: CUDA out of memory. Tried to allocate 224.00 MiB. GPU 1 has a total capacity of 23.64 GiB of which 164.81 MiB is free. Process 25377 has 23.47 GiB memory in use. Of the allocated memory 22.91 GiB is allocated by PyTorch, and 24.14 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Exception in worker VllmWorkerProcess while processing method load_model.

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] Traceback (most recent call last):

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/multiproc_worker_utils.py", line 236, in _run_worker_process

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] output = run_method(worker, method, args, kwargs)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/utils.py", line 2196, in run_method

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/worker.py", line 183, in load_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model_runner.load_model()

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/model_runner.py", line 1112, in load_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = get_model(vllm_config=self.vllm_config)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/__init__.py", line 14, in get_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return loader.load_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 406, in load_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] model = _initialize_model(vllm_config=vllm_config)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 125, in _initialize_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return model_class(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 496, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.model = self._init_model(vllm_config=vllm_config,

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 533, in _init_model

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return LlamaModel(vllm_config=vllm_config, prefix=prefix)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/compilation/decorators.py", line 151, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] old_init(self, vllm_config=vllm_config, prefix=prefix, **kwargs)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 326, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.start_layer, self.end_layer, self.layers = make_layers(

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/utils.py", line 558, in make_layers

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] maybe_offload_to_cpu(layer_fn(prefix=f"{prefix}.{idx}"))

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 328, in <lambda>

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] lambda prefix: layer_type(config=config,

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 254, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.mlp = LlamaMLP(

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 70, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.gate_up_proj = MergedColumnParallelLinear(

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 441, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] super().__init__(input_size=input_size,

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 314, in __init__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] self.quant_method.create_weights(

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 129, in create_weights

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] weight = Parameter(torch.empty(sum(output_partition_sizes),

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] File "/usr/local/lib/python3.12/dist-packages/torch/utils/_device.py", line 106, in __torch_function__

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] return func(*args, **kwargs)

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] ^^^^^^^^^^^^^^^^^^^^^

�[1;36m(VllmWorkerProcess pid=349)�[0;0m ERROR 09-12 08:36:30 multiproc_worker_utils.py:242] torch.OutOfMemoryError: CUDA out of memory. Tried to allocate 224.00 MiB. GPU 2 has a total capacity of 23.64 GiB of which 164.81 MiB is free. Process 25378 has 23.47 GiB memory in use. Of the allocated memory 22.91 GiB is allocated by PyTorch, and 24.14 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

ERROR 09-12 08:36:30 engine.py:400] CUDA out of memory. Tried to allocate 224.00 MiB. GPU 0 has a total capacity of 23.64 GiB of which 164.81 MiB is free. Process 25094 has 23.47 GiB memory in use. Of the allocated memory 22.91 GiB is allocated by PyTorch, and 24.14 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

ERROR 09-12 08:36:30 engine.py:400] Traceback (most recent call last):

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/engine/multiprocessing/engine.py", line 391, in run_mp_engine

ERROR 09-12 08:36:30 engine.py:400] engine = MQLLMEngine.from_engine_args(engine_args=engine_args,

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/engine/multiprocessing/engine.py", line 124, in from_engine_args

ERROR 09-12 08:36:30 engine.py:400] return cls(ipc_path=ipc_path,

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/engine/multiprocessing/engine.py", line 76, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.engine = LLMEngine(*args, **kwargs)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/engine/llm_engine.py", line 273, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.model_executor = executor_class(vllm_config=vllm_config, )

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/executor_base.py", line 271, in __init__

ERROR 09-12 08:36:30 engine.py:400] super().__init__(*args, **kwargs)

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/executor_base.py", line 52, in __init__

ERROR 09-12 08:36:30 engine.py:400] self._init_executor()

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/mp_distributed_executor.py", line 125, in _init_executor

ERROR 09-12 08:36:30 engine.py:400] self._run_workers("load_model",

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/executor/mp_distributed_executor.py", line 185, in _run_workers

ERROR 09-12 08:36:30 engine.py:400] driver_worker_output = run_method(self.driver_worker, sent_method,

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/utils.py", line 2196, in run_method

ERROR 09-12 08:36:30 engine.py:400] return func(*args, **kwargs)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/worker.py", line 183, in load_model

ERROR 09-12 08:36:30 engine.py:400] self.model_runner.load_model()

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/worker/model_runner.py", line 1112, in load_model

ERROR 09-12 08:36:30 engine.py:400] self.model = get_model(vllm_config=self.vllm_config)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/__init__.py", line 14, in get_model

ERROR 09-12 08:36:30 engine.py:400] return loader.load_model(vllm_config=vllm_config)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 406, in load_model

ERROR 09-12 08:36:30 engine.py:400] model = _initialize_model(vllm_config=vllm_config)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/model_loader/loader.py", line 125, in _initialize_model

ERROR 09-12 08:36:30 engine.py:400] return model_class(vllm_config=vllm_config, prefix=prefix)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 496, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.model = self._init_model(vllm_config=vllm_config,

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 533, in _init_model

ERROR 09-12 08:36:30 engine.py:400] return LlamaModel(vllm_config=vllm_config, prefix=prefix)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/compilation/decorators.py", line 151, in __init__

ERROR 09-12 08:36:30 engine.py:400] old_init(self, vllm_config=vllm_config, prefix=prefix, **kwargs)

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 326, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.start_layer, self.end_layer, self.layers = make_layers(

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/utils.py", line 558, in make_layers

ERROR 09-12 08:36:30 engine.py:400] maybe_offload_to_cpu(layer_fn(prefix=f"{prefix}.{idx}"))

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 328, in <lambda>

ERROR 09-12 08:36:30 engine.py:400] lambda prefix: layer_type(config=config,

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 254, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.mlp = LlamaMLP(

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/models/llama.py", line 70, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.gate_up_proj = MergedColumnParallelLinear(

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 441, in __init__

ERROR 09-12 08:36:30 engine.py:400] super().__init__(input_size=input_size,

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 314, in __init__

ERROR 09-12 08:36:30 engine.py:400] self.quant_method.create_weights(

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/vllm/model_executor/layers/linear.py", line 129, in create_weights

ERROR 09-12 08:36:30 engine.py:400] weight = Parameter(torch.empty(sum(output_partition_sizes),

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] File "/usr/local/lib/python3.12/dist-packages/torch/utils/_device.py", line 106, in __torch_function__

ERROR 09-12 08:36:30 engine.py:400] return func(*args, **kwargs)

ERROR 09-12 08:36:30 engine.py:400] ^^^^^^^^^^^^^^^^^^^^^

ERROR 09-12 08:36:30 engine.py:400] torch.OutOfMemoryError: CUDA out of memory. Tried to allocate 224.00 MiB. GPU 0 has a total capacity of 23.64 GiB of which 164.81 MiB is free. Process 25094 has 23.47 GiB memory in use. Of the allocated memory 22.91 GiB is allocated by PyTorch, and 24.14 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)

ERROR 09-12 08:36:30 multiproc_worker_utils.py:124] Worker VllmWorkerProcess pid 348 died, exit code: -15

INFO 09-12 08:36:30 multiproc_worker_utils.py:128] Killing local vLLM worker processes

使用上面的配置后还是报错

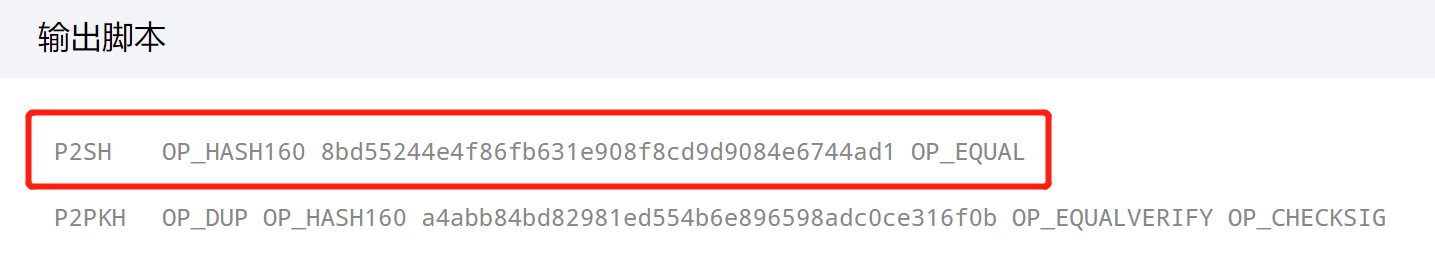

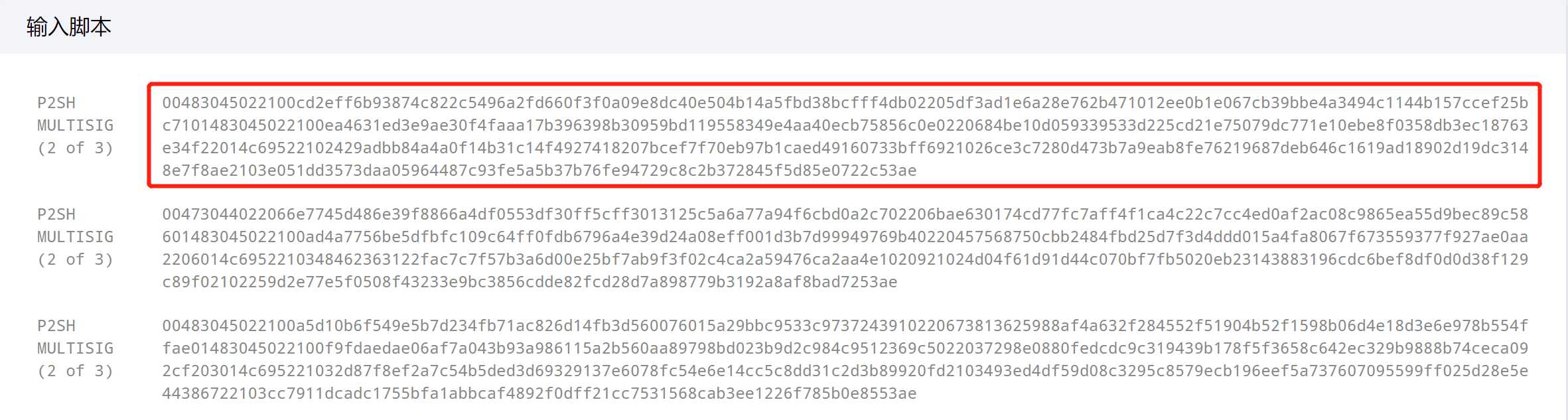

本文介绍比特币复杂交易类型,帮助理解“可编程加密货币”。付款到多重签名需客户用特制钱包生成含复杂脚本的交易,且付款方要付更多交易费。付款到脚本哈希(P2SH)可避免付款方了解锁定脚本细节,让付款到复杂脚本更简单,还提及网络默认传播标准交易类型。

本文介绍比特币复杂交易类型,帮助理解“可编程加密货币”。付款到多重签名需客户用特制钱包生成含复杂脚本的交易,且付款方要付更多交易费。付款到脚本哈希(P2SH)可避免付款方了解锁定脚本细节,让付款到复杂脚本更简单,还提及网络默认传播标准交易类型。

2022

2022

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?