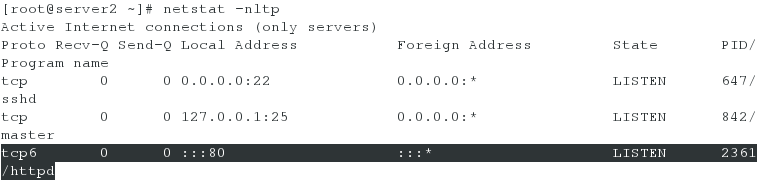

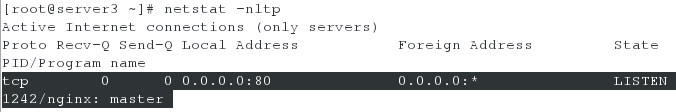

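Building on the previous post, the salt-master node has already pushed the httpd and nginx services to server2 and server3 respectively:

Configure a default landing page for the corresponding service on each of the two nodes: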


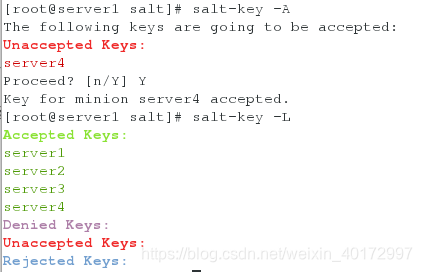

Prepare a fourth virtual machine, then install, configure, and start the salt-minion service on it:

On the master, accept the minion key of this new minion:
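Accepting the key could look like the sketch below. It assumes the new minion's ID is server4 and that `salt-key -L` prints its usual section headers (`Accepted Keys:`, `Unaccepted Keys:` and so on). The helper names `pending_minions` and `accept_minion` are hypothetical and not part of Salt.

```shell
# pending_minions: read `salt-key -L` output on stdin and print the minion
# IDs listed under "Unaccepted Keys:" (assumed output layout).
pending_minions() {
    awk '/ Keys:$/ { in_section = ($0 == "Unaccepted Keys:"); next }
         in_section && NF { print }'
}

# accept_minion ID: accept the key only if ID is currently waiting.
accept_minion() {
    salt-key -L | pending_minions | grep -qxF "$1" && salt-key -y -a "$1"
}
```

On the master, `accept_minion server4` followed by `salt server4 test.ping` would then confirm that the new minion responds.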

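Service layout:

The front-end proxy uses haproxy + keepalived. The back-end web servers are the httpd service on server2 and the nginx service on server3, which makes the load-balancing effect easy to see.

server1 172.25.81.1 salt-master, haproxy (high availability) + keepalived

server2 172.25.81.2 salt-minion, httpd server

server3 172.25.81.3 salt-minion, nginx server

server4 172.25.81.4 salt-minion, haproxy (high availability) + keepalived

Deploying haproxy with SaltStack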

[root@server1 ~]# mkdir /srv/salt/haproxy

[root@server1 ~]# mkdir /srv/salt/haproxy/files

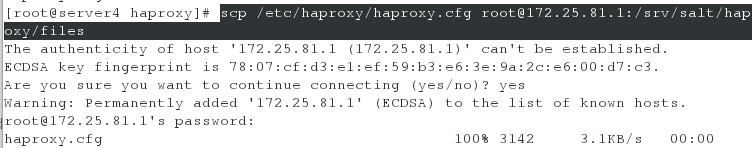

Copy the haproxy configuration file into /srv/salt/haproxy/files on the master:

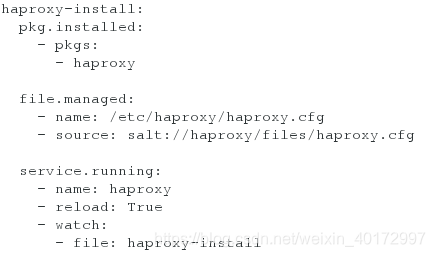

[root@server1 ~]# vim /srv/salt/haproxy/install.sls

    haproxy-install:
      pkg.installed:
        - pkgs:
          - haproxy
      file.managed:
        - name: /etc/haproxy/haproxy.cfg
        - source: salt://haproxy/files/haproxy.cfg
      service.running:
        - name: haproxy
        - reload: True
        - watch:
          - file: haproxy-install

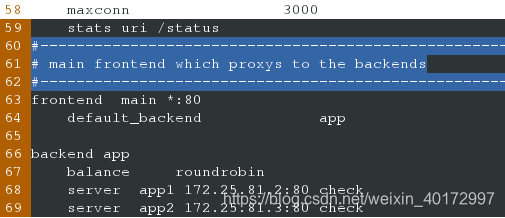

[root@server1 ~]# vim /srv/salt/haproxy/files/haproxy.cfg

        stats uri /status
    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend main *:80                      # the load balancer listens on port 80
        default_backend app                 # use the backend server group named app

    backend app                             # define the default backend, named app
        balance roundrobin                  # round-robin scheduling
        server app1 172.25.81.2:80 check    # back-end apache server, with health checks enabled
        server app2 172.25.81.3:80 check    # back-end nginx server, with health checks enabled

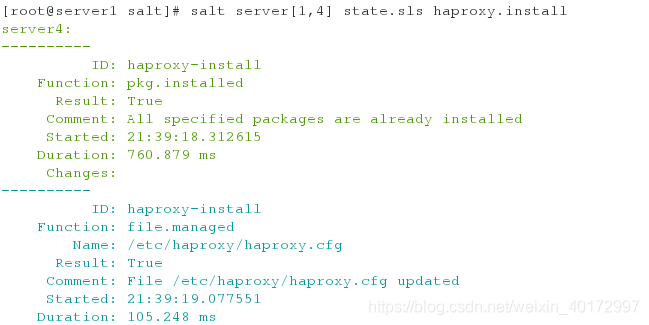

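Push the haproxy service to the server1 and server4 nodes (the target is quoted so the shell does not try to expand the brackets):

[root@server1 salt]# salt 'server[1,4]' state.sls haproxy.install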

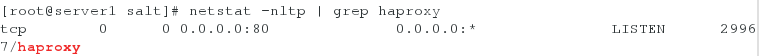

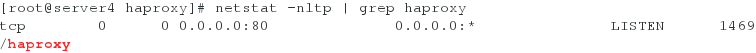

Check on both nodes that the service was deployed successfully:

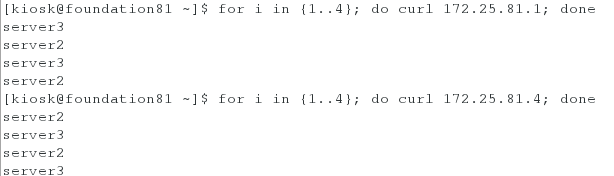

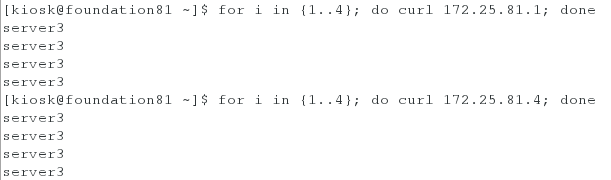

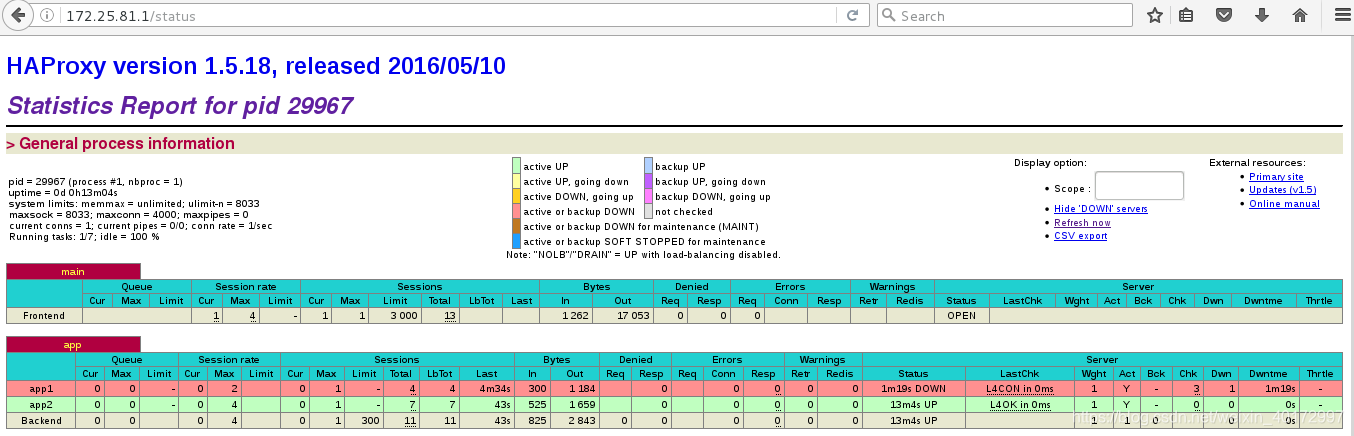

Test whether load balancing works:

haproxy's built-in health check:

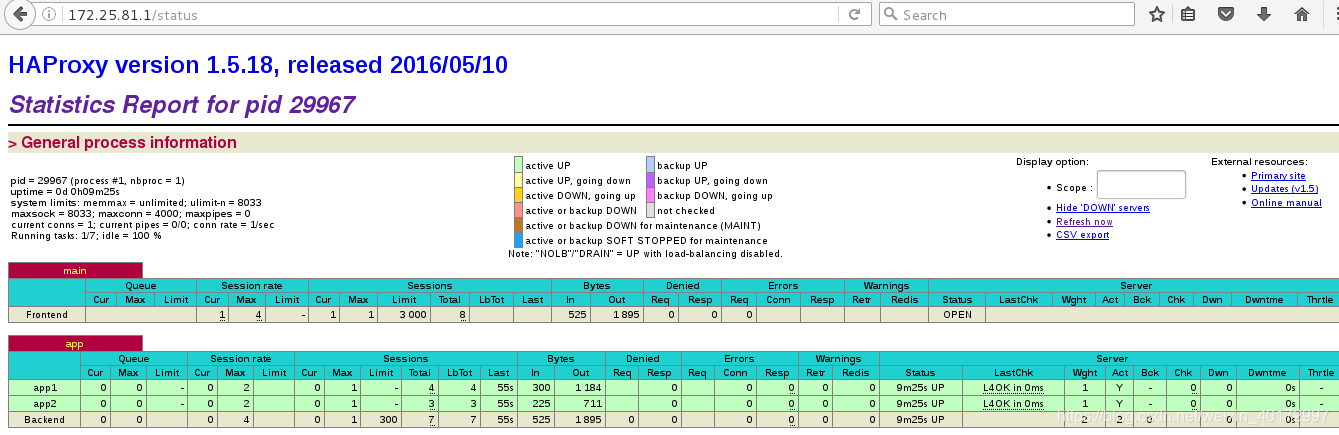

Stop the apache service on server2:

Test load balancing again:

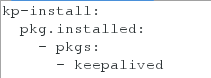
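The load-balancing check above can also be scripted. This is a minimal sketch, assuming the proxy is reached at http://172.25.81.1/ and that each back end's default page is a single line ending in a newline. `tally_responses`, `TARGET` and `FETCH` are illustrative names, not anything haproxy or Salt provides.

```shell
# Assumed defaults: the haproxy address on server1 and curl as the client.
TARGET="${TARGET:-http://172.25.81.1/}"
FETCH="${FETCH:-curl -s}"

# tally_responses N: request TARGET N times and count identical response bodies.
tally_responses() {
    i=0
    while [ "$i" -lt "$1" ]; do
        $FETCH "$TARGET"
        i=$((i + 1))
    done | sort | uniq -c
}
```

With both back ends up, `tally_responses 10` should show the two pages in roughly equal numbers under roundrobin. After stopping httpd on server2, only the nginx page should remain once the health check marks app1 as down.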

Deploying keepalived with SaltStack

[root@server1 ~]# mkdir /srv/salt/keepalived

[root@server1 ~]# vim /srv/salt/keepalived/install.sls

    kp-install:
      pkg.installed:
        - pkgs:
          - keepalived

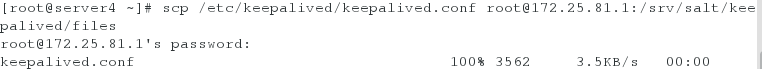

Copy the keepalived configuration file into /srv/salt/keepalived/files on the master:

[root@server1 salt]# mkdir /srv/salt/keepalived/files

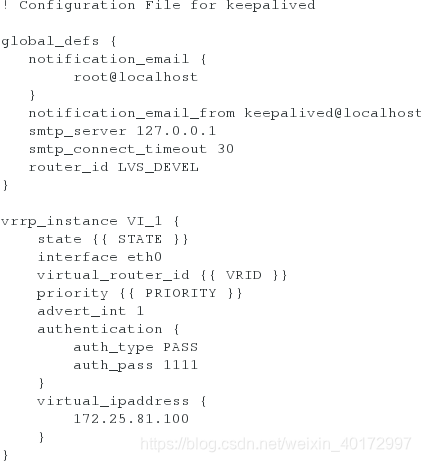

[root@server1 salt]# vim /srv/salt/keepalived/files/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
       notification_email {
         root@localhost
       }
       notification_email_from keepalived@localhost
       smtp_server 127.0.0.1
       smtp_connect_timeout 30
       router_id LVS_DEVEL
    }

    vrrp_instance VI_1 {
        state {{ STATE }}
        interface eth0
        virtual_router_id {{ VRID }}
        priority {{ PRIORITY }}
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            172.25.81.100
        }
    }

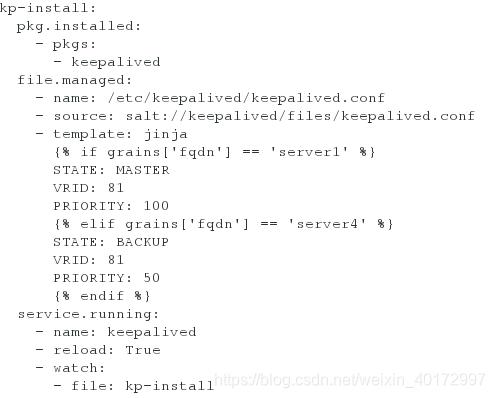
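To preview what each node should end up with, the three placeholders can be filled in by hand. The sketch below is a plain sed substitution standing in for Salt's Jinja rendering, not the renderer itself, and `render_keepalived` is a hypothetical helper name.

```shell
# render_keepalived STATE VRID PRIORITY: read the template on stdin and
# replace the {{ STATE }}, {{ VRID }} and {{ PRIORITY }} placeholders.
render_keepalived() {
    sed -e "s/{{ STATE }}/$1/" \
        -e "s/{{ VRID }}/$2/" \
        -e "s/{{ PRIORITY }}/$3/"
}
```

For example, `render_keepalived MASTER 81 100 < /srv/salt/keepalived/files/keepalived.conf` prints the configuration intended for server1, and `render_keepalived BACKUP 81 50` the one for server4.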

[root@server1 salt]# vim /srv/salt/keepalived/install.sls

    kp-install:
      pkg.installed:
        - pkgs:
          - keepalived
      file.managed:
        - name: /etc/keepalived/keepalived.conf
        - source: salt://keepalived/files/keepalived.conf
        - template: jinja
        # values passed to the keepalived.conf template, chosen per host
        - context:
    {% if grains['fqdn'] == 'server1' %}
            STATE: MASTER
            VRID: 81
            PRIORITY: 100
    {% elif grains['fqdn'] == 'server4' %}
            STATE: BACKUP
            VRID: 81
            PRIORITY: 50
    {% endif %}
      service.running:
        - name: keepalived
        - reload: True
        - watch:
          - file: kp-install

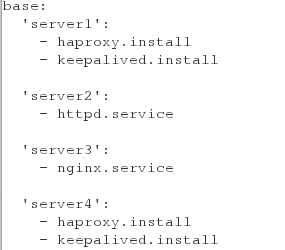

[root@server1 salt]# vim /srv/salt/top.sls

    base:
      'server1':
        - haproxy.install
        - keepalived.install
      'server2':
        - httpd.service
      'server3':
        - nginx.service
      'server4':
        - haproxy.install
        - keepalived.install

[root@server1 salt]# salt '*' state.highstate

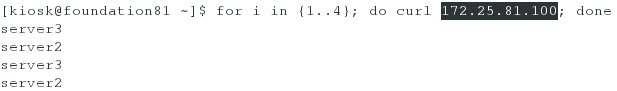

Test:

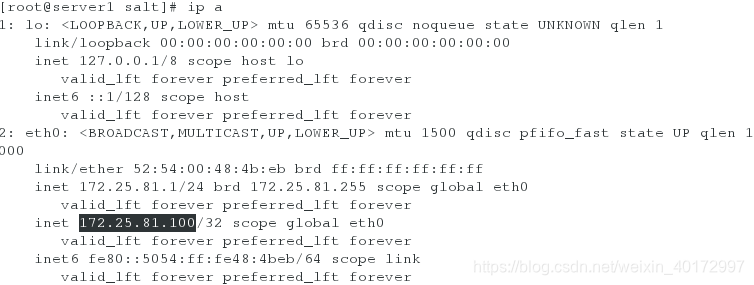

At this point the virtual IP is on server1:

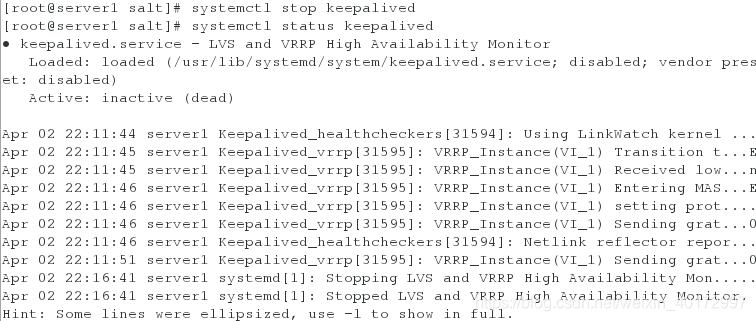

Stop the keepalived service on server1:

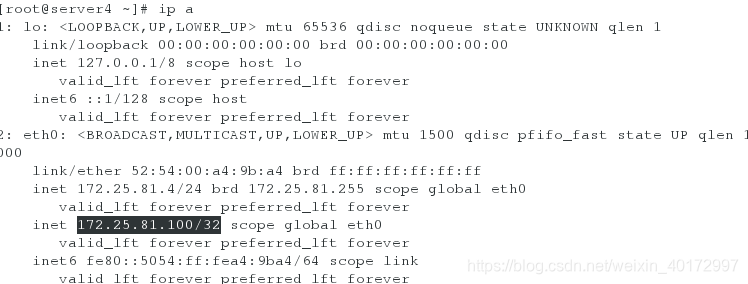

The virtual IP moves over to server4:

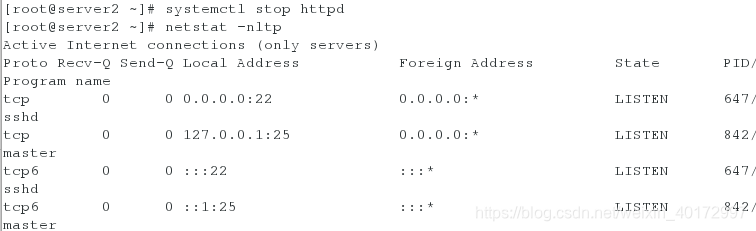

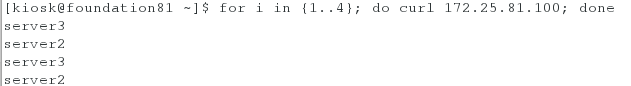
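Which node currently holds the virtual IP can be checked from the command line as well. A small sketch, assuming the VIP 172.25.81.100 and the eth0 interface from the keepalived.conf above; `holds_vip` is an illustrative helper name.

```shell
VIP="${VIP:-172.25.81.100}"

# holds_vip: read `ip -o -4 addr show` output on stdin and succeed
# if the virtual IP is configured on this host.
holds_vip() {
    grep -qF "inet $VIP/"
}
```

Running `ip -o -4 addr show dev eth0 | holds_vip && echo "VIP is on $(hostname)"` on server1 and server4 before and after stopping keepalived should show the address moving from one node to the other.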

Load balancing still works:

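Building on the previous post, this article walks through service deployment with SaltStack: pushing the httpd and nginx services to the nodes, configuring their default pages, and installing and configuring the salt-minion service. It then details how to deploy haproxy + keepalived as the front-end proxy that load-balances across the back-end web servers, and tests both load balancing and virtual IP failover.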
