Environment: Win10 (host) + SecureCRT 7.3 + virtual machines (VMware Workstation Full 15.0.4) + CentOS 7

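Working directory:

On Linux, /opt is conventionally where additional (third-party) software is installed, and it is empty by default. So I put everything Hadoop-related there.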

[root@master opt]# tree -L 1

.

└── bigdata

1 directory, 0 files

[root@master opt]# cd bigdata

[root@master bigdata]# pwd

/opt/bigdata

Create the same directory on all 3 nodes.

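0. Preparation

0.0 Install the environment described above.

Basic system configuration:

- Configure networking

- Configure hostnames and the hosts file

- Firewall

- SSH mutual trust

- JDK installation

0.1 Configure networking (on all 3 nodes; everything is identical except the IP address)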

[root@master master]# cat /etc/sysconfig/network-scripts/ifcfg-ens32

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="static"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens32"

UUID="0585b7f9-58d1-40d5-b330-f46145448de1"

DEVICE="ens32"

ONBOOT="yes"

IPADDR="192.168.11.128"

NETMASK="255.255.255.0"

GATEWAY="192.168.11.2"

DNS1="119.29.29.29"

Summary:

Change one item: BOOTPROTO="static"

Add the following items:

IPADDR="192.168.11.128"

NETMASK="255.255.255.0"

GATEWAY="192.168.11.2"

DNS1="119.29.29.29"
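The edits summarized above can also be scripted. The sketch below uses a hypothetical helper, `set_static_ip`, and assumes GNU sed and a writable ifcfg file. It deletes any existing BOOTPROTO/IPADDR/NETMASK/GATEWAY/DNS1 lines and appends the new values, so running it twice does not create duplicates. Try it on a copy of the file first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply the static-IP settings described above to an
# ifcfg file. It only edits the file; it does not restart the network.
set_static_ip() {
  local file=$1 ip=$2 netmask=$3 gateway=$4 dns=$5
  # Remove old values (including BOOTPROTO="dhcp") so re-runs stay clean.
  sed -i -e '/^BOOTPROTO=/d' -e '/^IPADDR=/d' -e '/^NETMASK=/d' \
         -e '/^GATEWAY=/d' -e '/^DNS1=/d' "$file"
  # Append the static configuration; ifcfg files are plain KEY="value" lines,
  # so the order of keys does not matter.
  {
    printf 'BOOTPROTO="static"\n'
    printf 'IPADDR="%s"\n' "$ip"
    printf 'NETMASK="%s"\n' "$netmask"
    printf 'GATEWAY="%s"\n' "$gateway"
    printf 'DNS1="%s"\n' "$dns"
  } >> "$file"
}
```

For example, on slave1 run `cp /etc/sysconfig/network-scripts/ifcfg-ens32 /tmp/ifcfg-ens32`, then `set_static_ip /tmp/ifcfg-ens32 192.168.11.129 255.255.255.0 192.168.11.2 119.29.29.29`. Compare the copy with the original before replacing it.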

Restart the network service

service network restart

Check the result with ip addr (CentOS 7 uses it; earlier releases used ifconfig). Output like the following means it worked.

[root@slave1 slave1]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:58:dc:c7 brd ff:ff:ff:ff:ff:ff

inet 192.168.11.129/24 brd 192.168.11.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet6 fe80::8e4e:257f:432b:447e/64 scope link noprefixroute

valid_lft forever preferred_lft forever
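To check the address from a script instead of by eye, you can filter the `ip addr` output for one interface. This is a sketch. The function name `ipv4_of` is hypothetical, and it relies only on the layout shown above: a numbered header line per interface, followed by `inet a.b.c.d/nn` lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the IPv4 address(es) of one interface,
# reading `ip addr` output on stdin.
ipv4_of() {
  awk -v ifc="$1" '
    # Header lines look like "2: ens32: <...>"; remember the current interface.
    /^[0-9]+: / { cur = $2; sub(/:$/, "", cur) }
    # "inet a.b.c.d/nn ..." lines under the wanted interface carry the address.
    cur == ifc && $1 == "inet" { split($2, a, "/"); print a[1] }
  '
}
```

On slave1, `ip addr | ipv4_of ens32` should print the address configured above.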

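You can also check Internet access in either of two ways:

Method 1: curl www.baidu.com

Method 2: ping www.baidu.com

0.2 Configure hostnames (on all 3 nodes)

I had already set the hostnames while installing CentOS 7. If yours are not set, set them now; the three nodes are named master, slave1 and slave2 respectively.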

[root@master master]# cat /etc/hostname

master

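After editing /etc/hostname, reboot the server (CentOS 7) for the change to take effect. Alternatively, hostnamectl set-hostname master (with the matching name on each node) applies it without a reboot.

Next, configure the hosts file (identical on all 3 nodes)

# The original default entries can be deleted or kept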

[root@master master]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.11.128 master

192.168.11.129 slave1

192.168.11.130 slave2
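The same three entries must go on every node. Appending them with a small idempotent helper avoids duplicates if the step is repeated. `add_hosts` is a hypothetical name. It ignores comment lines and skips any hostname that is already mapped on some line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" entries to a hosts file,
# skipping hostnames that are already present.
add_hosts() {
  local file=$1 entry ip name
  shift
  for entry in "$@"; do
    ip=${entry%% *}
    name=${entry#* }
    # awk exits 0 if the hostname already appears (as a whole field) on a
    # non-comment line, and 1 otherwise.
    if ! awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}
```

As root on each node: `add_hosts /etc/hosts "192.168.11.128 master" "192.168.11.129 slave1" "192.168.11.130 slave2"`.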

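0.3 Disable SELinux and the system firewall (on all 3 nodes)

SELinux

Temporarily (switches to permissive mode until the next reboot): setenforce 0

Permanently: open /etc/selinux/config with vi and change the SELINUX= line to SELINUX=disabled. This takes effect after a reboot.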
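The same edit can be made non-interactively with sed. This is a minimal sketch that assumes GNU sed (`-i`); the function name `disable_selinux_cfg` is hypothetical. The pattern is anchored on `SELINUX=`, so the neighbouring `SELINUXTYPE=` line is left untouched.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given SELinux config file
# (normally /etc/selinux/config). The new mode applies after a reboot.
disable_selinux_cfg() {
  local cfg=$1
  # ^SELINUX= does not match SELINUXTYPE=..., which must stay as it is.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run `disable_selinux_cfg /etc/selinux/config` and then reboot. After that, `getenforce` should report Disabled.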

System firewall (firewalld)

Stop it for the current session:

[root@slave2 slave2]# systemctl stop firewalld.service

Disable it permanently (it will no longer start at boot):

[root@slave2 slave2]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

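0.4 SSH mutual trust (passwordless login)

Run ssh-keygen -t rsa and press Enter three times to accept the defaults and generate a key pair.

Generate a key pair on all 3 nodes.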

[root@master master]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:cJU72XgIvPFz4y4esXTMuXj03nRKxZHYrpdBIX5geN0 root@master

The key's randomart image is:

+---[RSA 2048]----+

| . ...+...|

| +...o.=.E|

| . .= *.o * |

| o. Oo=.+..|

| S o**. oo|

| . *.o..o|

| +.o.ooo|

| .o.o.+.|

| ... o .|

+----[SHA256]-----+

[root@master master]#

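Create authorized_keys from master's own public key (run on master only)

[root@master master]# cat /root/.ssh/id_rsa.pub > /root/.ssh/authorized_keys # write the public key into authorized_keys

[root@master master]# chmod 600 /root/.ssh/authorized_keys # restrict to owner read/write

On master, append the public keys of slave1 and slave2 to master's authorized_keys. Only slave1 is shown below.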

[root@master master]# ssh slave1 cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

The authenticity of host 'slave1 (192.168.11.129)' can't be established.

ECDSA key fingerprint is SHA256:frd5jDze5XA5EElyErJ8Ifla2G4u4sRFK/UO3PWOo4o.

ECDSA key fingerprint is MD5:94:ef:ac:65:b2:cf:50:95:d5:5f:21:52:af:8b:f7:85.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave1,192.168.11.129' (ECDSA) to the list of known hosts.

root@slave1's password:

[root@master master]#

On master, copy this combined authorized_keys file to slave1 and slave2. Only slave1 is shown below:

[root@master master]# scp /root/.ssh/authorized_keys root@slave1:/root/.ssh/authorized_keys

root@slave1's password:

authorized_keys 100% 1179 821.7KB/s 00:00
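You can think of the key collection above as a merge of public-key files. The sketch below assumes the slave keys have already been fetched into local files, for example with `ssh slave1 cat /root/.ssh/id_rsa.pub > /tmp/slave1.pub` (these file names are hypothetical). The hypothetical `merge_keys` helper drops duplicate lines, which keeps authorized_keys clean if the step is run twice. It also sets mode 600, as in the chmod above. OpenSSH's `ssh-copy-id` is a standard alternative for pushing one key to one host.

```bash
#!/usr/bin/env bash
# Hypothetical helper: merge public-key files into one authorized_keys file,
# keeping the first copy of each key and restricting permissions to 600.
merge_keys() {
  local out=$1
  shift
  # awk '!seen[$0]++' prints each distinct non-empty line once, in order.
  cat "$@" | awk 'NF && !seen[$0]++' > "$out.tmp" && mv "$out.tmp" "$out"
  chmod 600 "$out"
}
```

For example: `merge_keys /root/.ssh/authorized_keys /root/.ssh/id_rsa.pub /tmp/slave1.pub /tmp/slave2.pub`, then scp the result to the slaves as shown above.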

Passwordless SSH test (only slave2 is shown):

[root@master master]# ssh slave2 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:80:fe:db brd ff:ff:ff:ff:ff:ff

inet 192.168.11.130/24 brd 192.168.11.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet6 fe80::a5f9:64ef:e953:c351/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master master]# ssh slave2 ping www.baidu.com

PING www.baidu.com (14.215.177.38) 56(84) bytes of data.

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=1 ttl=128 time=10.6 ms

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=2 ttl=128 time=9.70 ms

64 bytes from 14.215.177.38 (14.215.177.38): icmp_seq=3 ttl=128 time=9.55 ms

^CKilled by signal 2.

[root@master master]#

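0.5 Install the JDK

[I did a minimal install of CentOS 7, so there is no JDK]

First check whether a JDK is already installed and whether it meets the requirements. If it does not, uninstall it. [Checked on all 3 nodes]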

[root@master master]# rpm -qa|grep java

To uninstall:

rpm -e --nodeps <JDK package name>
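Before running `rpm -e --nodeps`, it helps to review exactly which packages would be removed. This sketch uses a hypothetical function, `java_uninstall_cmds`, and only prints the commands; it does not run them. It matches any package name containing java or jdk, case-insensitively, so the list may include packages such as tzdata-java that you may want to keep.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read package names (e.g. from `rpm -qa`) on stdin and
# print an uninstall command for each Java/JDK package, without executing it.
java_uninstall_cmds() {
  grep -Ei 'java|jdk' | while read -r pkg; do
    printf 'rpm -e --nodeps %s\n' "$pkg"
  done
}
```

Usage: `rpm -qa | java_uninstall_cmds`. Review the output, then run only the lines you want.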

Ways to transfer files from Windows to Linux:

- SFTP in SecureCRT on Windows, or SSHSecureShellClient

- Install lrzsz on Linux

- Download a file from a URL with curl

In the master tab, right-click and choose [Connect SFTP session]

sftp> put E:\share\jdk-8u211-linux-x64.tar.gz

Uploading jdk-8u211-linux-x64.tar.gz to /home/master/jdk-8u211-linux-x64.tar.gz

100% 190420KB 47605KB/s 00:00:04

E:/share/jdk-8u211-linux-x64.tar.gz: 194990602 bytes transferred in 4 seconds (47605 KB/s)

sftp>

How to set specific upload and download directories for SFTP is still to be worked out.

[root@master bigdata]# cd /home/master

[root@master master]# ls

jdk-8u211-linux-x64.tar.gz

[root@master master]# mv jdk-8u211-linux-x64.tar.gz /opt/bigdata

# Extract the JDK archive

[root@master bigdata]# tar zxvf jdk-8u211-linux-x64.tar.gz

...

# Configure environment variables: append the following to the end of the file

[root@master jdk1.8.0_211]# vi /etc/profile

export JAVA_HOME=/opt/bigdata/jdk1.8.0_211

export JAVA_BIN=/opt/bigdata/jdk1.8.0_211/bin

export JRE_HOME=/opt/bigdata/jdk1.8.0_211/jre

export CLASSPATH=/opt/bigdata/jdk1.8.0_211/jre/lib:/opt/bigdata/jdk1.8.0_211/lib:/opt/bigdata/jdk1.8.0_211/jre/lib/charsets.jar

export PATH=$PATH:$JAVA_BIN:$JRE_HOME/bin
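You can also append these lines in one step with a here-document. This sketch assumes the JDK was extracted to /opt/bigdata/jdk1.8.0_211; `append_java_env` is a hypothetical helper. Each variable is exported, so child processes such as the Hadoop start scripts inherit it. The here-document escapes `\$`, so the references expand when the profile is sourced, not when it is written.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append exported JDK variables to a profile file.
# $jdk is expanded now; the escaped \$ references are expanded at source time.
append_java_env() {
  local profile=$1 jdk=$2
  cat >> "$profile" <<EOF
export JAVA_HOME=$jdk
export JRE_HOME=\$JAVA_HOME/jre
export CLASSPATH=\$JRE_HOME/lib:\$JAVA_HOME/lib:\$JRE_HOME/lib/charsets.jar
export PATH=\$PATH:\$JAVA_HOME/bin:\$JRE_HOME/bin
EOF
}
```

Usage: `append_java_env /etc/profile /opt/bigdata/jdk1.8.0_211 && source /etc/profile`. Run it only once per file, because each call appends another copy.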

# Reload the environment variables

[root@master jdk1.8.0_211]# source /etc/profile

# Check that the JDK is configured correctly

[root@master jdk1.8.0_211]# java -version

java version "1.8.0_211"

Java(TM) SE Runtime Environment (build 1.8.0_211-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.211-b12, mixed mode)

Install the JDK on the other two (slave) nodes

# Copy the environment settings to the slaves

[root@master jdk1.8.0_211]# scp /etc/profile root@slave1:/etc/profile

profile 100% 2089 1.3MB/s 00:00

[root@master jdk1.8.0_211]# scp /etc/profile root@slave2:/etc/profile

profile 100% 2089 1.3MB/s 00:00

# Copy the JDK directory to the slaves

[root@master jdk1.8.0_211]# scp -r /opt/bigdata/jdk1.8.0_211 root@slave1:/opt/bigdata/jdk1.8.0_211

...

[root@master jdk1.8.0_211]# scp -r /opt/bigdata/jdk1.8.0_211 root@slave2:/opt/bigdata/jdk1.8.0_211

Likewise, on each slave reload the environment variables and check that the JDK works.
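The copy steps above can be looped over the slaves. The sketch below uses a hypothetical function, `distribute_jdk`. It copies the JDK into the parent directory /opt/bigdata/ rather than to /opt/bigdata/jdk1.8.0_211. With `scp -r`, if the target directory already exists, the source is nested inside it; copying to the parent behaves the same on a re-run. Setting `RUN=echo` prints the commands instead of running them. The last command sources /etc/profile explicitly because a non-interactive `ssh host cmd` does not read it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: push /etc/profile and the JDK to each slave, then
# check java there. With RUN=echo the commands are only printed.
distribute_jdk() {
  local jdk=$1 host
  shift
  for host in "$@"; do
    ${RUN:-} scp /etc/profile "root@$host:/etc/profile"
    # Copy into the parent directory so a repeated run does not nest the JDK.
    ${RUN:-} scp -r "$jdk" "root@$host:$(dirname "$jdk")/"
    ${RUN:-} ssh "root@$host" 'source /etc/profile && java -version'
  done
}
```

Preview with `RUN=echo distribute_jdk /opt/bigdata/jdk1.8.0_211 slave1 slave2`, then run it without `RUN=echo` on master.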

1. Install Hadoop

Related reading: "What changed between Hadoop 2.x and Hadoop 3.x" and "Hadoop 3 new features and port number changes".

In the SFTP session window:

sftp> pwd

/opt/bigdata

sftp> put E:\share\hadoop-2.6.5.tar.gz

Uploading hadoop-2.6.5.tar.gz to /opt/bigdata/hadoop-2.6.5.tar.gz

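put: failed to upload E:/share/hadoop-2.6.5.tar.gz. Access denied.

On master, give other users write permission on the bigdata directory. The SFTP session is logged in as the non-root user master, as the earlier upload path /home/master shows. After that, the SFTP put succeeds.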

[root@master opt]# chmod a+w bigdata

Extract the archive in /opt/bigdata (tar zxvf hadoop-2.6.5.tar.gz), then edit the configuration files

[root@master hadoop]# pwd

/opt/bigdata/hadoop-2.6.5/etc/hadoop

# Config file 1: hadoop-env.sh

[root@master hadoop]# cat /opt/bigdata/hadoop-2.6.5/etc/hadoop/hadoop-env.sh

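# The file already contains export JAVA_HOME=${JAVA_HOME}, so it may look as if nothing needs to be added. That is wrong: the daemons on the slaves are started over non-interactive SSH, where the JAVA_HOME set in /etc/profile is not available. The line must be changed to: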

export JAVA_HOME=/opt/bigdata/jdk1.8.0_211

[root@master hadoop]# echo ${JAVA_HOME}

/opt/bigdata/jdk1.8.0_211

# Config file 2: yarn-env.sh

[root@master hadoop]# vi /opt/bigdata/hadoop-2.6.5/etc/hadoop/yarn-env.sh

...

#export JAVA_HOME=${JAVA_HOME} # this line must be changed to:

export JAVA_HOME=/opt/bigdata/jdk1.8.0_211

...

# Config file 3: slaves. List the hostnames of the slave nodes

[root@master hadoop]# vi /opt/bigdata/hadoop-2.6.5/etc/hadoop/slaves

slave1

slave2

# Config file 4: core-site.xml. Set the default file system (the NameNode RPC address) and the temporary directory

[root@master hadoop]# vi /opt/bigdata/hadoop-2.6.5/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.11.128:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/bigdata/hadoop-2.6.5/tmp</value>

</property>

</configuration>

# Config file 5: hdfs-site.xml

[root@master hadoop]# vi /opt/bigdata/hadoop-2.6.5/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/bigdata/hadoop-2.6.5/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/bigdata/hadoop-2.6.5/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value> <!-- only 2 DataNodes (slave1, slave2), so no more than 2 replicas can be placed -->

</property>

</configuration>
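Hadoop does not reject unknown property names. A typo such as `dfs.datanode.dir` is simply ignored, and the default applies instead. For that reason, a small reader for this one-tag-per-line layout is handy for double-checking names and values. `hconf_get` is a hypothetical helper, not an XML parser. Once Hadoop is on the PATH, `hdfs getconf -confKey <key>` reports the value as Hadoop itself resolves it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of property $2 in Hadoop config
# file $1. Assumes the <name>/<value> layout used in this post.
hconf_get() {
  awk -v key="$2" '
    # Remember the text between <name> and </name>.
    /<name>/ { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    # On the following <value> line, print it if the name matched, then reset.
    /<value>/ {
      if (n == key) { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); print v }
      n = ""
    }
  ' "$1"
}
```

Example: `hconf_get /opt/bigdata/hadoop-2.6.5/etc/hadoop/hdfs-site.xml dfs.replication`. Empty output means the property is not in the file under that exact name.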

# Config file 6: mapred-site.xml

[root@master hadoop]# pwd

/opt/bigdata/hadoop-2.6.5/etc/hadoop

[root@master hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@master hadoop]# vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

# Config file 7: yarn-site.xml

[root@master hadoop]# vi /opt/bigdata/hadoop-2.6.5/etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>
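Most of these *-site.xml files are just lists of `<property>` blocks, so you can also generate them from name=value pairs. This is a minimal sketch using the hypothetical helper `hconf_xml`. It does not escape XML special characters (`&`, `<`); none of the values in this post contain them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a <configuration> element with one <property>
# per "name=value" argument (split at the first "=").
hconf_xml() {
  local kv
  printf '<configuration>\n'
  for kv in "$@"; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "${kv%%=*}" "${kv#*=}"
  done
  printf '</configuration>\n'
}
```

For example, `hconf_xml mapreduce.framework.name=yarn > mapred-site.xml` produces the same property as config file 6.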

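Here core-site.xml holds the global settings (see the official documentation for the full list). hdfs-site.xml, mapred-site.xml and yarn-site.xml hold the settings specific to HDFS, MapReduce and YARN respectively.

Create the temporary and data directories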

[root@master hadoop-2.6.5]# pwd

/opt/bigdata/hadoop-2.6.5

[root@master hadoop-2.6.5]# ls

bin include libexec NOTICE.txt sbin

etc lib LICENSE.txt README.txt share

[root@master hadoop-2.6.5]# mkdir tmp

[root@master hadoop-2.6.5]# mkdir -p dfs/name

[root@master hadoop-2.6.5]# mkdir -p dfs/data

[root@master hadoop-2.6.5]# ls

bin etc lib LICENSE.txt README.txt share

dfs include libexec NOTICE.txt sbin tmp

Configure environment variables: append the following to the end of /etc/profile

[root@master dfs]# vi /etc/profile

export HADOOP_HOME=/opt/bigdata/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin

Copy the environment settings to the slaves, then reload them

[root@master dfs]# scp /etc/profile root@slave1:/etc/profile

profile 100% 2162 1.5MB/s 00:00

[root@master dfs]# scp /etc/profile root@slave2:/etc/profile

profile

[root@master dfs]# source /etc/profile

Copy the Hadoop directory to the slaves, then reload the environment variables on both slaves

[root@master dfs]# scp -r /opt/bigdata/hadoop-2.6.5 root@slave1:/opt/bigdata/hadoop-2.6.5

...

[root@master dfs]# scp -r /opt/bigdata/hadoop-2.6.5 root@slave2:/opt/bigdata/hadoop-2.6.5

...

On slave1 and slave2:

source /etc/profile

Format the NameNode on master (in Hadoop 2.x, hdfs namenode -format is the non-deprecated form of the same command)

[root@master hadoop-2.6.5]# hadoop namenode -format

...

...

19/06/02 03:30:47 INFO common.Storage: Storage directory /opt/bigdata/hadoop-2.6.5/dfs/name has been successfully formatted.

...

This line means the NameNode was formatted successfully.
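When formatting from a script, you can check for this success message automatically. This sketch uses a hypothetical helper, `format_ok`. It reads the whole log on stdin; awk is used instead of `grep -q` so the producer is not cut off early. It succeeds only if the message above appears. Hadoop's console log lines typically go to stderr, hence the `2>&1` in the usage below.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the NameNode format output on stdin
# contains the "successfully formatted" message. Reads all input.
format_ok() {
  awk '/has been successfully formatted/ { ok = 1 } END { exit !ok }'
}
```

Usage: `hdfs namenode -format 2>&1 | tee /tmp/nn-format.log | format_ok && echo "NameNode formatted"`. The log path is only an example.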

Start the cluster

[root@master hadoop-2.6.5]# /opt/bigdata/hadoop-2.6.5/sbin/start-all.sh

... (fine as long as no errors are reported)

[root@master hadoop-2.6.5]# jps

8994 ResourceManager

8723 NameNode

8856 SecondaryNameNode

9084 Jps

On the slave nodes:

[root@slave1 bigdata]# jps

22824 Jps

22701 NodeManager

22639 DataNode

If these processes are running, Hadoop has started successfully.
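You can automate the jps check by comparing the daemon names against the expected list for each node. `check_daemons` is a hypothetical helper that reads `jps` output on stdin. For the slaves, run jps through a shell that has sourced /etc/profile, since a non-interactive SSH command does not read it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that each daemon named in the arguments appears
# in `jps` output (stdin). Prints missing daemons; returns 1 if any are absent.
check_daemons() {
  local out d missing=0
  out=$(awk '{print $2}')   # jps lines are "<pid> <name>"
  for d in "$@"; do
    if ! printf '%s\n' "$out" | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}
```

On master: `jps | check_daemons NameNode SecondaryNameNode ResourceManager`. From master for a slave: `ssh slave1 'source /etc/profile; jps' | check_daemons DataNode NodeManager`.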

On the Windows host, edit the local hosts file and add the host entries

Path: C:\Windows\System32\drivers\etc\hosts

Content:

192.168.11.128 master

192.168.11.129 slave1

192.168.11.130 slave2

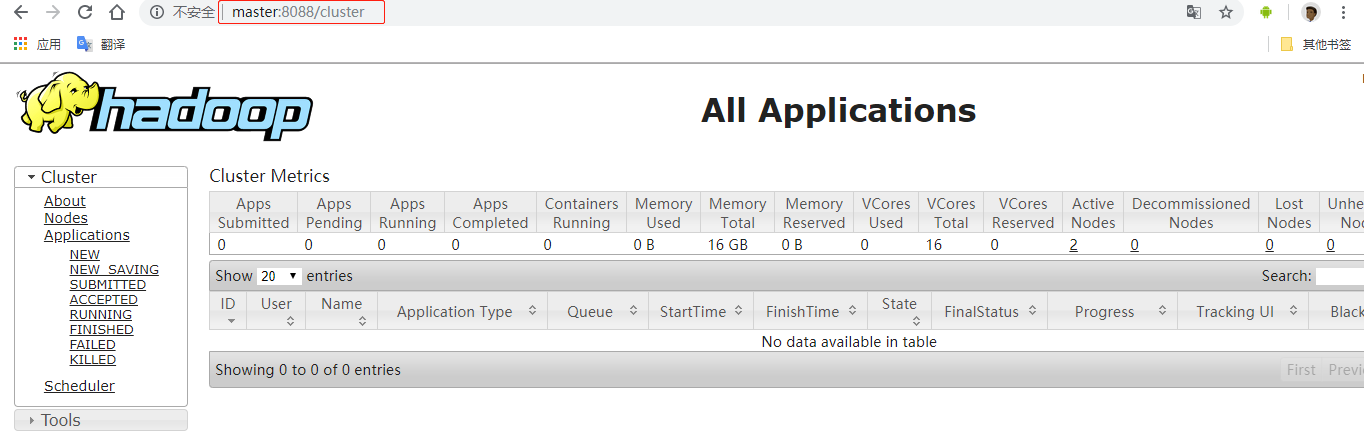

A browser on the host can then open the YARN web UI: http://master:8088/cluster

Stop the cluster (on master)

[root@master hadoop-2.6.5]# /opt/bigdata/hadoop-2.6.5/sbin/stop-all.sh

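This post walks through building a Hadoop 2.6.5 cluster on CentOS 7 with SecureCRT and VMware. It covers network configuration, SSH mutual trust, JDK installation, the Hadoop configuration files and environment variables, and finally starting and verifying the cluster.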
