These are study notes on the book Deep Learning with Python.

Part2 深度学习实践

1-4章主要是介绍深度学习,以及其工作原理,5-9章将通过实践培养出如何用深度学习解决实际问题。

第5章 深度学习用于计算机视觉

- 理解卷积神经网络

- 使用数据增强来降低过拟合

- 使用预训练的卷积神经网络进行特征提取

- 微调预训练的卷积神经网络

- 将卷积神经网络学到的内容及其如何做出分类决策可视化

5.1 卷积神经网络简介

深入介绍神经网络原理,以及在计算机视觉任务上为何如此成功。

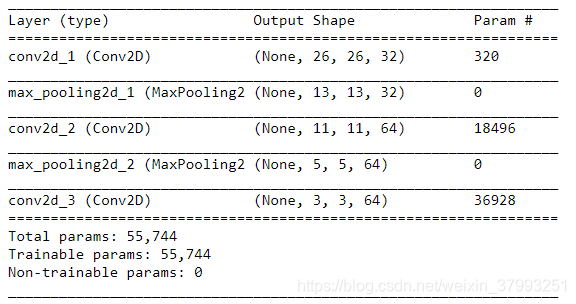

下面是Conv2D层和MaxPooling2D层的堆叠。

# 5-1 实例化一个小型的神经网络

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation = 'relu', input_shape = (28, 28, 1)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model.summary()

As the summary shows, the output of every Conv2D and MaxPooling2D layer is a 3D tensor of shape (height, width, channels).

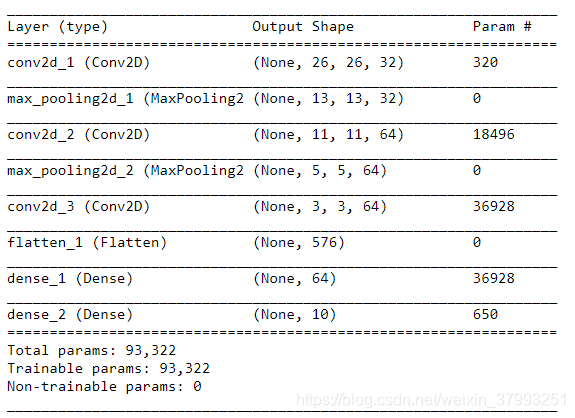

# 5-2 Adding a classifier on top of the convnet

model.add(layers.Flatten())

model.add(layers.Dense(64, activation = 'relu'))

model.add(layers.Dense(10, activation = 'softmax'))

model.summary()

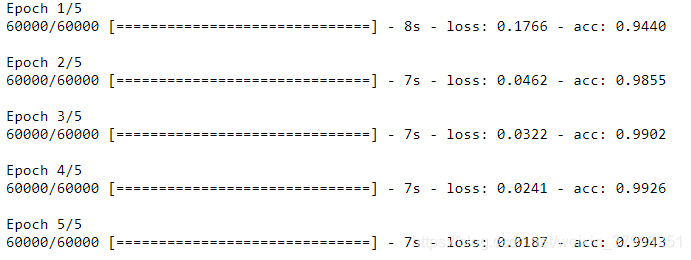

# 5-3 Training the convnet on MNIST images

from keras.datasets import mnist

from keras.utils import to_categorical

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

train_images = train_images.reshape((60000, 28, 28 ,1))

train_images = train_images.astype('float32') / 255

test_images = test_images.reshape((10000, 28, 28, 1))

test_images = test_images.astype('float32') / 255

train_labels = to_categorical(train_labels)

test_labels = to_categorical(test_labels)

model.compile(optimizer = 'rmsprop',

loss = 'categorical_crossentropy',

metrics = ['accuracy'])

model.fit(train_images, train_labels, epochs = 5, batch_size = 64)

# Evaluate the model on the test data

test_loss, test_acc = model.evaluate(test_images, test_labels)

print(test_acc)

# 0.99129999999999996

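5.1.1 The convolution operation

Densely connected layers learn global patterns in their input feature space.

Convolution layers learn local patterns.

- The patterns a convnet learns are translation invariant. After learning a pattern in the lower-right corner of a picture, a convnet can recognize it anywhere; a densely connected network would have to learn the pattern anew at each location. (The visual world is fundamentally translation invariant.)

- Convnets can learn spatial hierarchies of patterns. A first convolution layer learns small local patterns such as edges, a second layer learns larger patterns made of the features of the first layer, and so on, which lets convnets learn increasingly complex and abstract visual concepts. (The visual world is fundamentally spatially hierarchical.)

Convolutions operate over 3D tensors called feature maps, with two spatial axes (height and width) and a depth axis (channels).

- padding: "same" pads the input so that the output has the same width and height as the input; "valid" means no padding (the default)

- stride: the distance between two successive windows (default 1)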
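The effect of padding and stride on the output size can be checked with a little arithmetic. The sketch below is only an illustration: the helper name `conv_output_size` is made up for these notes, and it assumes the usual Keras conventions for "valid" and "same" padding along one spatial axis.

```python
import math

def conv_output_size(input_size, window, stride=1, padding="valid"):
    """Output length along one spatial axis (hypothetical helper, not a Keras API)."""
    if padding == "same":
        # With "same" padding every input position is covered: ceil(input / stride)
        return math.ceil(input_size / stride)
    if padding == "valid":
        # Without padding, only windows that fit entirely inside the input count
        return (input_size - window) // stride + 1
    raise ValueError("padding must be 'valid' or 'same'")

print(conv_output_size(28, 3))                  # 26: a 3x3 window shrinks 28 to 26
print(conv_output_size(28, 3, padding="same"))  # 28: size preserved
print(conv_output_size(26, 2, stride=2))        # 13: a 2x2 window with stride 2 halves the map
```

The same formula covers pooling, since a pooling window slides over the input exactly like a convolution window.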

5.1.2 最大池化运算

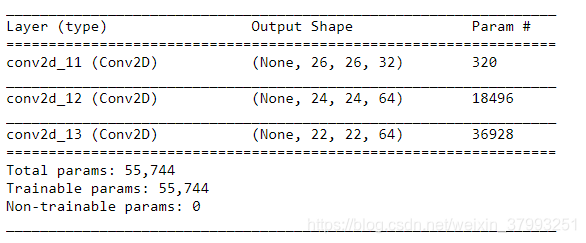

在每次MaxPoolingD后,特征图的尺寸会减半。最大池化通常使用2x2的窗口或者步幅2。而卷积通常使用3x3的窗口或者步幅1。

model_no_max_pool = models.Sequential()

model_no_max_pool.add(layers.Conv2D(32, (3, 3), activation = 'relu', input_shape = (28, 28, 1)))

model_no_max_pool.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model_no_max_pool.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model_no_max_pool.summary()

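This setup has two problems:

- It isn't conducive to learning a spatial hierarchy of features. The 3x3 windows in the third layer only contain information coming from 7x7 windows in the initial input, so the high-level patterns learned by the convnet remain very small relative to the initial input.

- The final feature map has 22 x 22 x 64 = 30,976 coefficients per sample. Flattening it and stacking a Dense layer on top would give far too many parameters for such a small model and lead to severe overfitting.

Note that max pooling isn't the only way to achieve such downsampling. As you already know, you can also use strides in the prior convolution layer. You can also use average pooling instead of max pooling, where each local input patch is transformed by taking the average value of each channel over the patch, rather than the max. But max pooling tends to work better than these alternatives. In a nutshell, the reason is that features tend to encode the spatial presence of some pattern or concept over the different tiles of the feature map (hence the term feature map), and it's more informative to look at the maximal presence of different features than at their average presence. So the most reasonable subsampling strategy is to first produce dense maps of features (via unstrided convolutions) and then look at the maximal activation of the features over small patches, rather than looking at sparser windows of the inputs (via strided convolutions) or averaging input patches, which could cause you to miss or dilute feature-presence information.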
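The argument about missing or diluting feature-presence information can be seen on a tiny example. This is a minimal NumPy sketch, not Keras code: `pool2x2` is a hypothetical helper, and the 4x4 array stands in for one channel of a feature map with one strong and one weak activation.

```python
import numpy as np

def pool2x2(feature_map, reduce_fn):
    """Apply reduce_fn to each non-overlapping 2x2 patch of a 2D map (hypothetical helper)."""
    h, w = feature_map.shape
    patches = feature_map[:h - h % 2, :w - w % 2].reshape(h // 2, 2, w // 2, 2)
    return reduce_fn(patches, axis=(1, 3))

fmap = np.array([[0., 0., 0., 0.],
                 [0., 9., 0., 0.],
                 [0., 0., 0., 0.],
                 [0., 0., 0., 1.]])

max_pooled = pool2x2(fmap, np.max)   # keeps both activations at full strength
avg_pooled = pool2x2(fmap, np.mean)  # dilutes them by a factor of 4
strided = fmap[::2, ::2]             # sparse sampling misses them entirely

print(max_pooled)  # [[9. 0.] [0. 1.]]
print(avg_pooled)  # [[2.25 0.] [0. 0.25]]
print(strided)     # all zeros
```

All three outputs are 2x2, but only max pooling still reports that the pattern was present at full strength.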

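5.2 Training a convnet from scratch on a small dataset

5.2.1 The relevance of deep learning for small-data problems

Deep learning generally needs a lot of data. But if the model is small and well regularized and the task is simple, a few hundred samples can be enough.

Deep-learning models are also highly repurposable. In computer vision in particular, many pretrained models are publicly available for download.

5.2.2 Downloading the data

The dataset for this chapter is available on Kaggle at https://www.kaggle.com/c/dogs-vs-cats/data. It contains 25,000 images of cats and dogs and is 543 MB in size. We create three smaller subsets: a training set with 1,000 samples of each class, a validation set with 500 samples of each class, and a test set with 500 samples of each class.

# 5-4 Copying images to training, validation, and test directories
import os, shutil

original_dataset_dir = "D:/kaggle_data/kaggle_original_data/train"

# Directory where the smaller dataset is stored
base_dir = 'D:/kaggle_data/cats_and_dogs_small'
os.mkdir(base_dir)

os.mkdir(): creates a directory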

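os.path.join(): joins path components

- If a component is an absolute path (it starts with "/"), all previous components are discarded and joining continues from that component.

- The rule above takes precedence. A component that starts with "./" is relative, so it is simply appended to the components before it; nothing is discarded.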
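These joining rules are easy to verify directly. The sketch below uses `posixpath`, the POSIX flavour of `os.path` from the standard library, so that the results do not depend on the operating system; the path names are placeholders chosen for illustration.

```python
import posixpath  # same functions as os.path, but always with "/" semantics

joined = posixpath.join("data", "cats_and_dogs_small", "train")
absolute = posixpath.join("data", "/abs", "train")    # "/abs" discards "data"
relative = posixpath.join("data", "./train", "cats")  # "./train" is just appended
cleaned = posixpath.normpath(relative)                # normpath removes the "./"

print(joined)    # data/cats_and_dogs_small/train
print(absolute)  # /abs/train
print(relative)  # data/./train/cats
print(cleaned)   # data/train/cats
```

On Windows, `os.path.join` follows the same logic but with drive letters and backslashes, which is why forward-slash paths are used throughout these notes.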

# Directories for the training, validation, and test splits
train_dir = os.path.join(base_dir, 'train')
os.mkdir(train_dir)
validation_dir = os.path.join(base_dir, 'validation')
os.mkdir(validation_dir)
test_dir = os.path.join(base_dir, 'test')
os.mkdir(test_dir)

# Cat and dog directories inside each split
train_cats_dir = os.path.join(train_dir, 'cats')
os.mkdir(train_cats_dir)
train_dogs_dir = os.path.join(train_dir, 'dogs')
os.mkdir(train_dogs_dir)
validation_cats_dir = os.path.join(validation_dir, 'cats')
os.mkdir(validation_cats_dir)
validation_dogs_dir = os.path.join(validation_dir, 'dogs')
os.mkdir(validation_dogs_dir)
test_cats_dir = os.path.join(test_dir, 'cats')
os.mkdir(test_cats_dir)
test_dogs_dir = os.path.join(test_dir, 'dogs')
os.mkdir(test_dogs_dir)

# Copy the images into the directories

# (filename pattern, index range, destination directory) for each subset
subsets = [
    ('cat.{}.jpg', range(1000), train_cats_dir),
    ('cat.{}.jpg', range(1000, 1500), validation_cats_dir),
    ('cat.{}.jpg', range(1500, 2000), test_cats_dir),
    ('dog.{}.jpg', range(1000), train_dogs_dir),
    ('dog.{}.jpg', range(1000, 1500), validation_dogs_dir),
    ('dog.{}.jpg', range(1500, 2000), test_dogs_dir),
]
for pattern, indices, dst_dir in subsets:
    for i in indices:
        fname = pattern.format(i)
        src = os.path.join(original_dataset_dir, fname)
        dst = os.path.join(dst_dir, fname)
        shutil.copyfile(src, dst)

# Sanity check: count the images in each directory
print('total training cat images:', len(os.listdir(train_cats_dir)))
print('total training dog images:', len(os.listdir(train_dogs_dir)))
print('total validation cat images:', len(os.listdir(validation_cats_dir)))
print('total validation dog images:', len(os.listdir(validation_dogs_dir)))
print('total test cat images:', len(os.listdir(test_cats_dir)))
print('total test dog images:', len(os.listdir(test_dogs_dir)))

'''

total training cat images: 1000

total training dog images: 1000

total validation cat images: 500

total validation dog images: 500

total test cat images: 500

total test dog images: 500

'''

5.2.3 Building the network

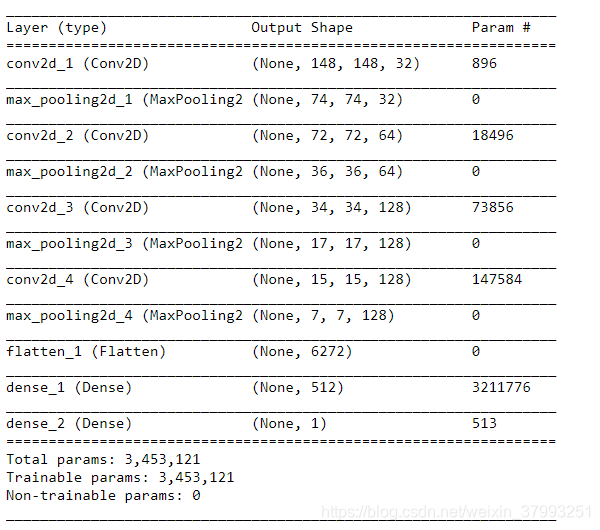

# 5-5 Instantiating a small convnet for dogs vs. cats classification

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation = 'relu', input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D(2, 2))

model.add(layers.Conv2D(64, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D(2, 2))

model.add(layers.Conv2D(128, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D(2, 2))

model.add(layers.Conv2D(128, (3, 3), activation = 'relu'))

model.add(layers.MaxPooling2D(2, 2))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation = 'relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

model.summary()
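The shapes printed by the summary can be predicted by hand: each unpadded 3x3 convolution trims 2 pixels from the height and width, and each 2x2 max pooling halves them (rounding down). The sketch below only does this arithmetic; `trace_sizes` and the layer labels are names invented for illustration, not part of Keras.

```python
def trace_sizes(size, layers):
    """Follow one spatial dimension through a stack of layers (hypothetical helper)."""
    sizes = []
    for kind in layers:
        if kind == "conv3x3":      # 'valid' 3x3 convolution: size - 2
            size = size - 2
        elif kind == "pool2x2":    # 2x2 max pooling, stride 2: floor(size / 2)
            size = size // 2
        sizes.append(size)
    return sizes

layers = ["conv3x3", "pool2x2"] * 4   # four Conv2D + MaxPooling2D stages
sizes = trace_sizes(150, layers)
flattened = sizes[-1] ** 2 * 128      # the last stage has 128 channels

print(sizes)      # [148, 74, 72, 36, 34, 17, 15, 7]
print(flattened)  # 6272 values feed the Dense(512) layer
```

The feature maps shrink from 150x150 to 7x7 while the depth grows, a pattern seen in almost all convnets.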

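For the compilation step, use the RMSprop optimizer. Because the network ends with a single sigmoid unit, use binary crossentropy as the loss.

# 5-6 Configuring the model for training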

from keras import optimizers

model.compile(loss = 'binary_crossentropy',

optimizer = optimizers.RMSprop(lr = 1e-4),

metrics = ['acc'])

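5.2.4 Data preprocessing

(1) Read the picture files.

(2) Decode the JPEG content to RGB grids of pixels.

(3) Convert these into floating-point tensors.

(4) Rescale the pixel values to the [0, 1] interval.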
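Steps (3) and (4) can be sketched with NumPy alone. To keep the example self-contained, a random array stands in for the decoded JPEG of steps (1) and (2); that stand-in and the variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a decoded JPEG: a 150x150 RGB grid of 8-bit pixel values (0-255)
pixels = rng.integers(0, 256, size=(150, 150, 3), dtype=np.uint8)

tensor = pixels.astype("float32")       # step (3): floating-point tensor
tensor /= 255.0                         # step (4): rescale to [0, 1]
batch = np.expand_dims(tensor, axis=0)  # models expect a leading batch axis

print(batch.shape, batch.dtype)  # (1, 150, 150, 3) float32
```

Neural networks tend to train better on small input values, which is the reason for dividing by 255.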

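keras.preprocessing.image provides the ImageDataGenerator class, which quickly sets up Python generators that turn image files on disk into batches of preprocessed tensors.

ImageDataGenerator(rescale = 1./255): rescales all pixel values by 1/255

keras.preprocessing.image.ImageDataGenerator.flow_from_directory(): reads all the images under a directory, treating each subdirectory as one class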

flow_from_directory(self, directory,
                    target_size=(256, 256), color_mode='rgb',
                    classes=None, class_mode='categorical',
                    batch_size=32, shuffle=True, seed=None,
                    save_to_dir=None,
                    save_prefix='',
                    save_format='jpeg',
                    follow_links=False)

# 5-7 Using ImageDataGenerator to read images from directories

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale = 1./255)

test_datagen = ImageDataGenerator(rescale = 1./255)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 20,

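class_mode = 'binary')

# Found 2000 images belonging to 2 classes.
# Found 1000 images belonging to 2 classes.

Look at the output of one of these generators:

for data_batch, labels_batch in train_generator:
    print('data batch shape:', data_batch.shape)
    print('labels batch shape:', labels_batch.shape)
    break

# data batch shape: (20, 150, 150, 3)
# labels batch shape: (20,)

train_generator behaves like an iterator that yields batches indefinitely, which is why the loop above needs a break. The model is fitted with fit_generator, whose steps_per_epoch argument says how many batches to draw before declaring an epoch over.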

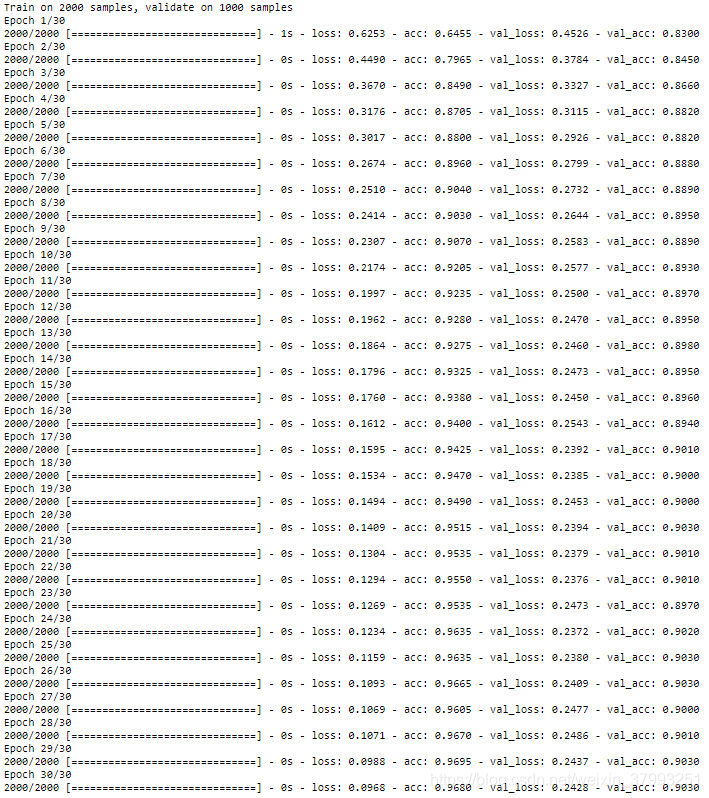

# 5-8 Fitting the model using a batch generator

history = model.fit_generator(

train_generator,

steps_per_epoch = 100,

epochs = 30,

validation_data = validation_generator,

validation_steps = 50)
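The values 100 and 50 above are not arbitrary: they are the number of batches needed to see every sample once. A minimal sketch of that arithmetic follows; the helper name `steps_for_one_pass` is hypothetical.

```python
import math

def steps_for_one_pass(n_samples, batch_size):
    """Number of batches needed to cover n_samples once (hypothetical helper)."""
    return math.ceil(n_samples / batch_size)

print(steps_for_one_pass(2000, 20))  # 100 training steps per epoch
print(steps_for_one_pass(1000, 20))  # 50 validation steps
print(steps_for_one_pass(2000, 32))  # 63 if the batch size is raised to 32
```

If the batch size changes, the step counts should be recomputed so that one epoch still corresponds to one pass over the data.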

# 5-9 Saving the model

model.save('cats_and_dogs_small_1.h5')

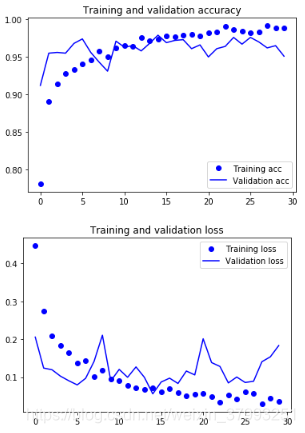

# 5-10 绘制过程中的损失曲线和精度曲线

import matpltlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label = 'Training acc')

plt.plot(epochs, val_acc, 'b', label = 'Validation acc')

plt.title('Training and validation loss')

plt.legend()

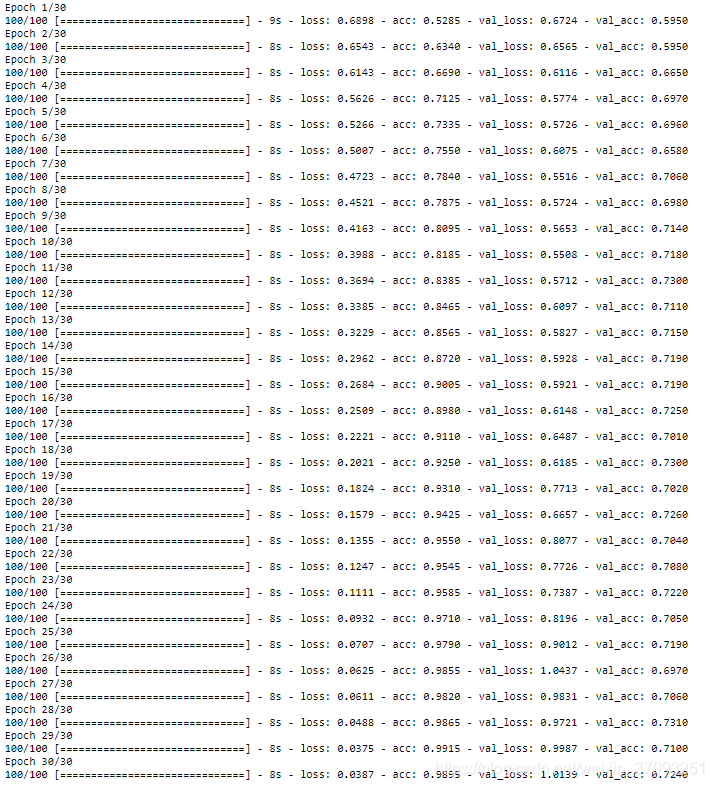

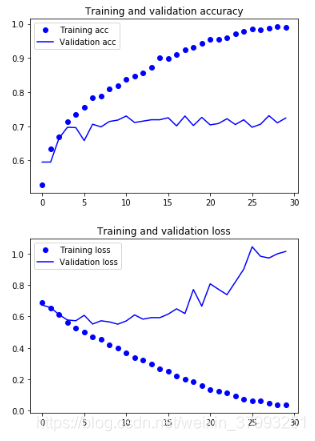

plt.show()训练精度随时间线性增加,直到接近100%,而验证精度停留在70%左右,显然已经过拟合了。这里我们使用视觉领域的新方法,数据增强(data augmentation)

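5.2.5 Using data augmentation

Overfitting is caused by having too few samples to learn from. Data augmentation generates more training data from the existing samples by applying random transformations that yield believable-looking images.

# 5-11 Setting up a data augmentation configuration via ImageDataGenerator
datagen = ImageDataGenerator(
    rotation_range = 40,
    width_shift_range = 0.2,
    height_shift_range = 0.2,
    shear_range = 0.2,
    zoom_range = 0.2,
    horizontal_flip = True,
    fill_mode = 'nearest')

- rotation_range is a value in degrees (0-180), a range within which to randomly rotate pictures

- width_shift_range and height_shift_range are ranges (as a fraction of total width or height) within which to randomly translate pictures horizontally or vertically

- shear_range is for randomly applying shearing transformations

- zoom_range is for randomly zooming inside pictures

- horizontal_flip is for randomly flipping half the images horizontally

- fill_mode is the strategy used for filling in newly created pixels, which can appear after a rotation or a width/height shift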
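To make two of these transformations concrete, here is a minimal NumPy sketch of a random horizontal flip followed by a random width shift with edge filling. It is not how ImageDataGenerator is implemented; `random_flip_and_shift` is a hypothetical helper, and edge padding is used as a rough analogue of the 'nearest' fill strategy.

```python
import numpy as np

def random_flip_and_shift(img, rng, max_shift=0.2):
    """Randomly flip an (H, W, C) image and shift it along the width axis (illustrative only)."""
    h, w, _ = img.shape
    if rng.random() < 0.5:                      # flip about half of the images
        img = img[:, ::-1, :]
    limit = int(max_shift * w)
    shift = int(rng.integers(-limit, limit + 1))
    # Pad both sides with edge pixels, then crop a window of the original width
    padded = np.pad(img, ((0, 0), (abs(shift), abs(shift)), (0, 0)), mode="edge")
    start = abs(shift) - shift                  # shift > 0 moves the content right
    return padded[:, start:start + w, :]

rng = np.random.default_rng(0)
img = np.arange(4 * 10 * 3, dtype="float32").reshape(4, 10, 3)
augmented = random_flip_and_shift(img, rng)
print(augmented.shape)  # (4, 10, 3): same shape, different pixel arrangement
```

The augmented image has the same shape and only contains pixel values from the original, which is why augmentation remixes existing information rather than creating new information.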

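# 5-12 Displaying some randomly augmented training images
from keras.preprocessing import image

fnames = [os.path.join(train_cats_dir, fname) for fname in os.listdir(train_cats_dir)]

# Pick one image to augment
img_path = fnames[3]

# Read the image and resize it
img = image.load_img(img_path, target_size = (150, 150))

# Convert it to a NumPy array of shape (150, 150, 3), then reshape it to (1, 150, 150, 3)
x = image.img_to_array(img)
x = x.reshape((1,) + x.shape)

# flow() yields randomly transformed batches forever, so the loop must be broken explicitly
i = 0
for batch in datagen.flow(x, batch_size = 1):
    plt.figure(i)
    imgplot = plt.imshow(image.array_to_img(batch[0]))
    i += 1
    if i % 4 == 0:
        break

plt.show()

Augmentation only remixes existing information, so the inputs remain highly correlated. To fight overfitting further, add a Dropout layer right before the densely connected classifier.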

# 5-13 定义一个包含dropout的新卷积神经网络

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dropout(0.5))

model.add(layers.dense(512, activation='relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

mdoel.compile(loss = 'binary_crossentropy',

optimizer = optimizers.RMSprop(lr = 1e-4),

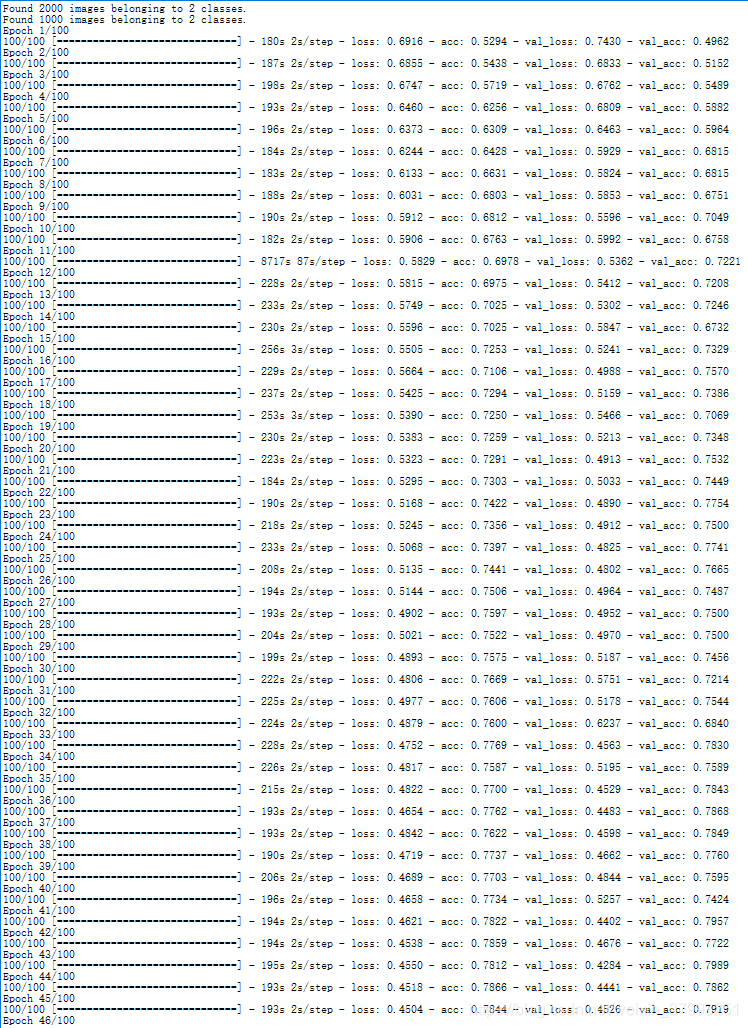

metrics = ['acc'])# 5-14 利用数据增强生成器训练卷积神经网络

train_datagen = ImageDataGenerator(
    rescale = 1./255,
    rotation_range = 40,
    width_shift_range = 0.2,
    height_shift_range = 0.2,
    shear_range = 0.2,
    zoom_range = 0.2,
    horizontal_flip = True)

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 32,

class_mode = 'binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 32,

class_mode = 'binary')

history = model.fit_generator(

train_generator,

steps_per_epoch = 100,

epochs = 100,

validation_data = validation_generator,

validation_steps = 50)

# 5-15 Saving the model

model.save('cats_and_dogs_small_2.h5')

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

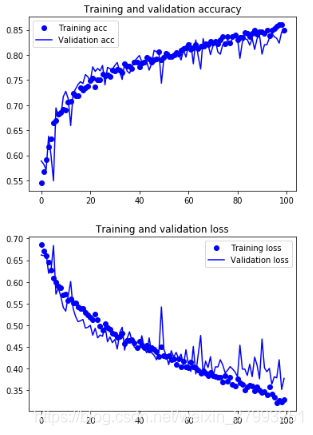

5.3 使用与训练的卷积神经网络

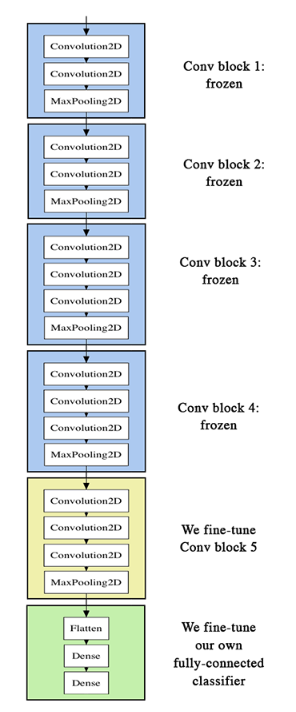

使用VGG16架构:特征提取(feature extraction)和微调模型(fine-tuning)

5.3.1 特征提取

重复使用卷积基(convolutional base),卷积层提取的通用性取决于该层在模型中的深度。

keras.applications中的部分模型Xception、Inception V3、Resnet50、VGG16、VGG19、MobileNet

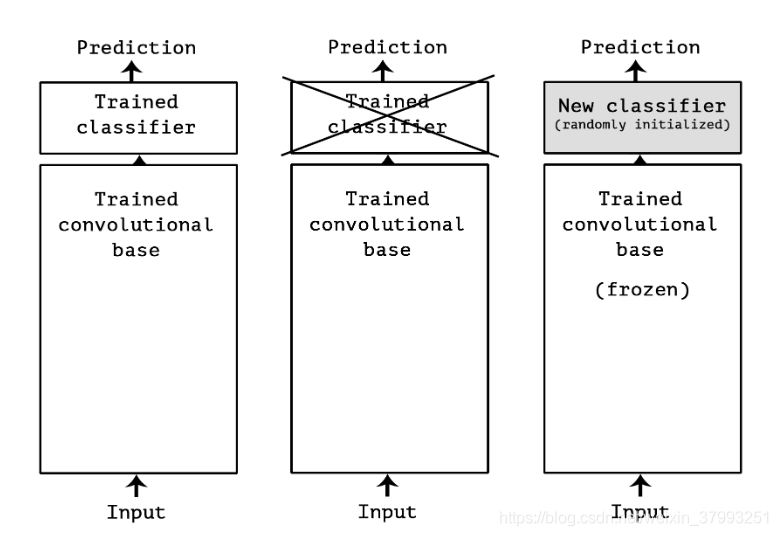

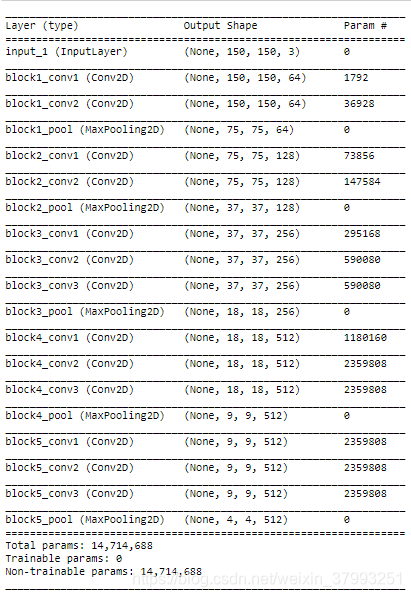

# 5-16 Instantiating the VGG16 convolutional base

from keras.applications import VGG16

conv_base = VGG16(weights = 'imagenet',

include_top = False,

input_shape = (150, 150, 3))

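# weights specifies the weight checkpoint from which to initialize the model
# include_top specifies whether to include the densely connected classifier on top of the network
# input_shape is the shape of the image tensors fed to the network
conv_base.summary()

The final feature map has shape (4, 4, 512). A densely connected classifier goes on top of this feature. There are two ways to proceed:

- Run the convolutional base over your dataset, record its output to NumPy arrays, and use these arrays as input to a standalone densely connected classifier. This is fast and cheap, but it cannot use data augmentation.

- Extend the existing model (conv_base) by adding Dense layers on top and run the whole thing end to end on the input data. This allows data augmentation but is far more expensive.

1. Fast feature extraction without data augmentation

- Run ImageDataGenerator instances to extract the images and their labels as NumPy arrays, and extract features by calling the predict method of the conv_base model.

# 5-17 Extracting features using the pretrained convolutional base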

import os

import numpy as np

from keras.preprocessing.image import ImageDataGenerator

base_dir = 'D:/kaggle_data/cats_and_dogs_small'

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

test_dir = os.path.join(base_dir, 'test')

datagen = ImageDataGenerator(rescale = 1./255)

batch_size = 20

def extract_features(directory, sample_count):
    features = np.zeros(shape = (sample_count, 4, 4, 512))
    labels = np.zeros(shape=(sample_count))
    generator = datagen.flow_from_directory(
        directory,
        target_size = (150, 150),
        batch_size = batch_size,
        class_mode = 'binary')
    i = 0
    for inputs_batch, labels_batch in generator:
        features_batch = conv_base.predict(inputs_batch)
        features[i * batch_size : (i + 1) * batch_size] = features_batch
        labels[i * batch_size : (i + 1) * batch_size] = labels_batch
        i += 1
        # The generator loops indefinitely, so stop once every image has been seen once
        if i * batch_size >= sample_count:
            break
    return features, labels

train_features, train_labels = extract_features(train_dir, 2000)
validation_features, validation_labels = extract_features(validation_dir, 1000)
test_features, test_labels = extract_features(test_dir, 1000)

# Flatten the extracted features to (samples, 8192) for the densely connected classifier
train_features = np.reshape(train_features, (2000, 4 * 4 * 512))
validation_features = np.reshape(validation_features, (1000, 4 * 4 * 512))
test_features = np.reshape(test_features, (1000, 4 * 4 * 512))

# Found 2000 images belonging to 2 classes.
# Found 1000 images belonging to 2 classes.
# Found 1000 images belonging to 2 classes.

# 5-18 Defining and training the densely connected classifier

from keras import models

from keras import layers

from keras import optimizers

model = models.Sequential()

model.add(layers.Dense(256, activation = 'relu', input_dim = 4*4*512))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(1, activation = 'sigmoid'))

model.compile(optimizer = optimizers.RMSprop(lr=2e-5),

loss = 'binary_crossentropy',

metrics = ['acc'])

history = model.fit(train_features, train_labels, epochs = 30, batch_size = 20, validation_data=(validation_features, validation_labels))

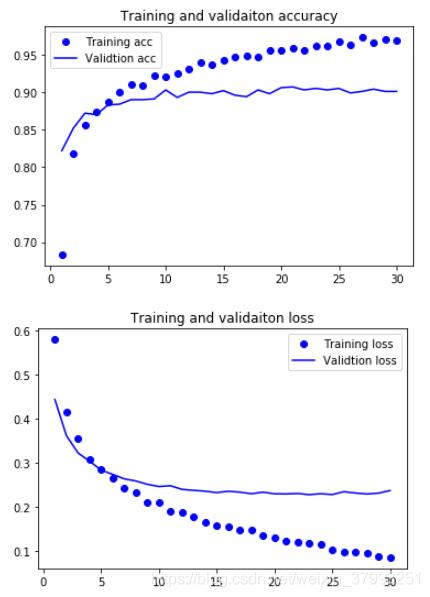

# 5-19 Plotting the results

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc)+1)

plt.plot(epochs, acc, 'bo', label = 'Training acc')

plt.plot(epochs, val_acc, 'b', label = 'Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label = 'Training loss')

plt.plot(epochs, val_loss, 'b', label = 'Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

验证精度达到了90%,但是模型几乎一开始就过拟合了。

2.使用数据增强的特征提取

- 可以再训练期间使用数据增强,扩展conv_base模型,然后在输入数据上端到端地运行数据。

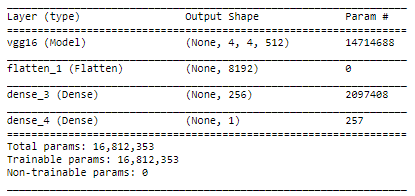

# 5-20 在卷积基上添加一个密集连接分类器

from keras import models

from keras import layers

model = models.Sequential()

model.add(conv_base)

model.add(layers.Flatten())

model.add(layers.Dense(256, activation = 'relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

model.summary()

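- Freeze the convolutional base

print('This is the number of trainable weights '
      'before freezing the conv base:', len(model.trainable_weights))
# This is the number of trainable weights before freezing the conv base: 30

conv_base.trainable = False

print('This is the number of trainable weights '
      'after freezing the conv base:', len(model.trainable_weights))
# This is the number of trainable weights after freezing the conv base: 4

- Freeze the convolutional base before compiling and training the model; otherwise the randomly initialized Dense layers would propagate large weight updates through the network and destroy the representations it previously learned.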
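The counts 30 and 4 can be reproduced by hand, since each Conv2D or Dense layer contributes two weight tensors (a kernel and a bias). The sketch below is plain arithmetic; the variable names are made up for illustration, and it relies on VGG16's convolutional base having 13 convolution layers.

```python
conv_layers_in_vgg16 = 13   # blocks of 2 + 2 + 3 + 3 + 3 convolution layers
dense_layers_on_top = 2     # Dense(256) and Dense(1); Flatten has no weights
tensors_per_layer = 2       # kernel matrix + bias vector

before_freezing = (conv_layers_in_vgg16 + dense_layers_on_top) * tensors_per_layer
after_freezing = dense_layers_on_top * tensors_per_layer

print(before_freezing)  # 30
print(after_freezing)   # 4
```

After freezing, only the two Dense layers are trained, which is exactly what the second printout confirms.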

# 5-21 Training the model end to end with a frozen convolutional base

from keras.preprocessing.image import ImageDataGenerator

from keras import optimizers

train_datagen = ImageDataGenerator(

rescale = 1./255,

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

horizontal_flip = True,

fill_mode = 'nearest')

test_datagen = ImageDataGenerator(rescale = 1./255)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

model.compile(loss = 'binary_crossentropy',

optimizer = optimizers.RMSprop(lr=2e-5),

metrics = ['acc'])

history = model.fit_generator(

train_generator,

steps_per_epoch = 100,

epochs = 30,

validation_data = validation_generator,

validation_steps= 50)

model.save('cats_and_dogs_small_3.h5')

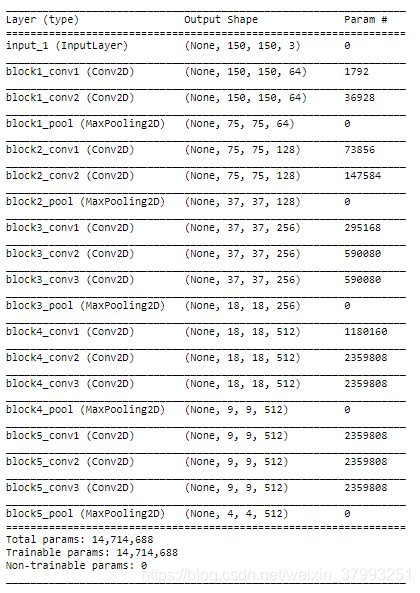

- Plot the results

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

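5.3.2 Fine-tuning

Fine-tuning unfreezes a few of the top layers of the frozen convolutional base used for feature extraction and trains them jointly with the newly added classifier.

- Earlier layers in the convolutional base encode more generic, reusable features, whereas layers higher up encode more specialized features.

- The more parameters you train, the greater the risk of overfitting.

conv_base.summary()

- Fine-tune the last three convolutional layers (block5_conv1, block5_conv2, and block5_conv3); all layers up to and including block4_pool stay frozen.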

# 5-22 Freezing all layers up to a specific one
conv_base.trainable = True

set_trainable = False
for layer in conv_base.layers:
    # Every layer from block5_conv1 onward becomes trainable
    if layer.name == 'block5_conv1':
        set_trainable = True
    layer.trainable = set_trainable

- Compile the model
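As a quick check of the freezing rule in listing 5-22, the same flag logic can be run on a plain list of layer names without loading Keras. The helper `trainable_flags` is hypothetical, and the list contains only the tail of VGG16's layer names.

```python
def trainable_flags(layer_names, first_trainable):
    """Map each layer name to True once first_trainable has been reached (illustrative only)."""
    flags = {}
    set_trainable = False
    for name in layer_names:
        if name == first_trainable:
            set_trainable = True
        flags[name] = set_trainable
    return flags

tail_of_vgg16 = ["block4_conv3", "block4_pool",
                 "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool"]
flags = trainable_flags(tail_of_vgg16, "block5_conv1")
print(flags)
```

block5_pool is also flagged as trainable, but pooling layers have no weights, so only the three block5 convolution layers are actually updated.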

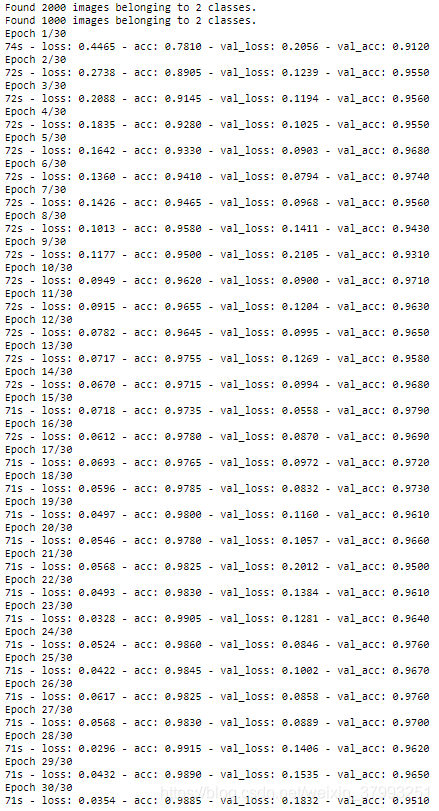

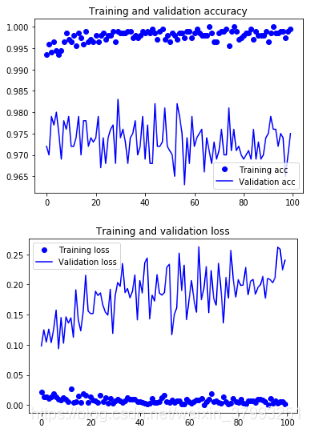

# 5-23 Fine-tuning the model

model.compile(loss = 'binary_crossentropy',

optimizer = optimizers.RMSprop(lr=1e-5),

metrics = ['acc'])

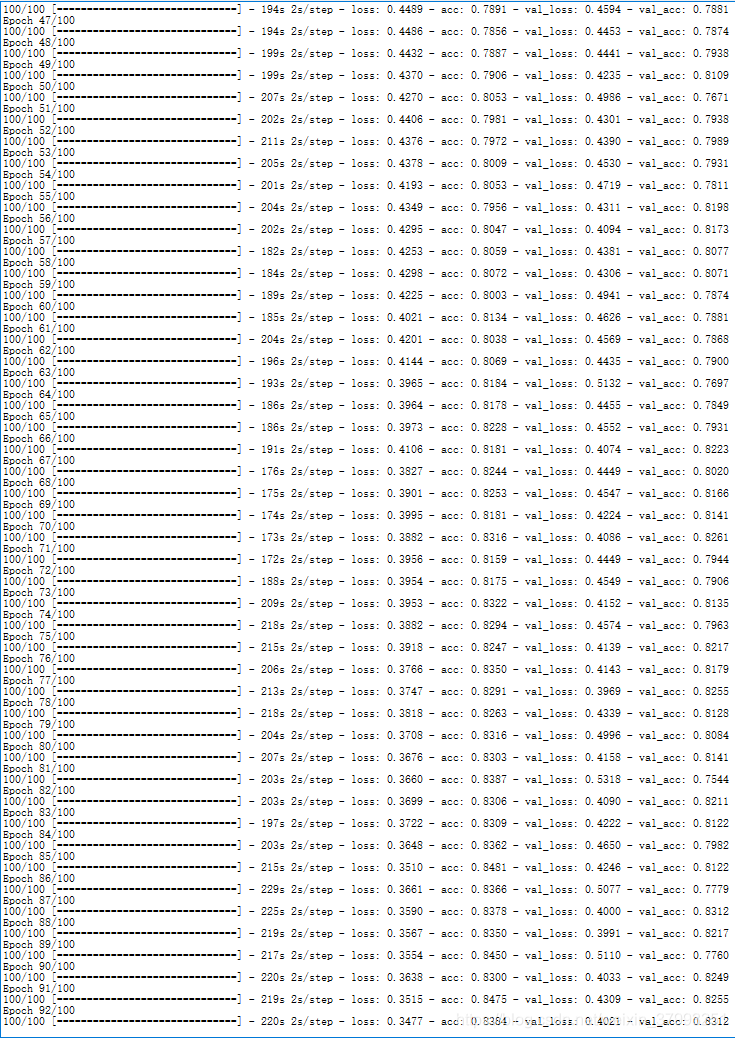

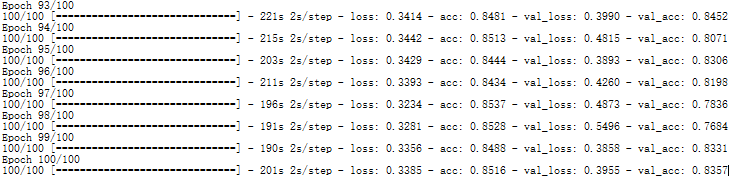

history = model.fit_generator(

train_generator,

steps_per_epoch = 100,

epochs = 100,

validation_data = validation_generator,

validation_steps = 50)

model.save('cats_and_dogs_small_4.h5')

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

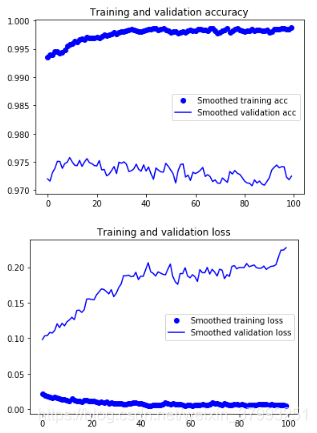

# 5-24 Smoothing the plots
def smooth_curve(points, factor = 0.8):
    # Replace every point with an exponential moving average of the previous points
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            smoothed_points.append(point)
    return smoothed_points

plt.plot(epochs, smooth_curve(acc), 'bo', label = 'Smoothed training acc')

plt.plot(epochs, smooth_curve(val_acc), 'b', label = 'Smoothed validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, smooth_curve(loss), 'bo', label = 'Smoothed training loss')

plt.plot(epochs, smooth_curve(val_loss), 'b', label = 'Smoothed validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()
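The smoothing above is an exponential moving average: each smoothed value is 80% of the previous smoothed value plus 20% of the new point. A small worked example makes the effect visible; the function is repeated here so that the snippet runs on its own, and the input values are made up.

```python
def smooth_curve(points, factor=0.8):
    """Exponential moving average, same logic as listing 5-24."""
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            smoothed_points.append(point)
    return smoothed_points

noisy = [1.0, 0.0, 0.0, 1.0]
smoothed = smooth_curve(noisy)
print([round(v, 3) for v in smoothed])  # [1.0, 0.8, 0.64, 0.712]
```

The abrupt jumps between 0 and 1 are damped into a gentle curve, which makes trends in noisy accuracy and loss plots easier to read.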

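5.3.3 Wrapping up

- Convnets are the best type of machine-learning model for computer vision tasks.

- On a small dataset, overfitting is the main issue; data augmentation is a powerful way to fight it.

- Feature extraction makes it easy to reuse an existing convnet on a new dataset.

- Fine-tuning complements feature extraction: it adapts some of the representations previously learned by an existing model to a new problem.

# Evaluate the fine-tuned model on the test data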

test_generator = test_datagen.flow_from_directory(

test_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

test_loss, test_acc = model.evaluate_generator(test_generator, steps=50)

print('test acc:', test_acc)

# Found 1000 images belonging to 2 classes.

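# test acc: 0.967999992371

5.4 Visualizing what convnets learn

Visualizing intermediate convnet outputs (intermediate activations)

Visualizing convnet filters

Visualizing heatmaps of class activation in an image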

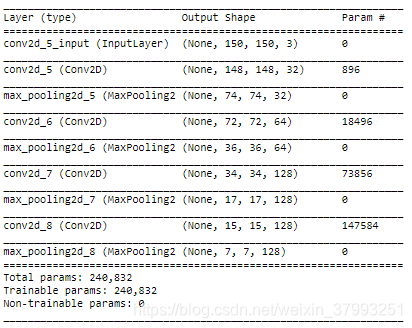

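5.4.1 Visualizing intermediate activations

1. Visualizing intermediate activations means displaying the feature maps that the layers of a network output for a given input (the output of a layer is often called its activation, the output of the activation function). Start by loading the model saved in section 5.2.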

from keras.models import load_model

model = load_model('cats_and_dogs_small_2.h5')

model.summary()

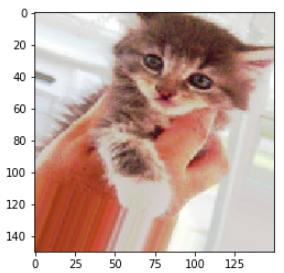

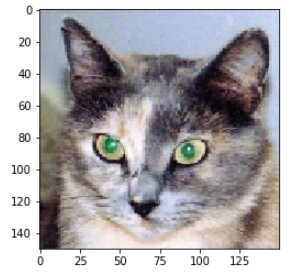

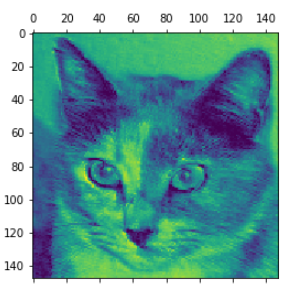

2. Preprocess a single image into a 4D tensor with keras.preprocessing.image, then display it.

# 5-25 Preprocessing a single image
img_path = 'D:/kaggle_data/cats_and_dogs_small/test/cats/cat.1700.jpg'

from keras.preprocessing import image
import numpy as np

img = image.load_img(img_path, target_size = (150, 150))
img_tensor = image.img_to_array(img)
img_tensor = np.expand_dims(img_tensor, axis = 0)
# The model was trained on inputs rescaled to [0, 1]
img_tensor /= 255.

print(img_tensor.shape)
# (1, 150, 150, 3)

# 5-26 Displaying the test picture

import matplotlib.pyplot as plt

plt.imshow(img_tensor[0])

plt.show()

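3. Instantiate a model from an input tensor and a list of output tensors

# 5-27 Instantiating a model from an input tensor and a list of output tensors
from keras import models

# Extract the outputs of the first eight layers
layer_outputs = [layer.output for layer in model.layers[:8]]
for output in layer_outputs:
    print(output)

# Create a model that returns these outputs, given the model input
activation_model = models.Model(inputs = model.input, outputs = layer_outputs)
activation_model.summary()

- The outputs of the first eight layers (layer_outputs):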

Tensor("conv2d_5_1/Relu:0", shape=(?, 148, 148, 32), dtype=float32)

Tensor("max_pooling2d_5_1/MaxPool:0", shape=(?, 74, 74, 32), dtype=float32)

Tensor("conv2d_6_1/Relu:0", shape=(?, 72, 72, 64), dtype=float32)

Tensor("max_pooling2d_6_1/MaxPool:0", shape=(?, 36, 36, 64), dtype=float32)

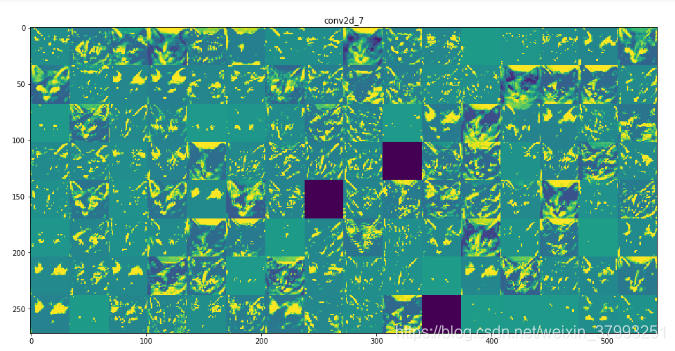

Tensor("conv2d_7_1/Relu:0", shape=(?, 34, 34, 128), dtype=float32)

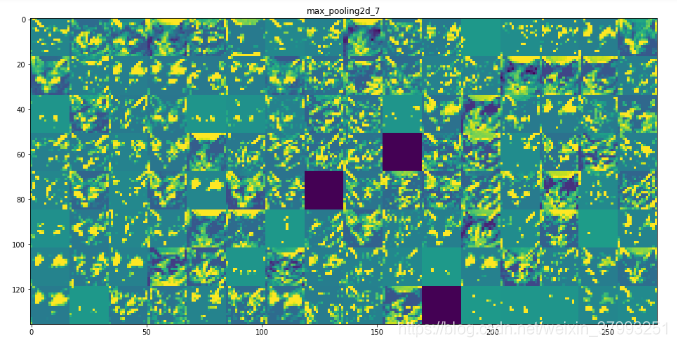

Tensor("max_pooling2d_7_1/MaxPool:0", shape=(?, 17, 17, 128), dtype=float32)

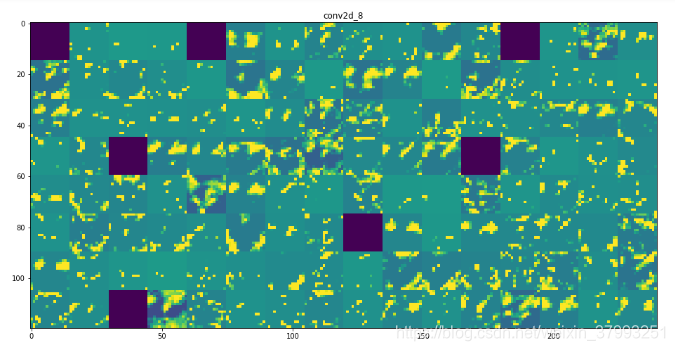

Tensor("conv2d_8_1/Relu:0", shape=(?, 15, 15, 128), dtype=float32)

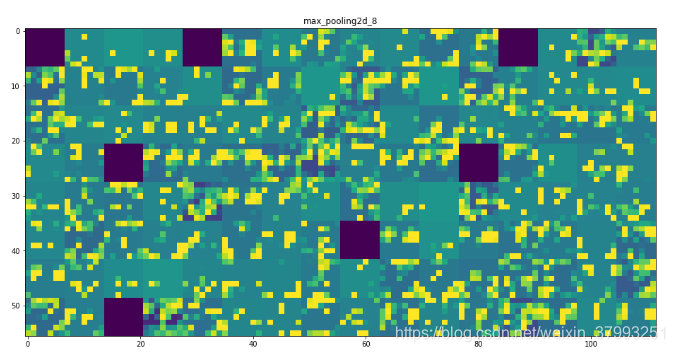

Tensor("max_pooling2d_8_1/MaxPool:0", shape=(?, 7, 7, 128), dtype=float32)

- activation_model is a model that returns these outputs, given the model input

4. Each layer's output activation comes back as one NumPy array

# 5-28 Running the model in predict mode
activations = activation_model.predict(img_tensor)

# Activation of the first convolution layer for the cat image input
first_layer_activation = activations[0]
print(first_layer_activation.shape)
# (1, 148, 148, 32)

- The model: activation_model

- The input image: img_tensor

- The prediction call: activation_model.predict

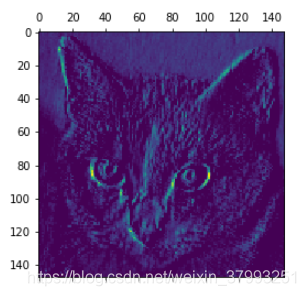

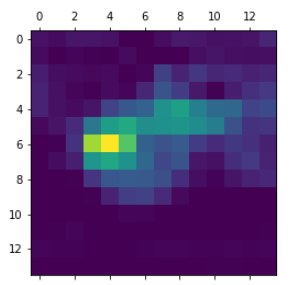

# 5-29 Visualizing the channel at index 4

import matplotlib.pyplot as plt

plt.matshow(first_layer_activation[0,:,:,4], cmap = 'viridis')

plt.show()

# 5-30 Visualizing the channel at index 7

plt.matshow(first_layer_activation[0, :, :, 7], cmap = 'viridis')

plt.show()

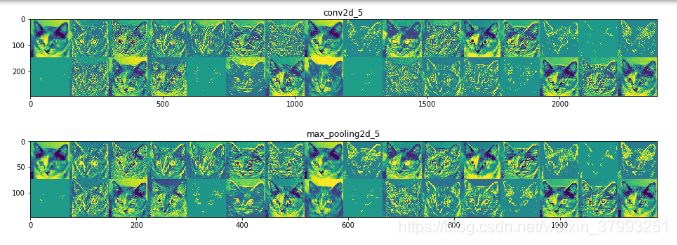

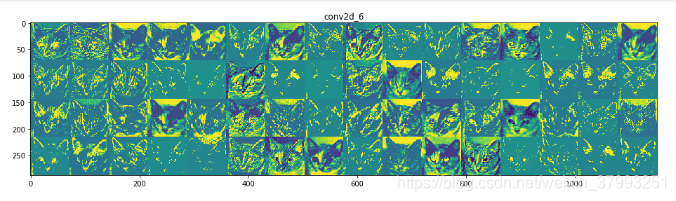

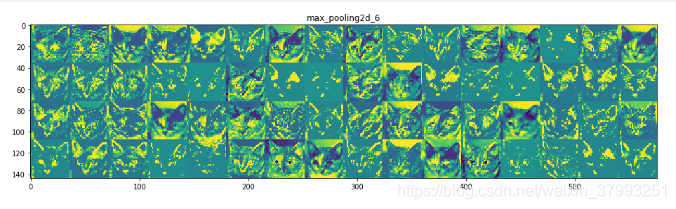

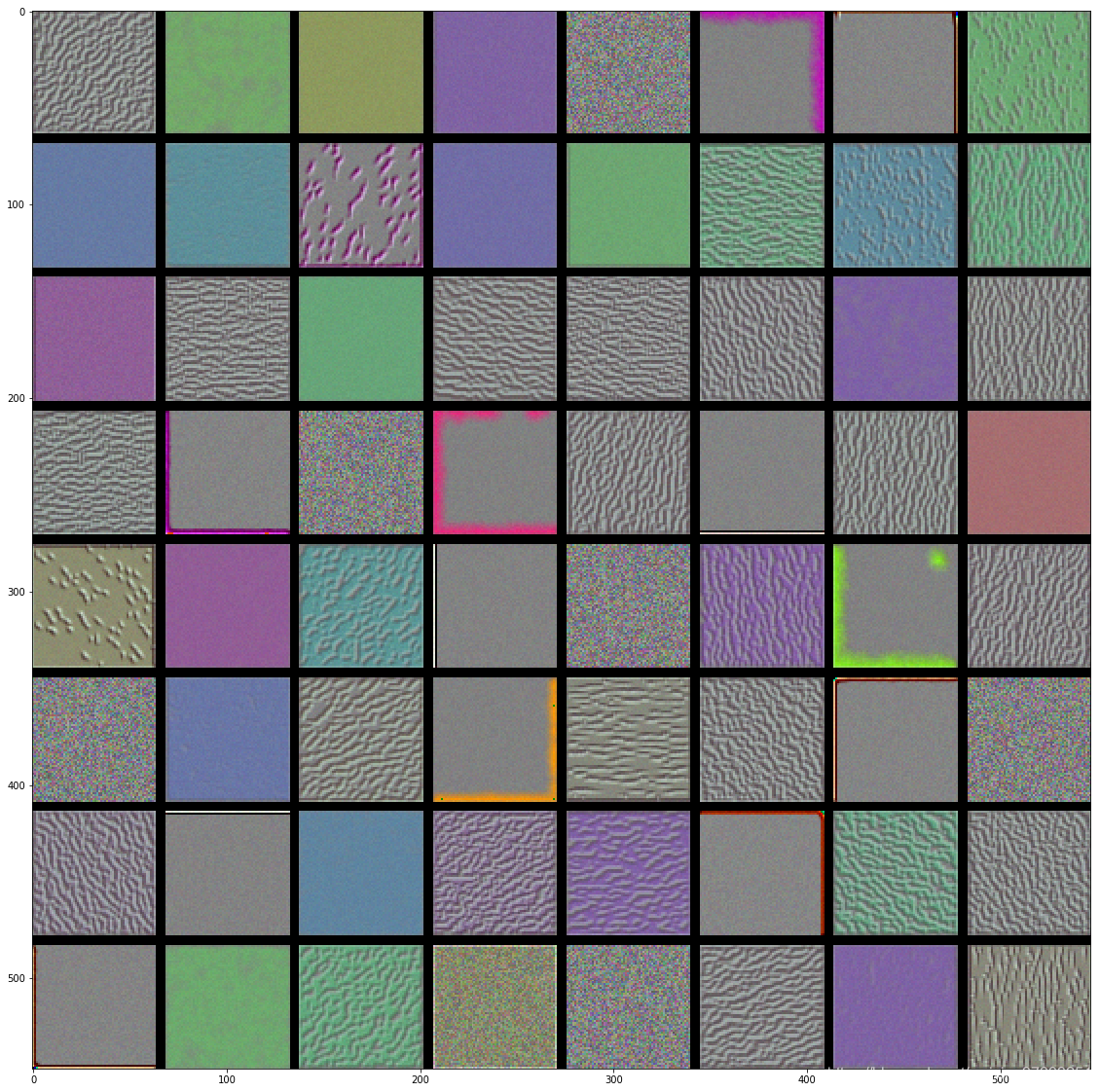

# 5-31 Visualizing every channel in every intermediate activation
# Names of the layers, so they can appear in the plot titles
layer_names = []
for layer in model.layers[:8]:
    layer_names.append(layer.name)

images_per_row = 16

# Display the feature maps
for layer_name, layer_activation in zip(layer_names, activations):
    n_features = layer_activation.shape[-1]  # Number of features in the feature map
    size = layer_activation.shape[1]  # The feature map has shape (1, size, size, n_features)
    n_cols = n_features // images_per_row  # Number of rows of tiles in the grid
    display_grid = np.zeros((size * n_cols, images_per_row * size))

    for col in range(n_cols):
        for row in range(images_per_row):
            channel_image = layer_activation[0, :, :, col * images_per_row + row]
            # Post-process the feature to make it visually palatable
            channel_image -= channel_image.mean()
            channel_image /= channel_image.std()
            channel_image *= 64
            channel_image += 128
            channel_image = np.clip(channel_image, 0, 255).astype('uint8')
            display_grid[col * size : (col + 1) * size,
                         row * size : (row + 1) * size] = channel_image

    scale = 1. / size
    plt.figure(figsize = (scale * display_grid.shape[1],
                          scale * display_grid.shape[0]))
    plt.title(layer_name)
    plt.grid(False)
    plt.imshow(display_grid, aspect = 'auto', cmap = 'viridis')

for layer_name, layer_activation in zip(layer_names, activations):
    print(layer_name)
    print(layer_activation.shape)

conv2d_5

(1, 148, 148, 32)

max_pooling2d_5

(1, 74, 74, 32)

conv2d_6

(1, 72, 72, 64)

max_pooling2d_6

(1, 36, 36, 64)

conv2d_7

(1, 34, 34, 128)

max_pooling2d_7

(1, 17, 17, 128)

conv2d_8

(1, 15, 15, 128)

max_pooling2d_8

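(1, 7, 7, 128)

Summary

- The first layer acts as a collection of various edge detectors; at that stage the activations retain almost all of the information present in the initial picture.

- As you go higher, the activations become increasingly abstract and less visually interpretable.

- The sparsity of the activations increases with the depth of the layer.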

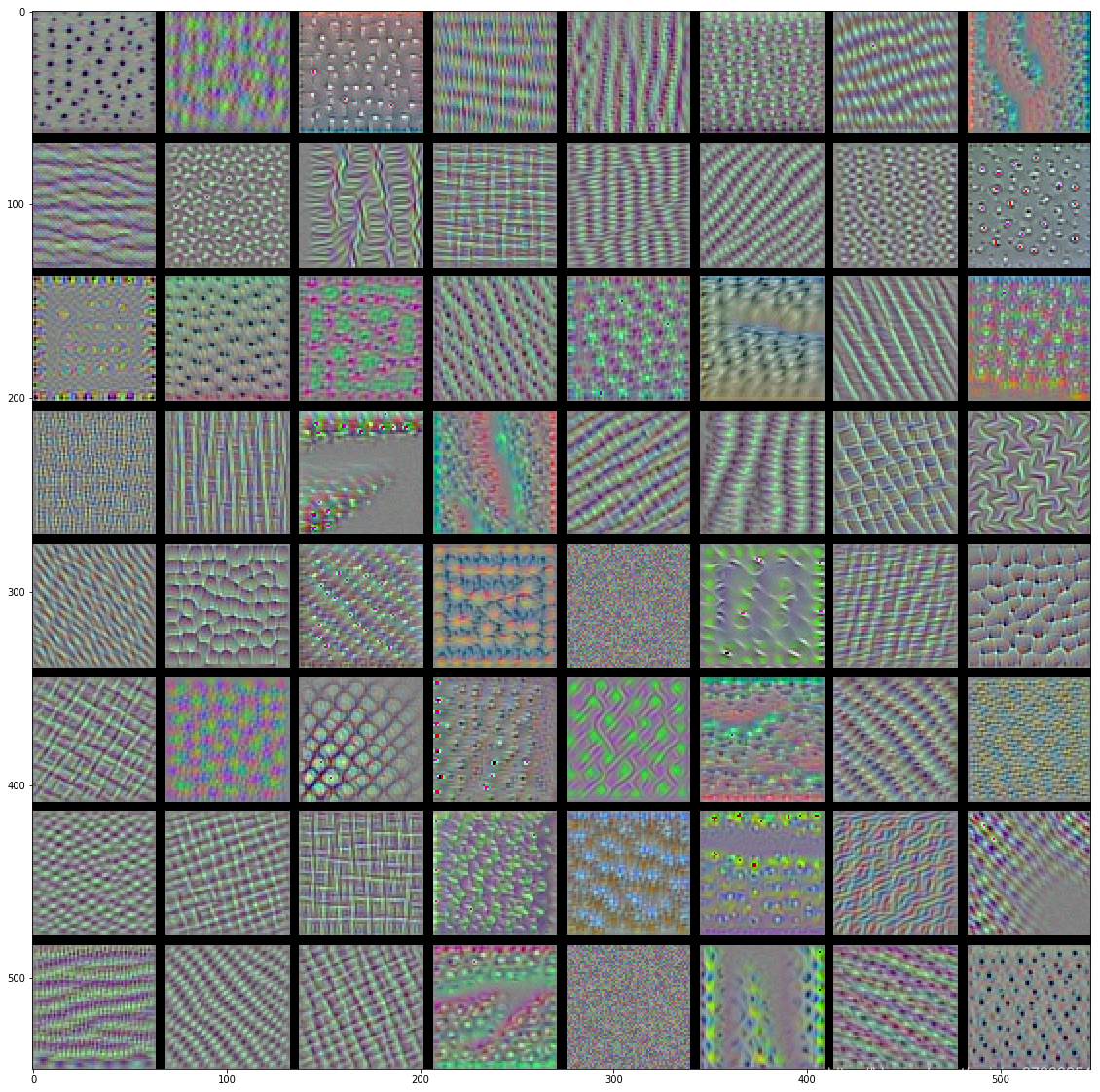

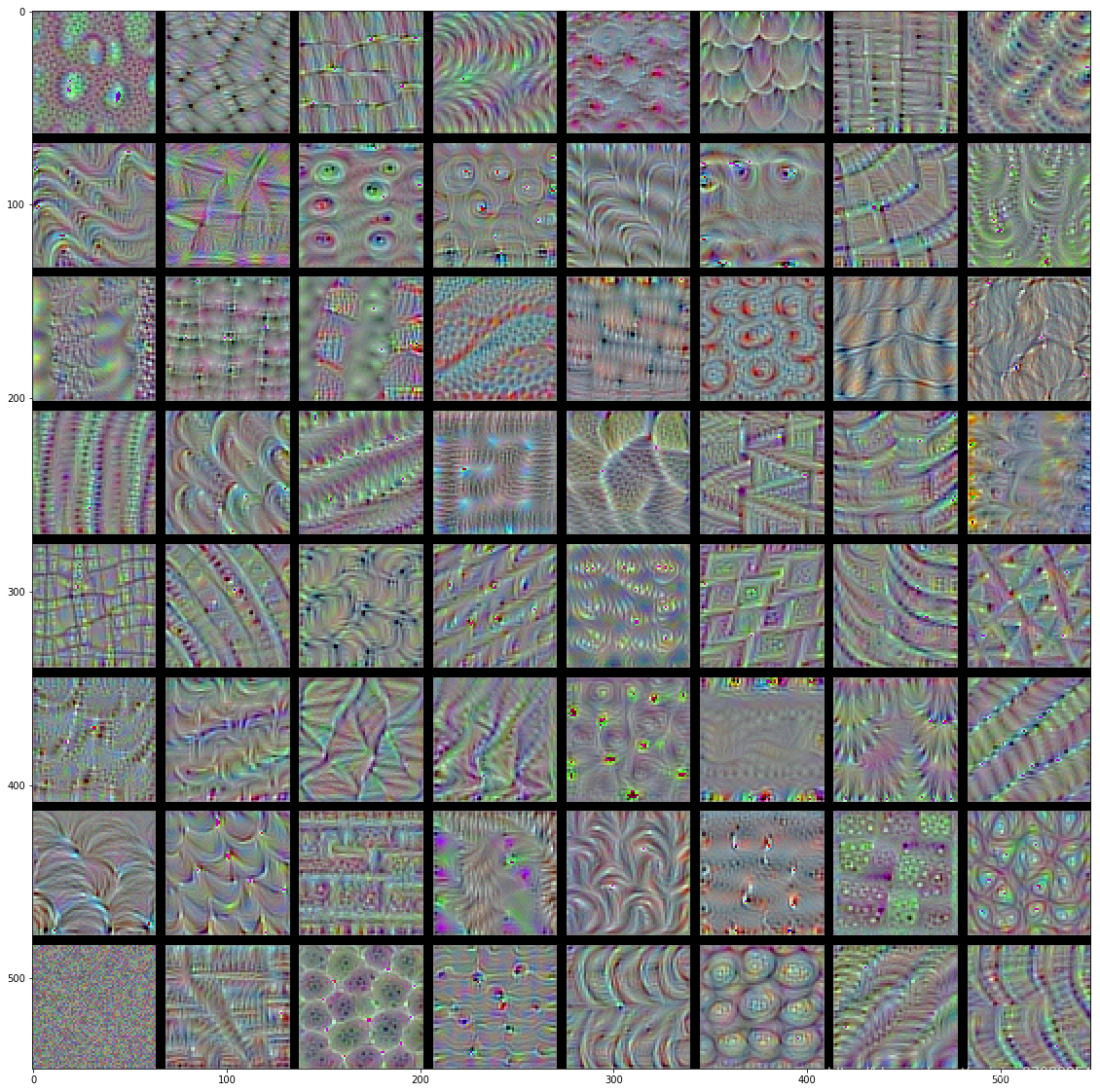

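5.4.2 Visualizing convnet filters

Visualizing filters helps show precisely which visual pattern or concept each filter in a convnet is receptive to. This is done with gradient ascent in input space: starting from a blank input image, adjust its pixel values to maximize the response of a specific filter.

# 5-32 Defining the loss tensor for filter visualization
from keras.applications import VGG16
from keras import backend as K

model = VGG16(weights = 'imagenet',
              include_top = False)

layer_name = 'block3_conv1'
filter_index = 0

layer_output = model.get_layer(layer_name).output
loss = K.mean(layer_output[:, :, :, filter_index])

# 5-33 Obtaining the gradient of the loss with regard to the input
grads = K.gradients(loss, model.input)[0]

# 5-34 Gradient-normalization trick
grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

# 5-35 Fetching NumPy output values given NumPy input values
iterate = K.function([model.input], [loss, grads])

import numpy as np
loss_value, grads_value = iterate([np.zeros((1, 150, 150, 3))])

Next, define a loop that performs gradient ascent in input space.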

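# 5-36 Loss maximization via gradient ascent
# Start from a gray image with some noise
input_img_data = np.random.random((1, 150, 150, 3)) * 20 + 128.

step = 1.  # Magnitude of each gradient update
for i in range(40):
    loss_value, grads_value = iterate([input_img_data])
    # Adjust the input image in the direction that maximizes the loss
    input_img_data += grads_value * step

# 5-37 Utility function to convert a tensor into a valid image
def deprocess_image(x):
    # Normalize the tensor: center on 0 and set the standard deviation to 0.1
    x -= x.mean()
    x /= (x.std() + 1e-5)
    x *= 0.1

    # Shift to the [0, 1] range and clip
    x += 0.5
    x = np.clip(x, 0, 1)

    # Convert to an RGB array
    x *= 255
    x = np.clip(x, 0, 255).astype('uint8')
    return x

# 5-38 Function to generate filter visualizations
def generate_pattern(layer_name, filter_index, size = 150):
    # Loss that maximizes the activation of the nth filter of the layer under consideration
    layer_output = model.get_layer(layer_name).output
    loss = K.mean(layer_output[:, :, :, filter_index])

    # Gradient of the input picture with regard to this loss, normalized
    grads = K.gradients(loss, model.input)[0]
    grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

    # Returns the loss and grads given the input picture
    iterate = K.function([model.input], [loss, grads])

    # Start from a gray image with some noise and run gradient ascent for 40 steps
    input_img_data = np.random.random((1, size, size, 3)) * 20 + 128.
    step = 1.
    for i in range(40):
        loss_value, grads_value = iterate([input_img_data])
        input_img_data += grads_value * step

    img = input_img_data[0]
    return deprocess_image(img)

plt.imshow(generate_pattern('block3_conv1', 0))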
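The gradient normalization and the ascent loop used in generate_pattern can be tried on a toy objective with plain NumPy. This is only a sketch under stated assumptions: the quadratic `loss_and_grads` function stands in for the filter activation, `normalize` mirrors the L2-based trick from listing 5-34, and the target vector and step size are arbitrary.

```python
import numpy as np

def normalize(grads):
    """Divide by the root mean square so that update sizes stay in a fixed range."""
    return grads / (np.sqrt(np.mean(np.square(grads))) + 1e-5)

target = np.array([3.0, -2.0])

def loss_and_grads(x):
    # Toy objective standing in for a filter activation: it peaks at x == target
    loss = -np.sum((x - target) ** 2)
    grads = -2.0 * (x - target)
    return loss, grads

x = np.zeros(2)
step = 0.1
start_loss, _ = loss_and_grads(x)
for _ in range(40):
    loss_value, grads_value = loss_and_grads(x)
    x += normalize(grads_value) * step   # ascent: move along the gradient
end_loss, _ = loss_and_grads(x)

print(start_loss, end_loss)  # the loss starts at -13.0 and ends much closer to 0
```

Because the gradient is normalized, every update has roughly the same size regardless of how steep the objective is, which keeps the ascent smooth.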

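# 5-39 Generating a grid of all filter response patterns in a layer
layer_name = 'block1_conv1'
size = 64
margin = 5

# Empty (black) image to store the results; uint8 so that imshow reads the values as 0-255
results = np.zeros((8 * size + 7 * margin, 8 * size + 7 * margin, 3), dtype = 'uint8')

for i in range(8):  # Rows of the results grid
    for j in range(8):  # Columns of the results grid
        # Generate the pattern for filter i + (j * 8) in layer_name
        filter_img = generate_pattern(layer_name, i + (j * 8), size = size)

        # Put the result in the square (i, j) of the results grid
        horizontal_start = i * size + i * margin
        horizontal_end = horizontal_start + size
        vertical_start = j * size + j * margin
        vertical_end = vertical_start + size
        results[horizontal_start: horizontal_end, vertical_start: vertical_end, :] = filter_img

plt.figure(figsize = (20, 20))
plt.imshow(results)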

5.4.3 Visualizing heatmaps of class activation

This technique, Grad-CAM, shows which parts of an image led the convnet to its final classification decision.

# 5-40 Loading the VGG16 network with pretrained weights
from keras.applications.vgg16 import VGG16

model = VGG16(weights = 'imagenet')

# 5-41 Preprocessing an input image for VGG16
from keras.preprocessing import image
from keras.applications.vgg16 import preprocess_input, decode_predictions
import numpy as np

img_path = ''  # Local path to the target image

img = image.load_img(img_path, target_size = (224, 224))  # PIL image of size 224x224
x = image.img_to_array(img)  # float32 NumPy array of shape (224, 224, 3)
x = np.expand_dims(x, axis = 0)  # Add a batch dimension: (1, 224, 224, 3)
x = preprocess_input(x)  # Preprocess the batch (channel-wise color normalization)

preds = model.predict(x)
print('Predicted:', decode_predictions(preds, top = 3)[0])

# Predicted: [('n02504458', 'African_elephant', 0.90942144), ('n01871265', 'tusker', 0.08618243), ('n02504013', 'Indian_elephant', 0.0043545929)]

To show which parts of the image are the most African-elephant-like, apply the Grad-CAM algorithm.

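# 5-42 Setting up the Grad-CAM algorithm
from keras import backend as K

# "African elephant" entry in the prediction vector
african_elephant_output = model.output[:, 386]

# Output feature map of block5_conv3, the last convolutional layer in VGG16
last_conv_layer = model.get_layer('block5_conv3')

# Gradient of the "African elephant" class with regard to that feature map
grads = K.gradients(african_elephant_output, last_conv_layer.output)[0]

# Vector of shape (512,): mean intensity of the gradient over each feature-map channel
pooled_grads = K.mean(grads, axis = (0, 1, 2))

iterate = K.function([model.input], [pooled_grads, last_conv_layer.output[0]])
pooled_grads_value, conv_layer_output_value = iterate([x])

# Weight each channel by how important it is with regard to the "elephant" class
for i in range(512):
    conv_layer_output_value[:, :, i] *= pooled_grads_value[i]

# The channel-wise mean of the result is the heatmap of class activation
heatmap = np.mean(conv_layer_output_value, axis = -1)

# 5-43 Heatmap post-processing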

heatmap = np.maximum(heatmap, 0)

heatmap /= np.max(heatmap)

plt.matshow(heatmap)

plt.show()
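The array manipulations in listings 5-42 and 5-43 can be followed with random data in place of a real network. In this sketch, `conv_output` and `grads` are random stand-ins for the block5_conv3 activations and the class-score gradients (both assumptions made for illustration), so the resulting heatmap is meaningless; only the shapes and the normalization are of interest.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins: activations of the last conv layer for one image, and the gradient
# of the class score with regard to those activations (with a batch axis)
conv_output = rng.random((14, 14, 512))
grads = rng.normal(size=(1, 14, 14, 512))

pooled_grads = grads.mean(axis=(0, 1, 2))   # one importance weight per channel: (512,)
weighted = conv_output * pooled_grads       # broadcasting scales every channel
heatmap = weighted.mean(axis=-1)            # collapse channels: (14, 14)

heatmap = np.maximum(heatmap, 0)            # keep only positive evidence
peak = heatmap.max()
if peak > 0:                                # avoid dividing by zero on an empty map
    heatmap /= peak                         # normalize to [0, 1]

print(heatmap.shape)  # (14, 14)
```

The broadcasted multiplication does the same job as the explicit loop over 512 channels, and the guard on `peak` covers the case where no channel contributes positively.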

# 5-44 Superimposing the heatmap with the original picture

import cv2

img = cv2.imread(img_path)

heatmap = cv2.resize(heatmap, (img.shape[1], img.shape[0]))

heatmap = np.uint8(255 * heatmap)

heatmap = cv2.applyColorMap(heatmap, cv2.COLORMAP_JET)

superimposed_img = heatmap * 0.4 + img

cv2.imwrite('', superimposed_img)

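This article explores the application of deep learning to computer vision: the basic principles of convolutional neural networks, data augmentation, feature extraction and fine-tuning with pretrained models, and methods for visualizing what convnets learn.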
