Sigmoid neurons simulating perceptrons, part I

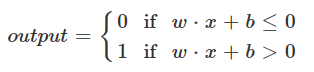

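Suppose we take all the weights and biases in a network of perceptrons, and multiply them by a positive constant, c>0. Show that the behaviour of the network doesn't change.

The perceptron rule outputs 1 if w⋅x+b>0 and 0 if w⋅x+b≤0. Multiplying both sides of the inequality by c>0 does not change which case holds, so the output of every perceptron, and therefore the behaviour of the whole network, stays the same.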
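As a quick sanity check of the scaling argument, here is a minimal sketch for a single perceptron. The weights, bias, and inputs are hypothetical values chosen only for illustration; for a full network the same argument applies layer by layer, because each layer's outputs are unchanged.

```python
def perceptron(w, x, b):
    """Perceptron rule: output 1 if w.x + b > 0, else 0."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if z > 0 else 0


def scaled_perceptron(w, x, b, c):
    """Same perceptron with every weight and the bias multiplied by c > 0."""
    return perceptron([c * wi for wi in w], x, c * b)


# Hypothetical weights, bias, and inputs, chosen only for illustration.
w, b = [2.0, -3.0], 0.5
inputs = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for c in (0.1, 1.0, 50.0):
    # Scaling by c > 0 never flips the sign of w.x + b, so outputs agree.
    assert all(perceptron(w, x, b) == scaled_perceptron(w, x, b, c) for x in inputs)
```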

Sigmoid neurons simulating perceptrons, part II

Suppose we have the same setup as the last problem - a network of perceptrons. Suppose also that the overall input to the network of perceptrons has been chosen. We won't need the actual input value, we just need the input to have been fixed. Suppose the weights and biases are such that w⋅x+b≠0 for the input x to any particular perceptron in the network. Now replace all the perceptrons in the network by sigmoid neurons, and multiply the weights and biases by a positive constant c>0. Show that in the limit as c→∞ the behaviour of this network of sigmoid neurons is exactly the same as the network of perceptrons. How can this fail when w⋅x+b=0 for one of the perceptrons?

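For the sigmoid function, multiplying (wx + b) by c does not change whether wx + b > 0 or wx + b < 0, so it does not change whether σ(wx + b) > 0.5 or σ(wx + b) < 0.5. As c→∞, σ(c(wx + b)) tends to 1 when wx + b > 0 and to 0 when wx + b < 0, which is exactly the perceptron's output. But when wx + b = 0, σ(c(wx + b)) = 0.5 for every c, so the output never settles on a class and the neuron cannot be used for binary classification.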
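The limit can also be seen numerically. The sketch below evaluates a single sigmoid neuron whose unscaled weighted input z = wx + b is given directly; the values 0.3, -0.3, and 0 are hypothetical and stand for the positive, negative, and degenerate cases.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def scaled_sigmoid_output(z, c):
    """Output of a sigmoid neuron after weights and bias are multiplied by c,
    where z = w.x + b is the unscaled weighted input."""
    return sigmoid(c * z)


# Hypothetical weighted inputs: one positive, one negative, one exactly zero.
for c in (1, 10, 1000):
    print(c,
          scaled_sigmoid_output(0.3, c),
          scaled_sigmoid_output(-0.3, c),
          scaled_sigmoid_output(0.0, c))
```

As c grows, the first two columns approach 1 and 0 respectively, while the last column stays at 0.5 no matter how large c becomes.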

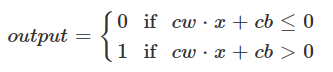

Four output neuron exercise

There is a way of determining the bitwise representation of a digit by adding an extra layer to the three-layer network above. The extra layer converts the output from the previous layer into a binary representation, as illustrated in the figure below. Find a set of weights and biases for the new output layer. Assume that the first 3 layers of neurons are such that the correct output in the third layer (i.e., the old output layer) has activation at least 0.99, and incorrect outputs have activation less than 0.01.

First, list the binary representations of the digits 0 to 9:

- 0: 0000
- 1: 0001
- 2: 0010
- 3: 0011
- 4: 0100
- 5: 0101
- 6: 0110
- 7: 0111
- 8: 1000
- 9: 1001

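From top to bottom, the output layer represents 2^0, 2^1, 2^2, 2^3. So the digits 1, 3, 5, 7, 9 should make the 2^0 output neuron output 1, the digits 2, 3, 6, 7 should make the 2^1 output neuron output 1, and so on.

For the first output neuron we can therefore set the weight vector w = (-1, 1, -1, 1, -1, 1, -1, 1, -1, 1) and b = 0. For this neuron, wx + b = -x0 + x1 - x2 + x3 - x4 + x5 - x6 + x7 - x8 + x9 > 0 if and only if the sum of x1, x3, x5, x7, x9 exceeds the sum of the remaining inputs (normally exactly one input is about 0.99 and the rest are about 0.01). For example, if x5 = 0.99 and the others are 0.01, then wx + b = 0.98 > 0, so σ(wx + b) > 0.5 and the 2^0 bit outputs 1. In the same way we obtain w for all four output neurons: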

- -1, 1,-1, 1,-1, 1,-1, 1,-1, 1
- -1,-1, 1, 1,-1,-1, 1, 1,-1,-1
- -1,-1,-1,-1, 1, 1, 1, 1,-1,-1
- -1,-1,-1,-1,-1,-1,-1,-1, 1, 1

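Note the condition given earlier: the correct output is at least 0.99 and every incorrect output is less than 0.01. This rules out cases such as digit 0 having the largest activation at 0.8 while the others are each 0.7: every other activation is smaller than 0.8, but their sum exceeds 0.8, so the output would be wrong.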
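The weights above can be checked mechanically. The following sketch rebuilds the four weight rows from the bit patterns (+1 where the bit is 1, -1 otherwise, bias 0) and confirms that thresholding each output at 0.5 recovers every digit. It only tests the boundary case of 0.99 for the correct digit and 0.01 for the rest; the helper names are made up for this example.

```python
import math

# Weight rows from the answer above: row k is the output neuron for bit 2^k,
# with +1 for digits whose k-th bit is 1 and -1 otherwise; every bias is 0.
WEIGHTS = [[1 if (digit >> k) & 1 else -1 for digit in range(10)] for k in range(4)]
BIASES = [0.0] * 4


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def binary_layer(activations):
    """Return the four output activations (bit 2^0 first) for ten inputs."""
    return [sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(WEIGHTS, BIASES)]


def decode(activations):
    """Threshold each output at 0.5 and rebuild the digit from its bits."""
    bits = [1 if out > 0.5 else 0 for out in binary_layer(activations)]
    return sum(bit << k for k, bit in enumerate(bits))


# Boundary case allowed by the exercise: 0.99 for the right digit, 0.01 elsewhere.
for digit in range(10):
    old_output = [0.99 if i == digit else 0.01 for i in range(10)]
    assert decode(old_output) == digit
```

With weights of magnitude 1 the outputs are only moderately far from 0.5 (the weighted input is around ±1). Multiplying all four weight rows by a larger positive constant would push the outputs closer to 0 and 1 without changing which side of 0.5 they fall on.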

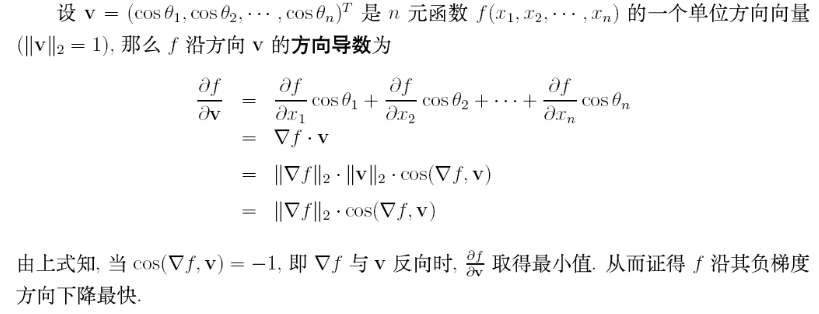

proof that gradient descent is the optimal strategy for minimizing a cost function

Prove the assertion of the last paragraph. Hint: If you're not already familiar with the Cauchy-Schwarz inequality, you may find it helpful to familiarize yourself with it.

Here is an answer I found online:

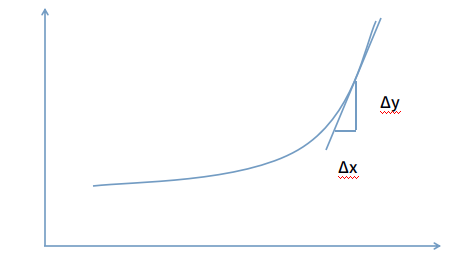

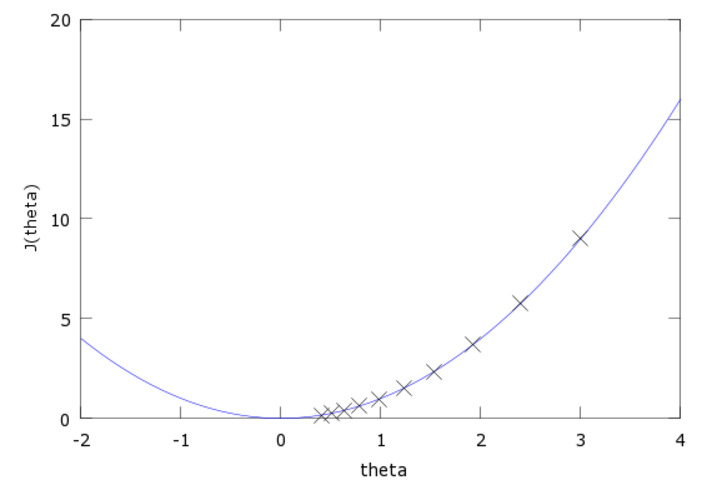

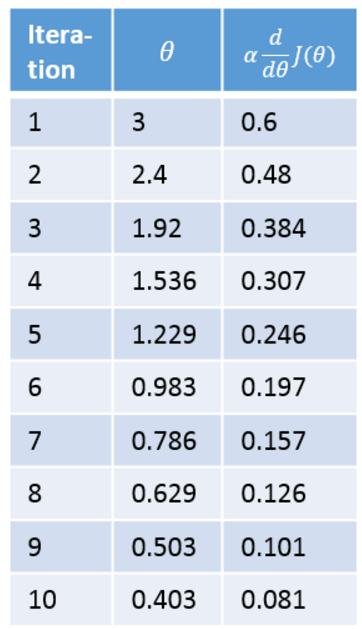
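For readers who cannot see the images, here is one standard way to write the argument in symbols. It may differ in detail from the answer pictured above. It assumes the setting of the exercise as I understand it: the step Δv has a fixed small length ‖Δv‖ = ε, the gradient ∇C is nonzero, and we want the Δv that makes ΔC ≈ ∇C⋅Δv as negative as possible.

```latex
\begin{gather*}
|\nabla C \cdot \Delta v| \;\le\; \|\nabla C\|\,\|\Delta v\| \;=\; \epsilon\,\|\nabla C\|
\quad\Longrightarrow\quad
\nabla C \cdot \Delta v \;\ge\; -\epsilon\,\|\nabla C\|, \\[4pt]
\Delta v = -\eta\,\nabla C,\quad \eta = \frac{\epsilon}{\|\nabla C\|}
\quad\Longrightarrow\quad
\nabla C \cdot \Delta v = -\frac{\epsilon}{\|\nabla C\|}\,\|\nabla C\|^{2} = -\epsilon\,\|\nabla C\|.
\end{gather*}
```

The first line is the Cauchy-Schwarz inequality and gives a lower bound on ∇C⋅Δv; the second line shows that the gradient descent step attains that bound, so no other step of the same length decreases C more (to first order).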

geometric interpretation of what gradient descent is doing in the one-dimensional case

I explained gradient descent when C is a function of two variables, and when it's a function of more than two variables. What happens when C is a function of just one variable? Can you provide a geometric interpretation of what gradient descent is doing in the one-dimensional case?

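As shown in the figure, Δy ≈ Δx · y′. Moving against the sign of y′, i.e. taking Δx = −η y′, gives Δy ≈ −η (y′)² ≤ 0, so the direction of −y′ is the direction in which y decreases fastest. For example, if y = x^2 then y′ = 2x. The iteration is pictured as follows: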
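The one-dimensional iteration for y = x^2 can also be traced in a few lines. This is a minimal sketch; the starting point 4.0 and the learning rate 0.25 are illustrative choices, not values from the book.

```python
def gradient_descent_1d(dy, x0, eta, steps):
    """Repeat x <- x - eta * dy(x) and return every iterate, x0 included."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - eta * dy(xs[-1]))
    return xs


# y = x^2, so y' = 2x. With eta = 0.25 each step halves x.
path = gradient_descent_1d(lambda x: 2 * x, x0=4.0, eta=0.25, steps=5)
print(path)  # [4.0, 2.0, 1.0, 0.5, 0.25, 0.125]
```

Geometrically, each step slides x along the axis in the downhill direction of the tangent line at the current point, with a step length proportional to the slope, so the steps shrink as the curve flattens near the minimum at x = 0.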

compared stochastic gradient descent with incremental gradient descent

An extreme version of gradient descent is to use a mini-batch size of just 1. That is, given a training input, x, we update our weights and biases according to the rules $w_k \rightarrow w_k' = w_k - \eta\, \partial C_x / \partial w_k$ and $b_l \rightarrow b_l' = b_l - \eta\, \partial C_x / \partial b_l$. Then we choose another training input, and update the weights and biases again. And so on, repeatedly. This procedure is known as online, on-line, or incremental learning. In online learning, a neural network learns from just one training input at a time (just as human beings do). Name one advantage and one disadvantage of online learning, compared to stochastic gradient descent with a mini-batch size of, say, 20.

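Andrew Ng covers this question in the second lecture of his machine learning course, although the naming confused me a little. In Ng's course, the online learning method is called stochastic gradient descent (also incremental gradient descent), and the method that updates the parameters only after a pass over the whole training set is called batch gradient descent. In this book, however, stochastic and incremental refer to two different methods.

Names aside, compared with the offline method, the online method does not have to traverse the whole training set before updating: it updates once per training sample, so it usually approaches the minimum faster. However, the online method may never reach the exact minimum and can keep wandering around it. The same trade-off holds against a mini-batch of size 20: online learning updates more often, but each update follows a noisier estimate of the gradient.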
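To make the trade-off concrete, here is a small sketch on a toy problem rather than on a neural network. It fits y = w·x with cost (w·x − y)²/2 and compares one epoch with batch size 1 against one epoch with batch size 20. The data, the learning rate, and the function names are all made up for illustration, and the samples are visited in a fixed order to keep the run deterministic.

```python
def make_data(n=40):
    """Toy data y = 3x plus a small alternating perturbation (hypothetical)."""
    xs = [(i + 1) / 20 for i in range(n)]
    ys = [3 * x + (0.1 if i % 2 == 0 else -0.1) for i, x in enumerate(xs)]
    return list(zip(xs, ys))


def sgd_epoch(w, data, eta, batch_size):
    """One pass over data for the model y = w*x with cost (w*x - y)^2 / 2.

    Returns the updated weight and the number of updates performed.
    """
    updates = 0
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        # Average the per-sample gradients (w*x - y) * x over the batch.
        grad = sum((w * x - y) * x for x, y in batch) / len(batch)
        w -= eta * grad
        updates += 1
    return w, updates


data = make_data()
w_online, n_online = sgd_epoch(0.0, data, eta=0.1, batch_size=1)
w_batch, n_batch = sgd_epoch(0.0, data, eta=0.1, batch_size=20)
print(n_online, n_batch)   # 40 updates versus 2 updates in one epoch
print(w_online, w_batch)
```

After one epoch the online weight is much closer to 3 because it has been updated 40 times instead of twice. The price is that each online update reacts to the perturbation in a single sample, so the weight keeps jittering around the best value instead of settling on it.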

activations vector in component form

Write out Equation $a' = \sigma(wa+b)$ in component form, and verify that it gives the same result as the rule $1/(1+\exp(-\sum_j w_j x_j - b))$ for computing the output of a sigmoid neuron.
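The post gives no answer for this exercise, so the following is only a sketch of how the component form can be written. It assumes the indexing convention in which $w_{jk}$ is the weight from neuron k in the previous layer to neuron j in the current layer, and $b_j$ is the bias of neuron j.

```latex
\begin{align*}
a'_j \;=\; \sigma\Big(\sum_k w_{jk}\, a_k + b_j\Big)
     \;=\; \frac{1}{1 + \exp\big(-\sum_k w_{jk}\, a_k - b_j\big)}.
\end{align*}
```

For a single neuron j, writing $w_k$ for $w_{jk}$, $x_k$ for the inputs $a_k$, and $b$ for $b_j$ turns the right-hand side into $1/(1+\exp(-\sum_k w_k x_k - b))$, which is the sigmoid neuron rule with the summation index renamed.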

creating a network with just two layers

Try creating a network with just two layers - an input and an output layer, no hidden layer - with 784 and 10 neurons, respectively. Train the network using stochastic gradient descent. What classification accuracy can you achieve?

In [8]: net = Network.Network([784, 10])

In [9]: net.SGD(training_data, 10, 10, 3, test_data=test_data)

Epoch 0: 1903 / 10000

Epoch 1: 1903 / 10000

Epoch 2: 1903 / 10000

Epoch 3: 1903 / 10000

Epoch 4: 1903 / 10000

Epoch 5: 1903 / 10000

Epoch 6: 1903 / 10000

Epoch 7: 1903 / 10000

Epoch 8: 1903 / 10000

Epoch 9: 1903 / 10000

The accuracy is really quite low: 1903 / 10000 (about 19%), and it does not change from one epoch to the next.

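This post discusses the effect of scaling the weights and biases in a network, including the relationship between perceptrons and sigmoid neurons, the proof that gradient descent is optimal, and the advantages and disadvantages of different training strategies.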
