1.0 TensorFlow graphs

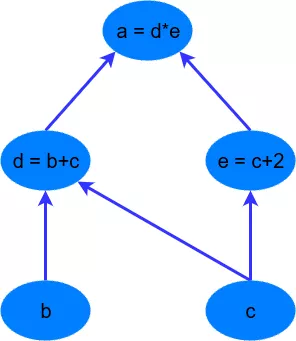

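TensorFlow is based on graph-based computation. For example, the expression

$$a = (b + c) \times (c + 2)$$

can be decomposed into

$$d = b + c$$

$$e = c + 2$$

$$a = d \times e$$

The point of this decomposition is that d = b + c and e = c + 2 do not depend on each other, so these two expressions can be computed in parallel.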
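Here is a minimal sketch of this idea in plain Python. It is not how TensorFlow schedules work internally. The two independent sub-expressions are submitted to a thread pool together, and a waits for both results. The function name evaluate is hypothetical. With CPython threads this only illustrates the dependency structure and does not deliver a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate(b: float, c: float) -> float:
    """Evaluate a = (b + c) * (c + 2) by splitting it into d and e."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        # d and e do not depend on each other, so both can be submitted at once
        d_future = pool.submit(lambda: b + c)  # d = b + c
        e_future = pool.submit(lambda: c + 2)  # e = c + 2
        d, e = d_future.result(), e_future.result()
    # a depends on both d and e, so it is computed only after both are ready
    return d * e


print(evaluate(2.0, 1.0))  # 9.0
```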

2.0 A Simple TensorFlow example

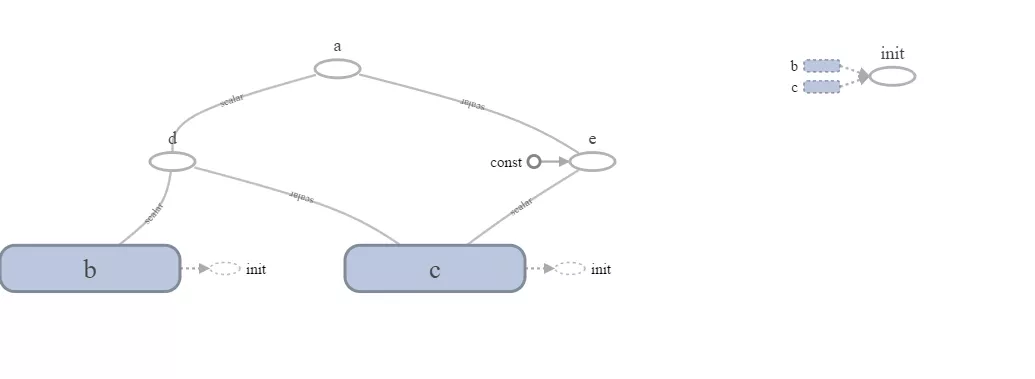

```python
# TensorFlow 1.x (graph mode) API
import tensorflow as tf

# first, create a TensorFlow constant
const = tf.constant(2.0, name="const")

# create TensorFlow variables
b = tf.Variable(2.0, name='b')
c = tf.Variable(1.0, name='c')

# now create some operations
d = tf.add(b, c, name='d')
e = tf.add(c, const, name='e')
a = tf.multiply(d, e, name='a')
```

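Constants and variables can optionally be given a string name. TensorFlow infers the type of a variable or constant from its initial value. The type can also be set explicitly with the dtype argument, e.g. tf.float32 or tf.int32, and many more types are available.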
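The inference rule can be sketched with a hypothetical helper. The sketch assumes TensorFlow's default mapping: Python floats become float32, Python ints become int32, and an explicit dtype overrides the inferred one. Dtypes are plain strings here rather than tf.DType objects.

```python
def infer_dtype(value) -> str:
    """Hypothetical helper mimicking TensorFlow's default dtype choice."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "bool"
    if isinstance(value, int):
        return "int32"
    if isinstance(value, float):
        return "float32"
    raise TypeError(f"unsupported initial value: {value!r}")


def resolve_dtype(value, dtype=None) -> str:
    # an explicitly passed dtype wins over the inferred one
    return dtype if dtype is not None else infer_dtype(value)


print(resolve_dtype(2.0))             # float32
print(resolve_dtype(2))               # int32
print(resolve_dtype(2.0, "float64"))  # float64
```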

Note:

It’s important to note that, as the Python code runs through these commands, the variables haven’t actually been declared as they would have been if you just had a standard Python declaration (i.e. b = 2.0). Instead, all the constants, variables, operations and the computational graph are only created when the initialisation commands are run.

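In other words, running these Python lines only adds nodes to the computational graph. No values are computed at this point. The variables receive their values only when the initialisation op is run in a session, and the operations are evaluated only when the graph is run.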
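A toy, plain-Python sketch shows this deferred style. The Node class and its run method are hypothetical and are not TensorFlow API. The sketch builds the same graph as the example above. Defining the nodes computes nothing, and values appear only when the graph is explicitly run. Unlike a real session, it does not cache shared inputs, so c is evaluated twice.

```python
class Node:
    """A hypothetical graph node: creating it records the computation only."""

    evaluations = 0  # how many times any node has actually been computed

    def __init__(self, fn, *inputs, name=""):
        self.fn, self.inputs, self.name = fn, inputs, name

    def run(self):
        # values are produced only here, when the graph is executed
        Node.evaluations += 1
        return self.fn(*(node.run() for node in self.inputs))


const = Node(lambda: 2.0, name="const")
b = Node(lambda: 2.0, name="b")
c = Node(lambda: 1.0, name="c")
d = Node(lambda x, y: x + y, b, c, name="d")
e = Node(lambda x, y: x + y, c, const, name="e")
a = Node(lambda x, y: x * y, d, e, name="a")

print(Node.evaluations)  # 0: the graph exists, but nothing has been computed
print(a.run())           # 9.0
```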

```python
# set up the variable initialisation
init_op = tf.global_variables_initializer()
```

To run the operations between the variables, we need to start a TensorFlow session, tf.Session. The TensorFlow session is an object where all operations are run. TensorFlow was initially created in a static graph paradigm; in other words, first all the operations and variables are defined (the graph structure) and then these are compiled within the tf.Session object.

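In short, executing operations on the variables requires starting a tf.Session, and every operation runs inside that session object. TensorFlow (1.x) first defines a static graph and then executes it in the session.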

```python
# start the session
with tf.Session() as sess:
    # initialise the variables
    sess.run(init_op)
    # compute the output of the graph
    a_out = sess.run(a)
    print("Variable a is {}".format(a_out))
```

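Here a is the output of an operation (tf.multiply) rather than a variable. Passing it to sess.run executes the graph and returns its value.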

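Note something cool: d and e were defined before a, and both must be computed before a can be. We never ran d and e explicitly. TensorFlow knows all of a's dependencies from the data flow graph and runs them automatically. TensorBoard can display the graph that TensorFlow builds.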
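The automatic dependency handling can be sketched with the standard library's graphlib.TopologicalSorter (Python 3.9+). The deps and ops tables and the run function are hypothetical stand-ins for a graph and a session. The run function collects only the nodes that the requested target depends on and evaluates them in dependency order. Asking for a therefore evaluates d and e automatically, while asking for e never touches b or d.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# hypothetical graph: each node maps to the nodes it depends on
deps = {
    "const": set(), "b": set(), "c": set(),
    "d": {"b", "c"},
    "e": {"c", "const"},
    "a": {"d", "e"},
}
# how each node computes its value from already-computed values
ops = {
    "const": lambda v: 2.0,
    "b": lambda v: 2.0,
    "c": lambda v: 1.0,
    "d": lambda v: v["b"] + v["c"],
    "e": lambda v: v["c"] + v["const"],
    "a": lambda v: v["d"] * v["e"],
}


def run(target: str) -> float:
    """Evaluate only the nodes `target` depends on, in dependency order."""
    # walk the dependency edges to find everything the target needs
    needed, stack = set(), [target]
    while stack:
        node = stack.pop()
        if node not in needed:
            needed.add(node)
            stack.extend(deps[node])
    sub_graph = {node: deps[node] for node in needed}
    values = {}
    # static_order yields every node after all of its dependencies
    for node in TopologicalSorter(sub_graph).static_order():
        values[node] = ops[node](values)
    return values[target]


print(run("a"))  # 9.0, with d and e computed automatically
print(run("e"))  # 3.0, b and d are never evaluated
```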

2.1 The TensorFlow placeholder

Now suppose we didn't know the value of the array b during the declaration phase of the TensorFlow problem, i.e. before the with tf.Session() as sess stage. In this case, TensorFlow requires us to declare the basic structure of the data with tf.placeholder. Let's use it for b:

```python
# create a TensorFlow placeholder for b (replaces the tf.Variable)
b = tf.placeholder(tf.float32, [None, 1], name='b')
```

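The second argument is the shape of the data. A dimension can be given as None, which means its size is not fixed in advance. Here [None, 1] accepts any number of rows with a single column.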
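The hypothetical shape check below, written in plain Python, shows what None means in a shape. It matches any size in that dimension, while the rank and the fixed dimensions must still agree. TensorFlow runs its own check when values are fed and rejects incompatible shapes. The functions shape_of and is_compatible are illustrations, not TensorFlow functions.

```python
from typing import Optional, Sequence


def shape_of(data) -> tuple:
    """Shape of a nested list, e.g. [[0], [1], [2]] -> (3, 1)."""
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        data = data[0] if data else None
    return tuple(shape)


def is_compatible(shape: tuple, spec: Sequence[Optional[int]]) -> bool:
    """True if `shape` matches `spec`, where None accepts any size."""
    return len(shape) == len(spec) and all(
        s is None or s == dim for s, dim in zip(spec, shape)
    )


spec = [None, 1]  # like the shape passed to tf.placeholder above
print(is_compatible(shape_of([[0], [1], [2]]), spec))           # True: 3 rows
print(is_compatible(shape_of([[i] for i in range(10)]), spec))  # True: 10 rows
print(is_compatible(shape_of([0, 1, 2]), spec))                 # False: rank 1
```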

```python
import numpy as np
a_out = sess.run(a, feed_dict={b: np.arange(0, 10)[:, np.newaxis]})  # b is fed a 10x1 array
```

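When calling sess.run, the actual data for b must be fed in through the feed_dict argument. Here b receives the column vector 0, 1, ..., 9 with shape [10, 1].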
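The plain-Python sketch below shows what feeding means. The function run_with_feed is hypothetical, and const and c keep their values from the example above. The placeholder b has no value of its own, so the computation fails unless a value is supplied at run time. Once a value is supplied, the same computation runs on any number of fed rows.

```python
def run_with_feed(feed_dict: dict) -> list:
    """Hypothetical evaluation of a = (b + c) * (c + const) with b fed at run time."""
    if "b" not in feed_dict:
        # like an unfed placeholder, b has no value to compute with
        raise ValueError("placeholder 'b' must be fed a value")
    const, c = 2.0, 1.0
    results = []
    for (b_value,) in feed_dict["b"]:  # one row of the [None, 1] input at a time
        d = b_value + c
        e = c + const
        results.append([d * e])
    return results


# same values as np.arange(0, 10)[:, np.newaxis], as floats
b_data = [[float(i)] for i in range(10)]
print(run_with_feed({"b": b_data}))  # [[3.0], [6.0], [9.0], ..., [30.0]]
```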

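This article introduced TensorFlow's graph-based computation, in which a complex expression is decomposed so that independent parts can be computed in parallel. A simple example showed that constants, variables and operations only define the computational graph. Their values are produced when the graph is initialised and run, and running operations requires starting a tf.Session. Finally, the article explained how tf.placeholder declares the basic structure of data whose concrete values are not known in advance.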

