Pairwise Markov Network

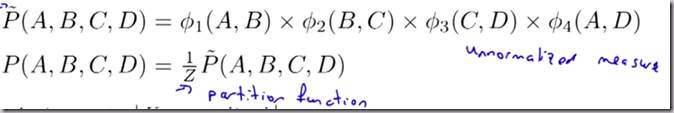

The constant that turns the unnormalized measure into a normalized probability distribution is called the partition function (or simply the normalizing constant).
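For reference, the usual way to write this down is sketched below. The symbols $\tilde{P}$, $Z$ and the edge set $E$ are notation assumed here, since the notes give the definition only as an image.

```latex
\tilde{P}(X_1,\dots,X_n) = \prod_{(i,j)\in E} \phi_{ij}(X_i, X_j), \qquad
Z = \sum_{X_1,\dots,X_n} \tilde{P}(X_1,\dots,X_n), \qquad
P(X_1,\dots,X_n) = \frac{1}{Z}\,\tilde{P}(X_1,\dots,X_n)
```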

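Consider the pairwise factor ϕ1(A,B). Because the joint distribution is the normalized product of all the factors, every probability involving A and B also depends on the other factors. This potential on its own is therefore proportional to none of the following: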

- The marginal probability P(A,B)

- The conditional probability P(A|B)

- The conditional probability P(A,B|C,D)
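A small numerical check makes this concrete. The sketch below assumes a four-variable loop A, B, C, D with one pairwise factor per edge; the factor values are illustrative assumptions, not taken from the figure. It computes the partition function and the marginal P(A,B), then compares that marginal with ϕ1(A,B).

```python
from itertools import product

# Illustrative pairwise factors over binary A, B, C, D (values are assumptions).
phi1 = {(0, 0): 30, (0, 1): 5, (1, 0): 1, (1, 1): 10}      # phi1(A, B)
phi2 = {(0, 0): 100, (0, 1): 1, (1, 0): 1, (1, 1): 100}    # phi2(B, C)
phi3 = {(0, 0): 1, (0, 1): 100, (1, 0): 100, (1, 1): 1}    # phi3(C, D)
phi4 = {(0, 0): 100, (0, 1): 1, (1, 0): 1, (1, 1): 100}    # phi4(D, A)

def unnormalized(a, b, c, d):
    """Unnormalized measure: the product of all four factors."""
    return phi1[a, b] * phi2[b, c] * phi3[c, d] * phi4[d, a]

# Partition function: sum of the unnormalized measure over every assignment.
Z = sum(unnormalized(*x) for x in product((0, 1), repeat=4))

# Marginal P(A, B): sum out C and D, then normalize by Z.
marginal_ab = {
    (a, b): sum(unnormalized(a, b, c, d) for c, d in product((0, 1), repeat=2)) / Z
    for a, b in product((0, 1), repeat=2)
}

# If phi1 were proportional to P(A, B), these four ratios would all be equal.
ratios = {ab: marginal_ab[ab] / phi1[ab] for ab in phi1}
print(Z, marginal_ab, ratios)
```

With these values ϕ1 puts its largest weight on A=0, B=0, while the marginal puts most of its mass on A=0, B=1, because the other three factors pull the distribution in a different direction.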

General Gibbs Distribution

A fully connected pairwise MN is not fully expressive, where fully expressive means able to represent any probability distribution over the random variables. In other words, not every distribution can be represented as a pairwise Markov network.
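One way to see this is to count parameters. The sketch below assumes binary variables by default; the function names are hypothetical. It compares the number of factor entries in a fully connected pairwise network with the number of free parameters in an arbitrary joint distribution.

```python
from math import comb

def pairwise_mn_parameters(n, values=2):
    """Factor entries in a fully connected pairwise MN: one table per edge."""
    return comb(n, 2) * values ** 2

def full_joint_parameters(n, values=2):
    """Free parameters of an arbitrary joint distribution over n variables."""
    return values ** n - 1

for n in (5, 10, 20):
    print(n, pairwise_mn_parameters(n), full_joint_parameters(n))
```

The pairwise count grows quadratically in n while the joint count grows exponentially, so for larger n the pairwise network cannot cover every distribution.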

An edge in the network between two nodes means that those two nodes can influence each other directly. If X and Y occur in the same factor, then that means they can influence each other directly.

To parameterize a general Gibbs distribution, we use general factors, each of which has a scope that may contain more than two variables.

We cannot read the factorization off the graph. Different factorizations, which can differ considerably in expressive power, may all induce exactly the same graph.
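A minimal sketch of this point, assuming the usual rule that the induced graph has an edge between two variables whenever some factor contains both in its scope. The helper name `induced_edges` and the variable names are hypothetical.

```python
from itertools import combinations

def induced_edges(scopes):
    """Edges of the Markov network induced by a collection of factor scopes."""
    edges = set()
    for scope in scopes:
        # Every pair of variables sharing a factor gets an edge.
        for u, v in combinations(sorted(scope), 2):
            edges.add((u, v))
    return edges

# One factor over three variables versus three pairwise factors.
single_factor = [{"A", "B", "C"}]
pairwise_factors = [{"A", "B"}, {"B", "C"}, {"A", "C"}]

print(induced_edges(single_factor) == induced_edges(pairwise_factors))
```

Both factorizations produce the same triangle over A, B and C, so the graph alone does not tell us which one was used.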

Q: What is the difference between an active trail in a Markov network and an active trail in a Bayesian network?

A: They differ in the case where Z is a descendant of a v-structure.

Consider a v-structure with Z as its descendant. In a Bayesian network, observing Z activates the trail through the v-structure and lets influence flow. In a Markov network, an observed Z blocks the trail: influence stops at Z and the trail is inactive.
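The contrast can be sketched in code. This is a simplified illustration, not a full d-separation routine: it assumes the simplest case, in which the observed Z sits in the middle of the trail X, Z, Y, and all function and variable names are hypothetical.

```python
def descendants(children, node):
    """All nodes reachable from `node` by following directed edges."""
    seen, stack = set(), [node]
    while stack:
        for child in children.get(stack.pop(), ()):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen

def active_in_bayes_net(trail, children, observed):
    """A trail is active if every collider has an observed node among itself
    and its descendants, and no other intermediate node is observed."""
    for prev, mid, nxt in zip(trail, trail[1:], trail[2:]):
        is_collider = mid in children.get(prev, ()) and mid in children.get(nxt, ())
        if is_collider:
            if not (({mid} | descendants(children, mid)) & observed):
                return False
        elif mid in observed:
            return False
    return True

def active_in_markov_net(trail, observed):
    """A trail is active only if none of its nodes is observed."""
    return not any(node in observed for node in trail)

trail = ["X", "Z", "Y"]
children = {"X": {"Z"}, "Y": {"Z"}}   # Bayesian network: X -> Z <- Y

print(active_in_bayes_net(trail, children, {"Z"}))   # observing Z opens the trail
print(active_in_markov_net(trail, {"Z"}))            # observing Z blocks the trail
```

With nothing observed the two answers swap: the v-structure blocks the trail in the Bayesian network, while the undirected trail is active in the Markov network.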

Conditional Random Field

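Conditional random fields (CRFs) are widely used for task-specific prediction. Suppose we have a set of input (observed) variables X and a set of target variables Y that we want to predict. This differs from a Markov random field, where the input X and the target Y are variables of the same type. Here are two examples: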

| | Image segmentation | Text processing |
| --- | --- | --- |
| Input X | pixel values (which we can process to produce more expressive features) | words in a sentence |
| Target Y | label of a particular superpixel (grass, sky, cow) | label of each word (person, location, organization) |

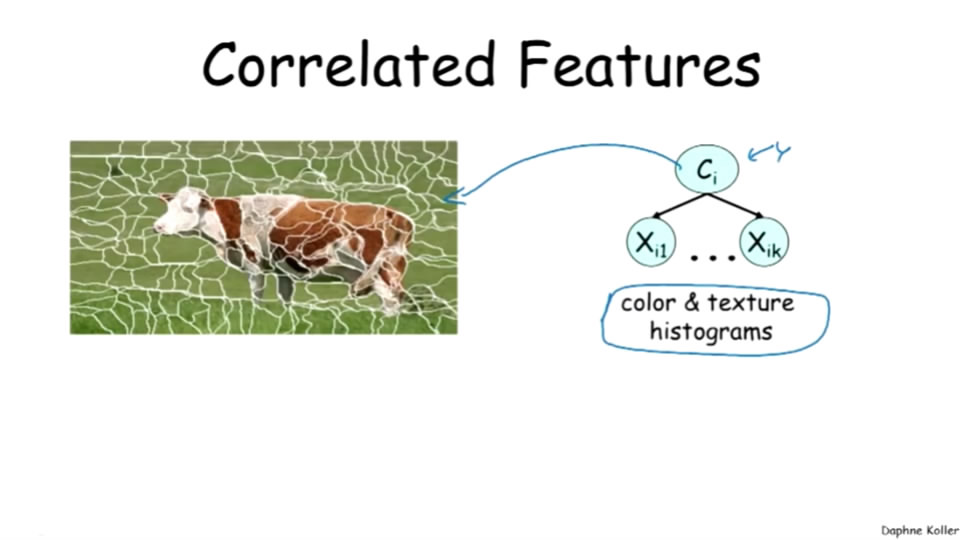

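The processed features (non-trivial features, that is, the input variables above) are highly correlated with one another, so they carry a lot of redundant information. If we use a Bayes model that assumes the features are independent, this unreasonable assumption yields a skewed probability distribution.

What would the correct independence assumptions be? Adding edges to capture these correlations is not only hard, it also leads to densely connected models.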

“So a completely different solution to this problem, basically says, well, I don't care about the image features. I don't want to predict the probability distributions over pixels, you know, I am not trying to do image synthesis.”

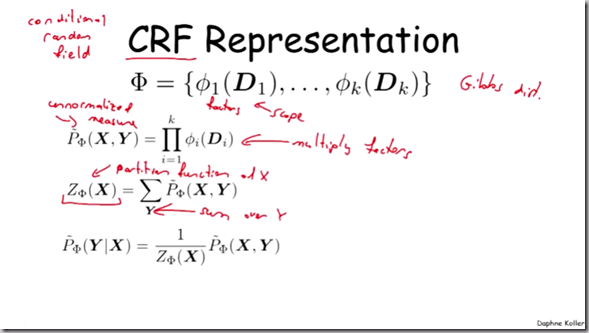

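In fact, we are not interested in the distribution of X, let alone in the correlations among its components. What we care about is the distribution of Y given X. So instead of modeling the joint probability P(X,Y), we model the conditional probability P(Y|X) directly. This leads to the CRF. Let's look at the definition first.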

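The definition of a CRF looks very much like that of a Gibbs distribution. There is again a set of factors, each with its own scope, and as in a Gibbs distribution, multiplying these factors gives an unnormalized measure. The difference is that we are modeling a conditional probability, so the normalizing constant (partition function) is a function of X: an X-specific partition function, obtained by summing over Y for each given X.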
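A minimal sketch of the X-specific partition function, assuming one binary input X and two binary targets Y1 and Y2. The factor tables and function names are hypothetical, chosen only for illustration.

```python
from itertools import product

# Hypothetical factors: X is a binary input, Y1 and Y2 are binary targets.
phi_xy = {(0, 0): 5.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 4.0}   # phi(X, Y1)
phi_yy = {(0, 0): 3.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 3.0}   # phi(Y1, Y2)

def unnormalized(x, y1, y2):
    """Unnormalized measure: the product of the factors."""
    return phi_xy[x, y1] * phi_yy[y1, y2]

def partition(x):
    """Z(x): sum over target assignments only, with the input x held fixed."""
    return sum(unnormalized(x, y1, y2) for y1, y2 in product((0, 1), repeat=2))

def conditional(x):
    """P(Y1, Y2 | X = x), normalized by the X-specific partition function."""
    z = partition(x)
    return {(y1, y2): unnormalized(x, y1, y2) / z
            for y1, y2 in product((0, 1), repeat=2)}

for x in (0, 1):
    print(x, partition(x), conditional(x))
```

Each value of X gets its own normalizer, and no distribution over X is ever defined. That is what separates this from normalizing once over X and Y jointly, as a Gibbs distribution would.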

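This article discusses the pairwise Markov network, explains the role of the normalizing constant, and contrasts active trails in Markov networks and Bayesian networks. It also covers the use of conditional random fields (CRFs) for task-specific prediction, including image segmentation and text processing.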
