Excerpted from: https://github.com/cockroachdb/cockroach/blob/master/docs/design.md

CockroachDB is a distributed SQL database. The primary design goals are scalability, strong consistency, and survivability (hence the name). CockroachDB aims to tolerate disk, machine, rack, and even datacenter failures with minimal latency disruption and no manual intervention. CockroachDB nodes are symmetric; a design goal is homogeneous deployment (one binary) with minimal configuration and no required external dependencies.

The entry point for database clients is the SQL interface. Every node in a CockroachDB cluster can act as a client SQL gateway. A SQL gateway transforms client SQL statements into key-value (KV) operations and executes them, distributing the operations across the cluster as necessary and returning results to the client. CockroachDB implements a single, monolithic sorted map from key to value, where both keys and values are byte strings.
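To make the "sorted map from key to value" abstraction concrete, here is a minimal in-memory sketch. The `SortedKV` class and the `user/` and `order/` key prefixes are hypothetical names chosen for illustration; this is not how CockroachDB stores data, only a picture of the ordered byte-string interface that the SQL layer builds on.

```python
import bisect


class SortedKV:
    """A toy in-memory sorted map from byte-string keys to byte-string values."""

    def __init__(self):
        self._keys = []    # keys kept in sorted (bytewise) order
        self._values = {}  # key -> value

    def put(self, key: bytes, value: bytes) -> None:
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = value

    def get(self, key: bytes):
        return self._values.get(key)

    def scan(self, start: bytes, end: bytes):
        """Return (key, value) pairs with start <= key < end, in key order."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, end)
        return [(k, self._values[k]) for k in self._keys[lo:hi]]


kv = SortedKV()
kv.put(b"user/2", b"bob")
kv.put(b"user/1", b"alice")
kv.put(b"order/7", b"widget")

# b"user0" sorts immediately after every key that starts with b"user/".
print(kv.scan(b"user/", b"user0"))
```

Because keys are ordered, rows that share a prefix sit next to each other, so a scan over a table or index becomes a scan over one contiguous span of keys.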

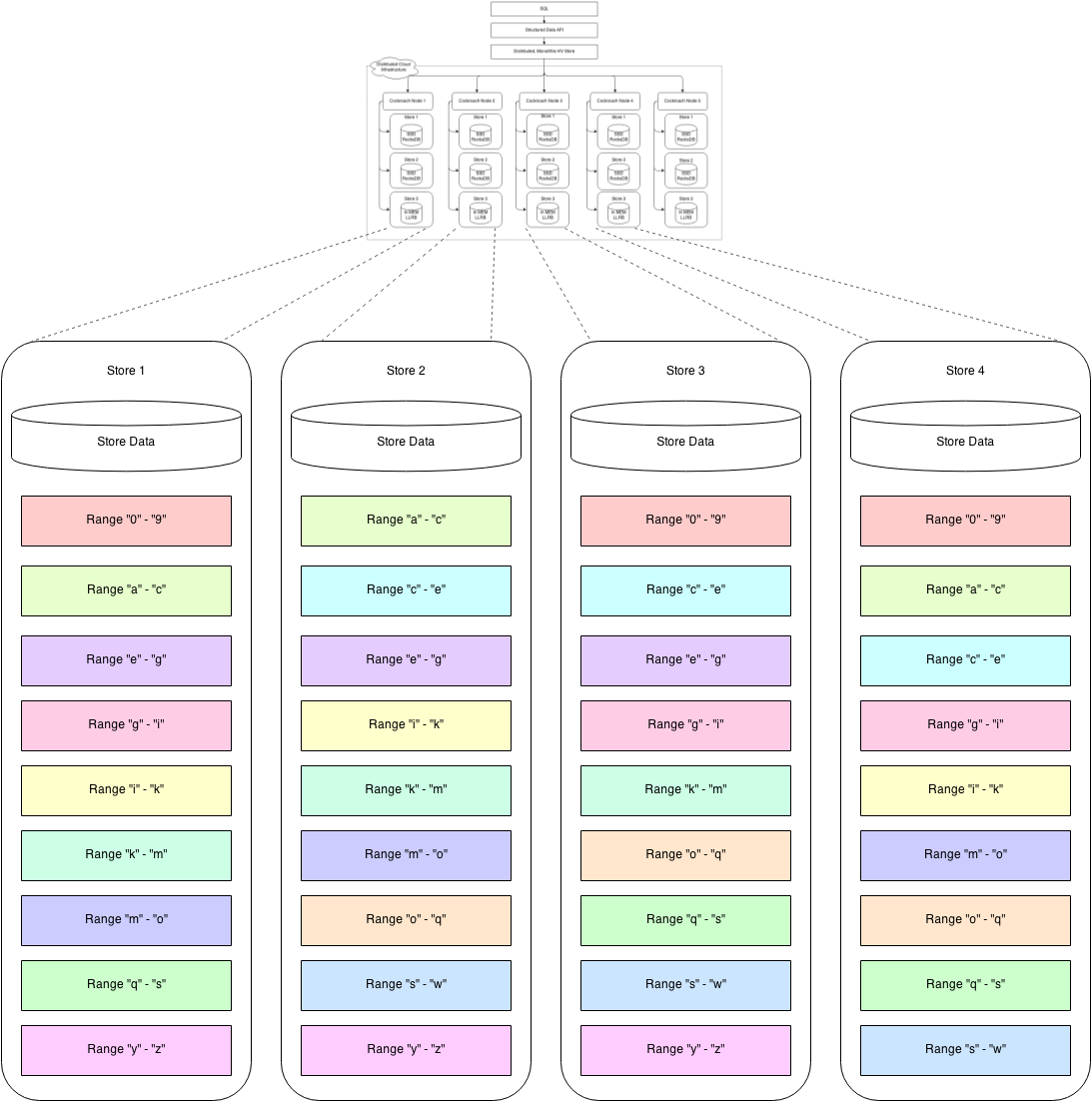

The KV map is logically composed of smaller segments of the keyspace called ranges. Each range is backed by data stored in a local KV storage engine (we use RocksDB, a variant of LevelDB). Range data is replicated to a configurable number of additional CockroachDB nodes. Ranges are merged and split to maintain a target size, by default 64M. The relatively small size facilitates quick repair and rebalancing to address node failures, new capacity and even read/write load. However, the size must be balanced against the pressure on the system from having more ranges to manage.
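The split rule can be sketched as follows. This is only an illustration under stated assumptions: `split_if_oversized` is a hypothetical helper, "64M" is read as 64 MiB, and the split point is taken near the midpoint by size, which the text above does not specify.

```python
# Assumption: the "64M" default is interpreted as 64 MiB.
TARGET_RANGE_BYTES = 64 * 1024 * 1024


def split_if_oversized(entries, target=TARGET_RANGE_BYTES):
    """Split one range into two when it exceeds the target size.

    entries: sorted list of (key, size_in_bytes) pairs for a single range.
    Returns [entries] if the range fits, otherwise two lists split near
    the midpoint by accumulated size.
    """
    total = sum(size for _, size in entries)
    if total <= target or len(entries) < 2:
        return [entries]
    running = 0
    for i, (_, size) in enumerate(entries):
        running += size
        if running >= total / 2:
            # Keep at least one entry on each side of the split.
            split_at = min(max(i + 1, 1), len(entries) - 1)
            return [entries[:split_at], entries[split_at:]]
    return [entries]


# A small target keeps the example readable.
demo = [(b"a", 30), (b"b", 30), (b"c", 30), (b"d", 30)]
print(split_if_oversized(demo, target=100))
```

A smaller target means each range is cheaper to copy during repair, at the cost of more ranges to track, which is the trade-off the paragraph above describes.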

CockroachDB achieves horizontal scalability:

- adding more nodes increases the capacity of the cluster by the amount of storage on each node (divided by a configurable replication factor), theoretically up to 4 exabytes (4E) of logical data;

- client queries can be sent to any node in the cluster, and queries can operate independently (without conflicts), meaning that overall throughput scales linearly with the number of nodes in the cluster;

- queries are distributed (ref: distributed SQL) so that the overall throughput of single queries can be increased by adding more nodes.
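The capacity claim in the first bullet is simple arithmetic. The sketch below assumes equally sized nodes and ignores overhead; the function name and the example figures are illustrative only.

```python
def logical_capacity_bytes(num_nodes: int, bytes_per_node: int, replication_factor: int) -> int:
    """Logical data capacity when every byte is stored replication_factor times."""
    return num_nodes * bytes_per_node // replication_factor


TB = 10**12
# Nine nodes with 2 TB each and 3-way replication hold 6 TB of logical data.
print(logical_capacity_bytes(9, 2 * TB, 3) // TB)
# Adding a tenth node adds 2 TB / 3 of logical capacity.
print(logical_capacity_bytes(10, 2 * TB, 3) - logical_capacity_bytes(9, 2 * TB, 3))
```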

CockroachDB achieves strong consistency:

- uses a distributed consensus protocol for synchronous replication of data in each key value range. We’ve chosen to use the Raft consensus algorithm; all consensus state is stored in RocksDB.

- single or batched mutations to a single range are mediated via the range's Raft instance. Raft guarantees ACID semantics.

- logical mutations which affect multiple ranges employ distributed transactions for ACID semantics. CockroachDB uses an efficient non-locking distributed commit protocol.

CockroachDB achieves survivability:

- range replicas can be co-located within a single datacenter for low latency replication and survive disk or machine failures. They can be distributed across racks to survive some network switch failures.

- range replicas can be located in datacenters spanning increasingly disparate geographies to survive ever-greater failure scenarios, from datacenter power or networking loss to regional power failures (e.g. { US-East-1a, US-East-1b, US-East-1c }, { US-East, US-West, Japan }, { Ireland, US-East, US-West }, { Ireland, US-East, US-West, Japan, Australia }).

CockroachDB provides snapshot isolation (SI) and serializable snapshot isolation (SSI) semantics, allowing externally consistent, lock-free reads and writes, both from a historical snapshot timestamp and from the current wall clock time. SI provides lock-free reads and writes but still allows write skew. SSI eliminates write skew, but introduces a performance hit in the case of a contentious system. SSI is the default isolation level; clients must consciously decide to trade correctness for performance. CockroachDB implements a limited form of linearizability, providing ordering for any observer or chain of observers.
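Write skew is easier to see in a toy model. In the sketch below, two transactions each read the other's row from a private snapshot and then update their own row. Under the SI-style check, only write-write conflicts abort a transaction, so both commit and the invariant "at least one doctor is on call" breaks. The serializable mode here uses plain read-set validation as a stand-in; it is an assumption made for illustration and not CockroachDB's actual SSI mechanism. All class and function names are hypothetical.

```python
class Txn:
    def __init__(self, snapshot, versions):
        self.snapshot = dict(snapshot)        # private copy taken at start
        self.start_versions = dict(versions)  # versions seen at start
        self.reads = set()
        self.writes = {}

    def read(self, key):
        self.reads.add(key)
        return self.writes.get(key, self.snapshot[key])

    def write(self, key, value):
        self.writes[key] = value


class Store:
    def __init__(self, data):
        self.data = dict(data)
        self.versions = {k: 0 for k in data}  # bumped on every committed write

    def begin(self):
        return Txn(self.data, self.versions)

    def commit(self, txn, serializable):
        # SI-style: validate written keys only. Serializable stand-in:
        # also validate every key the transaction read.
        checked = set(txn.writes) | (txn.reads if serializable else set())
        if any(self.versions[k] != txn.start_versions[k] for k in checked):
            return False  # abort; the caller would retry
        for key, value in txn.writes.items():
            self.data[key] = value
            self.versions[key] += 1
        return True


def go_off_call(txn, me, other):
    # Intended invariant: at least one doctor stays on call.
    if txn.read(other) == "on":
        txn.write(me, "off")


def run(serializable):
    store = Store({"alice": "on", "bob": "on"})
    t1, t2 = store.begin(), store.begin()
    go_off_call(t1, "alice", "bob")
    go_off_call(t2, "bob", "alice")
    results = (store.commit(t1, serializable), store.commit(t2, serializable))
    return results, store.data


print(run(serializable=False))  # both commit: nobody is on call (write skew)
print(run(serializable=True))   # the second transaction aborts
```

The aborted transaction in the serializable run is the "performance hit in the case of a contentious system": correctness is preserved by forcing one of the conflicting transactions to retry.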

Similar to Spanner directories, CockroachDB allows configuration of arbitrary zones of data. This allows replication factor, storage device type, and/or datacenter location to be chosen to optimize performance and/or availability. Unlike Spanner, zones are monolithic and don’t allow movement of fine grained data on the level of entity groups.

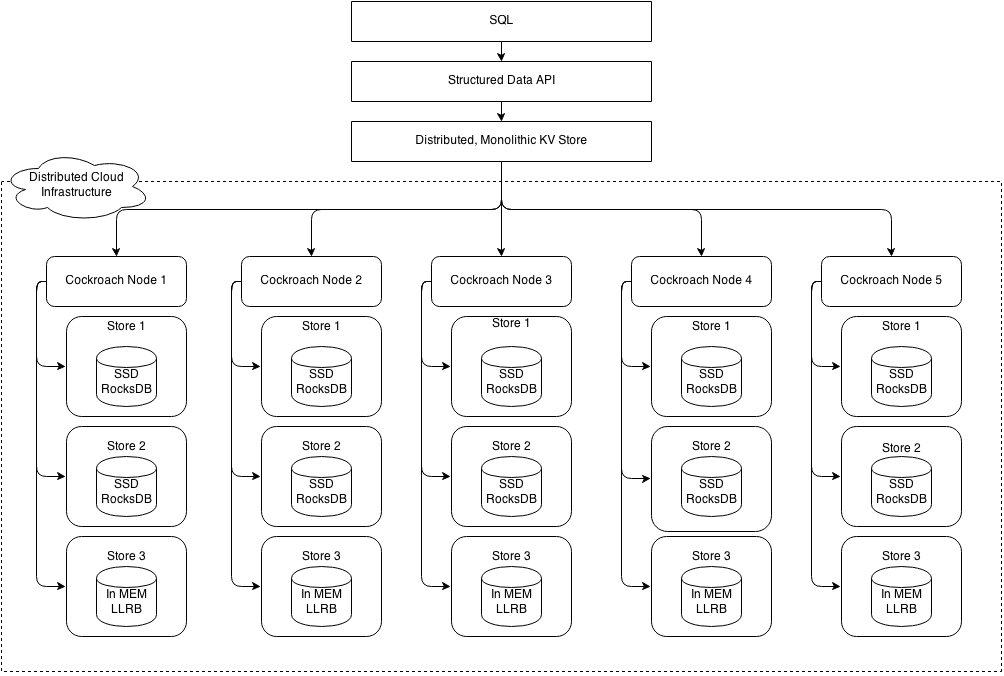

Architecture

CockroachDB implements a layered architecture. The highest level of abstraction is the SQL layer (currently unspecified in this document), which provides familiar relational concepts such as schemas, tables, columns, and indexes. The SQL layer in turn depends on the distributed key-value store, which handles the details of range addressing to provide the abstraction of a single, monolithic key-value store. The distributed KV store communicates with any number of physical cockroach nodes. Each node contains one or more stores, one per physical device.
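Range addressing can be pictured as a lookup from a key to the range whose span contains it. The sketch below assumes a small, fully in-memory table of range descriptors; the `RANGES` table, the node names, and `lookup_range` are hypothetical and say nothing about how CockroachDB actually stores or caches this metadata.

```python
import bisect

# Hypothetical range descriptors: (start_key, replica_nodes). Each range covers
# [start_key, next start_key); the last range runs to the end of the keyspace.
RANGES = [
    (b"", ["n1", "n2", "n3"]),
    (b"g", ["n2", "n3", "n4"]),
    (b"p", ["n1", "n4", "n5"]),
]
START_KEYS = [start for start, _ in RANGES]


def lookup_range(key: bytes):
    """Return the (start_key, replicas) descriptor of the range containing key."""
    idx = bisect.bisect_right(START_KEYS, key) - 1
    return RANGES[idx]


print(lookup_range(b"apple"))  # falls in the first range
print(lookup_range(b"g"))      # a start key belongs to the range it starts
print(lookup_range(b"zebra"))  # falls in the last range
```

The caller sees one key space; the lookup decides which nodes hold the data for a given key.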

Each store contains potentially many ranges, the lowest-level unit of key-value data. Ranges are replicated using the Raft consensus protocol. The diagram below is a blown-up version of stores from four of the five nodes in the previous diagram. Each range is replicated three ways using Raft. The color coding shows associated range replicas.

Each physical node exports two RPC-based key value APIs: one for external clients and one for internal clients (exposing sensitive operational features). Both services accept batches of requests and return batches of responses. Nodes are symmetric in capabilities and exported interfaces; each has the same binary and may assume any role.

Nodes and the ranges they provide access to can be arranged with various physical network topologies to make trade-offs between reliability and performance. For example, a triplicated (3-way replica) range could have each replica located on different:

- disks within a server to tolerate disk failures.

- servers within a rack to tolerate server failures.

- servers on different racks within a datacenter to tolerate rack power/network failures.

- servers in different datacenters to tolerate large scale network or power outages.

Up to F failures can be tolerated, where the total number of replicas N = 2F + 1 (e.g. with 3x replication, one failure can be tolerated; with 5x replication, two failures, and so on).
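The relationship N = 2F + 1 can be checked with a few lines of arithmetic. The quorum helper is an addition for illustration: it relies on the general fact that Raft needs a majority of replicas to make progress, which is why a majority must remain after F failures.

```python
def tolerated_failures(num_replicas: int) -> int:
    """F such that num_replicas = 2F + 1 (rounded down for even counts)."""
    return (num_replicas - 1) // 2


def quorum_size(num_replicas: int) -> int:
    """Smallest majority of the replicas."""
    return num_replicas // 2 + 1


for n in (3, 5, 7):
    print(n, tolerated_failures(n), quorum_size(n))
```

Note that an even replica count buys nothing extra: four replicas tolerate one failure, the same as three, because a majority of four is three.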

CockroachDB Design Principles


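CockroachDB is a distributed SQL database designed for scalability, strong consistency, and high survivability. It tolerates failures ranging from a single node to an entire datacenter, with minimal latency disruption and no manual intervention. Every node can act as a client SQL gateway, translating SQL statements into key-value operations and distributing them across the cluster. CockroachDB uses the Raft consensus algorithm to ensure ACID semantics when data is synchronously replicated.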

